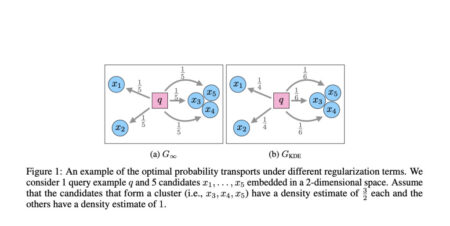

Estimating the density of a distribution from samples is a fundamental problem in statistics. In many practical settings, the Wasserstein…

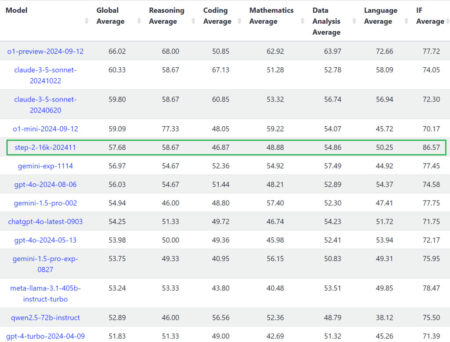

In the evolving landscape of artificial intelligence, building language models capable of replicating human understanding and reasoning remains a significant…

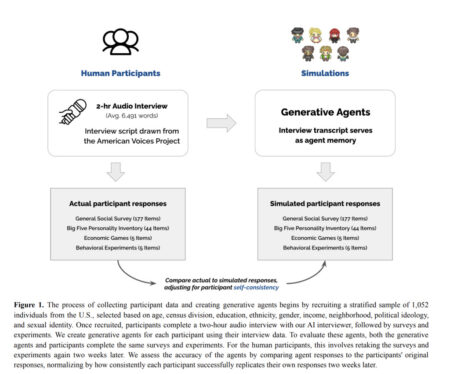

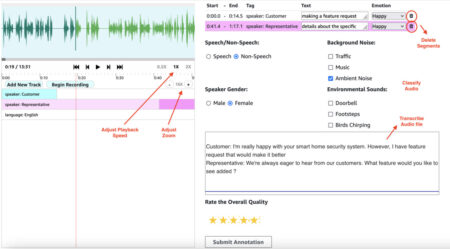

Generative agents are computational models replicating human behavior and attitudes across diverse contexts. These models aim to simulate individual responses…

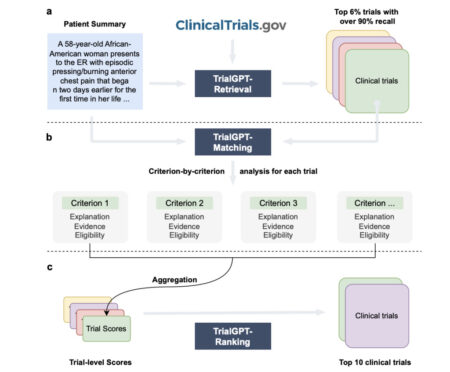

Matching patients to suitable clinical trials is a pivotal but highly challenging process in modern medical research. It involves analyzing…

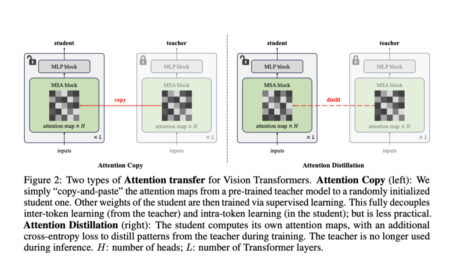

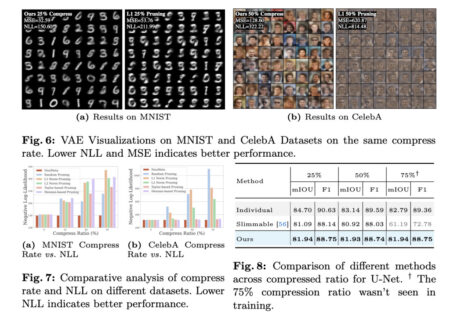

Vision Transformers (ViTs) have revolutionized computer vision by offering an innovative architecture that uses self-attention mechanisms to process image data.…

In the evolving field of machine learning, fine-tuning foundation models such as BERT or LLaMA for specific downstream tasks has…

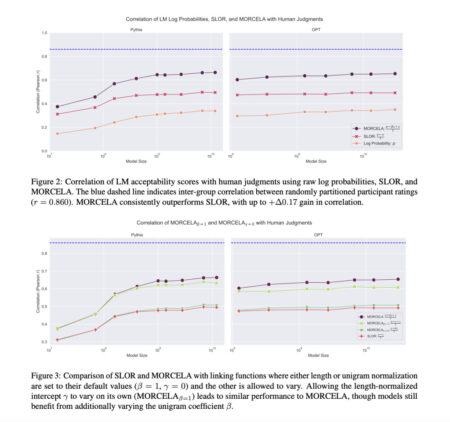

In natural language processing (NLP), a central question is how well the probabilities generated by language models (LMs) align with…

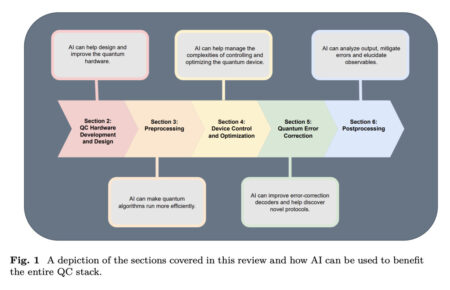

Quantum computing (QC) stands at the forefront of technological innovation, promising transformative potential across scientific and industrial domains. Researchers recognize…

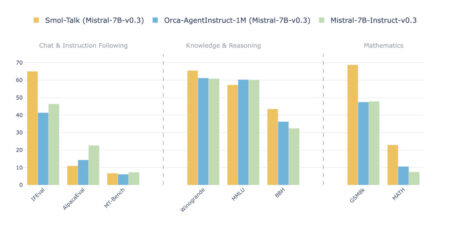

Recent advancements in natural language processing (NLP) have introduced new models and training datasets aimed at addressing the increasing demands…

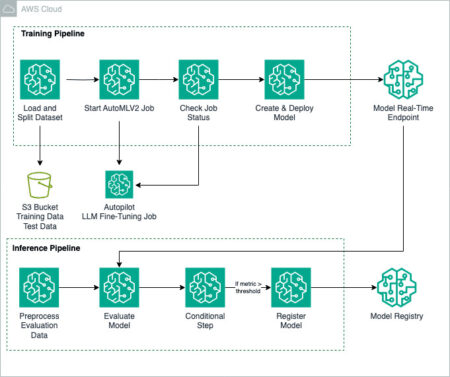

Fine-tuning foundation models (FMs) is a process that involves exposing a pre-trained FM to task-specific data and adjusting its parameters.…

Today, we’re excited to share the journey of VW, an innovator in the automotive industry and Europe’s largest car maker, to…

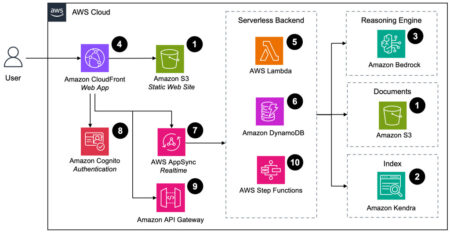

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies…

As generative AI models advance in creating multimedia content, the difference between good and great output often lies in the…

In a world where visual content is increasingly essential, the ability to create and manipulate images with precision and creativity…

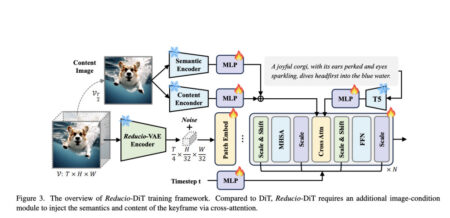

Recent advancements in video generation models have enabled the production of high-quality, realistic video clips. However, these models face challenges…

Large language models (LLMs) are commonly trained on datasets consisting of fixed-length token sequences. These datasets are created by randomly…

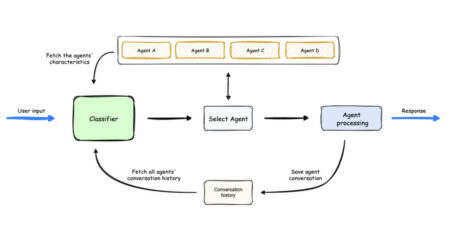

AI-driven solutions are advancing rapidly, yet managing multiple AI agents and ensuring coherent interactions between them remains challenging. Whether for…

Google has introduced a ‘memory’ feature for its Gemini Advanced chatbot, enabling it to remember user preferences and interests for…

Neural networks have traditionally operated as static models with fixed structures and parameters once trained, a limitation that hinders their…

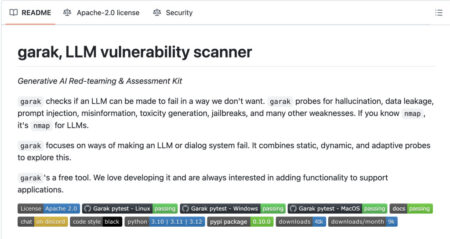

Large Language Models (LLMs) have transformed artificial intelligence by enabling powerful text-generation capabilities. These models require strong security against critical…