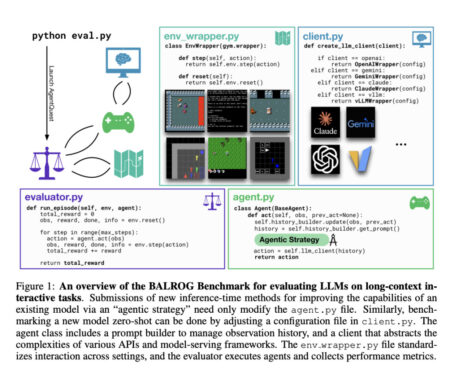

As AI agents become increasingly sophisticated and autonomous, the need for robust tools to manage and optimize their behavior becomes…

Scientific literature synthesis is integral to scientific advancement, allowing researchers to identify trends, refine methods, and make informed decisions. However,…

In recent years, the rise of large language models (LLMs) and vision-language models (VLMs) has led to significant advances in…

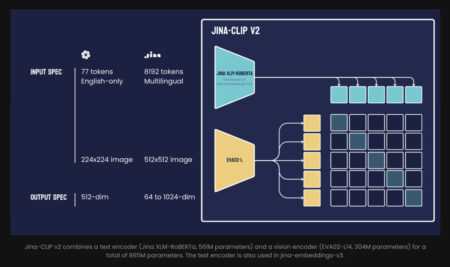

In an interconnected world, effective communication across multiple languages and mediums is increasingly important. Multimodal AI faces challenges in combining…

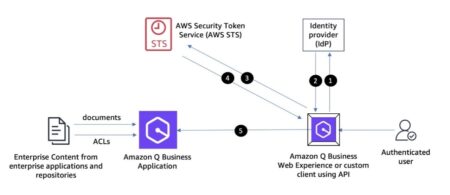

Amazon Q Business is a conversational assistant powered by generative AI that enhances workforce productivity by answering questions and completing…

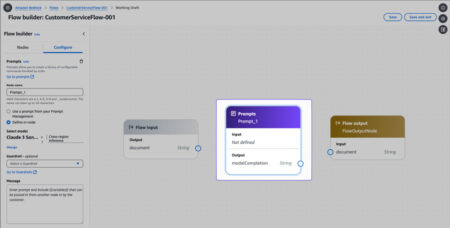

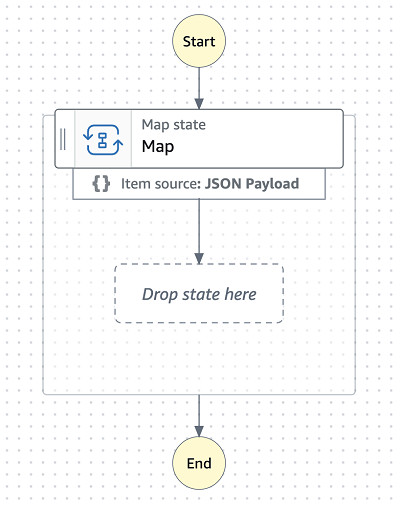

Today, we are excited to announce the general availability of Amazon Bedrock Flows (previously known as Prompt Flows). With Bedrock…

This post is part of an ongoing series about governing the machine learning (ML) lifecycle at scale. To view this…

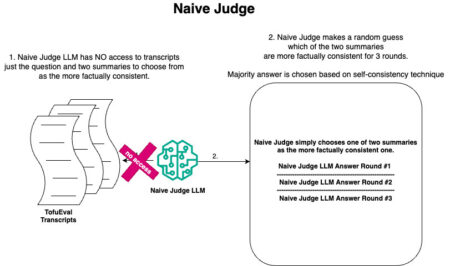

In this post, we demonstrate the potential of large language model (LLM) debates using a supervised dataset with ground truth.…

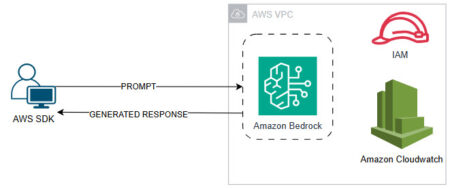

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies…

Companies across all industries are harnessing the power of generative AI to address various use cases. Cloud providers have recognized…

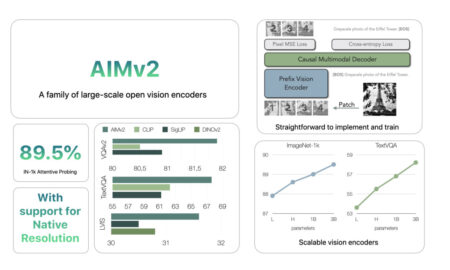

Vision models have evolved significantly over the years, with each innovation addressing the limitations of previous approaches. In the field…

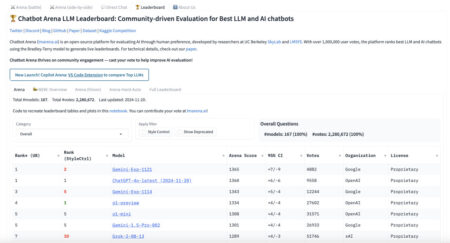

The field of artificial intelligence (AI) continues to evolve, with competition among large language models (LLMs) remaining intense. Despite recent…

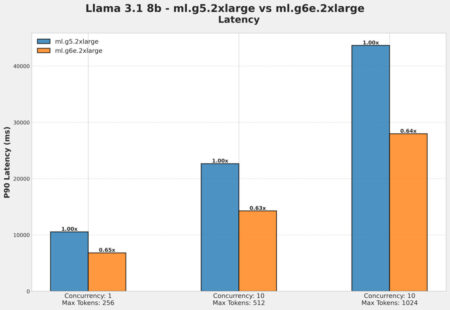

As the demand for generative AI continues to grow, developers and enterprises seek more flexible, cost-effective, and powerful accelerators to…

Companies across various scales and industries are using large language models (LLMs) to develop generative AI applications that provide innovative…

This paper was accepted at the Safe Generative AI Workshop (SGAIW) at NeurIPS 2024. Large language models (LLMs) could be…

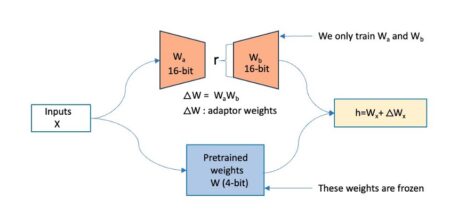

This paper was accepted at the Machine Learning and Compression Workshop at NeurIPS 2024. Compressing Large Language Models (LLMs) often…

Learning with identical train and test distributions has been extensively investigated both practically and theoretically. Much remains to be understood,…

We study the problem of private online learning, specifically, online prediction from experts (OPE) and online convex optimization (OCO). We…

We study private stochastic convex optimization (SCO) under user-level differential privacy (DP) constraints. In this setting, there are $n$ users,…

We study the problem of differentially private stochastic convex optimization (DP-SCO) with heavy-tailed gradients, where we assume a $k^{\text{th}}$-moment bound…