NuMind AI has officially released NuMarkdown-8B-Thinking, an open-source (MIT License) reasoning OCR Vision-Language Model (VLM) that redefines how complex documents…

In this tutorial, we walk through building a compact but fully functional Cipher-based workflow. We start by securely capturing our…

Corpus Aware Training (CAT) leverages valuable corpus metadata during training by injecting corpus information into each training example, and has…

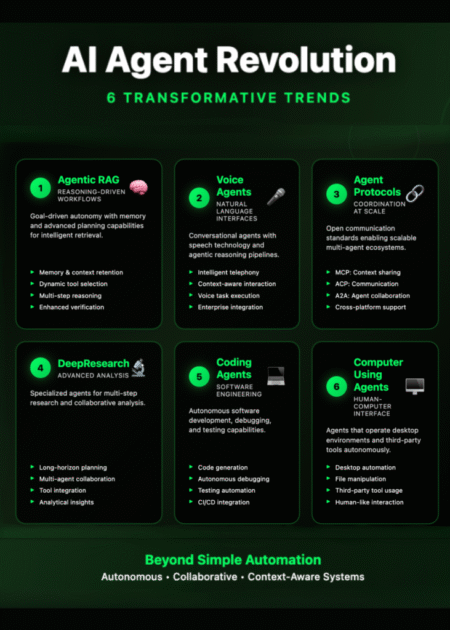

The year 2025 marks a defining moment in the evolution of artificial intelligence, ushering in an era where agentic systems—autonomous…

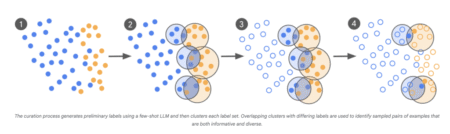

Google Research has unveiled a groundbreaking method for fine-tuning large language models (LLMs) that slashes the amount of required training…

RouteLLM is a flexible framework for serving and evaluating LLM routers, designed to maximize performance while minimizing cost. Key features:…

AI in Market Economics and Pricing Algorithms: AI-driven pricing models, particularly those utilizing reinforcement learning (RL), can lead to outcomes…

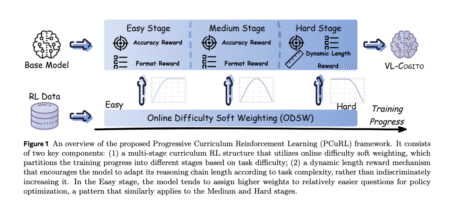

Multimodal reasoning, where models integrate and interpret information from multiple sources such as text, images, and diagrams, is a frontier…

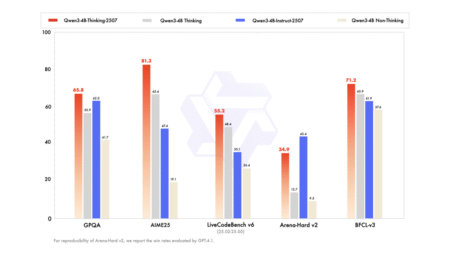

Smaller Models with Smarter Performance and 256K Context Support: Alibaba’s Qwen team has introduced two powerful additions to its small…

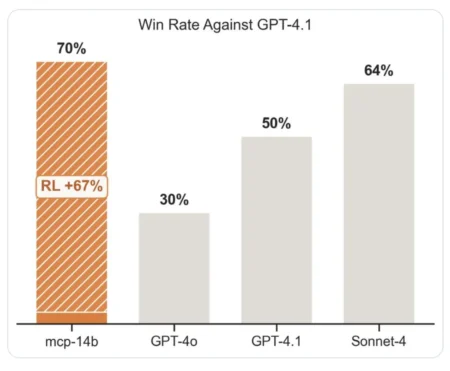

Table of contents: Introduction · What Is MCP-RL? · ART: The Agent Reinforcement Trainer · Code Walkthrough: Specializing LLMs with MCP-RL…

Table of contents: TL;DR · 1) What is an AI agent (2025 definition)? · 2) What can agents do reliably today? · 3)…

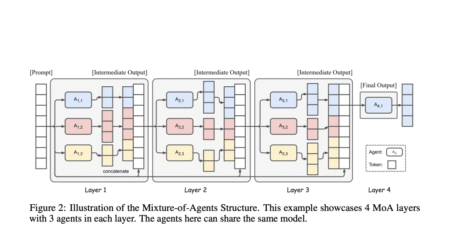

The Mixture-of-Agents (MoA) architecture is a transformative approach for enhancing large language model (LLM) performance, especially on complex, open-ended tasks…

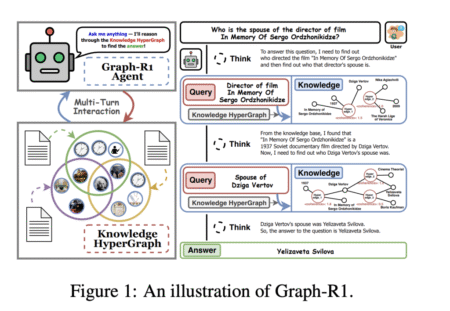

Introduction: Large Language Models (LLMs) have set new benchmarks in natural language processing, but their tendency for hallucination—generating inaccurate outputs—remains…

In this tutorial, we walk through building an advanced PaperQA2 AI Agent powered by Google’s Gemini model, designed specifically for…

Table of contents: Why Classic AI Agent Workflows Fail · The 9 Agentic Workflow Patterns for 2025 · Sequential Intelligence · Parallel Processing…

Autoregressive language models are constrained by their inherently sequential nature, generating one token at a time. This paradigm limits inference…

Estimated reading time: 5 minutes. Table of contents: Introduction · What Is a Proxy Server? · Technical Architecture: Key Functions (2025) · Types…

Contrastive Language-Image Pre-training (CLIP) has become important for modern vision and multimodal models, enabling applications such as zero-shot image classification…

In this tutorial, we begin by showcasing the power of OpenAI Agents as the driving force behind our multi-agent research…

A close reading of Cloudflare’s detailed exposé and the extensive media coverage shows that the controversy surrounding Perplexity AI’s web scraping practices is deeper…

![Proxy Servers Explained: Types, Use Cases & Trends in 2025 [Technical Deep Dive]](https://devstacktips.com/wp-content/uploads/2025/08/a-clean-minimalist-diagram-illustrating-_9aCHlNyfQymMdgxTzJO73A_SCH0U_uISCeZb6TJycAZiA-1-1024x574-kck90b-450x252.png)