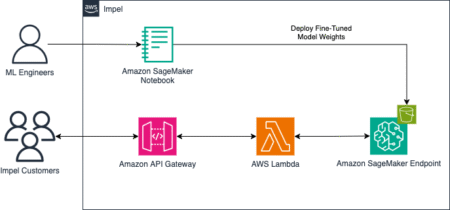

This post is co-written with Tatia Tsmindashvili, Ana Kolkhidashvili, Guram Dentoshvili, and Dachi Choladze from Impel. Impel transforms automotive retail through…

Tokenizer design significantly impacts language model performance, yet evaluating tokenizer quality remains challenging. While text compression has emerged as a…

Vision foundation models pre-trained on massive data encode rich representations of real-world concepts, which can be adapted to downstream tasks…

In this tutorial, we introduce an advanced, interactive web intelligence agent powered by Tavily and Google’s Gemini AI. We’ll learn…

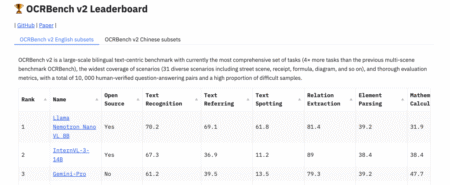

NVIDIA has introduced Llama Nemotron Nano VL, a vision-language model (VLM) designed to address document-level understanding tasks with efficiency and…

We propose a distillation scaling law that estimates distilled model performance based on a compute budget and its allocation between…

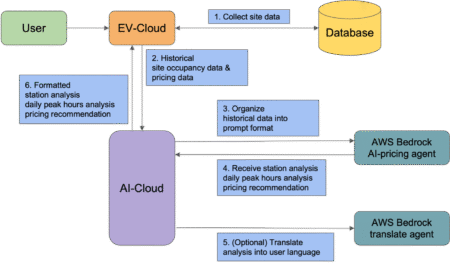

Noodoe is a global leader in EV charging innovation, offering advanced solutions that empower operators to optimize their charging station…

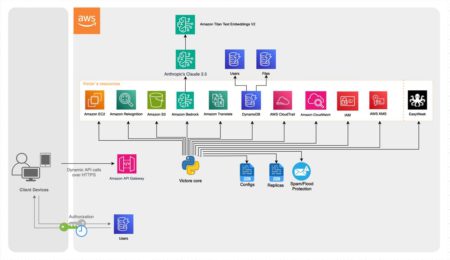

This post is co-written with Taras Tsarenko, Vitalil Bozadzhy, and Vladyslav Horbatenko. As organizations worldwide seek to use AI for…

We’ve witnessed remarkable advances in model capabilities as generative AI companies have invested in developing their offerings. Language models such…

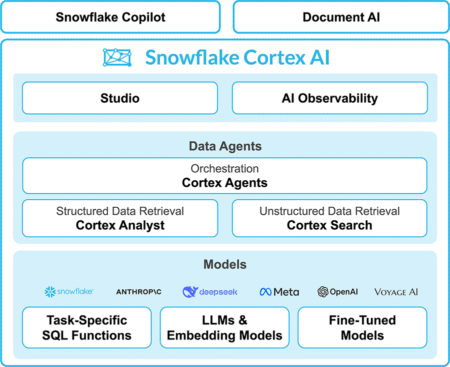

San Francisco, CA – The data cloud landscape is buzzing as Snowflake, a heavyweight in data warehousing and analytics, today…

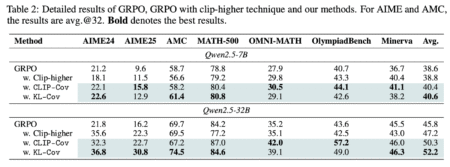

Recent advances in reasoning-centric large language models (LLMs) have expanded the scope of reinforcement learning (RL) beyond narrow, task-specific applications,…

Despite recent progress in robotic control via large-scale vision-language-action (VLA) models, real-world deployment remains constrained by hardware and data requirements.…

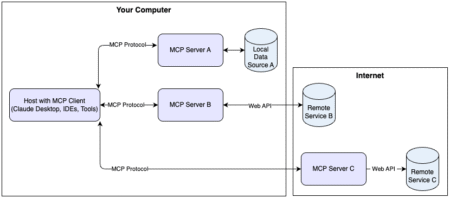

OpenAI has announced a set of targeted updates to its AI agent development stack, aimed at expanding platform compatibility, improving…

Cross-lingual transfer is a popular approach to increase the amount of training data for NLP tasks in a low-resource context.…

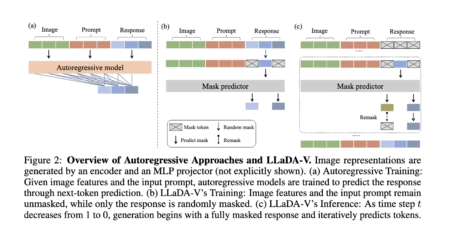

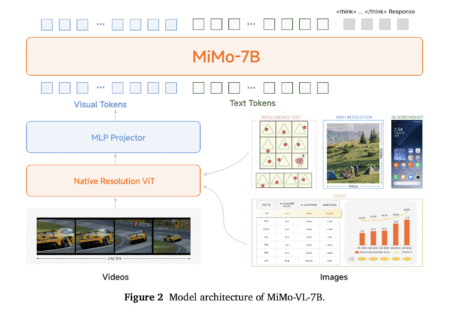

Multimodal large language models (MLLMs) are designed to process and generate content across various modalities, including text, images, audio, and…

The growing adoption of open-source large language models such as Llama has introduced new integration challenges for teams previously relying…

The Mistral Agents API enables developers to create smart, modular agents equipped with a wide range of capabilities. Key features…

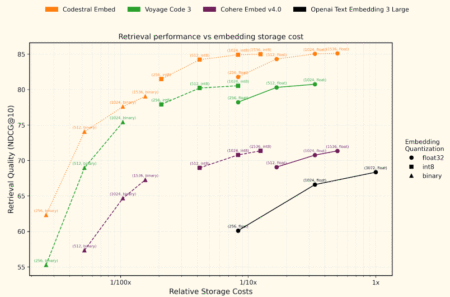

Modern software engineering faces growing challenges in accurately retrieving and understanding code across diverse programming languages and large-scale codebases. Existing…

Vision-language models (VLMs) have become foundational components for multimodal AI systems, enabling autonomous agents to understand visual environments, reason over…

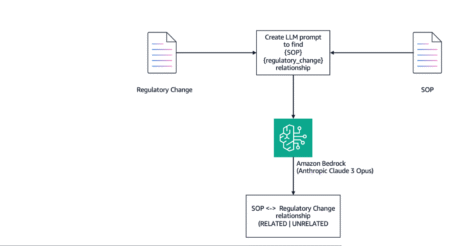

Standard operating procedures (SOPs) are essential documents in the context of regulations and compliance. SOPs outline specific steps for various…