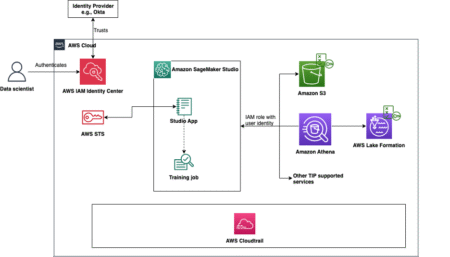

AWS supports trusted identity propagation, a feature that allows AWS services to securely propagate a user’s identity across service boundaries.…

This paper was accepted at the IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA) 2025. Non-negative…

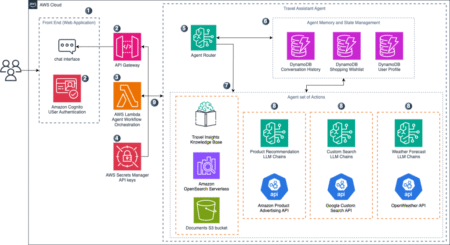

Traveling is enjoyable, but planning a trip can be complex and a hassle. Travelers must book accommodations, plan activities,…

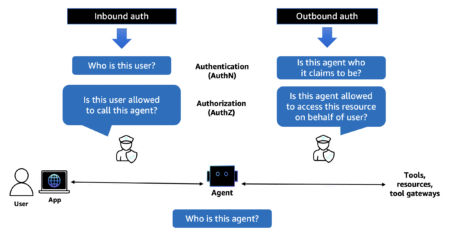

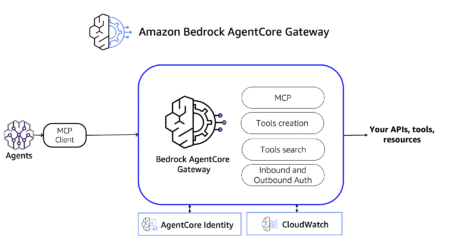

We’re excited to introduce Amazon Bedrock AgentCore Identity, a comprehensive identity and access management service purpose-built for AI agents. With…

Chat-based assistants powered by Retrieval Augmented Generation (RAG) are transforming customer support, internal help desks, and enterprise search by delivering…

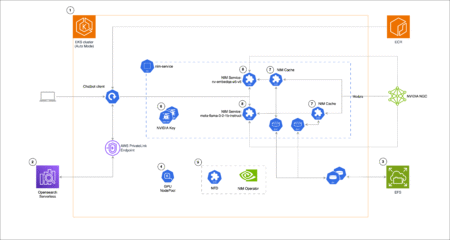

This post is a joint collaboration between Salesforce and AWS and is being cross-published on both the Salesforce Engineering Blog…

The MERN (MongoDB, Express, React, Node.js) stack is a popular JavaScript web development framework. The combination of technologies is well-suited…

To fulfill their tasks, AI agents need access to various capabilities including tools, data stores, prompt templates, and other agents.…

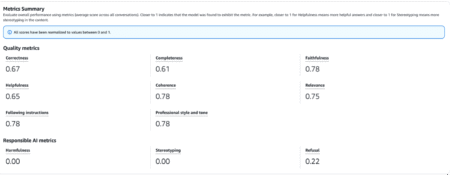

Large language models (LLMs) have become increasingly prevalent across both consumer and enterprise applications. However, their tendency to “hallucinate” information…

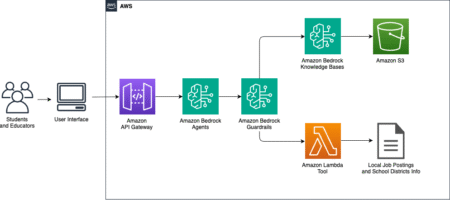

This post was co-authored with Laura Lee Williams and John Jabara from University Startups. University Startups, headquartered in Bethesda, MD,…

Amazon Q Business is a generative AI-powered enterprise assistant that helps organizations unlock value from their data. By connecting to…

Upgrading legacy systems has become increasingly important to stay competitive in today’s market as outdated infrastructure can cost organizations time,…

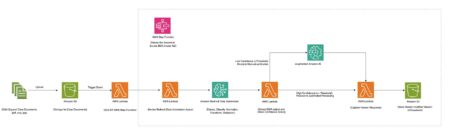

Intelligent document processing (IDP) is a technology to automate the extraction, analysis, and interpretation of critical information from a wide…

We show that the performance of Automatic Speech Recognition (ASR) systems that use semi-supervised speech representations can be boosted by a…

Large language models (LLMs) have achieved impressive performance, leading to their widespread adoption as decision-support tools in resource-constrained contexts like…

The landscape of software engineering automation is evolving rapidly, driven by advances in Large Language Models (LLMs). However, most approaches…

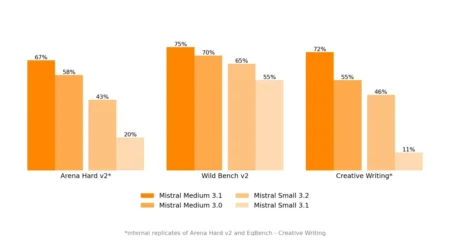

Mistral AI has introduced Mistral Medium 3.1, setting new standards in multimodal intelligence, enterprise readiness, and cost-efficiency for large language…

Artificial intelligence and machine learning workflows are notoriously complex, involving fast-changing code, heterogeneous dependencies, and the need for rigorously repeatable…

In this tutorial, we explore how we can build a fully functional conversational AI agent from scratch using the Pipecat…

In the rapidly evolving field of agentic AI and AI Agents, staying informed is essential. Here’s a comprehensive, up-to-date list…