Large Language Models (LLMs) face deployment challenges due to latency issues caused by memory bandwidth constraints. Researchers use weight-only quantization…

Today, we are excited to announce a new capability in Amazon SageMaker inference that can help you reduce the time…

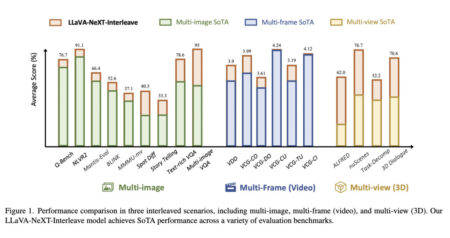

Large Language Models (LLMs) have made significant strides in recent years, prompting researchers to explore the development of Large Vision…

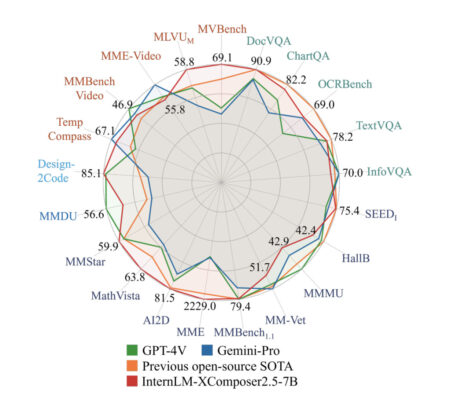

Recent progress in Large Multimodal Models (LMMs) has demonstrated remarkable capabilities in various multimodal settings, moving closer to the goal…

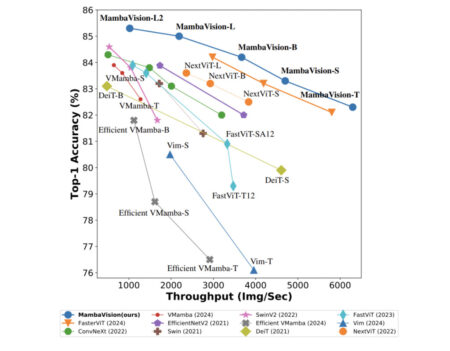

Computer vision enables machines to interpret and understand visual information from the world. This encompasses a variety of tasks, such…

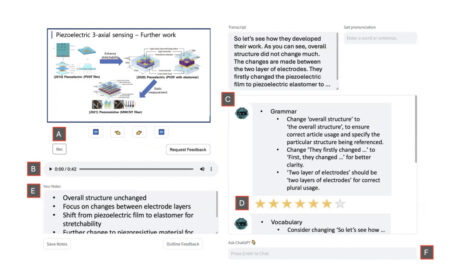

The field of English as a Foreign Language (EFL) focuses on equipping non-native speakers with the skills to communicate effectively…

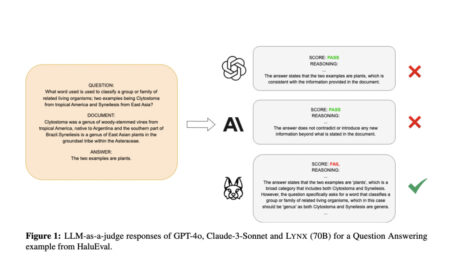

Patronus AI has announced the release of Lynx. This cutting-edge hallucination detection model promises to outperform existing solutions such as…

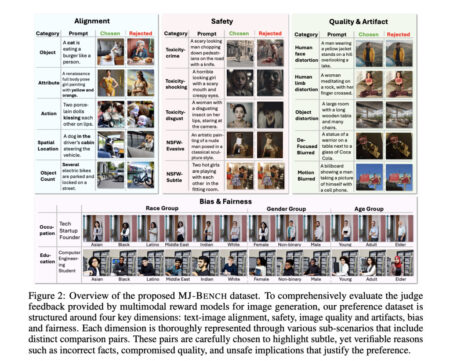

Text-to-image generation models have gained traction with advanced AI technologies, enabling the generation of detailed and contextually accurate images based…

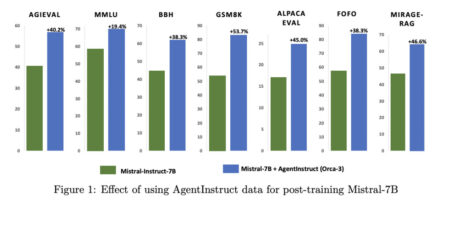

Creating datasets for training custom AI models can be a challenging and expensive task. This process typically requires substantial time…

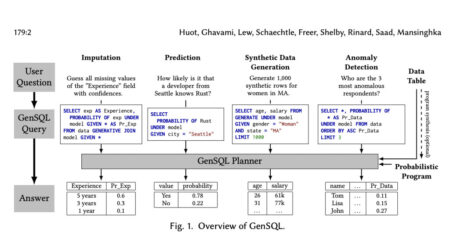

Generative models of tabular data are key in Bayesian analysis, probabilistic machine learning, and fields like econometrics, healthcare, and systems…

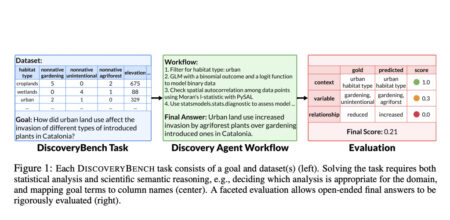

Scientific discovery has been a cornerstone of human advancement for centuries, traditionally relying on manual processes. However, the emergence of…

Large language models (LLMs) have been crucial for driving artificial intelligence and natural language processing to new heights. These models…

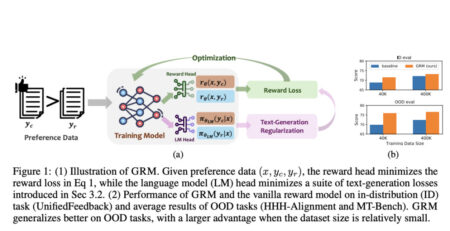

Beyond minimizing a single training loss, many deep learning estimation pipelines rely on an auxiliary objective to quantify and encourage…

Despite the successes of large language models (LLMs), they exhibit significant drawbacks, particularly when processing long contexts. Their inference cost…

Self-attention and masked self-attention are at the heart of Transformers’ outstanding success. Still, our mathematical understanding of attention, in particular…

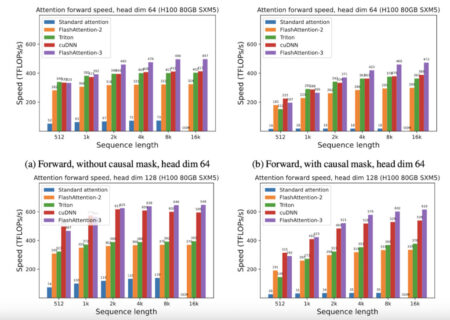

FlashAttention-3, the latest release in the FlashAttention series, has been designed to address the inherent bottlenecks of the attention layer…

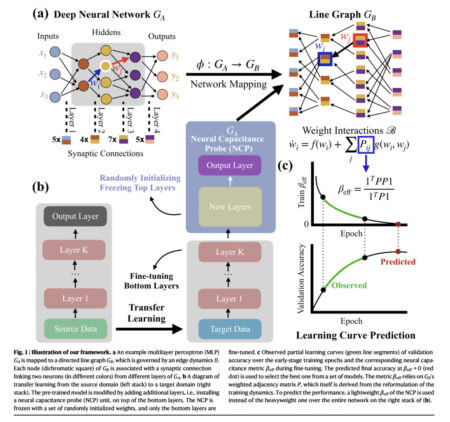

Machine learning, particularly deep neural networks (DNNs), plays a pivotal role in modern technology, influencing innovations like AlphaGo and ChatGPT and integrating them…

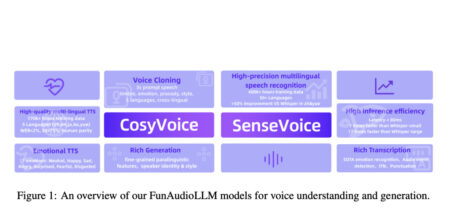

Voice interaction technology has significantly evolved with the advancements in artificial intelligence (AI). The field focuses on enhancing natural communication…

Large language models (LLMs) have been instrumental in various applications, such as chatbots, content creation, and data analysis, due to…

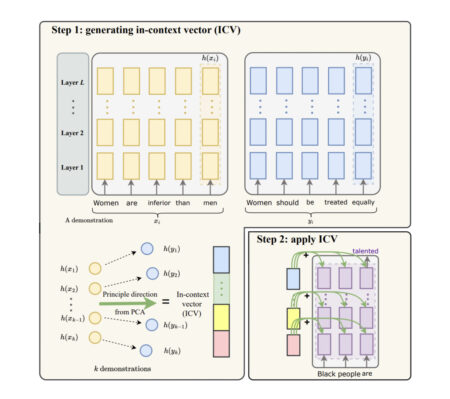

Pretrained large models have shown impressive abilities in many different fields. Recent research focuses on ensuring these models align with…