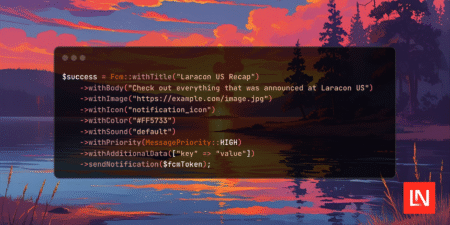

A Laravel package for sending Firebase Cloud Messaging (FCM) notifications with support for Laravel’s notification system. The post Send Notifications…

Libraries & Frameworks

In Agile software development, high-quality test data is crucial, but relying on production data often creates bottlenecks because of privacy and compliance concerns. Synthetic test data, generated by AI, offers a secure, flexible alternative by mimicking real data without exposing sensitive information. It speeds up testing, improves coverage, and uncovers edge cases that production data tends to miss. With synthetic data, teams can quickly generate tailored datasets for each test scenario, reducing waiting times and improving efficiency. Embracing synthetic test data helps businesses accelerate delivery, enhance product quality, and innovate faster.

The post Break Free from Legacy Bottlenecks – How Synthetic Test Data Fuels Agile Innovation first appeared on TestingXperts.
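The excerpt above describes AI-generated synthetic data. As a much simpler illustration of the same idea (fake records that mimic a real schema, are reproducible for each test scenario, and include deliberate edge cases), here is a minimal rule-based Python sketch. It does not use AI. The `Customer` schema and the helper names are hypothetical.

```python
import random
import string
from dataclasses import dataclass


@dataclass
class Customer:
    # Hypothetical schema for illustration only; not taken from any real system.
    customer_id: int
    name: str
    age: int
    email: str


def synthetic_customers(count: int, seed: int = 42) -> list[Customer]:
    """Generate fake customer records with no link to real people.

    A fixed seed makes each dataset reproducible, so a failing test
    can be rerun against exactly the same data.
    """
    rng = random.Random(seed)
    records = []
    for i in range(count):
        name_len = rng.randint(3, 12)
        name = "".join(rng.choices(string.ascii_lowercase, k=name_len)).capitalize()
        records.append(
            Customer(
                customer_id=i + 1,
                name=name,
                age=rng.randint(18, 90),
                email=f"{name.lower()}{i}@example.test",
            )
        )
    return records


def edge_case_customers(start_id: int) -> list[Customer]:
    """Hand-picked boundary values that sampled production data often lacks."""
    return [
        Customer(start_id, "A", 18, "a@example.test"),  # minimum age, 1-char name
        Customer(start_id + 1, "X" * 255, 120, "x@example.test"),  # very long name
        Customer(start_id + 2, "Zoë Ñúñez", 0, "zoe@example.test"),  # non-ASCII, invalid age 0
    ]


if __name__ == "__main__":
    data = synthetic_customers(5, seed=7)
    data += edge_case_customers(start_id=len(data) + 1)
    for c in data:
        print(c)
```

Because the generator is seeded, each test scenario can own its dataset without waiting on a sanitized production copy.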

Imagine this familiar scene: it’s Friday evening, and your team is prepping a hot-fix release. The code passes unit tests, the sprint board is almost empty, and you’re already tasting weekend freedom. Suddenly, a support ticket pings: “Screen-reader users can’t reach the checkout button. The focus keeps looping back to the promo banner.” The clock is ticking,

The post AI in Accessibility Testing: The Future Awaits appeared first on Codoid.

Introducing Laravel Boost, your AI coding starter kit. The post Laravel Boost, your AI coding starter kit appeared first on…

A quick overview of all changes and news from the entire Total.js Platform. Read more about our work.

The Shift-Right approach is rapidly becoming a cornerstone of modern quality engineering, offering enterprises a smarter way to ensure software excellence post-deployment. Unlike the traditional Shift-Left model, which focuses on early testing in the development lifecycle, Shift-Right emphasizes continuous validation in production using real-user data and behavior insights. This strategy helps organizations improve customer experience, resolve issues faster, and make data-informed decisions with greater precision.

The post Shift-Right Testing Isn’t Optional Here’s How AI and Real Users Are Making It Work first appeared on TestingXperts.

Please keep in mind, I’m just trying to get points so I can join a particular group. I need 20. But if you feel like you could give a decent answer, I’m all ears.

The “bugless code architecture” idea mostly comes from me perusing Stack Exchange and seeing so many questions like “How come when I do this, this happens?” or “I’ve been doing it this way for years, so why doesn’t it work with this new thing?”

It seems like a lot of stuff stops working cuz it’s no longer compatible, or something’s “flipped” incorrectly, or it needs to be “connected” to the correct calibrator (I’m sure I’m saying gibberish), but the idea is essentially the same. Why are there so many tiny technical incompatibilities? Isn’t there some form of architecture that limits this or makes it impossible?

Someone should get on that (I ask ignorantly).

Just curious: I’ve always written tests in a declarative style, especially with the page object model. But doesn’t this break the single responsibility principle? I used to write tests in an imperative style, but maintenance was a headache and they were harder to read.

So my question is: is there a general consensus on which style we should be using in our tests? And if it IS declarative, doesn’t that break the SOLID principles (specifically the S)?

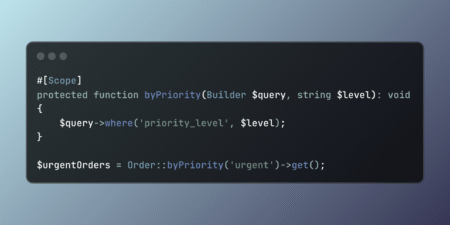

Transform Laravel database queries with reusable scope patterns that encapsulate common filtering logic. These powerful abstractions enable elegant query composition…

Caleb Porzio presented Livewire 4 on the second day of Laracon US 2025, unveiling the next major version of Livewire.…

This blog shows how to install the Total.js IoT platform step by step, including setting up the database, downloading the…

In today’s rapidly evolving software testing and development landscape, ensuring quality at scale can feel like an uphill battle without the right tools. One critical element that facilitates scalable and maintainable test automation is effective configuration management. YAML, short for “YAML Ain’t Markup Language,” stands out as a powerful, easy-to-use tool for managing configurations in

The post YAML for Scalable and Simple Test Automation appeared first on Codoid.

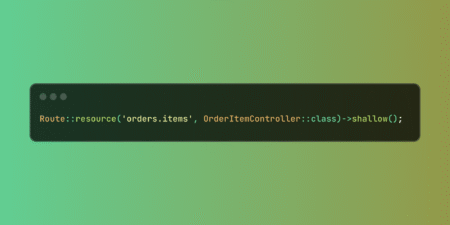

Optimize Laravel API routes with shallow nesting to reduce URL complexity while preserving resource relationships. This approach eliminates redundant parent…

Useful Laravel links to read/watch for this week of July 31, 2025.

Nuno Maduro was the second speaker at Laracon US 2025 on Tuesday, unveiling new features for the upcoming Pest v4.0.…

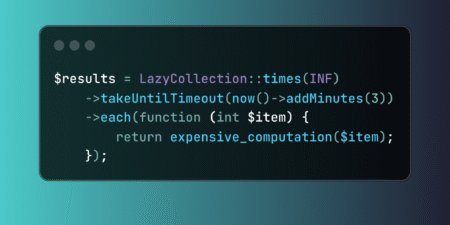

Control long-running Laravel data processing operations with LazyCollection timeout methods. These tools enable efficient dataset handling within time constraints, preventing…

The Laravel passgenerator package lets you easily create wallet passes compatible with Apple Wallet. The post Create Apple Wallet…

Starting July 30, 2025, JetBrains is making Laravel Idea free for PhpStorm users. The post The Laravel Idea Plugin is…


Have you ever wondered why some software teams are consistently great at handling unexpected issues, while others scramble whenever a bug pops up? It comes down to preparation and, more specifically, a software testing technique known as bebugging. You’re probably already familiar with traditional debugging, where developers identify and fix bugs that naturally occur during software

The post Master Bebugging: Fix Bugs Quickly and Confidently appeared first on Codoid.
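Bebugging means deliberately planting known bugs and then checking how many of them the test effort catches. The excerpt is cut off before it describes any metrics. The Python sketch below shows one classic way to use the results, the error-seeding estimate attributed to Mills. It assumes that tests catch genuine bugs at the same rate they catch planted ones, and it is not necessarily the approach the Codoid post describes. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BebuggingResult:
    seeded_total: int   # bugs deliberately planted before testing
    seeded_found: int   # planted bugs the test effort caught
    native_found: int   # genuine (unplanted) bugs caught in the same effort


def detection_rate(r: BebuggingResult) -> float:
    """Fraction of planted bugs the tests caught."""
    return r.seeded_found / r.seeded_total


def estimated_native_total(r: BebuggingResult) -> float:
    """Estimate total genuine bugs, assuming genuine bugs are caught
    at the same rate as planted ones (error-seeding estimate)."""
    if r.seeded_found == 0:
        raise ValueError("no seeded bugs found; the estimate is undefined")
    return r.native_found * r.seeded_total / r.seeded_found


def estimated_native_remaining(r: BebuggingResult) -> float:
    """Genuine bugs estimated to still be hiding after this test run."""
    return estimated_native_total(r) - r.native_found


if __name__ == "__main__":
    # Example: 20 bugs planted, tests caught 15 of them plus 30 genuine bugs.
    result = BebuggingResult(seeded_total=20, seeded_found=15, native_found=30)
    print(detection_rate(result))              # 0.75
    print(estimated_native_total(result))      # 40.0
    print(estimated_native_remaining(result))  # 10.0
```

A low detection rate on planted bugs is a signal that the suite would also miss real ones. The estimate is only as good as the assumption that planted bugs resemble genuine ones.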