What an exciting time to be a developer! GPT-5 just dropped and it’s already available in GitHub Copilot. I was literally watching OpenAI’s livestream when they announced it, and I couldn’t contain my excitement, especially knowing we could start building with it immediately with our favorite AI pair programmer.

If you’ve been following our Rubber Duck Thursday streams, you know I love exploring new AI models and tools. This stream was all about two game-changing releases: GPT-5 and the GitHub Model Context Protocol (MCP) server. And wow, did we cover some ground!

Let me walk you through what we built, what we learned, and how you can start using these powerful tools in your own development workflow today.

GPT-5: Bringing increased reasoning capabilities to your workflows

GPT-5 is OpenAI’s most advanced model yet, and the best part? It’s now available to use in your favorite IDE. You can access it in ask, edit, and agent modes in VS Code — which is incredible because not all models are available across all modes.

What really impressed me was the speed. This is a reasoning model, and the response time was genuinely faster than I expected. When I was building with it, suggestions came back almost instantly, and the quality was noticeably different.

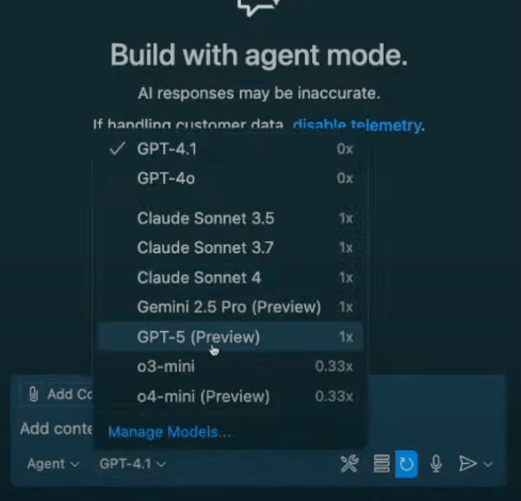

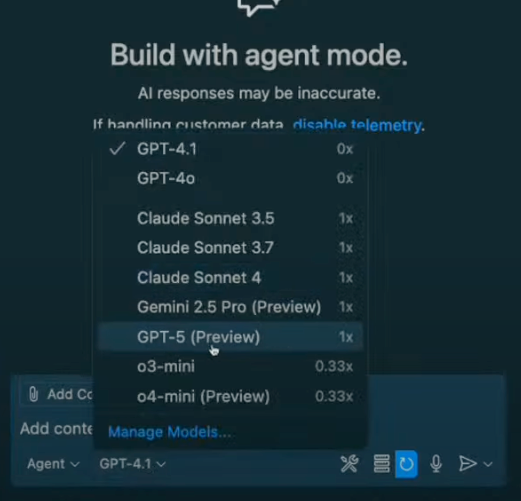

How to enable GPT-5 in GitHub Copilot:

- Open the model picker in your Copilot interface

- Select GPT-5 from the available options

- Start building!

Enterprise note: If you’re using GitHub Copilot through your company, enterprise and business administrators need to opt in to enable GPT-5 access. Chat with your IT team if you don’t see it available yet.

Live test: Building a Magic Tiles game in under 60 seconds

I wanted to put GPT-5 to the test immediately, so I asked my stream audience what I should build. The overwhelming response? A game! Someone suggested Magic Tiles (which, I’ll be honest, I had no idea how to play). But that’s where GPT-5 really shined.

Here’s my approach when building with AI (what I call spec-driven development):

Step 1: Let AI create the product requirements

Instead of jumping straight into code, I asked GPT-5:

    Do you know the game Magic Tiles? If you do, can you describe the game in simple MVP terms? No auth, just core functionality.

GPT-5 delivered an incredibly detailed response with:

- Task breakdown and core gameplay loop

- Minimal feature set requirements

- Data model structure

- Clear checklist for building the game

This is exactly why context is king with LLMs. By asking for a spec first, I gave GPT-5 enough context to build something cohesive and functional.

Step 2: Build with a simple prompt

With the MVP spec ready, I simply said:

    Build this.

That’s it. No framework specifications, no technology stack requirements, just “build this.” And you know what? GPT-5 made smart choices:

- Using HTML, CSS, and JavaScript for a simple MVP

- Creating a canvas-based game with proper input handling

- Adding scoring, combo tracking, and speed progression

- Implementing game over functionality

The entire build took less than a minute. I’m not exaggerating; GPT-5 delivered a working prototype that quickly.
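To make those pieces a little more concrete, here is a minimal TypeScript sketch of the kind of state update a tile-tapping game needs: scoring, combo tracking, speed progression, and game over. This is not the code GPT-5 generated on stream. The names (`GameState`, `hitTile`, `missTile`) and all the tuning constants are assumptions I picked for illustration.

```typescript
// Hypothetical game state for a Magic Tiles-style MVP (not the stream's actual code).
interface GameState {
  score: number;
  combo: number; // consecutive successful taps
  speed: number; // tile fall speed in pixels per second
  gameOver: boolean;
}

// Assumed tuning values; a real game would adjust these through playtesting.
const BASE_SPEED = 200;
const SPEED_STEP = 20; // speed added for every SPEED_INTERVAL points scored
const SPEED_INTERVAL = 10;
const MAX_SPEED = 600;

function newGame(): GameState {
  return { score: 0, combo: 0, speed: BASE_SPEED, gameOver: false };
}

// A successful tap: extend the combo, award points, and ramp up the speed.
function hitTile(state: GameState): GameState {
  if (state.gameOver) return state;
  const combo = state.combo + 1;
  // Each completed streak of 5 hits adds one bonus point to the tap.
  const score = state.score + 1 + Math.floor(combo / 5);
  const speed = Math.min(
    MAX_SPEED,
    BASE_SPEED + Math.floor(score / SPEED_INTERVAL) * SPEED_STEP
  );
  return { score, combo, speed, gameOver: false };
}

// In this simplified version, a single missed tile ends the run.
function missTile(state: GameState): GameState {
  return { ...state, combo: 0, gameOver: true };
}
```

A canvas render loop would call `hitTile` or `missTile` from its input handler and read `speed` when moving tiles each frame. Keeping the rules in pure functions like this also makes them easy to test without a browser.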

Step 3: Iterate with natural language

When I realized the game needed better user instructions, I asked:

    Can you provide user instructions on how to play the game before the user clicks start?

GPT-5 immediately updated the HTML with clear instructions and even gave me suggestions for next features. This iterative approach felt incredibly natural, like having a conversation with a very smart coding partner.

Next, let’s take a look at the GitHub MCP Server!

GitHub MCP server: Automating GitHub with natural language

Now, let’s talk about something that’s going to change how you interact with GitHub: the Model Context Protocol (MCP) server.

What is MCP and why should you care?

MCP is a standard for connecting AI assistants to external tools and applications. Think of it as a bridge that lets your large language model (LLM) talk to:

- GitHub repositories and issues

- Gmail accounts

- SQL servers

- Figma projects

- And so much more

Without MCP, your LLMs live in isolation. With MCP, they become powerful automation engines that can interact with your entire development ecosystem.

MCP follows a client-server architecture, similar to REST APIs. VS Code, for example, acts as both the host (providing the environment) and the client (connecting to MCP servers).
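Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. The TypeScript sketch below shows roughly what a client sends to list a server’s tools and to call one of them. The `tools/list` and `tools/call` method names come from the MCP specification. The tool name `create_repository` and its arguments are illustrative assumptions, so check what your server actually reports before relying on them.

```typescript
// Shape of a JSON-RPC 2.0 request, the message format MCP builds on.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Ask the server which tools it exposes.
function listToolsRequest(): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method: "tools/list" };
}

// Ask the server to run one tool with the given arguments.
function callToolRequest(
  name: string,
  args: Record<string, unknown>
): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical tool name and arguments, for illustration only.
const request = callToolRequest("create_repository", {
  name: "teenyhost",
  private: false,
});

// This string is what would travel over the transport to the server.
const wireMessage = JSON.stringify(request);
```

In practice the editor builds and sends these messages for you. The takeaway is that every natural-language request eventually becomes a structured tool call like this one.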

Setting up GitHub MCP server (surprisingly simple!)

Getting started with GitHub’s MCP server takes less than 5 minutes:

1. Create the configuration file

Create a .vscode/mcp.json file in your workspace root:

    {
      "servers": {
        "github": {
          "command": "npx",
          "args": ["-y", "@github/mcp-server-github"]
        }
      }
    }

2. Authenticate with GitHub

Click the “Start” button in your MCP configuration. You’ll go through a standard GitHub OAuth flow (with passkey support!).

3. Access your tools

Once authenticated, you’ll see GitHub MCP server tools available in your Copilot interface.

That’s it! No complex setup, no API keys to manage, just simple configuration and authentication.
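If the server doesn’t show up, a malformed config file is a likely culprit. As a rough aid, the TypeScript sketch below checks that a parsed mcp.json has the shape used in this post: a `servers` object whose entries have a string `command` and an optional array of string `args`. The function name `checkMcpConfig` is hypothetical, and it only covers the fields shown here, not everything VS Code accepts.

```typescript
// Returns a list of problems found; an empty list means the shape looks right.
function checkMcpConfig(config: unknown): string[] {
  const problems: string[] = [];
  if (typeof config !== "object" || config === null) {
    return ["config must be a JSON object"];
  }
  const servers = (config as Record<string, unknown>)["servers"];
  if (typeof servers !== "object" || servers === null || Array.isArray(servers)) {
    return ['missing "servers" object'];
  }
  for (const [name, entry] of Object.entries(servers as Record<string, unknown>)) {
    if (typeof entry !== "object" || entry === null) {
      problems.push(`server "${name}" must be an object`);
      continue;
    }
    const { command, args } = entry as Record<string, unknown>;
    if (typeof command !== "string" || command.length === 0) {
      problems.push(`server "${name}" needs a non-empty "command" string`);
    }
    const argsOk =
      args === undefined ||
      (Array.isArray(args) && args.every((a) => typeof a === "string"));
    if (!argsOk) {
      problems.push(`server "${name}": "args" must be an array of strings`);
    }
  }
  return problems;
}

// The configuration from this post, parsed from its JSON text.
const sample: unknown = JSON.parse(
  '{"servers":{"github":{"command":"npx","args":["-y","@github/mcp-server-github"]}}}'
);
const sampleProblems = checkMcpConfig(sample);
```

Here `sampleProblems` comes back empty, while a config with a missing `command` or a non-array `args` produces one message per problem.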

Real-world MCP automation that will blow your mind

During the stream, I demonstrated some genuinely useful MCP workflows that you can start using today.

Creating a repository with natural language

I previously built a project called “Teenyhost” (a clone of Tiinyhost for temporarily deploying documents to the web), and I wanted to create a repository for it. Instead of manually creating a GitHub repo, I simply asked Copilot:

    Can you create a repository for this project called teenyhost?

GPT-5 asked for the required details:

- Repository name: teenyhost

- Owner: my GitHub username

- Visibility: public

- Optional description

I provided these details, and within seconds, Copilot used the MCP server to:

- Create the repository on GitHub

- Push my local code to the new repo

- Set up the proper Git remotes

This might seem simple, but think about the workflow implications. How many times have you been deep in a coding session and wanted to quickly push a project to GitHub? Instead of context-switching to the browser, you can now handle it with natural language right in your editor.

Bulk issue creation from natural language

Here’s where things get really interesting. I asked Copilot:

    What additional features and improvements can I implement in this app?

It came back with categorized suggestions:

- Low effort quick wins

- Core robustness improvements

- Enhanced user experience features

- Advanced functionality

Then I said:

    Can you create issues for all the low effort improvements in this repo?

And just like that, Copilot created five properly formatted GitHub issues with:

- Descriptive titles

- Detailed descriptions

- Implementation suggestions

- Appropriate labels

Think about how powerful this is for capturing project ideas. Instead of losing great suggestions in Slack threads or meeting notes, you can immediately convert conversations into actionable GitHub issues.
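Each of those issues boils down to a small structured payload. As a hedged TypeScript sketch of that step, the code below filters a list of suggestions down to the low-effort ones and turns each into an issue draft with a title, body, and labels. The `Suggestion` and `IssueDraft` types, the effort categories, the label names, and the sample data are all assumptions for illustration. They are not the MCP server’s schema or the issues from the stream.

```typescript
type Effort = "low" | "medium" | "high";

// Hypothetical shape for one brainstormed suggestion.
interface Suggestion {
  title: string;
  details: string;
  implementationHint: string;
  effort: Effort;
}

// Hypothetical shape for an issue that is ready to be created.
interface IssueDraft {
  title: string;
  body: string;
  labels: string[];
}

// Keep only suggestions at the requested effort level and format them as issues.
function toIssueDrafts(suggestions: Suggestion[], effort: Effort): IssueDraft[] {
  return suggestions
    .filter((s) => s.effort === effort)
    .map((s) => ({
      title: s.title,
      body: `${s.details}\n\nImplementation notes:\n${s.implementationHint}`,
      labels: ["enhancement", `effort: ${effort}`],
    }));
}

// Made-up sample data, not the suggestions from the stream.
const suggestions: Suggestion[] = [
  {
    title: "Add a favicon",
    details: "The app currently shows the default browser icon.",
    implementationHint: "Add an icon file and link it from the page head.",
    effort: "low",
  },
  {
    title: "Add upload size validation",
    details: "Large uploads fail without a clear error.",
    implementationHint: "Check the file size before sending the request.",
    effort: "low",
  },
  {
    title: "Add user accounts",
    details: "Let people manage their own uploads.",
    implementationHint: "Needs auth, storage changes, and new pages.",
    effort: "high",
  },
];

const drafts = toIssueDrafts(suggestions, "low");
```

With this sample data, `drafts` holds two entries, one per low-effort suggestion, and each could be handed to an issue-creation tool call.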

What makes this workflow revolutionary

After using both GPT-5 and the GitHub MCP server extensively, here’s what stood out:

Speed and context retention

GPT-5’s processing speed is genuinely impressive. This isn’t just about faster responses — it’s about maintaining flow state while building. When your AI assistant can keep up with your thought process, the entire development experience becomes more fluid.

Natural language as a development interface

The GitHub MCP server eliminates the friction between having an idea and taking action. No more:

- Switching between VS Code and github.com

- Manually formatting issue descriptions

- Context-switching between coding and project management

Human-in-the-loop automation

What I love about this setup is that you maintain control. When Copilot wanted to push directly to the main branch, I could cancel that action. The AI handles the tedious parts while you make the important decisions.
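One way to picture that control point is a small policy check that runs before any tool call. The TypeScript sketch below is my assumption about how such a gate could look, not how Copilot implements it. The `ProposedAction` type, the action kinds, and the protected branch list are all hypothetical.

```typescript
// Hypothetical description of something the assistant wants to do.
interface ProposedAction {
  kind: "create_issue" | "create_repository" | "push";
  branch?: string;
}

// Assumed list of branches that should never be pushed to without approval.
const PROTECTED_BRANCHES = ["main", "master"];

// Decide whether a human must approve the action before it runs.
function needsConfirmation(action: ProposedAction): boolean {
  if (action.kind === "push") {
    // Pushes to a protected branch, or to an unspecified branch, need approval.
    return (
      action.branch === undefined ||
      PROTECTED_BRANCHES.indexOf(action.branch) !== -1
    );
  }
  // Creating a repository has wider effects than opening an issue.
  return action.kind === "create_repository";
}

// Run the action only if it is low risk or the user explicitly approved it.
function runIfAllowed(
  action: ProposedAction,
  approved: boolean,
  run: () => void
): boolean {
  if (needsConfirmation(action) && !approved) {
    return false; // cancelled: the action never runs
  }
  run();
  return true;
}
```

Calling `runIfAllowed({ kind: "push", branch: "main" }, false, doPush)` returns `false` without invoking `doPush`, which mirrors cancelling the push during the stream.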

Your action plan: Start building today

Want to dive in? Here’s exactly what to do:

Try GPT-5 immediately

- Open GitHub Copilot in your IDE

- Switch to the GPT-5 model in your model picker

- Start with agent mode for complex builds

- Try the spec-driven approach: ask for requirements first, then build

Set up GitHub MCP server

- Create .vscode/mcp.json in your workspace

- Add the GitHub MCP server configuration

- Authenticate with GitHub

- Start automating your GitHub workflows with natural language

Experiment with automation workflows:

- Create repositories for side projects

- Generate issues from brainstorming sessions

- Automate branch creation and pull request workflows

- Explore the full range of MCP tools available

On the horizon

The combination of GPT-5 and GitHub MCP server represents a significant shift in how we interact with our development tools. We’re moving from manual, interface-driven workflows to conversational, intent-driven automation.

On our next Rubber Duck Thursday, I’m planning to build our first custom MCP server from scratch. I’ve never built one before, so we’ll learn together — which is always the most fun way to explore new technology.

In the meantime, I encourage you to:

- Install the GitHub MCP server and experiment with it.

- Try building something with GPT-5 using the spec-driven approach.

- Share your experiments and results with the community.

The tools are here, they’re accessible, and they’re ready to supercharge your development workflow. What are you going to build first?


The post GPT-5 in GitHub Copilot: How I built a game in 60 seconds appeared first on The GitHub Blog.
