In this post, we demonstrate how to build an end-to-end solution for text classification using the Amazon Bedrock batch inference capability with Anthropic’s Claude Haiku model. Amazon Bedrock batch inference offers a 50% discount compared to the on-demand price, which is an important factor when dealing with a large number of requests. We walk through classifying travel agency call center conversations into categories, showcasing how to generate synthetic training data, process large volumes of text data, and automate the entire workflow using AWS services.

Challenges with high-volume text classification

Organizations across various sectors face a common challenge: the need to efficiently handle high-volume classification tasks. From travel agency call centers categorizing customer inquiries to sales teams analyzing lost opportunities and finance departments classifying invoices, these manual processes are a daily necessity. But these tasks come with significant challenges.

The manual approach to analyzing and categorizing these classification requests is not only time-intensive but also prone to inconsistencies. As teams process the high volume of data, the potential for errors and inefficiencies grows. By implementing automated systems to classify these interactions, multiple departments stand to gain substantial benefits. They can uncover hidden trends in their data, significantly enhance the quality of their customer service, and streamline their operations for greater efficiency.

However, the path to effective automated classification has its own challenges. Organizations must grapple with the complexities of efficiently processing vast amounts of textual information while maintaining consistent accuracy in their classification results. In this post, we demonstrate how to create a fully automated workflow while keeping operational costs under control.

Data

For this solution, we used synthetic call center conversation data. To obtain realistic training data while maintaining user privacy, we generated synthetic conversations using Anthropic’s Claude 3.7 Sonnet. We used the following prompt to generate synthetic data:

The synthetic dataset includes the following information:

- Customer inquiries about flight bookings

- Hotel reservation discussions

- Travel package negotiations

- Customer service complaints

- General travel inquiries

Solution overview

The solution architecture uses a serverless, event-driven, scalable design to effectively handle and classify large quantities of classification requests. Built on AWS, it automatically starts working when new classification request data arrives in an Amazon Simple Storage Service (Amazon S3) bucket. The system then uses Amazon Bedrock batch processing to analyze and categorize the content at scale, minimizing the need for constant manual oversight.

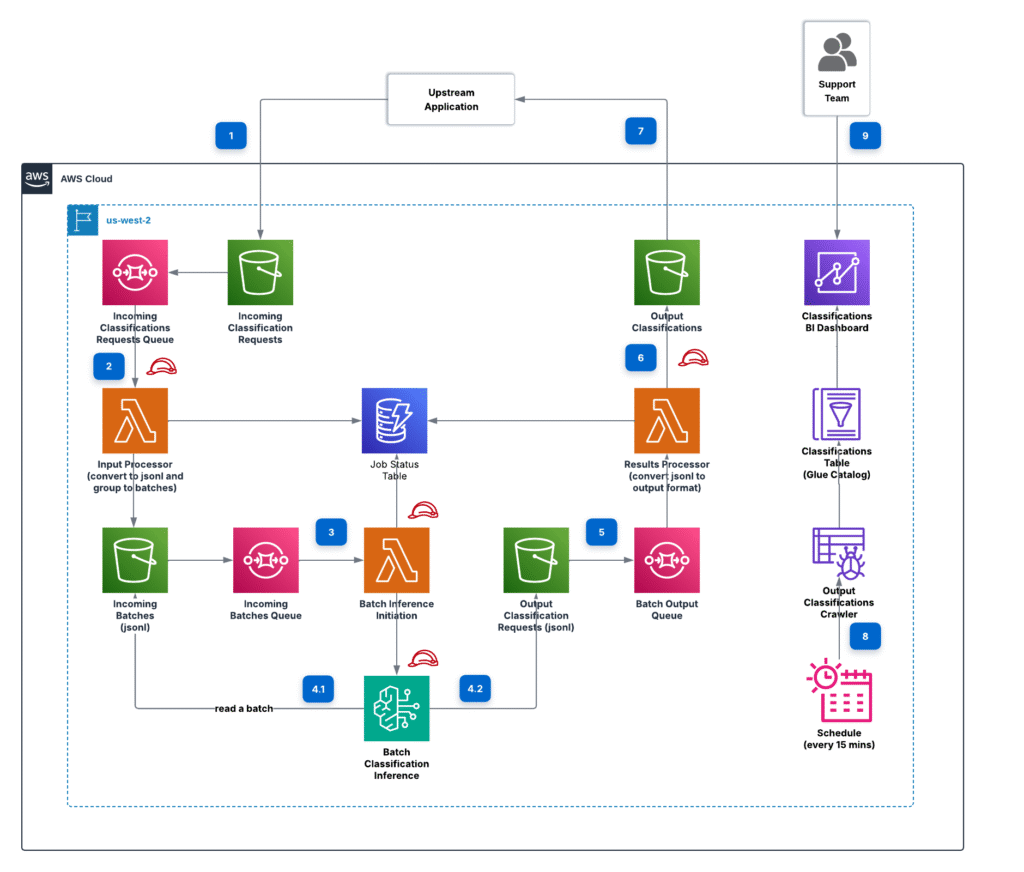

The following diagram illustrates the solution architecture.

The architecture follows a well-structured flow that facilitates reliable processing of classification requests:

- Data preparation – The process begins when the user or application submits classification requests into the S3 bucket (Step 1). These requests are ingested into an Amazon Simple Queue Service (Amazon SQS) queue, providing a reliable buffer for incoming data and making sure no requests are lost during peak loads. A serverless data processor, implemented using an AWS Lambda function, reads messages from the queue and begins its data processing work (Step 2). It prepares the data for batch inference, converting it into the JSONL format with the schema that Amazon Bedrock requires. It stores the resulting files in a separate S3 bucket to maintain a clear separation from the original S3 bucket shared with the customer’s application, enhancing security and data management.

- Batch inference – When the data arrives in the S3 bucket, it initiates a notification to an SQS queue. This queue activates the Lambda function batch initiator, which starts the batch inference process. The function submits Amazon Bedrock batch inference jobs through the CreateModelInvocationJob API (Step 3). This initiator acts as the bridge between the queued data and the powerful classification capabilities of Amazon Bedrock. Amazon Bedrock then efficiently processes the data in batches. This batch processing approach allows for optimal use of resources while maintaining high throughput. When Amazon Bedrock completes its task, the classification results are stored in an output S3 bucket (Step 4) for postprocessing and analysis.

- Classification results processing – After classification is complete, the system processes the results through another SQS queue (Step 5) and specialized Lambda function, which organizes the classifications into simple-to-read files, such as CSV, JSON, or XLSX (Step 6). These files are immediately available to both the customer’s applications and support teams who need to access this information (Step 7).

- Analytics – We built an analytics layer that automatically catalogs and organizes the classification results, transforming raw classification data into actionable insights. An AWS Glue crawler catalogs everything so it can be quickly found later (Step 8). Your business teams can then use Amazon Athena to run SQL queries against the data, uncovering patterns and trends in the classified categories. We also built an Amazon QuickSight dashboard that provides visualization capabilities, so stakeholders can transform datasets into actionable reports ready for decision-making (Step 9).
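The batch initiator step (Step 3) can be sketched in Python as follows. This is a minimal illustration rather than the repository's actual Lambda code: the function names, the job naming pattern, and the example ARNs and URIs are assumptions. Only the `create_model_invocation_job` call and its parameter names come from the Amazon Bedrock API.

```python
from datetime import datetime, timezone


def build_batch_job_request(input_s3_uri, output_s3_uri, role_arn, model_id, job_prefix="genai"):
    """Assemble keyword arguments for bedrock.create_model_invocation_job.

    The job name pattern is a hypothetical convention, not the repository's.
    """
    timestamp = datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    return {
        "jobName": f"{job_prefix}-classification-{timestamp}",
        "roleArn": role_arn,
        "modelId": model_id,
        "inputDataConfig": {"s3InputDataConfig": {"s3Uri": input_s3_uri}},
        "outputDataConfig": {"s3OutputDataConfig": {"s3Uri": output_s3_uri}},
    }


def submit_batch_job(request):
    """Submit the job and return its ARN (requires AWS credentials and boto3)."""
    import boto3  # imported lazily so the request builder can be exercised offline

    bedrock = boto3.client("bedrock")
    response = bedrock.create_model_invocation_job(**request)
    return response["jobArn"]
```

A caller would build the request from the S3 object named in the SQS notification and pass it to `submit_batch_job`. Keeping the request builder separate from the API call makes the logic easy to unit test without AWS access.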

We use AWS best practices in this solution, including event-driven and batch processing for optimal resource utilization, batch operations for cost-effectiveness, decoupled components for independent scaling, and least privilege access patterns. We implemented the system using the AWS Cloud Development Kit (AWS CDK) with TypeScript for infrastructure as code (IaC) and Python for application logic. This combination supports automation, dynamic scaling, and efficient processing of classification requests, positioning the solution to address both current requirements and future demands.

Prerequisites

To implement the solution, you must have the following prerequisites:

- An active AWS account.

- An AWS Region from the list of batch inference supported Regions for Amazon Bedrock.

- Access to your selected models hosted on Amazon Bedrock. Make sure the selected model has been enabled in Amazon Bedrock. The solution is configured to use Anthropic’s Claude 3 Haiku by default.

- Sign up for QuickSight in the same Region where the main application will be deployed. While subscribing, make sure to configure access to Athena and Amazon S3.

- In QuickSight, create a group named quicksight-access for managing dashboard access permissions. Make sure to add your own role to this group so you can access the dashboard after it’s deployed. If you use a different group name, modify the corresponding name in the code accordingly.

- To set up the AWS CDK, install the AWS CDK Command Line Interface (CLI). For instructions, see AWS CDK CLI reference.

Deploy the solution

The solution is accessible in the GitHub repository.

Complete the following steps to set up and deploy the solution:

- Clone the Repository: Run the command git clone git@github.com:aws-samples/sample-genai-bedrock-batch-classifier.git

- Set Up AWS Credentials: Create an AWS Identity and Access Management (IAM) user with appropriate permissions, generate credentials for AWS Command Line Interface (AWS CLI) access, and create a profile. For instructions, see Authenticating using IAM user credentials for the AWS CLI. You can use the Admin Role for testing purposes, although it violates the principle of least privilege and should be avoided in production environments in favor of custom roles with minimal required permissions.

- Bootstrap the Application: In the CDK folder, run the command npm install && cdk bootstrap --profile {your_profile_name}, replacing {your_profile_name} with your AWS profile name.

- Deploy the Solution: Run the command cdk deploy --all --profile {your_profile_name}, replacing {your_profile_name} with your AWS profile name.

After you complete the deployment process, you will see a total of six stacks created in your AWS account, as illustrated in the following screenshot.

SharedStack acts as a central hub for resources that multiple parts of the system need to access. Within this stack, there are two S3 buckets: one handles internal operations behind the scenes, and the other serves as a bridge between the system and customers, so they can both submit their classification requests and retrieve their results.

DataPreparationStack serves as a data transformation engine. It’s designed to handle incoming files in three specific formats: XLSX, CSV, and JSON, which at the time of writing are the only supported input formats. This stack’s primary role is to convert these inputs into the specialized JSONL format required by Amazon Bedrock. The data processing script is available in the GitHub repo. This transformation makes sure that incoming data, regardless of its original format, is properly structured before being processed by Amazon Bedrock. The format is as follows:
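The repository's exact record layout is not reproduced here. As a rough sketch, assuming the Anthropic Messages request body that Amazon Bedrock accepts for Claude models, each JSONL line pairs a `recordId` with a `modelInput` object. The function names, the `{text}` placeholder, and the `max_tokens` default below are illustrative assumptions.

```python
import json


def to_bedrock_record(record_id, text, prompt_template, max_tokens=512):
    """Build one batch inference record for an Anthropic Claude model.

    prompt_template is a hypothetical template containing a {text} placeholder.
    """
    prompt = prompt_template.replace("{text}", text)
    return {
        "recordId": record_id,
        "modelInput": {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "messages": [
                {"role": "user", "content": [{"type": "text", "text": prompt}]}
            ],
        },
    }


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records) + "\n"
```

For example, `to_jsonl([to_bedrock_record("REC00000001", "I need to change my flight.", "Classify: {text}")])` yields a single line that can be written to the internal S3 bucket as part of a batch input file.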

BatchClassifierStack handles the classification operations. Although currently powered by Anthropic’s Claude Haiku, the system maintains flexibility by allowing straightforward switches to alternative models as needed. This adaptability is made possible through a comprehensive constants file that serves as the system’s control center. The following configurations are available:

- PREFIX – Resource naming convention (genai by default).

- BEDROCK_AGENT_MODEL – Model selection.

- BATCH_SIZE – Number of classifications per output file (enables parallel processing); the minimum should be 100.

- CLASSIFICATION_INPUT_FOLDER – Input folder name in the S3 bucket that will be used for uploading incoming classification requests.

- CLASSIFICATION_OUTPUT_FOLDER – Output folder name in the S3 bucket where the output files will be available after the classification completes.

- OUTPUT_FORMAT – Supported formats (CSV, JSON, XLSX).

- INPUT_MAPPING – A flexible data integration approach that adapts to your existing file structures rather than requiring you to adapt to ours. It consists of two key fields:

  - record_id – Optional unique identifier (auto-generated if not provided).

  - record_text – Text content for classification.

- PROMPT – Template for guiding the model’s classification behavior. A sample prompt template is available in the GitHub repo. Pay attention to the structure of the template that guides the AI model through its decision-making process. The template not only combines a set of possible categories, but also contains instructions, requiring the model to select a single category and present it within <class> tags. These instructions help maintain consistency in how the model processes incoming requests and saves the output.
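To make the PROMPT structure concrete, the following is a hypothetical template builder, not the sample prompt from the repository. It lists the allowed categories and asks for a single category inside `<class>` tags, as described above. The `<rationale>` and `<conversation>` tag names, the wording, and every category other than "Booking Inquiry" are assumptions made for illustration.

```python
def build_prompt(categories, conversation):
    """Compose a classification prompt that constrains the output format."""
    category_lines = "\n".join(f"- {name}" for name in categories)
    return (
        "Classify the following call center conversation into exactly one "
        "of these categories:\n"
        f"{category_lines}\n\n"
        "Explain your reasoning inside <rationale></rationale> tags, then return "
        "the chosen category inside <class></class> tags, copied exactly as "
        "written in the list above.\n\n"
        f"<conversation>\n{conversation}\n</conversation>"
    )


# Hypothetical category set; only "Booking Inquiry" appears in this post.
example_prompt = build_prompt(
    ["Booking Inquiry", "Complaint", "General Inquiry"],
    "Hi, I would like to reserve a room for two nights.",
)
```

Asking the model to copy the category verbatim from the list is one way to reduce label drift in the output.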

BatchResultsProcessingStack functions as the data postprocessing stage, transforming the Amazon Bedrock JSONL output into user-friendly formats. At the time of writing, the system supports CSV, JSON, and XLSX. These processed files are then stored in a designated output folder in the S3 bucket, organized by date for quick retrieval and management. The conversion scripts are available in the GitHub repo. The output files have the following schema:

- ID – Unique identifier of the classified record

- INPUT_TEXT – Initial text that was used for classification

- CLASS – The classification category

- RATIONALE – Reasoning or explanation of the given classification
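The postprocessing step can be sketched as follows. This is not the repository's conversion script. It assumes each output line carries `recordId`, `modelInput`, and `modelOutput`, that the Claude response text sits in `modelOutput.content`, and that the rationale is wrapped in a hypothetical `<rationale>` tag. It also uses the full prompt text as INPUT_TEXT, whereas the real solution may store only the original text.

```python
import csv
import io
import json
import re

COLUMNS = ["ID", "INPUT_TEXT", "CLASS", "RATIONALE"]


def extract_tag(text, tag):
    """Return the content of the first <tag>...</tag> pair, or "" if absent."""
    match = re.search(rf"<{tag}>(.*?)</{tag}>", text, re.DOTALL)
    return match.group(1).strip() if match else ""


def output_line_to_row(line):
    """Map one JSONL output line to the four-column output schema."""
    record = json.loads(line)
    input_text = record["modelInput"]["messages"][0]["content"][0]["text"]
    output_text = "".join(
        part.get("text", "")
        for part in record.get("modelOutput", {}).get("content", [])
    )
    return {
        "ID": record["recordId"],
        "INPUT_TEXT": input_text,  # full prompt text in this sketch
        "CLASS": extract_tag(output_text, "class"),
        "RATIONALE": extract_tag(output_text, "rationale"),
    }


def rows_to_csv(rows):
    """Render rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Records whose output lacks a `<class>` tag end up with an empty CLASS value, which makes them easy to filter for manual review.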

AnalyticsStack provides a business intelligence (BI) dashboard that displays a list of classifications and allows filtering by the categories defined in the prompt. It offers the following key configuration options:

- ATHENA_DATABASE_NAME – Defines the name of the Athena database that is used as the main data source for the QuickSight dashboard.

- QUICKSIGHT_DATA_SCHEMA – Defines how labels should be displayed on the dashboard and specifies which columns are filterable.

- QUICKSIGHT_PRINCIPAL_NAME – Designates the principal group that will have access to the QuickSight dashboard. The group should be created manually before deploying the stack.

- QUICKSIGHT_QUERY_MODE – You can choose between SPICE or direct query for fetching data, depending on your use case, data volume, and data freshness requirements. The default setting is direct query.

Now that you’ve successfully deployed the system, you can prepare your data file. This can be either real customer data or the synthetic dataset we provided for testing. When your file is ready, go to the S3 bucket named {prefix}-{account_id}-customer-requests-bucket-{region} and upload your file to the input_data folder. After the batch inference job is complete, you can view the classification results on the dashboard. You can find it under the name {prefix}-{account_id}-classifications-dashboard-{region}. The following screenshot shows a preview of what you can expect.

The dashboard will not display data until Amazon Bedrock finishes processing the batch inference jobs and the AWS Glue crawler creates the Athena table. Without these steps completed, the dashboard can’t connect to the table because it doesn’t exist yet. Additionally, you must update the QuickSight role permissions that were set up during pre-deployment. To update permissions, complete the following steps:

- On the QuickSight console, choose the user icon in the top navigation bar and choose Manage QuickSight.

- In the navigation pane, choose Security & Permissions.

- Verify that the role has been granted proper access to the S3 bucket with the following path format: {prefix}-{account_id}-internal-classifications-{region}.
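The upload step described earlier in this section can also be scripted. The sketch below assumes the bucket naming pattern and the input_data folder mentioned above. The helper names are hypothetical, and the account ID and Region in the usage note are placeholders.

```python
import os


def customer_bucket_name(prefix, account_id, region):
    """Derive the customer-facing bucket name from the documented pattern."""
    return f"{prefix}-{account_id}-customer-requests-bucket-{region}"


def upload_input_file(local_path, prefix, account_id, region, input_folder="input_data"):
    """Upload a local XLSX, CSV, or JSON file to the input folder.

    Requires boto3 and AWS credentials with write access to the bucket.
    """
    import boto3  # imported lazily so the name helper works without boto3

    bucket = customer_bucket_name(prefix, account_id, region)
    key = f"{input_folder}/{os.path.basename(local_path)}"
    boto3.client("s3", region_name=region).upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

For example, `upload_input_file("conversations.xlsx", "genai", "123456789012", "us-east-1")` would place the file under input_data in the customer requests bucket, which starts the event-driven pipeline.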

Results

To evaluate the solution’s performance and reliability, we ran 1,190 synthetically generated travel agency conversations from a single Excel file through the pipeline multiple times. The results were consistent across 10 consecutive runs, with processing times of 11–12 minutes per batch (200 classifications in a single batch). Our solution achieved the following:

- Speed – Maintained consistent processing times around 11–12 minutes

- Accuracy – Achieved 100% classification accuracy on our synthetic dataset

- Cost-effectiveness – Optimized expenses through efficient batch processing

Challenges

In certain cases, the generated class didn’t exactly match the class name given in the prompt. For instance, in multiple cases, the model output “Hotel/Flight Booking Inquiry” instead of “Booking Inquiry,” which was defined as the class in the prompt. We addressed this through prompt engineering, asking the model to verify that the final class output exactly matches one of the provided classes.
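In addition to the prompt-based fix described above, a post-processing guard can catch residual mismatches. The following sketch is not part of the described solution. It is a complementary check under the assumption that a near-miss label contains exactly one allowed class name as a substring. The function name and the "Complaint" category are hypothetical.

```python
def normalize_class(predicted, allowed):
    """Map a model-generated label to an allowed class, or None if ambiguous.

    Exact (case-insensitive) matches win. Otherwise the label is accepted only
    if exactly one allowed class name occurs inside it.
    """
    cleaned = predicted.strip().lower()
    by_lower = {name.lower(): name for name in allowed}
    if cleaned in by_lower:
        return by_lower[cleaned]
    contained = [name for name in allowed if name.lower() in cleaned]
    return contained[0] if len(contained) == 1 else None


allowed_classes = ["Booking Inquiry", "Complaint"]  # hypothetical category set
fixed = normalize_class("Hotel/Flight Booking Inquiry", allowed_classes)
```

Labels that return None can be routed to manual review instead of being written to the output files with an unknown category.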

Error handling

For troubleshooting purposes, the solution includes an Amazon DynamoDB table that tracks batch processing status, along with Amazon CloudWatch Logs. Error tracking is not automated and requires manual monitoring and validation.

Key takeaways

Although our testing focused on travel agency scenarios, the solution’s architecture is flexible and can be adapted to various classification needs across different industries and use cases.

Known limitations

The following are key limitations of the classification solution and should be considered when planning its use:

- Minimum batch size – Amazon Bedrock batch inference requires at least 100 classifications per batch.

- Processing time – The completion time of a batch inference job depends on various factors, such as job size. Although Amazon Bedrock strives to complete a typical job within 24 hours, this time frame is a best-effort estimate and not guaranteed.

- Input file formats – The solution currently supports only CSV, JSON, and XLSX file formats for input data.
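The minimum batch size matters when the record count is not a multiple of BATCH_SIZE. The sketch below shows one possible way to split records so that no batch falls under the minimum, by folding a short trailing batch into the previous one. This merging strategy is an assumption for illustration and is not necessarily how the repository handles remainders.

```python
def split_into_batches(records, batch_size=200, min_batch=100):
    """Split records into batches of batch_size, none smaller than min_batch."""
    if batch_size < min_batch:
        raise ValueError("batch_size must be at least min_batch")
    if len(records) < min_batch:
        raise ValueError("not enough records for a single batch")
    batches = [
        list(records[i:i + batch_size])
        for i in range(0, len(records), batch_size)
    ]
    # Fold a trailing batch that is too small into the previous batch.
    if len(batches) > 1 and len(batches[-1]) < min_batch:
        tail = batches.pop()
        batches[-1].extend(tail)
    return batches


# 1,190 records with a batch size of 200: five full batches plus one of 190.
sizes = [len(b) for b in split_into_batches(list(range(1190)))]
```

With the 1,190 conversations used in testing, the remainder of 190 already exceeds the minimum, so no merging is needed. With 1,050 records, the final 50 would be merged into a last batch of 250.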

Clean up

To avoid additional charges, clean up your AWS resources when they’re no longer needed by running the command cdk destroy --all --profile {your_profile_name}, replacing {your_profile_name} with your AWS profile name.

To remove resources associated with this project, complete the following steps:

- Delete the S3 buckets:

  - On the Amazon S3 console, choose Buckets in the navigation pane.

  - Locate your buckets by searching for your {prefix}.

  - Delete these buckets to facilitate proper cleanup.

- Clean up the DynamoDB resources:

  - On the DynamoDB console, choose Tables in the navigation pane.

  - Delete the table {prefix}-{account_id}-batch-processing-status-{region}.

This comprehensive cleanup helps make sure residual resources don’t remain in your AWS account from this project.

Conclusion

In this post, we explored how Amazon Bedrock batch inference can transform your large-scale text classification workflows. You can now automate time-consuming tasks your teams handle daily, such as analyzing lost sales opportunities, categorizing travel requests, and processing insurance claims. This solution frees your teams to focus on growing and improving your business.

Furthermore, you can extend this solution into a system that provides real-time classifications, integrates with your communication channels, offers enhanced monitoring capabilities, and supports multiple languages for global operations.

This solution was developed for internal use in test and non-production environments only. It is the responsibility of the customer to perform their due diligence to verify the solution aligns with their compliance obligations.

We’re excited to see how you will adapt this solution to your unique challenges. Share your experience or questions in the comments—we’re here to help you get started on your automation journey.

About the authors

Nika Mishurina is a Senior Solutions Architect with Amazon Web Services. She is passionate about delighting customers through building end-to-end production-ready solutions for Amazon. Outside of work, she loves traveling, working out, and exploring new things.

Farshad Harirchi is a Principal Data Scientist at AWS Professional Services. He helps customers across industries, from retail to industrial and financial services, with the design and development of generative AI and machine learning solutions. Farshad brings extensive experience in the entire machine learning and MLOps stack. Outside of work, he enjoys traveling, playing outdoor sports, and exploring board games.
