The Challenge of Multimodal Reasoning

Recent breakthroughs in text-only language models, such as DeepSeek-R1, have shown that reinforcement learning (RL) can help models develop strong reasoning skills. Motivated by this, researchers have tried applying the same RL techniques to multimodal large language models (MLLMs) to improve their ability to reason across visual and textual inputs. These attempts have had only partial success, and MLLMs still struggle with complex reasoning tasks. This suggests that reusing RL recipes from text-only models is not enough in multimodal settings, where the interaction between image and text inputs introduces new challenges that call for more tailored approaches.

Evolution of Multimodal Language Models

Recent research on MLLMs builds on the progress of LLMs by combining visual inputs with language understanding. Early models such as CLIP and MiniGPT-4 laid the groundwork, followed by instruction-tuned models such as LLaVA. Closed-source models demonstrate strong reasoning through long chain-of-thought (CoT) outputs, whereas open-source models have mainly relied on fine-tuning and CoT adaptations. These approaches, however, tend to produce short answers that leave little room for in-depth reasoning. RL techniques, including reinforcement learning from human feedback (RLHF) and Group Relative Policy Optimization (GRPO), have shown promise for strengthening reasoning in LLMs. Inspired by this, recent work applies RL to MLLMs to improve visual reasoning and support richer, longer outputs.

Introduction of ReVisual-R1

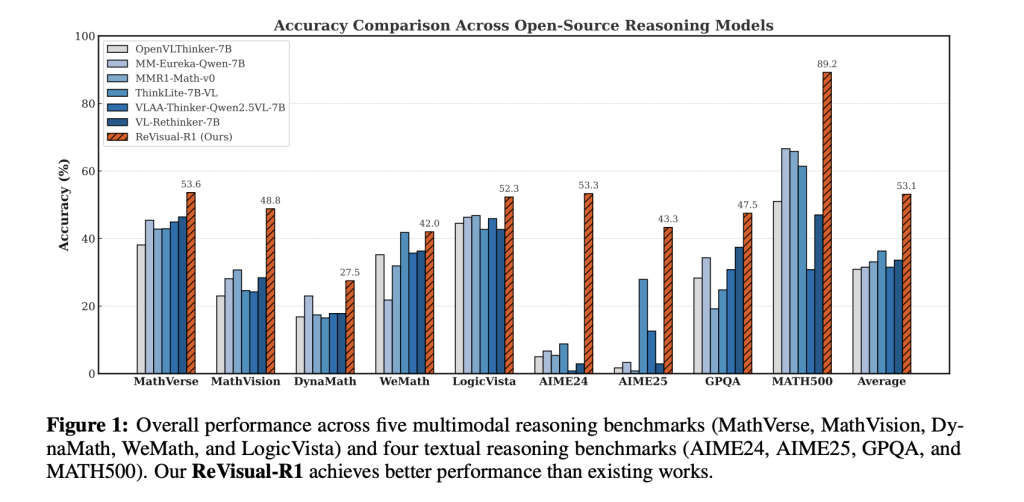

Researchers from Tsinghua University, Shanghai Jiao Tong University, and the Shanghai Artificial Intelligence Laboratory have introduced ReVisual-R1, a 7B-parameter open-source MLLM that sets a new standard for multimodal reasoning among models of its size. Their study reports three key findings: (1) a carefully curated text-only cold-start stage provides a strong initialization, outperforming many existing MLLMs even before any RL; (2) the widely used GRPO algorithm suffers from gradient stagnation (when every response sampled for a prompt receives the same reward, GRPO's group-relative advantages are all zero and the update carries no learning signal), which they address with a new method called Prioritized Advantage Distillation (PAD); and (3) adding a final text-only RL phase after multimodal RL further strengthens reasoning. Their three-stage approach (text-only cold start, multimodal RL, and final text-only RL) strikes an effective balance between visual grounding and deep reasoning.
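To make the gradient-stagnation problem concrete, here is a minimal Python sketch of GRPO-style group-relative advantages together with a simplified PAD-style filter. The standardization step follows the usual GRPO formulation. The PAD part is an assumption for illustration (drop near-zero advantages, then resample the remaining responses with replacement, with probability proportional to exp(|A|/tau)), and the names `pad_select`, `min_abs_adv`, and `tau` are hypothetical, not taken from the paper or its code.

```python
import math
import random

def group_advantages(rewards, eps=1e-6):
    """GRPO-style advantages: standardize each reward within its own group."""
    n = len(rewards)
    mean = sum(rewards) / n
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / n)
    return [(r - mean) / (std + eps) for r in rewards]

def pad_select(samples, k, min_abs_adv=1e-3, tau=1.0, seed=0):
    """Simplified, hypothetical PAD-style selection (not the paper's exact rule).

    samples: (response_id, advantage) pairs pooled from many groups.
    Near-zero advantages carry no gradient signal, so they are dropped;
    k of the remaining samples are then drawn with replacement, with
    probability proportional to exp(|A| / tau), i.e. a softmax over |A|.
    """
    kept = [(rid, a) for rid, a in samples if abs(a) > min_abs_adv]
    if not kept:
        return []
    weights = [math.exp(abs(a) / tau) for _, a in kept]
    return random.Random(seed).choices(kept, weights=weights, k=k)

# Group 1: every sampled answer is correct, so every advantage is 0 (stalled).
stalled = group_advantages([1.0, 1.0, 1.0, 1.0])
# Group 2: mixed outcomes give nonzero advantages of opposite signs.
mixed = group_advantages([1.0, 0.0, 1.0, 0.0])

pool = [(f"g1_r{i}", a) for i, a in enumerate(stalled)] + \
       [(f"g2_r{i}", a) for i, a in enumerate(mixed)]
batch = pad_select(pool, k=4)
```

Because the stalled group contributes only zero advantages, none of its responses survive the filter, so every selected training example comes from the group that actually carries gradient signal.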

Developing the GRAMMAR Dataset

The researchers built the GRAMMAR dataset after observing that existing multimodal cold-start datasets lack the depth needed to train strong reasoning models. Text-only datasets such as DeepMath produced larger gains than these multimodal datasets on both text and multimodal tasks, suggesting that complex textual problems are more effective at eliciting reasoning. GRAMMAR therefore combines diverse textual and multimodal samples selected through a multi-stage curation process. This data feeds the Staged Reinforcement Optimization (SRO) framework, which first trains the model with multimodal RL, using Prioritized Advantage Distillation to prevent stalled learning and an efficient-length reward to curb verbosity, and then applies a text-only RL phase to further strengthen reasoning and language fluency.
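The article does not give the formula for the efficient-length reward. As an illustration only, the Python sketch below assumes a linear length term, clipped to [0, 1], that is added to a binary accuracy reward with a small weight. The functions `efficient_length_reward` and `total_reward` and the parameters `budget`, `alpha`, `delta`, and `length_weight` are hypothetical, not values or names from the paper.

```python
def efficient_length_reward(num_tokens, budget=4096, alpha=1e-4, delta=0.5):
    """Hypothetical length-shaping term (assumed form, not the paper's).

    Linear in (budget - num_tokens), shifted by delta and clipped to [0, 1]:
    shorter responses score higher, very long ones score 0.
    """
    return min(1.0, max(0.0, alpha * (budget - num_tokens) + delta))

def total_reward(is_correct, num_tokens, length_weight=0.1, **length_kwargs):
    """Binary accuracy reward plus a small, hypothetical length bonus."""
    accuracy = 1.0 if is_correct else 0.0
    return accuracy + length_weight * efficient_length_reward(num_tokens, **length_kwargs)

# Two correct answers of different lengths: the concise one scores higher.
concise = total_reward(True, num_tokens=1500)
verbose = total_reward(True, num_tokens=12000)
```

Keeping `length_weight` small means correctness still dominates: a correct but long answer scores higher than a wrong but short one, while among correct answers the shorter one is preferred.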

Three-Stage Training Pipeline

ReVisual-R1 was trained in three stages: a cold start on pure text data to build a strong language foundation, multimodal reinforcement learning to develop visual-text reasoning, and a final text-only RL phase to refine reasoning and fluency. Evaluated across a range of benchmarks, the model outperformed open-source models and some commercial models on multimodal and math reasoning tasks, achieving the top result on 9 out of 10 benchmarks. Ablation studies confirmed the importance of the training order and of Prioritized Advantage Distillation, which focuses learning on high-quality responses and produced a significant improvement in overall performance.
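The stage ordering that the ablations emphasize can be sketched as a sequential curriculum in which each stage starts from the checkpoint produced by the previous one. This is a hypothetical Python outline: `Stage`, `PIPELINE`, `run_pipeline`, and the stand-in trainer `record_lineage` are illustrative names, and real training code would replace the stand-in.

```python
from dataclasses import dataclass

@dataclass
class Stage:
    name: str       # hypothetical stage label
    modality: str   # "text" or "multimodal"
    objective: str  # short description of what the stage optimizes

# Hypothetical stage list mirroring the order described in the article.
PIPELINE = [
    Stage("cold_start", "text", "text-only cold start"),
    Stage("multimodal_rl", "multimodal", "RL with PAD and efficient-length reward"),
    Stage("text_rl", "text", "text-only RL refinement"),
]

def run_pipeline(init_checkpoint, stages, train_fn):
    """Run stages in order; each stage is initialized from the previous output."""
    checkpoint = init_checkpoint
    order = []
    for stage in stages:
        checkpoint = train_fn(checkpoint, stage)
        order.append(stage.name)
    return checkpoint, order

def record_lineage(checkpoint, stage):
    """Stand-in trainer: records which stages produced the checkpoint."""
    return f"{checkpoint}->{stage.name}"

final, order = run_pipeline("base_7b", PIPELINE, record_lineage)
```

Reordering `PIPELINE` changes which checkpoint each stage inherits, which is exactly the variable an ablation on training order manipulates.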

Summary and Contributions

In conclusion, ReVisual-R1 is a 7B open-source MLLM built to tackle the challenges of complex multimodal reasoning. Rather than relying on scale alone, it uses a carefully designed three-stage training process: a cold start on high-quality text data to build foundational reasoning, a multimodal RL phase stabilized by the new PAD technique, and a final text-only RL refinement. This curriculum substantially boosts performance, and ReVisual-R1 sets a new state of the art among 7B models on challenging benchmarks such as MathVerse and AIME. The work shows how structured training can unlock deeper reasoning in MLLMs.


The post ReVisual-R1: An Open-Source 7B Multimodal Large Language Model (MLLMs) that Achieves Long, Accurate and Thoughtful Reasoning appeared first on MarkTechPost.
