This post is co-written with Vicky Andonova and Jonathan Karon from Anomalo.

Generative AI has rapidly evolved from a novelty to a powerful driver of innovation. From summarizing complex legal documents to powering advanced chat-based assistants, AI capabilities are expanding at an increasing pace. While large language models (LLMs) continue to push new boundaries, quality data remains the deciding factor in achieving real-world impact.

A year ago, it seemed that the primary differentiator in generative AI applications would be who could afford to build or use the biggest model. But with recent breakthroughs in base model training costs (such as DeepSeek-R1) and continual price-performance improvements, powerful models are becoming a commodity. Success in generative AI is becoming less about building the right model and more about finding the right use case. As a result, the competitive edge is shifting toward data access and data quality.

In this environment, enterprises are poised to excel. They have a hidden goldmine of decades of unstructured text: everything from call transcripts and scanned reports to support tickets and social media logs. The challenge is how to use that data. Transforming unstructured files, maintaining compliance, and mitigating data quality issues all become critical hurdles when an organization moves from AI pilots to production deployments.

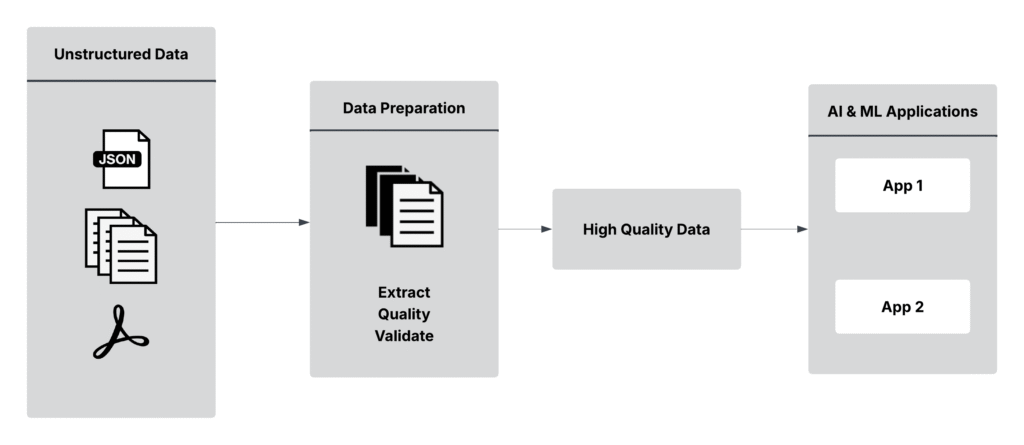

In this post, we explore how you can use Anomalo with Amazon Web Services (AWS) AI and machine learning (AI/ML) services to profile, validate, and cleanse unstructured data collections, transforming your data lake into a trusted source for production-ready AI initiatives, as shown in the following figure.

The challenge: Analyzing unstructured enterprise documents at scale

Despite the widespread adoption of AI, many enterprise AI projects fail due to poor data quality and inadequate controls. Gartner predicts that at least 30% of generative AI projects will be abandoned after proof of concept by the end of 2025. Even the most data-driven organizations have focused primarily on using structured data, leaving unstructured content underutilized and unmonitored in data lakes or file systems. Yet over 80% of enterprise data is unstructured (according to MIT Sloan School research), spanning everything from legal contracts and financial filings to social media posts.

For chief information officers (CIOs), chief technology officers (CTOs), and chief information security officers (CISOs), unstructured data represents both risk and opportunity. Before you can use unstructured content in generative AI applications, you must address the following critical hurdles:

- Extraction – Optical character recognition (OCR), parsing, and metadata generation can be unreliable if they aren't automated and validated, and inconsistent or incomplete extraction produces malformed data.

- Compliance and security – Handling personally identifiable information (PII) or proprietary intellectual property (IP) demands rigorous governance, especially with the EU AI Act, Colorado AI Act, General Data Protection Regulation (GDPR), California Consumer Privacy Act (CCPA), and similar regulations. Sensitive information can be difficult to identify in unstructured text, leading to inadvertent mishandling of that information.

- Data quality – Incomplete, deprecated, duplicative, off-topic, or poorly written data can pollute your generative AI models and Retrieval Augmented Generation (RAG) context, yielding hallucinated, out-of-date, inappropriate, or misleading outputs. Making sure that your data is high-quality helps mitigate these risks.

- Scalability and cost – Training or fine-tuning models on noisy data increases compute costs by unnecessarily growing the training dataset (training compute costs tend to grow linearly with dataset size), and processing and storing low-quality data in a vector database for RAG wastes processing and storage capacity.
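
Several of these hurdles can be partly caught with automated checks. As a minimal sketch, assuming the extracted text is already available as a dictionary of document ID to string, the following Python flags empty or likely truncated extractions, exact duplicates, and two simple PII patterns. The function name, thresholds, and pattern set are hypothetical illustrations, not Anomalo's implementation. Note that regular expressions catch only well-formatted identifiers, which is exactly why sensitive information in free text is hard to find reliably.

```python
import hashlib
import re
from dataclasses import dataclass, field

# Illustrative patterns only. Real PII detection needs much broader coverage
# (names, street addresses, account numbers in many formats).
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical thresholds; tune them for your own corpus.
MIN_CHARS = 200
SENTENCE_END = (".", "!", "?", '"', ")")


@dataclass
class DocReport:
    doc_id: str
    issues: list = field(default_factory=list)


def check_documents(docs: dict) -> list:
    """Flag extraction, quality, and compliance issues in extracted text.

    `docs` maps a document ID to the text extracted from that document.
    """
    first_seen = {}  # normalized-text hash -> first document ID with that text
    reports = []
    for doc_id, text in docs.items():
        report = DocReport(doc_id)
        normalized = " ".join(text.split())  # collapse all whitespace runs
        if not normalized:
            report.issues.append("empty")
            reports.append(report)
            continue
        if len(normalized) < MIN_CHARS:
            report.issues.append("too_short")
        # Text that stops mid-sentence often signals failed OCR or parsing.
        if not normalized.endswith(SENTENCE_END):
            report.issues.append("possibly_truncated")
        # Exact duplicates after whitespace normalization.
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in first_seen:
            report.issues.append(f"duplicate_of:{first_seen[digest]}")
        else:
            first_seen[digest] = doc_id
        for name, pattern in PII_PATTERNS.items():
            if pattern.search(normalized):
                report.issues.append(f"pii:{name}")
        reports.append(report)
    return reports
```

Each report lists issue codes such as `possibly_truncated` or `pii:email`, which a pipeline could use to quarantine a document for review instead of passing it on to fine-tuning or a vector database. Near-duplicates (for example, the same contract with a different date) would need fuzzier techniques such as MinHash.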

In short, generative AI initiatives often falter not because the underlying model is insufficient, but because the existing data pipeline isn't designed to process unstructured data while still meeting high-volume, high-quality ingestion and compliance requirements. Many companies are in the early stages of addressing these hurdles and face these problems in their existing processes:

- Manual and time-consuming – The analysis of vast collections of unstructured documents relies on manual review by employees, creating time-consuming processes that delay projects.

- Error-prone – Human review is susceptible to mistakes and inconsistencies, leading to inadvertent exclusion of critical data and inclusion of incorrect data.

- Resource-intensive – The manual document review process requires significant staff time that could be better spent on higher-value business activities. Budgets can’t support the level of staffing needed to vet enterprise document collections.

Although existing document analysis processes provide valuable insights, they aren’t efficient or accurate enough to meet modern business needs for timely decision-making. Organizations need a solution that can process large volumes of unstructured data and help maintain compliance with regulations while protecting sensitive information.

The solution: An enterprise-grade approach to unstructured data quality

Anomalo is built on a highly secure, scalable AWS stack that you can use to detect, isolate, and address data quality problems in unstructured data in minutes instead of weeks. This helps your data teams deliver high-value AI applications faster and with less risk. The architecture of Anomalo's solution is shown in the following figure.

- Automated ingestion and metadata extraction – Anomalo automates OCR and text parsing for PDF files, PowerPoint presentations, and Word documents stored in Amazon Simple Storage Service (Amazon S3) using auto scaling Amazon Elastic Compute Cloud (Amazon EC2) instances, Amazon Elastic Kubernetes Service (Amazon EKS), and Amazon Elastic Container Registry (Amazon ECR).

- Continuous data observability – Anomalo inspects each batch of extracted data, detecting anomalies such as truncated text, empty fields, and duplicates before the data reaches your models. In the process, it monitors the health of your unstructured pipeline, flagging surges in faulty documents or unusual data drift (for example, new file formats, an unexpected number of additions or deletions, or changes in document size). Because Anomalo reviews and reports on these findings, your engineers can spend less time manually combing through logs and more time optimizing AI features, while CISOs gain visibility into data-related risks.

- Governance and compliance – Built-in issue detection and policy enforcement help mask or remove PII and abusive language. If a batch of scanned documents includes personal addresses or proprietary designs, it can be flagged for legal or security review, minimizing regulatory and reputational risk. You can use Anomalo to define custom issues and metadata to be extracted from documents to solve a broad range of governance and business needs.

- Scalable AI on AWS – Anomalo uses Amazon Bedrock to give enterprises a choice of flexible, scalable LLMs for analyzing document quality. Anomalo’s modern architecture can be deployed as software as a service (SaaS) or through an Amazon Virtual Private Cloud (Amazon VPC) connection to meet your security and operational needs.

- Trustworthy data for AI business applications – The validated data layer provided by Anomalo and AWS Glue helps make sure that only clean, approved content flows into your application.

- Supports your generative AI architecture – Whether you fine-tune or continue pre-training an LLM to create a subject matter expert, store content in a vector database for RAG, or experiment with other generative AI architectures, making sure that your data is clean and validated improves application output, preserves brand trust, and mitigates business risks.
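
This post doesn't describe the prompts or models Anomalo uses with Amazon Bedrock. As a rough illustration of how an LLM-based document quality check can call Bedrock, the following Python sketch uses the Bedrock Runtime Converse API. The prompt wording, the JSON verdict format, the character limit, and the `assess_document` function are all hypothetical. The client is passed in as a parameter so the logic can be exercised without AWS credentials.

```python
import json

# Hypothetical review rubric; a production prompt would be far more detailed.
SYSTEM_PROMPT = (
    "You review documents before they are used by a generative AI application. "
    "Check for off-topic, outdated, incomplete, or poorly written content. "
    'Reply with JSON only: {"quality": "good" or "poor", "issues": [short strings]}.'
)


def assess_document(client, model_id: str, text: str, max_chars: int = 20_000) -> dict:
    """Ask a model on Amazon Bedrock to rate the quality of one document.

    `client` is a Bedrock Runtime client, such as boto3.client("bedrock-runtime").
    """
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        # Cap the input size (hypothetical limit) to bound cost and context length.
        messages=[{"role": "user", "content": [{"text": text[:max_chars]}]}],
        inferenceConfig={"maxTokens": 300, "temperature": 0},
    )
    reply = response["output"]["message"]["content"][0]["text"]
    try:
        verdict = json.loads(reply)
    except json.JSONDecodeError:
        verdict = None
    if not isinstance(verdict, dict) or "quality" not in verdict:
        # Unparseable or malformed output: route the document to human review.
        return {"quality": "unknown", "issues": ["unparseable_model_output"]}
    return verdict
```

In practice you would pass `boto3.client("bedrock-runtime")` and the ID of a model enabled in your account. Setting the temperature to 0 tends to make verdicts more repeatable across runs, and treating malformed replies as "unknown" keeps a flaky model response from silently approving a document.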

Impact

Using Anomalo and AWS AI/ML services for unstructured data provides these benefits:

- Reduced operational burden – Anomalo’s off-the-shelf rules and evaluation engine save months of development time and ongoing maintenance, freeing time for designing new features instead of developing data quality rules.

- Optimized costs – Training LLMs and ML models on low-quality data wastes precious GPU capacity, while vectorizing and storing that data for RAG increases overall operational costs, and both degrade application performance. Early data filtering cuts these hidden expenses.

- Faster time to insights – Anomalo automatically classifies and labels unstructured text, giving data scientists rich data to spin up new generative prototypes or dashboards without time-consuming labeling prework.

- Strengthened compliance and security – Identifying PII and adhering to data retention rules is built into the pipeline, supporting security policies and reducing the preparation needed for external audits.

- Durable value – The generative AI landscape continues to evolve rapidly. Although LLM and application architecture investments may depreciate quickly, trustworthy and curated data is a sure bet that won't be wasted.
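
Because training compute grows roughly linearly with dataset size, the savings from early filtering are easy to estimate. The following back-of-the-envelope Python sketch uses the widely cited rule of thumb that training a dense transformer takes about 6 × N × D floating-point operations (N parameters, D training tokens), plus the raw float32 size of embeddings in a vector store. Every number in it is hypothetical; real costs also depend on hardware utilization, index overhead, and pricing.

```python
def training_flops(params: int, tokens: int) -> int:
    """Rule-of-thumb training compute for a dense transformer: C ≈ 6 * N * D."""
    return 6 * params * tokens


def vector_store_bytes(num_chunks: int, dims: int, bytes_per_value: int = 4) -> int:
    """Raw float32 embedding storage, ignoring index and metadata overhead."""
    return num_chunks * dims * bytes_per_value


# Hypothetical corpus where 20% of tokens and chunks fail quality checks.
PARAMS = 7_000_000_000
TOKENS = 10_000_000_000
DROPPED_TOKENS = 2_000_000_000
CHUNKS = 5_000_000
DROPPED_CHUNKS = 1_000_000
DIMS = 1024

flops_saved = training_flops(PARAMS, TOKENS) - training_flops(PARAMS, TOKENS - DROPPED_TOKENS)
bytes_saved = vector_store_bytes(CHUNKS, DIMS) - vector_store_bytes(CHUNKS - DROPPED_CHUNKS, DIMS)

print(f"Training compute saved: {flops_saved:.2e} FLOPs")
print(f"Raw vector storage saved: {bytes_saved / 1e9:.1f} GB")
```

With these made-up inputs, filtering saves about 8.4 × 10^19 FLOPs of training compute and about 4.1 GB of raw embedding storage. Because both quantities are linear in the amount of data kept, dropping 20% of the data removes 20% of each cost.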

Conclusion

Generative AI has the potential to deliver massive value: Gartner estimates a 15–20% revenue increase, 15% cost savings, and a 22% productivity improvement. To achieve these results, your applications must be built on a foundation of trusted, complete, and timely data. By delivering a user-friendly, enterprise-scale solution for structured and unstructured data quality monitoring, Anomalo helps you deliver more AI projects to production faster while meeting both your user and governance requirements.

Interested in learning more? Check out Anomalo’s unstructured data quality solution and request a demo or contact us for an in-depth discussion on how to begin or scale your generative AI journey.

About the authors

Vicky Andonova is the GM of Generative AI at Anomalo, the company reinventing enterprise data quality. As a founding team member, Vicky has spent the past six years pioneering Anomalo's machine learning initiatives, transforming advanced AI models into actionable insights that empower enterprises to trust their data. Currently, she leads a team that not only brings innovative generative AI products to market but is also building a first-in-class data quality monitoring solution specifically designed for unstructured data. Previously, at Instacart, Vicky built the company's experimentation platform and led company-wide initiatives to improve grocery delivery quality. She holds a BE from Columbia University.

Jonathan Karon leads Partner Innovation at Anomalo. He works closely with companies across the data ecosystem to integrate data quality monitoring in key tools and workflows, helping enterprises achieve high-functioning data practices and leverage novel technologies faster. Prior to Anomalo, Jonathan created Mobile App Observability, Data Intelligence, and DevSecOps products at New Relic, and was Head of Product at a generative AI sales and customer success startup. He holds a BA in Cognitive Science from Hampshire College and has worked with AI and data exploration technology throughout his career.

Mahesh Biradar is a Senior Solutions Architect at AWS with a history in the IT and services industry. He helps SMBs in the US meet their business goals with cloud technology. He holds a Bachelor of Engineering from VJTI and is based in New York City.

Emad Tawfik is a seasoned Senior Solutions Architect at Amazon Web Services, boasting more than a decade of experience. His specialization lies in the realm of Storage and Cloud solutions, where he excels in crafting cost-effective and scalable architectures for customers.