This post is based on a technical report written by Kazuki Fujii, who led the Llama 3.3 Swallow model development.

The Institute of Science Tokyo has successfully trained Llama 3.3 Swallow, a 70-billion-parameter large language model (LLM) with enhanced Japanese capabilities, using Amazon SageMaker HyperPod. The model demonstrates superior performance in Japanese language tasks, outperforming GPT-4o-mini and other leading models. This technical report details the training infrastructure, optimizations, and best practices developed during the project.

This post is organized as follows:

- Overview of Llama 3.3 Swallow

- Architecture for Llama 3.3 Swallow training

- Software stack and optimizations employed in Llama 3.3 Swallow training

- Experiment management

We discuss topics relevant to machine learning (ML) researchers and engineers with experience in distributed LLM training and familiarity with cloud infrastructure and AWS services. We welcome readers who understand model parallelism and optimization techniques, especially those interested in continuous pre-training and supervised fine-tuning approaches.

Overview of Llama 3.3 Swallow

Llama 3.3 Swallow is a 70-billion-parameter LLM that builds upon Meta’s Llama 3.3 architecture with specialized enhancements for Japanese language processing. The model was developed through a collaboration between the Okazaki Laboratory and Yokota Laboratory at the School of Computing, Institute of Science Tokyo, and the National Institute of Advanced Industrial Science and Technology (AIST).

The model is available in two variants on Hugging Face:

- Llama 3.3 Swallow 70B Base v0.4 – The pretrained base model, which serves as the foundation for Japanese language understanding

- Llama 3.3 Swallow 70B Instruct v0.4 – The instruction-tuned model, optimized for dialogue and task completion

Both variants are accessible through the tokyotech-llm organization on Hugging Face, providing researchers and developers with flexible options for different application needs.

Training methodology

The base model was developed through continual pre-training from Meta Llama 3.3 70B Instruct, maintaining the original vocabulary without expansion. The training data primarily consisted of the Swallow Corpus Version 2, a carefully curated Japanese web corpus derived from Common Crawl. To secure high-quality training data, the team employed the Swallow Education Classifier to extract educationally valuable content from the corpus. For compute, the team used 32 ml.p5.48xlarge Amazon Elastic Compute Cloud (Amazon EC2) instances (8 NVIDIA H100 80 GB GPUs each, 256 GPUs in total), and continual pre-training took 16 days and 6 hours. The following table summarizes the training data used for the base model, approximately 314 billion tokens in total.

| Training Data | Number of Training Tokens |
|---|---|
| Japanese Swallow Corpus v2 | 210 billion |
| Japanese Wikipedia | 5.3 billion |
| English Wikipedia | 6.9 billion |
| English Cosmopedia | 19.5 billion |
| English DCLM baseline | 12.8 billion |
| Laboro ParaCorpus | 1.4 billion |
| Code Swallow-Code | 50.2 billion |
| Math Finemath-4+ | 7.85 billion |
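As a quick sanity check on the table, the per-dataset counts can be summed and turned into mixture shares. The following is a minimal sketch; the dictionary copies the figures from the table, and the helper names `total_tokens_b` and `shares` are illustrative, not part of the team's tooling.

```python
# Token counts in billions, copied from the training data table.
TOKENS_B = {
    "Japanese Swallow Corpus v2": 210.0,
    "Japanese Wikipedia": 5.3,
    "English Wikipedia": 6.9,
    "English Cosmopedia": 19.5,
    "English DCLM baseline": 12.8,
    "Laboro ParaCorpus": 1.4,
    "Code Swallow-Code": 50.2,
    "Math Finemath-4+": 7.85,
}


def total_tokens_b(mix):
    """Return the total number of tokens in billions."""
    return sum(mix.values())


def shares(mix):
    """Return each dataset's fraction of the total token count."""
    total = total_tokens_b(mix)
    return {name: count / total for name, count in mix.items()}


if __name__ == "__main__":
    print(f"total: {total_tokens_b(TOKENS_B):.2f}B tokens")
    # List datasets from largest to smallest share.
    for name, share in sorted(shares(TOKENS_B).items(), key=lambda kv: -kv[1]):
        print(f"{name:30s} {share:6.1%}")
```

The rows add up to 313.95 billion tokens, which matches the approximately 314 billion stated in the text.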

For the instruction-tuned variant, the team focused exclusively on Japanese dialogue and code generation tasks. This version was created through supervised fine-tuning of the base model, using the same Japanese dialogue data that proved successful in the previous Llama 3.1 Swallow v0.3 release. Notably, the team made a deliberate choice to exclude English dialogue data from the fine-tuning process to maintain focus on Japanese language capabilities. The following table summarizes the instruction-tuning data used for the instruction-tuned model.

| Training Data | Number of Training Samples |
|---|---|
| Gemma-2-LMSYS-Chat-1M-Synth | 240,000 |
| Swallow-Magpie-Ultra-v0.1 | 42,000 |
| Swallow-Gemma-Magpie-v0.1 | 99,000 |
| Swallow-Code-v0.3-Instruct-style | 380,000 |

Performance and benchmarks

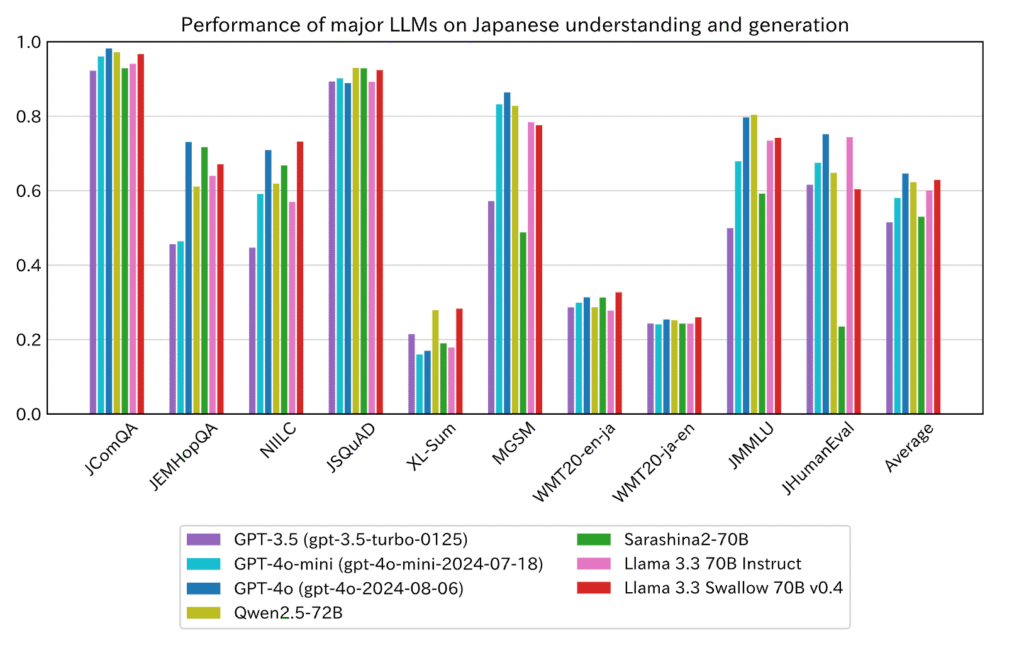

The base model has demonstrated remarkable performance in Japanese language tasks, consistently outperforming several industry-leading models. In comprehensive evaluations, it has shown superior capabilities compared to OpenAI’s GPT-4o (gpt-4o-2024-08-06), GPT-4o-mini (gpt-4o-mini-2024-07-18), GPT-3.5 (gpt-3.5-turbo-0125), and Qwen2.5-72B. These benchmarks reflect the model’s enhanced ability to understand and generate Japanese text. The following graph illustrates the base model performance comparison across these different benchmarks (original image).

The instruction-tuned model has shown particularly strong performance on the Japanese MT-Bench, as evaluated by GPT-4o-2024-08-06, demonstrating its effectiveness in practical applications. The following graph presents the performance metrics (original image).

Licensing and usage

The model weights are publicly available on Hugging Face and can be used for both research and commercial purposes. Users must comply with both the Meta Llama 3.3 license and the Gemma Terms of Use. This open availability aims to foster innovation and advancement in Japanese language AI applications while enforcing responsible usage through appropriate licensing requirements.

Training infrastructure architecture

The training infrastructure for Llama 3.3 Swallow was built on SageMaker HyperPod, with a focus on high performance, scalability, and observability. The architecture combines compute, network, storage, and monitoring components to enable efficient large-scale model training. The base infrastructure stack is available as an AWS CloudFormation template for seamless deployment and replication. This template provisions a comprehensive foundation by creating a dedicated virtual private cloud (VPC). The networking layer is complemented by a high-performance Amazon FSx for Lustre file system, alongside an Amazon Simple Storage Service (Amazon S3) bucket configured to store lifecycle scripts, which are used to configure the SageMaker HyperPod cluster.

Before deploying this infrastructure, it’s essential to make sure the AWS account has the appropriate service quotas. The deployment of SageMaker HyperPod requires specific quota values that often exceed default limits. You should check your current quota against the requirements detailed in SageMaker HyperPod quotas and submit a quota increase request as needed.

The following diagram illustrates the high-level architecture of the training infrastructure.

Compute and network configuration

The compute infrastructure is based on SageMaker HyperPod using a cluster of 32 EC2 P5 instances, each equipped with 8 NVIDIA H100 GPUs. The deployment uses a single spine configuration to provide minimal latency between instances. All communication between GPUs is handled through NCCL over an Elastic Fabric Adapter (EFA), providing high-throughput, low-latency networking essential for distributed training. The SageMaker HyperPod Slurm configuration manages the deployment and orchestration of these resources effectively.

Storage architecture

The project implements a hierarchical storage approach that balances performance and cost-effectiveness. At the foundation is Amazon S3, providing long-term storage for training data and checkpoints. To prevent storage bottlenecks during training, the team deployed FSx for Lustre as a high-performance parallel file system. This configuration enables efficient data access patterns across all training nodes, crucial for handling the massive datasets required for the 70-billion-parameter model.

The following diagram illustrates the storage hierarchy implementation.

The integration between Amazon S3 and FSx for Lustre is managed through a data repository association, configured using the following AWS Command Line Interface (AWS CLI) command:
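The team's exact command is not reproduced here. As a rough illustration of what a data repository association involves, the following Python sketch builds the equivalent request for the boto3 `create_data_repository_association` call on the FSx client. The file system ID, bucket name, prefix, and the choice of import and export events are all placeholder assumptions, not the project's actual settings.

```python
def build_dra_request(file_system_id, bucket, prefix="", mount_path="/"):
    """Build keyword arguments for FSx create_data_repository_association.

    All argument values are supplied by the caller; nothing here reflects
    the configuration used for Llama 3.3 Swallow.
    """
    events = ["NEW", "CHANGED", "DELETED"]
    return {
        "FileSystemId": file_system_id,
        # Directory on the Lustre file system that mirrors the S3 prefix.
        "FileSystemPath": mount_path,
        "DataRepositoryPath": f"s3://{bucket}/{prefix}",
        # Load metadata for existing objects when the association is created.
        "BatchImportMetaDataOnCreate": True,
        "S3": {
            # Assumed policy: keep both sides in sync in both directions.
            "AutoImportPolicy": {"Events": events},
            "AutoExportPolicy": {"Events": events},
        },
    }


def create_dra(request):
    """Send the request. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the builder works without AWS access

    return boto3.client("fsx").create_data_repository_association(**request)
```

Separating the request builder from the API call keeps the configuration easy to review and test before anything is created in the account.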

Observability stack

The monitoring infrastructure combines Amazon Managed Service for Prometheus and Amazon Managed Grafana to provide comprehensive observability. The team integrated DCGM Exporter for GPU metrics and EFA Exporter for network metrics, enabling real-time monitoring of system health and performance. This setup allows for continuous tracking of GPU health, network performance, and training progress, with automated alerting for any anomalies through Grafana Dashboards. The following screenshot shows an example of a GPU health dashboard.

Software stack and training optimizations

The training environment is built on SageMaker HyperPod DLAMI, which provides a preconfigured Ubuntu base Amazon Machine Image (AMI) with essential components for distributed training. The software stack includes CUDA drivers and libraries (such as cuDNN and cuBLAS), NCCL for multi-GPU communication, and AWS-OFI-NCCL for EFA support. On top of this foundation, the team deployed Megatron-LM as the primary framework for model training. The following diagram illustrates the software stack architecture.

Distributed training implementation

The training implementation uses Megatron-LM’s advanced features for scaling LLM training. The framework provides sophisticated model parallelism capabilities, including both tensor and pipeline parallelism, along with efficient data parallelism that supports communication overlap. These features are essential for managing the computational demands of training a 70-billion-parameter model.

Advanced parallelism and communication

The team used Megatron-LM’s 4D parallelism strategy, which maximizes GPU utilization by optimizing communication patterns across four dimensions: data, tensor, pipeline, and sequence parallelism. Data parallelism splits the training batch across GPUs, tensor parallelism divides individual model layers, pipeline parallelism splits the model into stages across GPUs, and sequence parallelism partitions the sequence length dimension. Together, these techniques enable efficient training of massive models.
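To make the decomposition concrete, the sketch below maps a global GPU rank to its tensor, data, and pipeline coordinates. It assumes the ordering that Megatron-LM uses by default (tensor-parallel rank varies fastest, then data, then pipeline) and that sequence parallelism reuses the tensor-parallel group rather than adding a separate factor. The sizes in the usage note (tensor parallel 8, pipeline parallel 4) are hypothetical; the source does not state the configuration the team selected.

```python
from typing import NamedTuple


class Coords(NamedTuple):
    tp_rank: int
    dp_rank: int
    pp_rank: int


def data_parallel_size(world_size, tp, pp):
    """Data-parallel size implied by world size and the model-parallel sizes."""
    if world_size % (tp * pp) != 0:
        raise ValueError("world_size must be divisible by tp * pp")
    return world_size // (tp * pp)


def rank_to_coords(rank, world_size, tp, pp):
    """Map a global rank to (tp, dp, pp) coordinates, TP varying fastest."""
    if not 0 <= rank < world_size:
        raise ValueError("rank out of range")
    dp = data_parallel_size(world_size, tp, pp)
    return Coords(
        tp_rank=rank % tp,
        dp_rank=(rank // tp) % dp,
        pp_rank=rank // (tp * dp),
    )
```

With 256 GPUs, `data_parallel_size(256, tp=8, pp=4)` leaves a data-parallel size of 8. Because the tensor-parallel rank varies fastest, a tensor-parallel size of 8 keeps each tensor-parallel group inside a single 8-GPU instance, where the most frequent communication stays on the intra-node interconnect.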

The implementation overlaps communication across data parallelism, tensor parallelism, and pipeline parallelism domains, significantly reducing blocking time during computation. This optimized configuration enables efficient scaling across the full cluster of GPUs while maintaining consistently high utilization rates. The following diagram illustrates this communication and computation overlap in distributed training (original image).

Megatron-LM enables fine-grained communication overlapping through multiple configuration flags: --overlap-grad-reduce and --overlap-param-gather for data-parallel operations, --tp-comm-overlap for tensor parallel operations, and built-in pipeline-parallel communication overlap (enabled by default). These optimizations work together to improve training scalability.
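The following sketch assembles these flags into a launcher argument list. The overlap flags are the ones named above. Pairing `--overlap-param-gather` with `--use-distributed-optimizer`, and `--tp-comm-overlap` with `--sequence-parallel`, reflects our understanding of Megatron-LM's argument checks and should be verified against the version in use. The helper name and the parallel sizes are hypothetical.

```python
def overlap_args(tp, pp, *, overlap_dp=True, overlap_tp=True):
    """Return Megatron-LM command-line arguments for communication overlap."""
    args = [
        "--tensor-model-parallel-size", str(tp),
        "--pipeline-model-parallel-size", str(pp),
    ]
    if overlap_dp:
        # Data-parallel overlap: gradient reduce-scatter and parameter all-gather.
        args += [
            "--use-distributed-optimizer",
            "--overlap-grad-reduce",
            "--overlap-param-gather",
        ]
    if overlap_tp and tp > 1:
        # Tensor-parallel overlap only applies when the model is actually split.
        args += ["--sequence-parallel", "--tp-comm-overlap"]
    return args


if __name__ == "__main__":
    print(" ".join(overlap_args(tp=8, pp=4)))
```

Pipeline-parallel overlap needs no entry here because, as noted above, it is enabled by default.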

Checkpointing strategy

The training infrastructure implements an optimized checkpointing strategy using Distributed Checkpoint (DCP) and asynchronous I/O operations. DCP parallelizes checkpoint operations across all available GPUs, rather than being constrained by tensor and pipeline parallel dimensions as in traditional Megatron-LM implementations. This parallelization, combined with asynchronous I/O, enables the system to:

- Save checkpoints up to 10 times faster compared to synchronous approaches

- Minimize training interruption by offloading I/O operations

- Scale checkpoint performance with the total number of GPUs

- Maintain consistency through coordinated distributed saves

The checkpointing system automatically saves model states to the FSx for Lustre file system at configurable intervals, with metadata tracked in Amazon S3. For redundancy, checkpoints are asynchronously replicated to Amazon S3 storage.
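The core idea behind asynchronous saving can be shown with a simplified stand-in that uses only the Python standard library. This is not DCP and not the team's implementation. It only illustrates the pattern: take a snapshot of the state (the one blocking step), then write it to disk on a background thread while training continues.

```python
import copy
import pickle
import threading
from pathlib import Path


def async_save(state, path):
    """Snapshot `state`, then persist it in the background.

    Returns the writer thread so the caller can join() before the next save.
    """
    path = Path(path)
    # Blocking part: copy the state so later training steps cannot mutate it.
    snapshot = copy.deepcopy(state)

    def _write():
        tmp = path.with_suffix(".tmp")
        with open(tmp, "wb") as f:
            pickle.dump(snapshot, f)
        # Rename only after the write completes, so readers never see a
        # partially written checkpoint.
        tmp.replace(path)

    writer = threading.Thread(target=_write, daemon=False)
    writer.start()
    return writer
```

In a real distributed checkpoint, each rank would write only its own shard of the state, which is what lets save time scale with the total number of GPUs.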

For implementation details on asynchronous DCP, see Asynchronous Saving with Distributed Checkpoint (DCP).

Experiment management

In November 2024, the team introduced a systematic approach to resource optimization through the development of a sophisticated memory prediction tool. This tool accurately predicts per-GPU memory usage during training and semi-automatically determines optimal training settings by analyzing all possible 4D parallelism configurations. Based on proven algorithmic research, this tool has become instrumental in maximizing resource utilization across the training infrastructure. The team plans to open source this tool with comprehensive documentation to benefit the broader AI research community.
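The team's tool has not been released, so the following is only a back-of-the-envelope sketch of the kind of search it performs. It estimates per-GPU memory for parameters, gradients, and optimizer state using the per-parameter byte counts given in the Megatron-LM distributed optimizer documentation for bf16 parameters with fp32 gradients (18 bytes without the distributed optimizer, 6 + 12/d bytes with data-parallel size d). Activations, communication buffers, and fragmentation are ignored, and the candidate sizes and memory budget are hypothetical.

```python
from itertools import product


def state_bytes_per_gpu(n_params, tp, pp, dp, distributed_optimizer=True):
    """Estimate bytes per GPU for weights, gradients, and optimizer state."""
    params_per_gpu = n_params / (tp * pp)
    bytes_per_param = 6 + 12 / dp if distributed_optimizer else 18
    return params_per_gpu * bytes_per_param


def candidate_configs(n_params, world_size, budget_bytes):
    """List (tp, pp, dp, bytes) tuples that fit the budget, smallest first."""
    fits = []
    for tp, pp in product((1, 2, 4, 8), (1, 2, 4, 8, 16)):
        if world_size % (tp * pp) != 0:
            continue
        dp = world_size // (tp * pp)
        need = state_bytes_per_gpu(n_params, tp, pp, dp)
        if need <= budget_bytes:
            fits.append((tp, pp, dp, need))
    return sorted(fits, key=lambda cfg: cfg[3])


if __name__ == "__main__":
    # Hypothetical budget: reserve half of an 80 GB GPU for model state.
    for tp, pp, dp, need in candidate_configs(70e9, 256, 40e9):
        print(f"tp={tp} pp={pp} dp={dp}: {need / 1e9:.1f} GB")
```

A real predictor would also need to model activation memory, which depends on sequence length, micro-batch size, and recomputation settings, and would then rank the surviving configurations by measured throughput.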

The following screenshot shows an example of the memory consumption prediction tool interface (original image).

Training pipeline management

The success of the training process heavily relied on maintaining high-quality data pipelines. The team implemented rigorous data curation processes and robust cleaning pipelines, maintaining a careful balance in dataset composition across different languages and domains.

For experiment planning, version control was critical. The team first fixed the versions of pre-training libraries and instruction tuning libraries to be used in the next experiment cycle. For libraries without formal version releases, the team managed versions using Git branches or tags to provide reproducibility. After the versions were locked, the team conducted short-duration training runs to:

- Measure throughput with different numbers of GPU nodes

- Search for optimal configurations among distributed training settings identified by the memory prediction library

- Establish accurate training time estimates for scheduling
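The last step above, turning a short benchmark run into a schedule estimate, comes down to simple arithmetic. The sketch below shows one way to do it. The function names and the batch size, sequence length, and step time in the usage note are hypothetical, not measurements from the project.

```python
SECONDS_PER_DAY = 86_400


def tokens_per_second(global_batch_size, seq_len, step_seconds):
    """Throughput implied by one optimizer step of a short benchmark run."""
    if step_seconds <= 0:
        raise ValueError("step_seconds must be positive")
    return global_batch_size * seq_len / step_seconds


def estimate_days(total_tokens, throughput):
    """Wall-clock days needed to consume total_tokens at a steady throughput."""
    if throughput <= 0:
        raise ValueError("throughput must be positive")
    return total_tokens / throughput / SECONDS_PER_DAY
```

For example, a hypothetical run with a global batch of 1,024 sequences of 8,192 tokens and 32-second steps would give `tokens_per_second(1024, 8192, 32.0)`, and passing that throughput to `estimate_days` with the planned token budget yields the expected duration. Read in reverse, the reported 16 days and 6 hours for roughly 314 billion tokens implies an average of about 224,000 tokens per second across the cluster, assuming the entire wall-clock time was spent training.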

The following screenshot shows an example experiment schedule showing GPU node allocation, expected training duration, and key milestones across different training phases (original image).

To optimize storage performance before beginning experiments, training data was preloaded from Amazon S3 to the FSx for Lustre file system to prevent I/O bottlenecks during training. This preloading process used parallel transfers to maximize throughput:
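The team's command is not reproduced here. The sketch below follows the preloading approach described in the FSx for Lustre documentation, which uses `lfs hsm_restore` to pull file contents from the linked S3 bucket, and runs the restores in parallel batches. The function names, worker count, and batch size are illustrative assumptions.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def batched(items, size):
    """Yield consecutive slices of `items` with at most `size` elements."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def preload(root, workers=8, batch_size=64, run=subprocess.run):
    """Issue `lfs hsm_restore` for every file under `root`, in parallel.

    `run` is injectable so the logic can be exercised without a Lustre client.
    Returns the number of files submitted for restore.
    """
    files = sorted(str(p) for p in Path(root).rglob("*") if p.is_file())
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(run, ["lfs", "hsm_restore", *batch], check=True)
            for batch in batched(files, batch_size)
        ]
        for future in futures:
            future.result()  # re-raise any failure from a worker
    return len(files)
```

Batching several paths into each `lfs` invocation keeps process start-up overhead low, while the thread pool keeps multiple restore requests in flight at once.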

Monitoring and performance management

The team implemented a comprehensive monitoring system focused on real-time performance tracking and proactive issue detection. By integrating with Weights & Biases, the system continuously monitors training progress and delivers automated notifications for key events such as job completion or failure and performance anomalies. Weights & Biases provides a set of tools that enable customized alerting through Slack channels. The following screenshot shows an example of a training monitoring dashboard in Slack (original image).

The monitoring infrastructure excels at identifying both job failures and performance bottlenecks like stragglers. The following figure presents an example of straggler detection showing training throughput degradation.
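One simple way to detect this kind of degradation is to compare each step's throughput with a rolling median of recent steps. The following is a minimal sketch of that idea, not the team's detection logic. The window size and the 90% threshold are arbitrary assumptions.

```python
from statistics import median


def find_slow_steps(throughputs, window=20, ratio=0.9):
    """Return indices of steps whose throughput falls below ratio * baseline.

    The baseline for step i is the median of the preceding `window` steps,
    which stays stable when a few slow steps enter the window.
    """
    flagged = []
    for i in range(window, len(throughputs)):
        baseline = median(throughputs[i - window:i])
        if throughputs[i] < ratio * baseline:
            flagged.append(i)
    return flagged
```

Flagged steps could then be forwarded as notifications, for example through the `wandb.alert` function in Weights & Biases, so that a slow node is investigated before it costs hours of cluster time.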

Conclusion

The successful training of Llama 3.3 Swallow represents a significant milestone in the development of LLMs using cloud infrastructure. Through this project, the team has demonstrated the effectiveness of combining advanced distributed training techniques with carefully orchestrated cloud resources. The implementation of efficient 4D parallelism and asynchronous checkpointing has established new benchmarks for training efficiency, and the comprehensive monitoring and optimization tools have provided consistent performance throughout the training process.

The project’s success is built on several foundational elements: a systematic approach to resource planning and optimization, robust data pipeline management, and a comprehensive monitoring and alerting system. The efficient storage hierarchy implementation has proven particularly crucial in managing the massive datasets required for training a 70-billion-parameter model.

Looking ahead, the project opens several promising directions for future development. The team plans to open source the memory prediction tools, so other researchers can benefit from the optimizations developed during this project. Further improvements to the training pipelines are under development, along with continued enhancement of Japanese language capabilities. The project’s success also paves the way for expanded model applications across various domains.

Resources and references

This section provides key resources and references for understanding and replicating the work described in this post. The resources are organized into documentation for the infrastructure and tools used, as well as model-specific resources for accessing and working with Llama 3.3 Swallow.

Documentation

The following resources provide detailed information about the technologies and frameworks used in this project:

- Amazon SageMaker HyperPod Workshop (Slurm)

- Amazon SageMaker HyperPod Workshop (Amazon EKS)

- AWSome Distributed Training

Model resources

For more information about Llama 3.3 Swallow and access to the model, refer to the following resources:

About the Authors

Kazuki Fujii graduated with a bachelor’s degree in Computer Science from Tokyo Institute of Technology in 2024 and is currently a master’s student there (2024–2026). Kazuki is responsible for the pre-training and fine-tuning of the Swallow model series, a state-of-the-art multilingual LLM specializing in Japanese and English as of December 2023. Kazuki focuses on distributed training and building scalable training systems to enhance the model’s performance and infrastructure efficiency.

Daisuke Miyamoto is a Senior Specialist Solutions Architect for HPC at Amazon Web Services. He mainly supports HPC customers in drug discovery, numerical weather prediction, electronic design automation, and ML training.

Kei Sasaki is a Senior Solutions Architect on the Japan Public Sector team at Amazon Web Services, where he helps Japanese universities and research institutions navigate their cloud migration journeys. With a background as a systems engineer specializing in high-performance computing, Kei supports these academic institutions in their large language model development initiatives and advanced computing projects.

Keita Watanabe is a Senior GenAI World Wide Specialist Solutions Architect at Amazon Web Services, where he helps develop machine learning solutions using OSS projects such as Slurm and Kubernetes. His background is in machine learning research and development. Prior to joining AWS, Keita worked in the ecommerce industry as a research scientist developing image retrieval systems for product search. Keita holds a PhD in Science from the University of Tokyo.
