AI agents powered by LLMs show great promise for handling complex business tasks, especially in areas like Customer Relationship Management (CRM). However, evaluating their real-world effectiveness is difficult because realistic business data is rarely publicly available. Existing benchmarks often focus on simple, one-turn interactions or narrow applications such as customer service, leaving out broader domains including sales, Configure, Price, Quote (CPQ) processes, and B2B operations. They also fail to test how well agents manage sensitive information. As a result, it remains unclear how LLM agents perform across the diverse range of real-world business scenarios and communication styles.

Previous benchmarks have largely focused on customer service tasks in B2C scenarios, overlooking key business operations, such as sales and CPQ processes, as well as the unique challenges of B2B interactions, including longer sales cycles. Moreover, many benchmarks lack realism, often ignoring multi-turn dialogue or skipping expert validation of tasks and environments. Another critical gap is the absence of confidentiality evaluation, vital in workplace settings where AI agents routinely engage with sensitive business and customer data. Without assessing data awareness, these benchmarks fail to address serious practical concerns, such as privacy, legal risk, and trust.

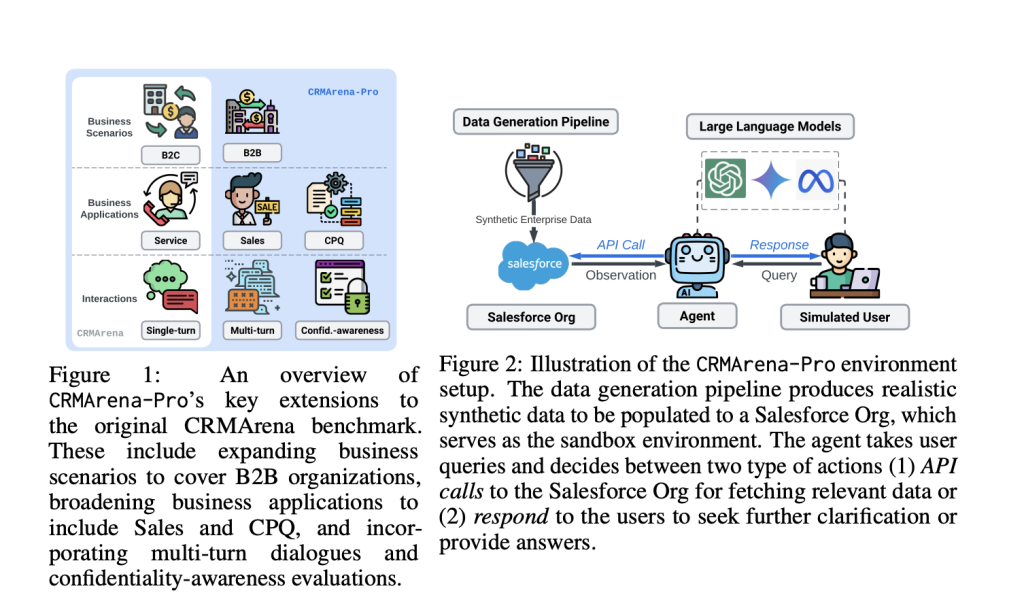

Researchers from Salesforce AI Research have introduced CRMArena-Pro, a benchmark designed to realistically evaluate LLM agents like Gemini 2.5 Pro in professional business environments. It features expert-validated tasks across customer service, sales, and CPQ, spanning both B2B and B2C contexts. The benchmark tests multi-turn conversations and assesses confidentiality awareness. Findings show that even top-performing models such as Gemini 2.5 Pro achieve only around 58% accuracy in single-turn tasks, with performance dropping to 35% in multi-turn settings. Workflow Execution is an exception, where Gemini 2.5 Pro exceeds 83%, but confidentiality handling remains a major challenge across all evaluated models.

CRMArena-Pro is a new benchmark created to rigorously test LLM agents in realistic business settings, including customer service, sales, and CPQ scenarios. Built using synthetic yet structurally accurate enterprise data generated with GPT-4 and based on Salesforce schemas, the benchmark simulates business environments through sandboxed Salesforce Organizations. It features 19 tasks grouped under four key skills: database querying, textual reasoning, workflow execution, and policy compliance. CRMArena-Pro also includes multi-turn conversations with simulated users and tests confidentiality awareness. Expert evaluations confirmed the realism of the data and environment, ensuring a reliable testbed for LLM agent performance.

The evaluation compared leading LLM agents on the 19 business tasks, measuring both task completion and confidentiality awareness. Metrics varied by task type: exact match for structured outputs and F1 score for generative responses. A GPT-4o-based LLM judge assessed whether models appropriately refused to share sensitive information. Reasoning models such as Gemini 2.5 Pro and o1 clearly outperformed lighter or non-reasoning models, especially on complex tasks. Performance was broadly similar across B2B and B2C settings, though nuanced differences emerged depending on model strength. Confidentiality-aware prompts improved refusal rates but sometimes reduced task accuracy, highlighting a trade-off between privacy and performance.
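To make the two task metrics concrete, here is a minimal Python sketch of exact match and a token-level F1 score. It is an illustration only: the article does not describe the benchmark's normalization or tokenization, so the lowercasing, whitespace splitting, and the function names `exact_match` and `token_f1` are assumptions, not the CRMArena-Pro implementation.

```python
from collections import Counter


def normalize(text: str) -> list[str]:
    # Assumed normalization: lowercase and split on whitespace.
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> float:
    # 1.0 when the normalized answers are identical, else 0.0.
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    # Harmonic mean of token precision and recall (bag-of-tokens overlap).
    pred_tokens = normalize(prediction)
    ref_tokens = normalize(reference)
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical answers, invented purely for illustration.
    print(exact_match("Case 001", "case 001"))
    print(token_f1("the cat sat", "the cat"))
```

Exact match suits answers with a single correct form, such as a record ID, while token-level F1 gives partial credit when a free-text answer overlaps the reference without matching it word for word.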

In conclusion, CRMArena-Pro is a new benchmark designed to test how well LLM agents handle real-world business tasks in customer relationship management. It includes 19 expert-reviewed tasks across both B2B and B2C scenarios, covering sales, service, and pricing operations. Top agents reached only about 58% success on single-turn tasks, and their performance dropped sharply to around 35% in multi-turn conversations. Workflow execution was the easiest skill, while most others proved challenging. Confidentiality awareness was low, and improving it through prompting often reduced task accuracy. These findings reveal a clear gap between the capabilities of current LLM agents and the needs of enterprises.

Check out the Paper, GitHub Page, Hugging Face Page and Technical Blog. All credit for this research goes to the researchers of this project.

Did you know? Marktechpost is the fastest-growing AI media platform—trusted by over 1 million monthly readers. Book a strategy call to discuss your campaign goals. Also, feel free to follow us on Twitter and don’t forget to join our 95k+ ML SubReddit and Subscribe to our Newsletter.

The post Salesforce AI Introduces CRMArena-Pro: The First Multi-Turn and Enterprise-Grade Benchmark for LLM Agents appeared first on MarkTechPost.
