Organizations are increasingly seeking to modernize their database infrastructure by migrating from legacy database engines such as Microsoft SQL Server and Oracle to more cost-effective and scalable open source alternatives such as PostgreSQL. This transition not only reduces licensing costs but also unlocks the flexibility and innovation offered by PostgreSQL’s rich feature set.

In this post, we demonstrate how to convert and test database code from Microsoft SQL Server and Oracle to PostgreSQL using the generative AI capabilities of Amazon Bedrock.

Amazon Bedrock is a generative AI platform that you can use to simplify and accelerate migrations. By taking advantage of the advanced AI capabilities of Amazon Bedrock, you can automate and streamline migration tasks, reducing the effort, time, and risks associated with database migration. Because Amazon Q Developer is built on Amazon Bedrock, you can also follow the post using Amazon Q Developer and get the same behavior.

Database migration challenges

Migrating from Microsoft SQL Server or Oracle to PostgreSQL involves several steps, including:

- Schema conversion – Adapting database objects such as tables, views, indexes, and constraints to the PostgreSQL syntax and architecture

- Business logic transformation – Converting stored procedures, functions, and triggers into PostgreSQL-compatible equivalents

- Data migration – Running extract, transform, and load (ETL) jobs on data while providing accuracy and consistency

- Application changes – Updating application code to accommodate PostgreSQL’s syntax and behavior.

- Performance tuning – Optimizing the migrated database for performance and scalability

The role of Amazon Bedrock in database migration

You can use Amazon Bedrock to overcome database migration challenges by using generative AI to automate and enhance the migration process. Key contributions include:

- Automated schema and code conversion – Amazon Bedrock simplifies schema conversion by analyzing the source database and generating PostgreSQL-compatible schemas. It can also transform complex business logic—including stored procedures, functions, and triggers—with a high degree of accuracy, significantly reducing manual effort.

- AI-driven data transformation – With Amazon Bedrock, you can automate the extraction and transformation of data to align with PostgreSQL’s data types and structures. This provides seamless data migration with minimal errors.

- Code compatibility insights – Amazon Bedrock identifies potential compatibility issues in application code, providing actionable recommendations to adapt SQL queries and a smooth integration with the new PostgreSQL backend.

- Intelligent testing and validation – By generating test cases and validation scripts and evaluating test code coverage with Amazon Bedrock, you can make sure that the migrated database meets functional and performance requirements before production deployment.

Through prompt engineering, you can tailor AI models such as Anthropic’s Claude using Amazon Bedrock to better understand the specific requirements of code conversion tasks. For instance, well-designed prompts can guide the AI to focus on critical aspects such as aligning with PostgreSQL syntax, preserving business logic, and adhering to best practices for performance optimization. This approach helps minimize errors and ambiguities in the converted code while increasing efficiency. Prompt engineering also enables iterative refinement, allowing you to fine-tune the conversion process based on feedback, thereby providing higher accuracy and reducing manual effort.

Code conversion example using Amazon Bedrock

In the following example we show how you can use Amazon Bedrock to convert code from Microsoft SQL Server to PostgreSQL.

After you have access to the model:

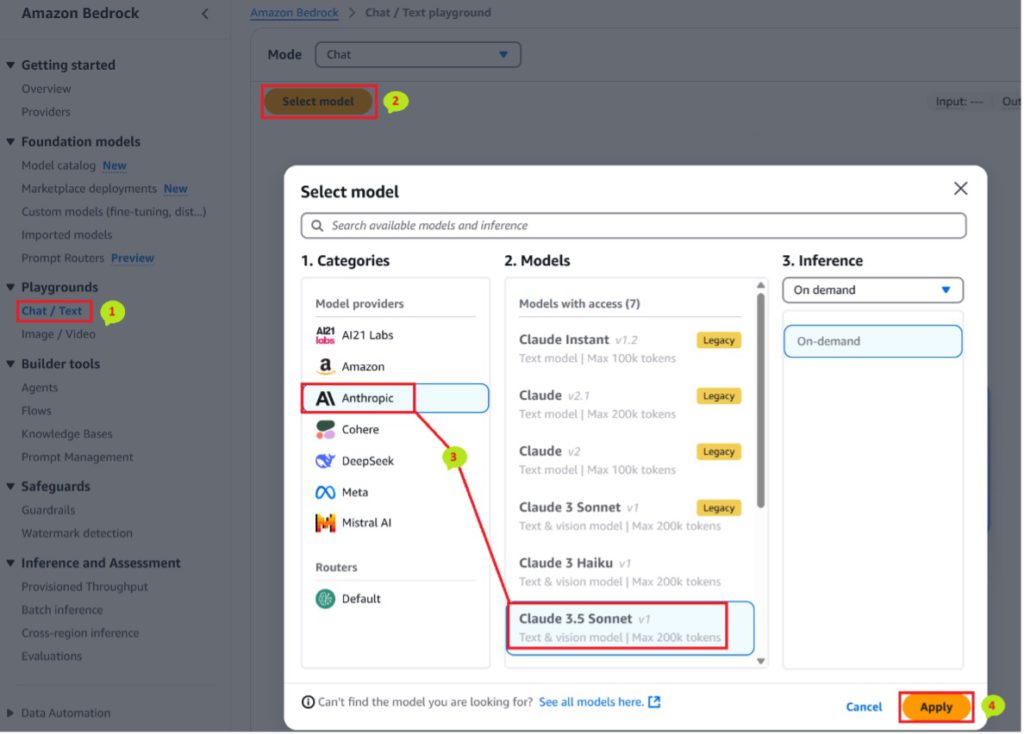

- In the AWS Management Console for Amazon Bedrock, choose Chat/Text under Playgrounds in the navigation pane.

- In the new window, choose Select model.

- Select the Anthropic category and Claude 3.5 Sonnet

- Choose Apply (it will take you to the Amazon Bedrock chat window with a prompt to ask questions).

To follow along, you can copy and paste the prompts in your Amazon Bedrock chat window.

Start with the following prompt:

Can you convert the Microsoft SQL server procedure to PostgreSQL function and generate the test cases covering complete code coverage

"CREATE PROCEDURE dbo.UpdateEmployeeSalaries @DepartmentID INT, @IncreasePercentage DECIMAL(5,2), @MaxIncreaseAmount MONEY, @EffectiveDate DATE AS BEGIN SET NOCOUNT ON;

DECLARE @ErrorMsg NVARCHAR(4000);

DECLARE @RowCount INT = 0;

BEGIN TRY

-- Validate input parameters

IF @IncreasePercentage <= 0 OR @IncreasePercentage > 25

THROW 50001, 'Invalid increase percentage. Must be between 0 and 25.', 1;

IF @MaxIncreaseAmount <= 0

THROW 50002,'Invalid max increase amount. Must be greater than 0.',1;

-- Begin transaction

BEGIN TRANSACTION;

-- Temporary table to store employee IDs and their new salaries

CREATE TABLE #TempSalaryUpdates (EmployeeID INT, NewSalary MONEY );

-- Calculate new salaries

INSERT INTO #TempSalaryUpdates (EmployeeID, NewSalary)

SELECT e.EmployeeID,

CASE

WHEN e.Salary*(1+@IncreasePercentage/100) - e.Salary > @MaxIncreaseAmount

THEN e.Salary + @MaxIncreaseAmount

ELSE e.Salary * (1 + @IncreasePercentage/100)

END AS NewSalary

FROM Employees e

WHERE e.DepartmentID = @DepartmentID

AND e.EmploymentStatus = 'Active'

AND DATEDIFF(YEAR, e.HireDate, @EffectiveDate) >= 1;

-- Update employee salaries

UPDATE e

SET e.Salary = t.NewSalary,

e.LastSalaryUpdateDate = @EffectiveDate

FROM Employees e

INNER JOIN #TempSalaryUpdates t ON e.EmployeeID = t.EmployeeID;

SET @RowCount = @@ROWCOUNT;

-- Log salary updates

INSERT INTO SalaryUpdateLog (EmployeeID, OldSalary, NewSalary, UpdateDate)

SELECT e.EmployeeID, e.Salary AS OldSalary, t.NewSalary, @EffectiveDate

FROM Employees e

INNER JOIN #TempSalaryUpdates t ON e.EmployeeID = t.EmployeeID;

-- Commit transaction

COMMIT TRANSACTION;

-- Return success message

SELECT CONCAT(@RowCount, ' employee salaries updated successfully.') AS ResultMessage;

END TRY

BEGIN CATCH

-- Rollback transaction on error

IF @@TRANCOUNT > 0

ROLLBACK TRANSACTION;

SET @ErrorMsg = ERROR_MESSAGE();

THROW 50000, @ErrorMsg, 1;

END CATCH;

END;"

We get the following response. Because generative AI is probabilistic, you might not always get the same result –

Here's the PostgreSQL equivalent of the Microsoft SQL Server procedure, along with test cases for complete code coverage:

CREATE OR REPLACE FUNCTION update_employee_salaries(

p_department_id INT,

p_increase_percentage DECIMAL(5,2),

p_max_increase_amount DECIMAL(10,2),

p_effective_date DATE

)

RETURNS TEXT AS $$

DECLARE

v_error_msg TEXT;

v_row_count INT := 0;

BEGIN

-- Validate input parameters

IF p_increase_percentage <= 0 OR p_increase_percentage > 25 THEN

RAISE EXCEPTION 'Invalid increase percentage. Must be between 0 and 25.' USING ERRCODE = '50001';

END IF;

IF p_max_increase_amount <= 0 THEN

RAISE EXCEPTION 'Invalid max increase amount. Must be greater than 0.' USING ERRCODE = '50002';

END IF;

-- Begin transaction

BEGIN

-- Create temporary table to store employee IDs and their new salaries

CREATE TEMPORARY TABLE temp_salary_updates (

employee_id INT,

new_salary DECIMAL(10,2)

) ON COMMIT DROP;

-- Calculate new salaries

INSERT INTO temp_salary_updates (employee_id, new_salary)

SELECT e.employee_id,

CASE

WHEN e.salary * (1 + p_increase_percentage/100) - e.salary > p_max_increase_amount

THEN e.salary + p_max_increase_amount

ELSE e.salary * (1 + p_increase_percentage/100)

END AS new_salary

FROM employees e

WHERE e.department_id = p_department_id

AND e.employment_status = 'Active'

AND p_effective_date - e.hire_date >= INTERVAL '1 year';

-- Update employee salaries

UPDATE employees e

SET salary = t.new_salary,

last_salary_update_date = p_effective_date

FROM temp_salary_updates t

WHERE e.employee_id = t.employee_id;

GET DIAGNOSTICS v_row_count = ROW_COUNT;

-- Log salary updates

INSERT INTO salary_update_log (employee_id, old_salary, new_salary, update_date)

SELECT e.employee_id, e.salary AS old_salary, t.new_salary, p_effective_date

FROM employees e

INNER JOIN temp_salary_updates t ON e.employee_id = t.employee_id;

-- Return success message

RETURN v_row_count || ' employee salaries updated successfully.';

EXCEPTION

WHEN OTHERS THEN

-- Rollback is automatic in PostgreSQL for unhandled exceptions

v_error_msg := SQLERRM;

RAISE EXCEPTION '%', v_error_msg USING ERRCODE = '50000';

END;

END;

$$ LANGUAGE plpgsql;

Now, let's create test cases to cover the code paths:

-- Test Case 1: Valid input, successful update

SELECT update_employee_salaries(1, 5.00, 1000.00, '2023-06-01');

-- Test Case 2: Invalid increase percentage (too low)

SELECT update_employee_salaries(1, 0.00, 1000.00, '2023-06-01');

-- Test Case 3: Invalid increase percentage (too high)

SELECT update_employee_salaries(1, 26.00, 1000.00, '2023-06-01');

-- Test Case 4: Invalid max increase amount

SELECT update_employee_salaries(1, 5.00, 0.00, '2023-06-01');

-- Test Case 5: No eligible employees

SELECT update_employee_salaries(999, 5.00, 1000.00, '2023-06-01');

-- Test Case 6: Max increase amount applied

INSERT INTO employees (employee_id, department_id, salary, employment_status, hire_date)

VALUES (1001, 1, 50000.00, 'Active', '2022-01-01');

SELECT update_employee_salaries(1, 10.00, 1000.00, '2023-06-01');

-- Test Case 7: Percentage increase applied

INSERT INTO employees (employee_id, department_id, salary, employment_status, hire_date)

VALUES (1002, 1, 40000.00, 'Active', '2022-01-01');

SELECT update_employee_salaries(1, 5.00, 5000.00, '2023-06-01');

-- Test Case 8: Employee not eligible due to employment status

INSERT INTO employees (employee_id, department_id, salary, employment_status, hire_date)

VALUES (1003, 1, 45000.00, 'Inactive', '2022-01-01');

SELECT update_employee_salaries(1, 5.00, 1000.00, '2023-06-01');

-- Test Case 9: Employee not eligible due to hire date

INSERT INTO employees (employee_id, department_id, salary, employment_status, hire_date)

VALUES (1004, 1, 55000.00, 'Active', '2023-05-01');

SELECT update_employee_salaries(1, 5.00, 1000.00, '2023-06-01');

-- Test Case 10: Multiple eligible employees

INSERT INTO employees (employee_id, department_id, salary, employment_status, hire_date)

VALUES (1005, 1, 60000.00, 'Active', '2022-01-01'),

(1006, 1, 70000.00, 'Active', '2021-01-01');

SELECT update_employee_salaries(1, 5.00, 2000.00, '2023-06-01');

These test cases cover:

1. Successful update 2-4. Input validation

2. No eligible employees

3. Max increase amount applied

4. Percentage increase applied

5. Ineligible due to employment status

6. Ineligible due to hire date

7. Multiple eligible employees

To ensure complete code coverage, you should also test the error handling by simulating database errors (e.g., table not found, insufficient privileges) if possible in your testing environment.

You can see that Amazon Bedrock generated the code for the PostgreSQL function in addition to the test cases. However, the naming convention got changed to all lowercase and the output added some underscores. Let’s ask Amazon Bedrock to retain the naming conventions and see what it does:

I see the naming convention is modified by adding "_". Can you generate the code again by retaining the naming conventions similar to the source?

We get the following response:

Certainly! I apologize for the confusion. Here's the PostgreSQL function with naming conventions more closely aligned to the original Microsoft SQL Server procedure:

CREATE OR REPLACE FUNCTION UpdateEmployeeSalaries(

DepartmentID INT,

IncreasePercentage DECIMAL(5,2),

MaxIncreaseAmount DECIMAL(10,2),

EffectiveDate DATE

)

RETURNS TEXT AS $$

DECLARE

ErrorMsg TEXT;

RowCount INT := 0;

BEGIN

-- Validate input parameters

IF IncreasePercentage <= 0 OR IncreasePercentage > 25 THEN

RAISE EXCEPTION 'Invalid increase percentage. Must be between 0 and 25.' USING ERRCODE = '50001';

END IF;

IF MaxIncreaseAmount <= 0 THEN

RAISE EXCEPTION 'Invalid max increase amount. Must be greater than 0.' USING ERRCODE = '50002';

END IF;

-- Begin transaction

BEGIN

-- Create temporary table to store employee IDs and their new salaries

CREATE TEMPORARY TABLE TempSalaryUpdates (

EmployeeID INT,

NewSalary DECIMAL(10,2)

) ON COMMIT DROP;

-- Calculate new salaries

INSERT INTO TempSalaryUpdates (EmployeeID, NewSalary)

SELECT e.EmployeeID,

CASE

WHEN e.Salary * (1 + IncreasePercentage/100) - e.Salary > MaxIncreaseAmount

THEN e.Salary + MaxIncreaseAmount

ELSE e.Salary * (1 + IncreasePercentage/100)

END AS NewSalary

FROM Employees e

WHERE e.DepartmentID = DepartmentID

AND e.EmploymentStatus = 'Active'

AND EffectiveDate - e.HireDate >= INTERVAL '1 year';

-- Update employee salaries

UPDATE Employees e

SET Salary = t.NewSalary,

LastSalaryUpdateDate = EffectiveDate

FROM TempSalaryUpdates t

WHERE e.EmployeeID = t.EmployeeID;

GET DIAGNOSTICS RowCount = ROW_COUNT;

-- Log salary updates

INSERT INTO SalaryUpdateLog (EmployeeID, OldSalary, NewSalary, UpdateDate)

SELECT e.EmployeeID, e.Salary AS OldSalary, t.NewSalary, EffectiveDate

FROM Employees e

INNER JOIN TempSalaryUpdates t ON e.EmployeeID = t.EmployeeID;

-- Return success message

RETURN RowCount || ' employee salaries updated successfully.';

EXCEPTION

WHEN OTHERS THEN

-- Rollback is automatic in PostgreSQL for unhandled exceptions

ErrorMsg := SQLERRM;

RAISE EXCEPTION '%', ErrorMsg USING ERRCODE = '50000';

END;

END;

$$ LANGUAGE plpgsql;

Now, here are the test cases with the naming convention aligned to the original:

-- Test Case 1: Valid input, successful update

SELECT UpdateEmployeeSalaries(1, 5.00, 1000.00, '2023-06-01');

-- Test Case 2: Invalid increase percentage (too low)

SELECT UpdateEmployeeSalaries(1, 0.00, 1000.00, '2023-06-01');

-- Test Case 3: Invalid increase percentage (too high)

SELECT UpdateEmployeeSalaries(1, 26.00, 1000.00, '2023-06-01');

-- Test Case 4: Invalid max increase amount

SELECT UpdateEmployeeSalaries(1, 5.00, 0.00, '2023-06-01');

-- Test Case 5: No eligible employees

SELECT UpdateEmployeeSalaries(999, 5.00, 1000.00, '2023-06-01');

-- Test Case 6: Max increase amount applied

INSERT INTO Employees (EmployeeID, DepartmentID, Salary, EmploymentStatus, HireDate)

VALUES (1001, 1, 50000.00, 'Active', '2022-01-01');

SELECT UpdateEmployeeSalaries(1, 10.00, 1000.00, '2023-06-01');

-- Test Case 7: Percentage increase applied

INSERT INTO Employees (EmployeeID, DepartmentID, Salary, EmploymentStatus, HireDate)

VALUES (1002, 1, 40000.00, 'Active', '2022-01-01');

SELECT UpdateEmployeeSalaries(1, 5.00, 5000.00, '2023-06-01');

-- Test Case 8: Employee not eligible due to employment status

INSERT INTO Employees (EmployeeID, DepartmentID, Salary, EmploymentStatus, HireDate)

VALUES (1003, 1, 45000.00, 'Inactive', '2022-01-01');

SELECT UpdateEmployeeSalaries(1, 5.00, 1000.00, '2023-06-01');

-- Test Case 9: Employee not eligible due to hire date

INSERT INTO Employees (EmployeeID, DepartmentID, Salary, EmploymentStatus, HireDate)

VALUES (1004, 1, 55000.00, 'Active', '2023-05-01');

SELECT UpdateEmployeeSalaries(1, 5.00, 1000.00, '2023-06-01');

-- Test Case 10: Multiple eligible employees

INSERT INTO Employees (EmployeeID, DepartmentID, Salary, EmploymentStatus, HireDate)

VALUES (1005, 1, 60000.00, 'Active', '2022-01-01'),

(1006, 1, 70000.00, 'Active', '2021-01-01');

SELECT UpdateEmployeeSalaries(1, 5.00, 2000.00, '2023-06-01');

These test cases cover the same scenarios as before, but now use the naming convention that matches the original Microsoft SQL Server procedure. The function and test cases should now be more consistent with the source code's style.

This version maintains the original camel case naming convention for variables and parameters, which is more consistent with the Microsoft SQL Server procedure. The function name and test cases now use the same naming convention as the original stored procedure.

The code coverage analysis remains the same as in the previous response, because logic and structure of the function haven’t changed.

In both prompts, we don’t see the code coverage. Let’s ask Amazon Bedrock what the code coverage is based on the generated test cases:

Can you provide the test code coverage based on the test cases generated?

Can you provide the test code coverage based on the test cases generated?

We get the following response:

Certainly! I'll provide an analysis of the test code coverage based on the test cases generated. This analysis will show which parts of the function are covered by each test case.

Code Coverage Analysis:

1. Input parameter validation:

- Test Case 1: Valid inputs (covered)

- Test Case 2: Invalid IncreasePercentage (too low) (covered)

- Test Case 3: Invalid IncreasePercentage (too high) (covered)

- Test Case 4: Invalid MaxIncreaseAmount (covered)

2. Main function body:

- Test Case 1, 5, 6, 7, 8, 9, 10: Execute main body (covered)

3. Temporary table creation:

- Test cases that execute the main body (1, 5, 6, 7, 8, 9, 10) (covered)

4. Salary calculation logic:

- Test Case 6: Max increase amount applied (covered)

- Test Case 7: Percentage increase applied (covered)

5. Employee eligibility criteria:

- Test Case 8: EmploymentStatus check (covered)

- Test Case 9: HireDate check (covered)

6. Multiple employee updates:

- Test Case 10: Multiple eligible employees (covered)

7. No eligible employees scenario:

- Test Case 5: No eligible employees (covered)

8. Update operations:

- Test Cases 1, 6, 7, 10: Successful updates (covered)

9. Logging:

- Test Cases 1, 6, 7, 10: Salary update logging (covered)

10. Exception handling:

- Test Cases 2, 3, 4: Raise exceptions for invalid inputs (covered)

11. Return message:

- Test Cases 1, 5, 6, 7, 8, 9, 10: Return success message (covered)

Coverage Summary:

- Major code paths are covered by the test cases.

- Both successful and error scenarios are tested.

- Different types of salary increases (percentage-based and max amount) are verified.

- Employee eligibility criteria are checked.

- Multiple employee updates and no eligible employee scenarios are included.

Areas for Potential Additional Coverage:

1. Edge cases for date calculations (e.g., employees hired exactly one year ago).

2. Testing with very large numbers to ensure no overflow issues.

3. Simulating database errors (if possible in the testing environment) to fully test the exception handling block.

Overall, the test cases provide comprehensive coverage of the function's logic and error handling. They test various scenarios and edge cases, ensuring that the function behaves correctly under different conditions. The main areas of the function, including input validation, salary calculation, employee eligibility, update operations, and logging, are covered by the test cases.

Remember that although high code coverage is valuable, it doesn’t guarantee that each and every possible scenario is tested. It’s important to combine these unit tests with integration and system tests for comprehensive quality assurance.

To get a more precise measurement of code coverage, you would typically use a specialized code coverage tool for PostgreSQL, such as pgTAP or a combination of pgTAP and a coverage analysis tool. These tools can provide exact line-by-line coverage information and help to identify remaining uncovered code paths.

To validate the responses from Amazon Bedrock, run the results in your PostgreSQL environment. You can ask Amazon Bedrock to request the necessary scripts for creating tables and data for testing if you do not have the dependent tables.

For that we can issue the following prompt:

Certainly! I'll provide the table creation scripts and insert statements for test data for the tables used in the function: Employees and SalaryUpdateLog.

Table Creation Scripts:

-- Create Employees table

CREATE TABLE Employees (

EmployeeID INT PRIMARY KEY,

DepartmentID INT NOT NULL,

Salary DECIMAL(10, 2) NOT NULL,

EmploymentStatus VARCHAR(20) NOT NULL,

HireDate DATE NOT NULL,

LastSalaryUpdateDate DATE

);

-- Create SalaryUpdateLog table

CREATE TABLE SalaryUpdateLog (

LogID SERIAL PRIMARY KEY,

EmployeeID INT NOT NULL,

OldSalary DECIMAL(10, 2) NOT NULL,

NewSalary DECIMAL(10, 2) NOT NULL,

UpdateDate DATE NOT NULL

);

Test Data:

-- Insert test data into Employees table

INSERT INTO Employees (EmployeeID, DepartmentID, Salary, EmploymentStatus, HireDate, LastSalaryUpdateDate)

VALUES

(1001, 1, 50000.00, 'Active', '2022-01-01', '2022-01-01'),

(1002, 1, 40000.00, 'Active', '2022-01-01', '2022-01-01'),

(1003, 1, 45000.00, 'Inactive', '2022-01-01', '2022-01-01'),

(1004, 1, 55000.00, 'Active', '2023-05-01', '2023-05-01'),

(1005, 1, 60000.00, 'Active', '2022-01-01', '2022-01-01'),

(1006, 1, 70000.00, 'Active', '2021-01-01', '2022-01-01'),

(2001, 2, 55000.00, 'Active', '2021-06-01', '2022-06-01'),

(2002, 2, 48000.00, 'Active', '2022-03-15', '2022-03-15'),

(3001, 3, 65000.00, 'Active', '2020-11-01', '2022-11-01');

-- The SalaryUpdateLog table will be populated by the function, so we don't need to insert test data here

This test data includes:

1. Employees in different departments

2. Employees with various salaries

3. Both active and inactive employees

4. Employees with different hire dates (some less than a year, some more)

5. Employees with different last salary update dates

You can use this data to test various scenarios in your function. For example:

- Employees 1001, 1002, 1005, and 1006 are eligible for salary updates when testing Department 1.

- Employee 1003 is inactive and should be excluded.

- Employee 1004 was hired less than a year ago and should be excluded.

- Employees 2001 and 2002 can be used to test updates in a different department.

- Employee 3001 can be used to test a case where no employees in a department are updated.

This data set allows you to verify that your function correctly applies salary increases, respects the eligibility criteria (employment status and hire date), and handles different departments appropriately.

Further enhancements to automate test cases and generate the summary

You can interact with Amazon Bedrock in a chat manner or through APIs. Using the Amazon Bedrock API calls, you can develop an application to automate the testing of generated test cases against the source and target environments and verify the test results. This process helps in automating business logic validation, identifying failed test cases, and reducing manual testing efforts. Additionally, the custom application can measure latency between the source and target environments, assess the performance of the converted code, and optimize specific business logic functionality—eliminating the need for manual verification of each procedure and function.

You can also use AWS provided tools to accelerate code conversion during database migration. For more information, see Accelerate database modernization with generative AI using AWS Database Migration Service Schema Conversion.

Conclusion

AWS offers a comprehensive suite of tools and services that represent transformative solutions in database migration. In this post, we showed you how to use Amazon Bedrock with an Anthropic’s Claude model to help with code conversion from Microsoft SQL Server to PostgreSQL and generate test cases to validate the converted code. By using this approach, you can reduce migration time, costs, and risks.

Whether you’re embarking on your migration journey or looking to enhance your current strategy, Amazon Bedrock can help you achieve success with confidence.

For instructions on how to get started with Amazon Bedrock, see Getting started with Amazon Bedrock

About the authors

Viswanatha Shastry Medipalli is a Senior Architect with the AWS ProServe team. He brings extensive expertise and experience in database migrations, having architected and designed numerous successful solutions to address complex business requirements. His work spans Oracle, SQL Server, and PostgreSQL databases, supporting reporting, business intelligence (BI) applications, and AI initiatives. In addition, he has strong knowledge of automation and orchestration, enabling scalable and efficient implementations.

Viswanatha Shastry Medipalli is a Senior Architect with the AWS ProServe team. He brings extensive expertise and experience in database migrations, having architected and designed numerous successful solutions to address complex business requirements. His work spans Oracle, SQL Server, and PostgreSQL databases, supporting reporting, business intelligence (BI) applications, and AI initiatives. In addition, he has strong knowledge of automation and orchestration, enabling scalable and efficient implementations.

Jose Amado-Blanco is a Senior Cloud Architect with over 25 years of transformative experience in enterprise technology solutions. As an AWS expert, he specializes in guiding companies through complex cloud migrations and modernization initiatives, with particular emphasis on data platform optimization and architectural excellence.

Jose Amado-Blanco is a Senior Cloud Architect with over 25 years of transformative experience in enterprise technology solutions. As an AWS expert, he specializes in guiding companies through complex cloud migrations and modernization initiatives, with particular emphasis on data platform optimization and architectural excellence.

Swanand Kshirsagar is a Cloud Architect in the Professional Services Expert Services division at AWS. He specializes in collaborating with clients to architect and implement scalable, robust, and security-compliant solutions in the AWS Cloud. His primary expertise lies in orchestrating seamless migrations, encompassing both homogenous and heterogeneous transitions, and facilitating the relocation of on-premises databases to Amazon RDS and Amazon Aurora PostgreSQL with efficiency. Swanand has also delivered multiple sessions and conducted workshops at conferences.

Swanand Kshirsagar is a Cloud Architect in the Professional Services Expert Services division at AWS. He specializes in collaborating with clients to architect and implement scalable, robust, and security-compliant solutions in the AWS Cloud. His primary expertise lies in orchestrating seamless migrations, encompassing both homogenous and heterogeneous transitions, and facilitating the relocation of on-premises databases to Amazon RDS and Amazon Aurora PostgreSQL with efficiency. Swanand has also delivered multiple sessions and conducted workshops at conferences.

Source:

Viswanatha Shastry Medipalli is a Senior Architect with the AWS ProServe team. He brings extensive expertise and experience in database migrations, having architected and designed numerous successful solutions to address complex business requirements. His work spans Oracle, SQL Server, and PostgreSQL databases, supporting reporting, business intelligence (BI) applications, and AI initiatives. In addition, he has strong knowledge of automation and orchestration, enabling scalable and efficient implementations.

Viswanatha Shastry Medipalli is a Senior Architect with the AWS ProServe team. He brings extensive expertise and experience in database migrations, having architected and designed numerous successful solutions to address complex business requirements. His work spans Oracle, SQL Server, and PostgreSQL databases, supporting reporting, business intelligence (BI) applications, and AI initiatives. In addition, he has strong knowledge of automation and orchestration, enabling scalable and efficient implementations. Jose Amado-Blanco is a Senior Cloud Architect with over 25 years of transformative experience in enterprise technology solutions. As an AWS expert, he specializes in guiding companies through complex cloud migrations and modernization initiatives, with particular emphasis on data platform optimization and architectural excellence.

Jose Amado-Blanco is a Senior Cloud Architect with over 25 years of transformative experience in enterprise technology solutions. As an AWS expert, he specializes in guiding companies through complex cloud migrations and modernization initiatives, with particular emphasis on data platform optimization and architectural excellence. Swanand Kshirsagar is a Cloud Architect in the Professional Services Expert Services division at AWS. He specializes in collaborating with clients to architect and implement scalable, robust, and security-compliant solutions in the AWS Cloud. His primary expertise lies in orchestrating seamless migrations, encompassing both homogenous and heterogeneous transitions, and facilitating the relocation of on-premises databases to Amazon RDS and Amazon Aurora PostgreSQL with efficiency. Swanand has also delivered multiple sessions and conducted workshops at conferences.

Swanand Kshirsagar is a Cloud Architect in the Professional Services Expert Services division at AWS. He specializes in collaborating with clients to architect and implement scalable, robust, and security-compliant solutions in the AWS Cloud. His primary expertise lies in orchestrating seamless migrations, encompassing both homogenous and heterogeneous transitions, and facilitating the relocation of on-premises databases to Amazon RDS and Amazon Aurora PostgreSQL with efficiency. Swanand has also delivered multiple sessions and conducted workshops at conferences.

![How to focus on building your skills when everything’s so distracting with Ania Kubów [Podcast #187]](https://devstacktips.com/wp-content/uploads/2025/09/f7c52449-77f9-4fa4-bbd5-34c3773658dd-6kiwIT-450x253.png)