In the landscape of generative AI, organizations are increasingly adopting a structured approach to deploy their AI applications, mirroring traditional software development practices. This approach typically involves separate development and production environments, each with its own AWS account, to create logical separation, enhance security, and streamline workflows.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI. As organizations scale their AI initiatives, they often face challenges in efficiently managing and deploying custom models across different stages of development and across geographical regions.

To address these challenges, Amazon Bedrock introduces two key features: Model Share and Model Copy. These features are designed to streamline the AI development lifecycle, from initial experimentation to global production deployment. They enable seamless collaboration between development and production teams, facilitate efficient resource utilization, and help organizations maintain control and security throughout the customized model lifecycle.

In this post, we explore the Model Share and Model Copy features: how they work, what benefits they offer, and how to apply them in a typical development-to-production scenario.

Prerequisites for Model Copy and Model Share

Before you can start using Model Copy and Model Share, the following prerequisites must be fulfilled:

- AWS Organizations setup: Both the source account (the account sharing the model) and the target account (the account receiving the model) must be part of the same organization. You’ll need to create an organization if you don’t have one already, enable resource sharing, and invite the relevant accounts.

- IAM permissions:

  - For Model Share: Set up AWS Identity and Access Management (IAM) permissions for the sharing account to allow sharing using AWS Resource Access Manager (AWS RAM).

  - For Model Copy: Configure IAM permissions in both source and target AWS Regions to allow copying operations.

  - Attach the necessary IAM policies to the roles in both accounts to enable model sharing.

- KMS key policies (Optional): If your models are encrypted with a customer-managed KMS key, you’ll need to set up key policies to allow the target account to decrypt the shared model or to encrypt the copied model with a specific KMS key.

- Network configuration: Make sure that the necessary network configurations are in place, especially if you’re using VPC endpoints or have specific network security requirements.

- Service quotas: Check and, if necessary, request increases for the number of custom models per account service quotas in both the source and target Regions and accounts.

- Provisioned throughput support: Verify that the target Region supports provisioned throughput for the model you intend to copy. This is crucial because the copy job will be rejected if provisioned throughput isn’t supported in the target Region.
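
As an illustration of the Model Copy permissions in the list above, the following is a minimal sketch of an identity-based policy, not an official policy. The function name, the account-wide `"Resource": "*"` scope, and the sample Regions are assumptions made for this example; scope the policy down to specific model ARNs where your security requirements call for it.

```python
import json


def build_model_copy_policy(regions: list[str]) -> dict:
    """Return an IAM policy document that allows Model Copy calls in the given Regions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowModelCopy",
                "Effect": "Allow",
                "Action": [
                    "bedrock:CreateModelCopyJob",
                    "bedrock:GetModelCopyJob",
                    "bedrock:ListModelCopyJobs",
                ],
                # Assumption: broad resource scope for brevity; narrow it in practice.
                "Resource": "*",
                # Restrict the calls to the Regions involved in the copy.
                "Condition": {
                    "StringEquals": {"aws:RequestedRegion": sorted(regions)}
                },
            }
        ],
    }


# Example Regions only; replace with your own source and target Regions.
policy = build_model_copy_policy(["us-west-2", "us-east-1"])
print(json.dumps(policy, indent=2))
```

The printed JSON can be attached to the role that performs the copy in the target account.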

Model Share: Streamlining development-to-production workflows

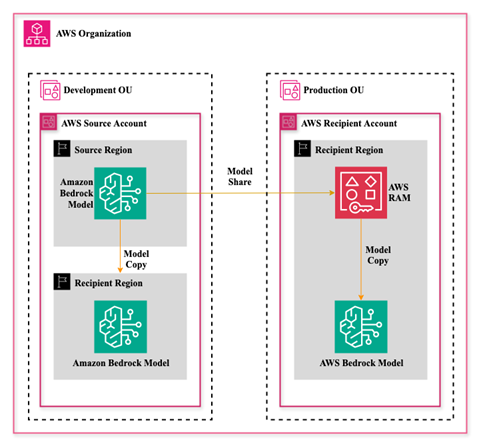

The preceding figure shows the architecture of Model Share and Model Copy. The model is fine-tuned in a source account. Amazon Bedrock then shares it with the recipient account, which accepts the shared model in AWS Resource Access Manager (AWS RAM). The shared model can then be copied to the desired AWS Region.

When managing Amazon Bedrock custom models in a development-to-production pipeline, it’s essential to securely share these models across different AWS accounts to streamline the promotion process to higher environments. The Amazon Bedrock Model Share feature addresses this need, enabling smooth sharing between development and production environments. Model Share enables the sharing of custom models fine-tuned on Amazon Bedrock between different AWS accounts within the same Region and organization. This feature is particularly useful for organizations that maintain separate development and production environments.

Important considerations:

- Both the source and target AWS accounts must be in the same organization.

- Only models that have been fine-tuned within Amazon Bedrock can be shared.

- Base models and custom models imported using Custom Model Import (CMI) cannot be shared directly. For these, use the standard model import process in each AWS account.

- When sharing encrypted models, use a customer-managed KMS key and attach a key policy that allows the recipient account to decrypt the shared model. Specify the recipient account in the Principal field of the key policy.
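
The key policy consideration above can be sketched as a single policy statement. This is a hedged example: the function name, the `Sid`, and the account ID are placeholders, and it assumes that `kms:Decrypt` is the permission the recipient needs. Check the Amazon Bedrock encryption documentation for the exact actions before you use it.

```python
import json


def build_share_decrypt_statement(recipient_account_id: str) -> dict:
    """Return a KMS key policy statement that lets the recipient account decrypt."""
    return {
        "Sid": "AllowRecipientToDecryptSharedModel",  # hypothetical Sid
        "Effect": "Allow",
        # The recipient account goes in the Principal field of the key policy.
        "Principal": {"AWS": f"arn:aws:iam::{recipient_account_id}:root"},
        "Action": ["kms:Decrypt"],
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }


# Placeholder account ID for the production (recipient) account.
statement = build_share_decrypt_statement("444455556666")
print(json.dumps(statement, indent=2))
```

Add the statement to the existing key policy of the customer managed key that encrypts the model, rather than replacing the policy.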

Key benefits:

- Simplified development-to-production transitions: Quickly move fine-tuned models on Amazon Bedrock from development to production environments.

- Enhanced team collaboration: Share models across different departments or project teams.

- Resource optimization: Reduce duplicate model customization efforts across your organization.

How it works:

- After a model has been fine-tuned in the source AWS account using Amazon Bedrock, the source AWS account can use the AWS Management Console for Amazon Bedrock to share the model.

- The target AWS account accepts the shared model in AWS RAM.

- The shared model in the target AWS account needs to be copied to the desired Regions.

- After copying, the target AWS account can purchase provisioned throughput and use the model.

- If using KMS encryption, make sure the key policy is properly set up for the recipient account.
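
The post describes sharing through the console. For teams that prefer to script the share and accept steps, the following sketch shows one possible shape using AWS RAM operations. It assumes that the custom model is shared as a RAM resource share and that each client is a boto3 `ram` client created in the appropriate account; the function names are hypothetical, and pagination is omitted for brevity.

```python
def share_custom_model(ram_client, model_arn, recipient_account_id, share_name):
    """Run in the source account: create a RAM resource share for the model."""
    response = ram_client.create_resource_share(
        name=share_name,
        resourceArns=[model_arn],
        principals=[recipient_account_id],
        # Both accounts are in the same organization, so keep sharing internal.
        allowExternalPrincipals=False,
    )
    return response["resourceShare"]["resourceShareArn"]


def accept_pending_invitations(ram_client, share_name):
    """Run in the target account: accept pending invitations for the named share."""
    accepted = []
    response = ram_client.get_resource_share_invitations()
    for invitation in response.get("resourceShareInvitations", []):
        if (
            invitation["status"] == "PENDING"
            and invitation["resourceShareName"] == share_name
        ):
            arn = invitation["resourceShareInvitationArn"]
            ram_client.accept_resource_share_invitation(
                resourceShareInvitationArn=arn
            )
            accepted.append(arn)
    return accepted
```

A caller would pass `boto3.client("ram")` built from source-account credentials to the first function and from target-account credentials to the second. Depending on how resource sharing is configured in your organization, there might be no pending invitation to accept, in which case the second function returns an empty list.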

Model Copy: Optimizing model deployment across Regions

The Amazon Bedrock Model Copy feature enables you to replicate custom models across different Regions within your account. This capability serves two primary purposes: it can be used independently for single-account deployments, or it can complement Model Share in multi-account scenarios, where you first share the model across accounts and then copy it. The feature is particularly valuable for organizations that require global model deployment, Regional load balancing, and robust disaster recovery solutions. By allowing flexible model distribution across Regions, Model Copy helps optimize your AI infrastructure for both performance and reliability.

Important considerations:

- Make sure the target Region supports provisioned throughput for the model being copied. If provisioned throughput isn’t supported, the copy job will be rejected.

- Be aware of the costs associated with storing and using copied models in multiple Regions. Consult the Amazon Bedrock pricing page for detailed information.

- When used after Model Share for cross-account scenarios, first accept the shared model, then initiate the cross-Region copy within your account.

- Regularly review and optimize your multi-Region deployment strategy to balance performance needs with cost considerations.

- When copying encrypted models, use a customer-managed KMS key and attach a key policy that allows the role used for copying to encrypt the model. Specify the role in the Principal field of the key policy.

Key benefits of Model Copy:

- Reduced latency: Deploy models closer to end-users in different geographical locations to minimize response times.

- Increased availability: Enhance the overall availability and reliability of your AI applications by having models accessible in multiple Regions.

- Improved disaster recovery: Facilitate easier implementation of disaster recovery strategies by maintaining model replicas across different Regions.

- Support for Regional compliance: Align with data residency requirements by deploying models in specific Regions as needed.

How it works:

- Identify the target Region where you want to deploy your model.

- Use the Amazon Bedrock console to initiate the Model Copy process from the source Region to the target Region.

- After the model has been copied, purchase provisioned throughput for the model in each Region where you want to use it.

- If using KMS encryption, make sure the key policy is properly set up for the role performing the copy operation.
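
As an alternative to the console, the copy steps above could be scripted along the following lines. This is a sketch under stated assumptions: `bedrock_client` is a boto3 `bedrock` client created in the target Region, the function names and polling defaults are illustrative, and error handling is kept minimal.

```python
import time


def start_model_copy(bedrock_client, source_model_arn, target_model_name,
                     kms_key_id=None):
    """Start a copy job in the client's Region and return the job ARN."""
    params = {
        "sourceModelArn": source_model_arn,
        "targetModelName": target_model_name,
    }
    if kms_key_id is not None:
        # Optional customer managed key used to encrypt the copied model.
        params["modelKmsKeyId"] = kms_key_id
    return bedrock_client.create_model_copy_job(**params)["jobArn"]


def wait_for_model_copy(bedrock_client, job_arn, poll_seconds=30.0, max_polls=120):
    """Poll the copy job until it finishes and return the copied model's ARN."""
    for _ in range(max_polls):
        job = bedrock_client.get_model_copy_job(jobArn=job_arn)
        if job["status"] == "Completed":
            return job["targetModelArn"]
        if job["status"] == "Failed":
            raise RuntimeError(job.get("failureMessage", "Model copy job failed"))
        time.sleep(poll_seconds)
    raise TimeoutError(f"Copy job {job_arn} did not finish after {max_polls} polls")
```

For example, a client created with `boto3.client("bedrock", region_name="us-west-2")` would copy the model into US West (Oregon). The returned model ARN is what you then use when purchasing provisioned throughput in that Region.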

Use cases:

- Single-account deployment: Use Model Copy to replicate models across Regions within the same AWS account for improved global performance.

- Multi-account deployment: After using Model Share to transfer a model from a development to a production account, use Model Copy to distribute the model across Regions in the production account.

By using Model Copy, either on its own or in tandem with Model Share, you can create a robust, globally distributed AI infrastructure. This flexibility offers low-latency access to your custom models across different geographical locations, enhancing the performance and reliability of your AI-powered applications regardless of your account structure.

Aligning Model Share and Model Copy with AWS best practices

When implementing Model Share and Model Copy, it’s crucial to align these features with AWS best practices for multi-account environments. AWS recommends setting up separate accounts for development and production, which makes Model Share particularly valuable for transitioning models between these environments. Consider how these features interact with your organizational structure, especially if you have separate organizational units (OUs) for security, infrastructure, and workloads. Key considerations include:

- Maintaining compliance with policies set at the OU level.

- Using Model Share and Model Copy in the continuous integration and delivery (CI/CD) pipeline of your organization.

- Using AWS billing features for cost management across accounts.

- For disaster recovery within the same AWS account, use Model Copy. When implementing disaster recovery across multiple AWS accounts, use both Model Share and Model Copy.

By aligning Model Share and Model Copy with these best practices, you can enhance security, compliance, and operational efficiency in your AI model lifecycle management. For more detailed guidance, see the AWS Organizations documentation.

From development to production: A practical use case

Let’s walk through a typical scenario where Model Copy and Model Share can be used to streamline the process of moving a custom model from development to production.

Step 1: Model development (development account)

In the development account, data scientists fine-tune a model on Amazon Bedrock. The process typically involves:

- Experimenting with different FMs

- Performing prompt engineering

- Fine-tuning the selected model with domain-specific data

- Evaluating model performance on the specific task

- Applying Amazon Bedrock Guardrails to make sure that the model meets ethical and regulatory standards

The example in this walkthrough is an Amazon Titan Text Express model fine-tuned in the US East (N. Virginia) Region (us-east-1).
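
A fine-tuning job of this kind could be started programmatically as sketched here. The function names are hypothetical, and the job name, role ARN, S3 URIs, base model identifier, and hyperparameter values that a caller supplies are placeholders, not values from this post. Confirm the exact base model identifier and the supported hyperparameters for your model in the Amazon Bedrock documentation.

```python
def build_fine_tuning_request(job_name, custom_model_name, role_arn,
                              base_model_id, training_s3_uri, output_s3_uri,
                              hyperparameters):
    """Assemble the request parameters for a fine-tuning job."""
    return {
        "jobName": job_name,
        "customModelName": custom_model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "customizationType": "FINE_TUNING",
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        # The API expects hyperparameter values as strings.
        "hyperParameters": {k: str(v) for k, v in hyperparameters.items()},
    }


def start_fine_tuning(bedrock_client, request):
    """Submit the job with a boto3 `bedrock` client and return the job ARN."""
    return bedrock_client.create_model_customization_job(**request)["jobArn"]
```

In the development account, the client would be created with `boto3.client("bedrock", region_name="us-east-1")` to match the Region used in this walkthrough.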

Step 2: Model evaluation and selection

After the model is fine-tuned, the development team evaluates its performance and decides if it’s ready for production use.

Step 3: Model sharing (development to production account)

After the model is approved for production use, the development team uses Model Share to make it available to the production account. Remember, this step is only applicable for fine-tuned models created within Amazon Bedrock, not for custom models imported using custom model import.

Step 4: Model Copy (production account)

The production team, now with access to the shared model, must first copy the model to their desired Region before they can use it. This step is necessary even for shared models, because sharing alone doesn’t make the model usable in the target account.

Step 5: Production deployment

Finally, after the model has been successfully copied, the production team can purchase provisioned throughput and set up the necessary infrastructure for inference.
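
The provisioned throughput purchase could look like the following sketch. It assumes a boto3 `bedrock` client in the Region that holds the copied model; the function name and the default of one model unit are illustrative choices, not recommendations.

```python
def purchase_provisioned_throughput(bedrock_client, model_arn, name,
                                    model_units=1, commitment=None):
    """Purchase provisioned throughput for a copied model and return its ARN."""
    params = {
        "modelUnits": model_units,
        "provisionedModelName": name,
        "modelId": model_arn,
    }
    if commitment is not None:
        # For example "OneMonth" or "SixMonths"; omit for no commitment term.
        params["commitmentDuration"] = commitment
    response = bedrock_client.create_provisioned_model_throughput(**params)
    return response["provisionedModelArn"]
```

The returned ARN is then used as the model identifier for inference requests. Review the Amazon Bedrock pricing page before choosing the number of model units and a commitment term, because provisioned throughput is billed for as long as it exists.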

Conclusion

Amazon Bedrock Model Copy and Model Share features provide a powerful option for managing the lifecycle of an AI application from development to production. These features enable organizations to:

- Streamline the transition from experimentation to deployment

- Enhance collaboration between development and production teams

- Optimize model performance and availability on a global scale

- Maintain security and compliance throughout the model lifecycle

As the field of AI continues to evolve, these tools are crucial for organizations to stay agile, efficient, and competitive. Remember, the journey from development to production is iterative, requiring continuous monitoring, evaluation, and refinement of models to maintain ongoing effectiveness and alignment with business needs.

By implementing the best practices and considerations outlined in this post, you can create a robust, secure, and efficient workflow for managing your AI models across different environments and Regions. This approach will accelerate your AI development process and maximize the value of your investments in model customization and fine-tuning. With the features provided by Amazon Bedrock, you’re well-equipped to navigate the complexities of AI model management and deployment successfully.

About the Authors

Ishan Singh is a Generative AI Data Scientist at Amazon Web Services, where he helps customers build innovative and responsible generative AI solutions and products. With a strong background in AI/ML, Ishan specializes in building Generative AI solutions that drive business value. Outside of work, he enjoys playing volleyball, exploring local bike trails, and spending time with his wife and dog, Beau.

Neeraj Lamba is a Cloud Infrastructure Architect with Amazon Web Services (AWS) Worldwide Public Sector Professional Services. He helps customers transform their business by helping design their cloud solutions and offering technical guidance. Outside of work, he likes to travel, play tennis, and experiment with new technologies.
