In natural language processing (NLP), RL methods such as reinforcement learning from human feedback (RLHF) have been used to improve model outputs by optimizing responses against feedback signals. A specific variant, reinforcement learning with verifiable rewards (RLVR), extends this approach by using automatically checkable signals, such as mathematical correctness or syntactic features, as feedback, which makes large-scale tuning of language models practical. RLVR is especially interesting because it promises to enhance models’ reasoning abilities without extensive human supervision. This intersection of automated feedback and reasoning tasks is an active area of research, where developers aim to uncover how models can learn to reason mathematically, logically, or structurally using limited supervision.

A persistent challenge in machine learning is building models that can reason effectively under minimal or imperfect supervision. In tasks like mathematical problem-solving, where the correct answer might not be immediately available, researchers grapple with how to guide a model’s learning. Models often learn from ground-truth data, but it’s impractical to label vast datasets with perfect accuracy, particularly in reasoning tasks that require understanding complex structures like proofs or programmatic steps. Consequently, there’s an open question about whether models can learn to reason if they are exposed to noisy, misleading, or even incorrect signals during training. This issue is significant because models that overly rely on perfect feedback may not generalize well when such supervision is unavailable, thereby limiting their utility in real-world scenarios.

Several existing techniques aim to enhance models’ reasoning abilities through reinforcement learning (RL), with RLVR being a key focus. Traditionally, RLVR has used “ground truth” labels, correct answers verified by humans or automated tools, to provide rewards during training. Some approaches have relaxed this requirement by using majority vote labels or simple format-based heuristics, such as rewarding answers that follow a specific output style. Other methods have experimented with random rewards, offering positive signals without considering the correctness of the answer. These methods aim to explore whether models can learn even with minimal guidance, but they mostly concentrate on specific models, such as Qwen, raising concerns about generalizability across different architectures.
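The two relaxed labeling schemes described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code used in any of the cited work: the helper names (`extract_answer`, `majority_vote_label`, `format_reward`) are hypothetical, and the output style is assumed to be a LaTeX `\boxed{...}` expression without nested braces.

```python
import re
from collections import Counter
from typing import Optional

# Assumed output style: a LaTeX \boxed{...} expression with no nested braces.
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")

def extract_answer(response: str) -> Optional[str]:
    """Return the content of the last boxed expression in a response, if any."""
    matches = BOXED.findall(response)
    return matches[-1].strip() if matches else None

def majority_vote_label(responses: list) -> Optional[str]:
    """Pseudo-label a prompt with the most common answer among sampled responses."""
    answers = [a for a in map(extract_answer, responses) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]

def format_reward(response: str) -> float:
    """Reward any response that contains a boxed expression, right or wrong."""
    return 1.0 if extract_answer(response) is not None else 0.0

# Toy responses, invented purely for illustration.
samples = [
    r"so x = \boxed{4}",
    r"thus \boxed{4}",
    r"we get \boxed{5}",
    "no final answer",
]
print(majority_vote_label(samples))
print([format_reward(s) for s in samples])
```

Neither function ever consults a human-verified answer: the majority-vote label comes from the model's own samples, and the format reward looks only at the shape of the output.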

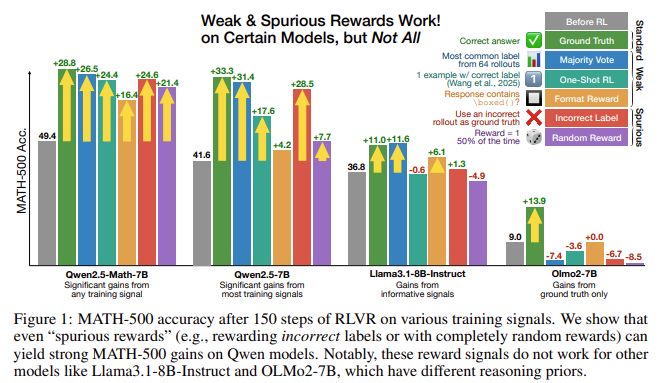

Researchers from the University of Washington, the Allen Institute for AI, and UC Berkeley investigate this question by testing various reward signals on Qwen2.5-Math, a family of large language models fine-tuned for mathematical reasoning. They tested ground-truth rewards, majority-vote rewards, format rewards based on boxed expressions, random rewards, and incorrect rewards. Remarkably, they observed that even completely spurious signals, like random rewards and rewards for wrong answers, could lead to substantial performance gains in Qwen models. For example, Qwen2.5-Math-7B improved by 28.8% on MATH-500 when trained with ground-truth rewards, and by 24.6% when trained with incorrect labels. Majority-vote rewards provided a 26.5% gain, random rewards a 21.4% gain, and format rewards a 16.4% gain. These improvements were not limited to a single model size: Qwen2.5-Math-1.5B also showed strong gains, with format rewards boosting accuracy by 17.6% and incorrect labels by 24.4%. However, the same reward strategies failed to deliver similar benefits on other model families, such as Llama3 and OLMo2, which showed minimal or negative changes when trained with spurious rewards. For instance, Llama3.1-8B saw performance drops of up to 8.5% under certain spurious signals, highlighting the model-specific nature of the observed improvements.
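To make the difference between an informative and a spurious signal concrete, here is a hedged sketch of three binary rewards: ground-truth, incorrect, and random. The function names, the exact-match comparison, and the reward probability `p = 0.5` are all assumptions made for illustration; the article does not specify how the study implemented them.

```python
import random

def ground_truth_reward(answer: str, label: str) -> float:
    """Reward 1.0 only when the answer matches the verified label."""
    return 1.0 if answer == label else 0.0

def incorrect_reward(answer: str, wrong_label: str) -> float:
    """Same check, but against a label that is known to be wrong (assumption)."""
    return 1.0 if answer == wrong_label else 0.0

def random_reward(rng: random.Random, p: float = 0.5) -> float:
    """Reward 1.0 with probability p, ignoring the response entirely."""
    return 1.0 if rng.random() < p else 0.0

rng = random.Random(0)  # fixed seed so the sketch is reproducible
draws = [random_reward(rng) for _ in range(1000)]
print(ground_truth_reward("4", "4"), incorrect_reward("4", "5"))
print(sum(draws) / len(draws))  # close to p, independent of any answer
```

The random reward carries no information about the response at all, which is what makes the gains reported for Qwen2.5-Math under this signal so surprising.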

The research team’s approach involved using RLVR training to fine-tune models with these varied reward signals, replacing the need for ground-truth supervision with heuristic or randomized feedback. They found that Qwen models, even without access to correct answers, could still learn to produce high-quality reasoning outputs. A key insight was that Qwen models tended to exhibit a distinct behavior called “code reasoning”, generating math solutions structured like code, particularly in Python-like formats, regardless of whether the reward signal was meaningful. This code reasoning tendency became more frequent over training, rising from 66.7% to over 90% in Qwen2.5-Math-7B when trained with spurious rewards. Answers that included code reasoning showed higher accuracy rates, often around 64%, compared to just 29% for answers without such reasoning patterns. These patterns emerged consistently, suggesting that spurious rewards may unlock latent capabilities learned during pretraining rather than introducing new reasoning skills.
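The two statistics quoted above, how often code reasoning appears and how accuracy differs with and without it, could be measured along the following lines. This is a sketch only: the substring markers in `CODE_MARKERS` are a hypothetical detection rule (the article does not say how code reasoning was identified), and the toy samples are invented.

```python
from dataclasses import dataclass
from math import nan

# Hypothetical markers: the study's actual detection rule is not given here.
CODE_MARKERS = ("def ", "import ", "print(")

@dataclass
class Sample:
    response: str  # model output for one problem
    correct: bool  # whether the final answer was right

def uses_code(response: str) -> bool:
    """Flag a response as code reasoning if it contains any marker."""
    return any(marker in response for marker in CODE_MARKERS)

def accuracy(group: list) -> float:
    """Fraction of correct samples, or NaN for an empty group."""
    return sum(s.correct for s in group) / len(group) if group else nan

def code_reasoning_stats(samples: list) -> dict:
    with_code = [s for s in samples if uses_code(s.response)]
    without_code = [s for s in samples if not uses_code(s.response)]
    return {
        "code_frequency": len(with_code) / len(samples) if samples else nan,
        "accuracy_with_code": accuracy(with_code),
        "accuracy_without_code": accuracy(without_code),
    }

# Toy data, invented purely for illustration.
toy = [
    Sample("import math\nprint(math.gcd(12, 18))", True),
    Sample("def f(x): return x + 1\nprint(f(3))", True),
    Sample("By inspection the answer is 7.", False),
    Sample("Adding the terms gives 10.", True),
]
stats = code_reasoning_stats(toy)
print(stats)
```

Tracking `code_frequency` across training checkpoints would show whether a reward signal is shifting the model toward a behavior it already had, rather than teaching it something new.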

Performance data underscored the surprising robustness of Qwen models. Gains from random rewards (21.4% on MATH-500) and incorrect labels (24.6%) came close to the ground-truth reward gain of 28.8%. Similar trends appeared on other benchmarks, such as AMC, where format, wrong, and random rewards produced around an 18% improvement, compared with the 25% improvement from ground-truth or majority-vote rewards. Even on AIME2024, spurious rewards like format (+13.0%), incorrect (+8.7%), and random (+6.3%) led to meaningful gains, though the advantage of ground-truth labels (+12.8%) remained evident, particularly for AIME2025 questions created after model pretraining cutoffs.
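One way to read the MATH-500 numbers is as the share of the ground-truth gain that each alternative reward recovers. The short calculation below uses only the figures reported in this article for Qwen2.5-Math-7B; the dictionary keys are just illustrative labels.

```python
# Reported MATH-500 gains for Qwen2.5-Math-7B, taken from the article.
gains = {
    "ground_truth": 28.8,
    "majority_vote": 26.5,
    "incorrect": 24.6,
    "random": 21.4,
    "format": 16.4,
}

# Fraction of the ground-truth gain recovered by each reward type.
recovered = {name: gain / gains["ground_truth"] for name, gain in gains.items()}

for name, share in recovered.items():
    print(f"{name:>13}: {share:.1%} of the ground-truth gain")
```

By this measure, incorrect labels recover roughly 85% of the ground-truth gain and random rewards roughly 74%.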

Key takeaways from the research:

- Qwen2.5-Math-7B gained 28.8% accuracy on MATH-500 with ground-truth rewards, but also 24.6% with incorrect rewards, 21.4% with random rewards, 16.4% with format rewards, and 26.5% with majority-vote rewards.

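- Code reasoning patterns became more frequent in Qwen2.5-Math-7B, rising from 66.7% to over 90% of responses under RLVR with spurious rewards; answers with code reasoning were about 64% accurate, compared to 29% for answers without it.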

- Non-Qwen models, such as Llama3 and OLMo2, did not show similar improvements, with Llama3.1-8B experiencing up to 8.5% performance drops on spurious rewards.

- Gains from spurious signals appeared within 50 training steps in many cases, suggesting rapid elicitation of reasoning abilities.

- The research warns that RLVR studies should avoid generalizing results based on Qwen models alone, as spurious reward effectiveness is not universal.

In conclusion, these findings suggest that while Qwen models can leverage spurious signals to improve performance, the same is not true for other model families. Non-Qwen models, such as Llama3 and OLMo2, showed flat or negative performance changes when trained with spurious signals. The research emphasizes the importance of validating RLVR methods on diverse models rather than relying solely on Qwen-centric results, as many recent papers have done.


The post Incorrect Answers Improve Math Reasoning? Reinforcement Learning with Verifiable Rewards (RLVR) Surprises with Qwen2.5-Math appeared first on MarkTechPost.
