Multi-modal large language models (MLLMs) have made great progress as versatile AI assistants capable of handling diverse visual tasks. However, deploying them as isolated digital entities limits their potential impact. The growing demand to integrate MLLMs into real-world applications such as robotics and autonomous vehicles requires complex spatial understanding. Current MLLMs show fundamental spatial reasoning deficiencies, often failing at basic tasks such as distinguishing left from right. Previous research attributes these limitations to insufficient specialized training data and addresses them by incorporating spatial data during training. However, these approaches focus on single-image scenarios, restricting the model’s perception to static field-of-view analysis without dynamic information.

Several lines of research have tried to address the spatial understanding limitations of MLLMs. MLLMs incorporate image encoders that convert visual inputs into tokens, which are processed alongside text in the language model’s latent space. Previous research has focused on single-image spatial understanding, evaluating inter-object spatial relations or spatial recognition. Some benchmarks, such as BLINK, UniQA-3D, and VSIBench, extend beyond single images. Existing efforts to improve the spatial understanding of MLLMs include SpatialVLM, which fine-tunes models on curated spatial datasets; SpatialRGPT, which incorporates mask-based references and depth images; and SpatialPIN, which utilizes specialized perception models without fine-tuning.

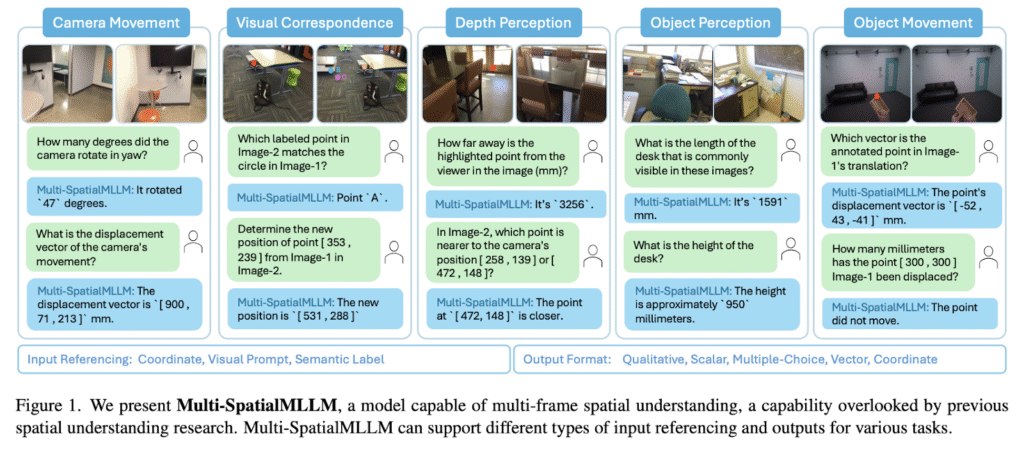

Researchers from FAIR Meta and the Chinese University of Hong Kong have proposed a framework to enhance MLLMs with robust multi-frame spatial understanding. The framework integrates three components (depth perception, visual correspondence, and dynamic perception) to overcome the limitations of static single-image analysis. The researchers developed MultiSPA, a novel large-scale dataset containing over 27 million samples spanning diverse 3D and 4D scenes. Five tasks are introduced to generate the training data: depth perception, visual correspondence, camera movement perception, object movement perception, and object size perception. The resulting Multi-SpatialMLLM model achieves significant improvements over baselines and proprietary systems, with scalable and generalizable multi-frame reasoning.

Multi-SpatialMLLM centers on the MultiSPA data generation pipeline and a comprehensive benchmark. The data follows the standard MLLM fine-tuning format of QA pairs: User: <image>…<image>{description}{question} and Assistant: {answer}. The researchers used GPT-4o to generate diverse templates for task descriptions, questions, and answers. The pipeline draws on high-quality annotated scene datasets, including the 4D datasets Aria Digital Twin and Panoptic Studio, along with 3D tracking annotations from TAPVid3D, for object movement perception, and ScanNet for the other spatial tasks. MultiSPA contains over 27M QA samples generated from 1.1M unique images, with 300 samples held out for the evaluation of each subtask, totaling 7,800 benchmark samples.
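The QA format quoted above can be illustrated with a minimal Python sketch. This is not the authors' pipeline: the `QASample` class, the `build_sample` helper, and the example description, question, and answer are all hypothetical, and the article does not say whether separators sit between the image placeholders and the text, so plain concatenation is assumed. The last lines check the benchmark arithmetic: 7,800 held-out samples at 300 per subtask implies 26 subtasks, assuming every subtask holds out exactly 300.

```python
from dataclasses import dataclass

# Placeholder string taken from the format quoted in the article.
IMAGE_TOKEN = "<image>"


@dataclass
class QASample:
    """One fine-tuning example: a user turn and the assistant's answer."""
    user: str
    assistant: str


def build_sample(num_frames: int, description: str, question: str, answer: str) -> QASample:
    """Assemble a QA pair as <image>...<image>{description}{question} / {answer}.

    Assumption: the pieces are concatenated directly, with no separators.
    """
    if num_frames < 1:
        raise ValueError("a multi-frame sample needs at least one image")
    user_turn = IMAGE_TOKEN * num_frames + description + question
    return QASample(user=user_turn, assistant=answer)


# Hypothetical template text, for illustration only.
sample = build_sample(
    num_frames=2,
    description="These two frames show the same scene. ",
    question="Did the camera move left or right between them?",
    answer="left",
)

# Benchmark arithmetic from the figures in the article.
SAMPLES_PER_SUBTASK = 300
TOTAL_BENCHMARK_SAMPLES = 7_800
num_subtasks = TOTAL_BENCHMARK_SAMPLES // SAMPLES_PER_SUBTASK

print(sample.user)
print(sample.assistant)
print(num_subtasks)
```

Keeping the frame count as a parameter reflects the multi-frame setting: the same helper covers two-frame correspondence questions and longer frame sequences without changing the layout of the text.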

On the MultiSPA benchmark, Multi-SpatialMLLM achieves an average 36% gain over base models, reaching 80-90% accuracy on qualitative tasks compared to 50% for baseline models, while outperforming all proprietary systems. Even on challenging tasks such as predicting camera movement vectors, it attains 18% accuracy versus near-zero performance from other baselines. On the BLINK benchmark, Multi-SpatialMLLM achieves nearly 90% accuracy, an average 26.4% improvement over base models, surpassing several proprietary systems and showing that its multi-frame spatial understanding transfers. Evaluations on standard VQA benchmarks show rough parity with the base model’s original performance, indicating that the model maintains general-purpose MLLM proficiency without overfitting to spatial reasoning tasks.

In this paper, the researchers extend MLLMs’ spatial understanding to multi-frame scenarios, addressing a critical gap overlooked in previous investigations. They introduce MultiSPA, the first large-scale dataset and benchmark for multi-frame spatial reasoning tasks. Experimental validation shows the effectiveness, scalability, and strong generalization capabilities of the proposed Multi-SpatialMLLM across diverse spatial understanding challenges. The research also reveals significant insights, including the benefits of multi-task learning and emergent behaviors in complex spatial reasoning. The model enables new applications as well, including acting as a multi-frame reward annotator.

Check out the Paper, Project Page and GitHub Page. All credit for this research goes to the researchers of this project.

The post Meta AI Introduces Multi-SpatialMLLM: A Multi-Frame Spatial Understanding with Multi-modal Large Language Models appeared first on MarkTechPost.
