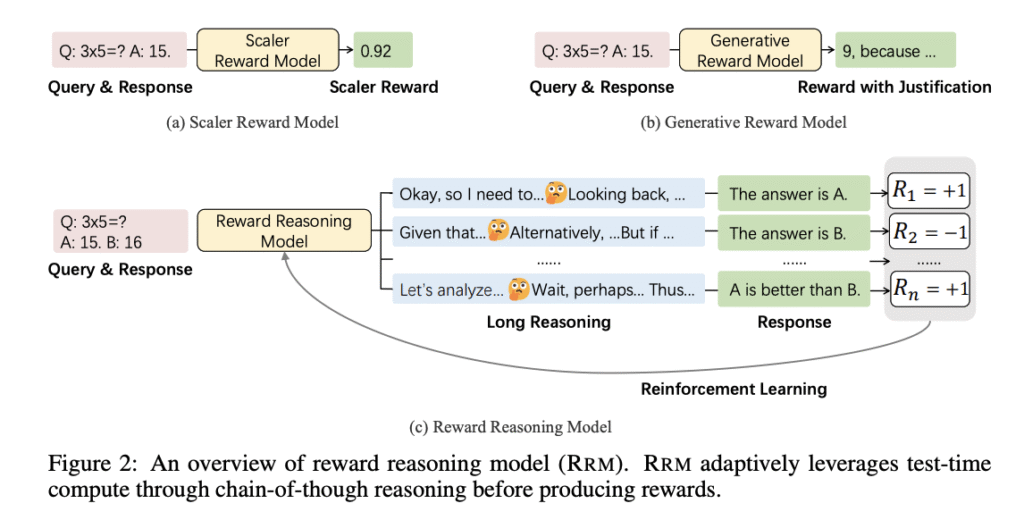

Reinforcement learning (RL) has become a core approach in LLM post-training, using supervision signals from human feedback (RLHF) or verifiable rewards (RLVR). While RLVR shows promise in mathematical reasoning, it depends on training queries with verifiable answers. This requirement makes it hard to apply RLVR to large-scale training on general-domain queries, where verification is intractable. Further, current reward models, whether scalar or generative, cannot effectively scale test-time compute for reward estimation. Existing approaches spend the same computational resources on every input and cannot allocate additional resources to challenging queries that require nuanced analysis.

Reward models are characterized by their formulation strategy and their scoring scheme. Numeric approaches assign scalar scores to query-response pairs, while generative methods produce natural language feedback. Scoring is either absolute, evaluating each query-response pair on its own, or discriminative, comparing candidate responses against each other. Generative reward models, aligned with the LLM-as-a-Judge paradigm, offer interpretable feedback but face reliability concerns due to biased judgments. Inference-time scaling methods dynamically adjust computational resources, through parallel strategies such as multi-sampling or through horizon-based scaling that extends reasoning traces. However, these methods do not adapt systematically to input complexity, which limits their effectiveness across diverse query types.

Researchers from Microsoft Research, Tsinghua University, and Peking University have proposed Reward Reasoning Models (RRMs), which perform explicit reasoning before producing final rewards. This reasoning phase allows RRMs to adaptively allocate additional computational resources when evaluating responses to complex tasks. RRMs introduce a new dimension for enhancing reward modeling: scaling test-time compute while maintaining general applicability across diverse evaluation scenarios. Through chain-of-thought reasoning, RRMs spend additional test-time compute on complex queries where the appropriate reward is not immediately apparent. The training framework encourages RRMs to self-evolve reward reasoning capabilities without explicit reasoning traces as training data.

RRMs use the Qwen2 model with a Transformer-decoder backbone and formulate reward modeling as text completion: the RRM autoregressively generates a thinking process followed by a final judgment. Each input contains a query and two responses, and the model must state which response it prefers, with no ties allowed. Researchers use the RewardBench repository to guide systematic analysis across evaluation criteria, including instruction fidelity, helpfulness, accuracy, harmlessness, and detail level. RRMs support multi-response evaluation through Elo rating systems and knockout tournaments, both of which can be combined with majority voting to make better use of test-time compute. With majority voting, the RRM is sampled multiple times for each pairwise comparison, and the most frequent verdict is taken as the comparison result.
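The multi-response strategies described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the `Judge` signature, the helper names, the Elo constants (`k_factor=32`, base rating 1000), and the toy `length_judge` stand-in are all assumptions made for the example. In practice the judge would call an RRM and parse its final judgment.

```python
from collections import Counter
from typing import Callable, List, Sequence

# Hypothetical judge interface: returns 0 if the first response is preferred,
# 1 if the second is preferred. A real judge would sample an RRM.
Judge = Callable[[str, str, str], int]


def majority_vote(judge: Judge, query: str, a: str, b: str, k: int = 5) -> int:
    """Sample the judge k times (k odd, so no ties) and return the majority verdict."""
    if k % 2 == 0:
        raise ValueError("k must be odd to avoid tied votes")
    votes = Counter(judge(query, a, b) for _ in range(k))
    return 0 if votes[0] > votes[1] else 1


def knockout(judge: Judge, query: str, responses: Sequence[str], k: int = 5) -> str:
    """Pair candidates off round by round until a single winner remains."""
    if not responses:
        raise ValueError("need at least one response")
    pool = list(responses)
    while len(pool) > 1:
        next_round = []
        for i in range(0, len(pool) - 1, 2):
            a, b = pool[i], pool[i + 1]
            next_round.append(a if majority_vote(judge, query, a, b, k) == 0 else b)
        if len(pool) % 2 == 1:
            next_round.append(pool[-1])  # unpaired candidate gets a bye
        pool = next_round
    return pool[0]


def elo_ratings(judge: Judge, query: str, responses: Sequence[str],
                k: int = 5, k_factor: float = 32.0, base: float = 1000.0) -> List[float]:
    """Round-robin over all pairs, updating ratings with the standard Elo rule."""
    ratings = [base] * len(responses)
    for i in range(len(responses)):
        for j in range(i + 1, len(responses)):
            winner = majority_vote(judge, query, responses[i], responses[j], k)
            expected_i = 1.0 / (1.0 + 10 ** ((ratings[j] - ratings[i]) / 400.0))
            score_i = 1.0 if winner == 0 else 0.0
            delta = k_factor * (score_i - expected_i)
            ratings[i] += delta
            ratings[j] -= delta
    return ratings


def length_judge(query: str, a: str, b: str) -> int:
    """Toy deterministic stand-in for an RRM: prefers the longer response."""
    return 0 if len(a) >= len(b) else 1
```

A knockout tournament needs N - 1 pairwise comparisons for N candidates, while a full round-robin for Elo ratings needs N(N - 1)/2; majority voting multiplies either cost by the number of samples `k`.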

Evaluation results show that RRMs achieve competitive performance against strong baselines on the RewardBench and PandaLM Test benchmarks, with RRM-32B attaining 98.6% accuracy in reasoning categories. A comparison with DirectJudge models trained on identical data reveals substantial performance gaps, indicating that RRMs effectively use test-time compute for complex queries. In reward-guided best-of-N inference, RRMs surpass all baseline models even without additional test-time compute, and majority voting provides substantial further improvements across the evaluated subsets. Post-training experiments show steady downstream performance improvements on MMLU-Pro and GPQA. Scaling experiments across 7B, 14B, and 32B models confirm that longer thinking horizons consistently improve accuracy.

In conclusion, the researchers introduced RRMs, which reason explicitly before assigning a reward, to address the computational inflexibility of existing reward modeling approaches. Rule-based-reward RL enables RRMs to develop complex reasoning capabilities without requiring explicit reasoning traces as supervision. RRMs efficiently utilize test-time compute through parallel and sequential scaling approaches. Their effectiveness in practical applications, including reward-guided best-of-N inference and post-training feedback, demonstrates their potential as strong alternatives to traditional scalar reward models in alignment techniques.
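To make the idea of a rule-based reward concrete, here is a minimal sketch of how such a reward could be computed for a pairwise judgment. The article does not spell out the rule, so everything specific here is an assumption for illustration: the `\boxed{Assistant N}` verdict format, the +1/-1 reward values, and the function names.

```python
import re
from typing import Optional

# Assumed verdict format: the completion ends its reasoning with
# "\boxed{Assistant 1}" or "\boxed{Assistant 2}".
_VERDICT = re.compile(r"\\boxed\{Assistant ([12])\}")


def parse_verdict(completion: str) -> Optional[int]:
    """Return the last stated preference (1 or 2), or None if no verdict is found."""
    matches = _VERDICT.findall(completion)
    return int(matches[-1]) if matches else None


def rule_based_reward(completion: str, preferred: int) -> float:
    """Assumed rule: +1 if the stated preference matches the label, -1 otherwise.

    Only the final verdict is checked; the reasoning text is never scored,
    so no reference reasoning traces are needed as supervision.
    """
    return 1.0 if parse_verdict(completion) == preferred else -1.0
```

Because the reward depends only on the final verdict, the model is free to discover whatever reasoning leads to correct preferences.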

Check out the Paper and Models on Hugging Face. All credit for this research goes to the researchers of this project.

The post Can LLMs Really Judge with Reasoning? Microsoft and Tsinghua Researchers Introduce Reward Reasoning Models to Dynamically Scale Test-Time Compute for Better Alignment appeared first on MarkTechPost.
