Neural networks have long been powerful tools for handling complex data-driven tasks. Still, they often struggle to make discrete decisions under strict constraints, like routing vehicles or scheduling jobs. These discrete decision problems, commonly found in operations research, are computationally intensive and difficult to integrate into the smooth, continuous frameworks of neural networks. Such challenges limit the ability to combine learning-based models with combinatorial reasoning, creating a bottleneck in applications that demand both.

A major issue arises when integrating discrete combinatorial solvers with gradient-based learning systems. Many combinatorial problems are NP-hard: no polynomial-time algorithm is known for solving them exactly, so exact solutions become impractical for large instances. Existing strategies often depend on exact solvers or introduce continuous relaxations, whose solutions may violate the hard constraints of the original problem. These approaches are typically computationally expensive, and when exact oracles are unavailable, they fail to deliver consistent gradients for learning. This creates a gap where neural networks can learn representations but cannot reliably make complex, structured decisions in a way that scales.

Commonly used methods rely on exact solvers for structured inference tasks, such as MAP solvers in graphical models or linear programming relaxations. These methods often require repeated oracle calls during each training iteration and depend on specific problem formulations. Techniques like Fenchel-Young losses or perturbation-based methods allow approximate learning, but their guarantees break down when used with inexact solvers like local search heuristics. This reliance on exact solutions hinders their practical use in large-scale, real-world combinatorial tasks, such as vehicle routing with dynamic requests and time windows.
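To make the oracle dependence concrete, here is a minimal sketch of a perturbation-based estimate on a toy problem. Everything in it is an illustrative assumption rather than anything from the paper: the problem (pick exactly `k` items), the names `top_k_oracle` and `perturbed_solution`, and the Gaussian noise. The point is only that each Monte Carlo sample costs one call to an exact solver.

```python
import random

def top_k_oracle(theta, k):
    """Exact solver for max <theta, y> over 0/1 vectors with exactly k ones."""
    ranked = sorted(range(len(theta)), key=lambda i: theta[i], reverse=True)
    chosen = set(ranked[:k])
    return [1.0 if i in chosen else 0.0 for i in range(len(theta))]

def perturbed_solution(theta, k, sigma=1.0, n_samples=100, rng=None):
    """Monte Carlo estimate of E[oracle(theta + sigma * Z)] with Gaussian Z."""
    rng = rng or random.Random(0)
    mean = [0.0] * len(theta)
    for _ in range(n_samples):
        noisy = [t + sigma * rng.gauss(0.0, 1.0) for t in theta]
        y = top_k_oracle(noisy, k)  # one exact oracle call per sample
        mean = [m + v / n_samples for m, v in zip(mean, y)]
    return mean

smoothed = perturbed_solution([5.0, 0.0, -5.0], k=1)
```

If `top_k_oracle` were swapped for a heuristic that can return suboptimal solutions, the average would no longer estimate the intended smoothed solution, which is the failure mode described above.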

Researchers from Google DeepMind and ENPC propose turning local search heuristics into differentiable combinatorial layers through the lens of Markov chain Monte Carlo (MCMC) methods. Drawing on the connection between simulated annealing and the Metropolis-Hastings algorithm, they build MCMC layers that operate on discrete combinatorial spaces by mapping problem-specific neighborhood systems into proposal distributions. This design lets neural networks use local search heuristics as part of the learning pipeline without access to exact solvers. Gradient-based learning over discrete solutions becomes possible because the acceptance rules correct for the bias introduced by approximate solvers, which keeps the method theoretically sound while reducing the computational burden.
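The idea of using a neighborhood system as a proposal distribution can be sketched on a toy problem. The code below is not the authors' implementation: the feasible set (0/1 vectors with at most `capacity` ones), the single-bit-flip neighborhood, and the names `neighbors`, `score`, and `mcmc_layer` are all assumptions chosen for illustration. It runs Metropolis-Hastings with target distribution proportional to `exp(score / temperature)` and includes the Hastings correction for neighborhoods of different sizes.

```python
import math
import random

def neighbors(y, capacity):
    """Feasible single-bit flips of y (a tuple of 0/1 with at most `capacity` ones)."""
    result = []
    for i in range(len(y)):
        flipped = y[:i] + (1 - y[i],) + y[i + 1:]
        if sum(flipped) <= capacity:
            result.append(flipped)
    return result

def score(theta, y):
    """Linear objective <theta, y>."""
    return sum(t * v for t, v in zip(theta, y))

def mcmc_layer(theta, y0, capacity, n_steps, temperature=1.0, rng=None):
    """Return the visited states and their average (an estimate of E[Y])."""
    rng = rng or random.Random(0)
    y, visited = y0, []
    for _ in range(n_steps):
        forward = neighbors(y, capacity)
        proposal = rng.choice(forward)  # uniform proposal over the neighborhood
        backward = neighbors(proposal, capacity)
        log_ratio = (score(theta, proposal) - score(theta, y)) / temperature
        # Hastings correction: neighborhoods may differ in size.
        log_ratio += math.log(len(forward)) - math.log(len(backward))
        if rng.random() < math.exp(min(0.0, log_ratio)):
            y = proposal
        visited.append(y)
    mean = [sum(s[i] for s in visited) / n_steps for i in range(len(theta))]
    return visited, mean

visited, mean = mcmc_layer((3.0, -3.0, 0.0), (0, 0, 0), capacity=2, n_steps=5000)
```

Every proposed state is feasible by construction, so the chain never leaves the constraint set. A relaxation-based layer does not give that guarantee.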

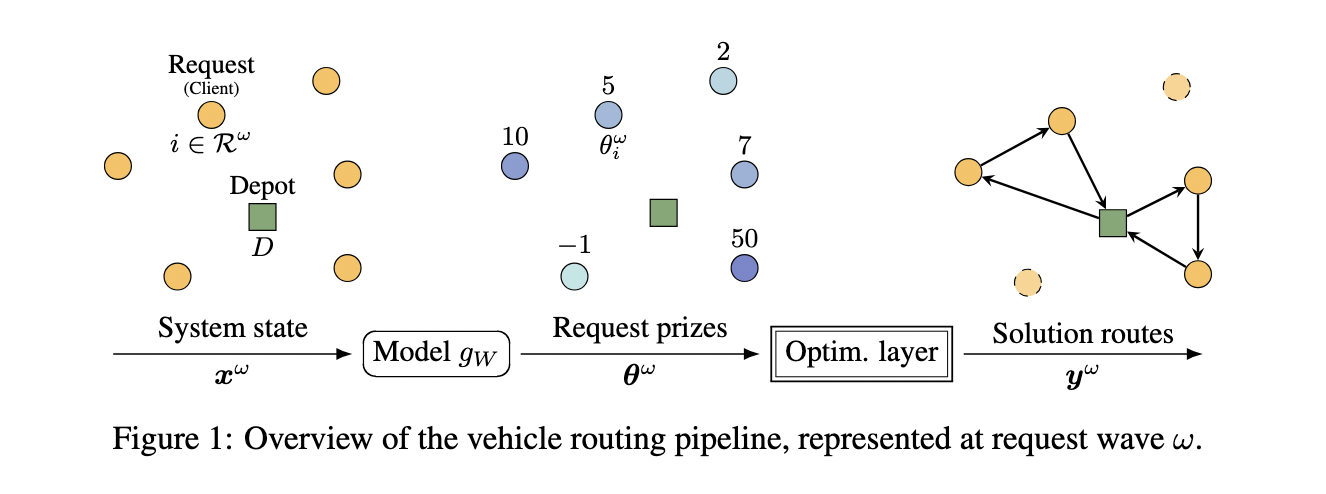

In more detail, the researchers construct a framework where local search heuristics propose neighbor solutions based on the problem structure, and the acceptance rules from MCMC methods ensure these moves form a valid sampling process over the solution space. The resulting MCMC layer approximates the target distribution over feasible solutions. Even with a single MCMC iteration, the gradient estimate it produces is an unbiased gradient of a target-dependent Fenchel-Young loss. Learning therefore remains possible with very few MCMC iterations, for example a single sample per forward pass, while theoretical convergence properties are retained. By embedding this layer in a neural network, they can train models that predict parameters for combinatorial problems and improve solution quality over time.
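A rough sketch of how such a gradient estimate can drive learning is shown below. It assumes the standard Fenchel-Young gradient form, the expected solution under the model minus the target solution, and estimates the expectation from a chain started at the target. The toy state space (choosing one of four items), the step size, and the names `mh_samples` and `fy_gradient_estimate` are illustrative assumptions; in a real pipeline `theta` would be the output of a neural network and the gradient would be backpropagated through it.

```python
import math
import random

def mh_samples(theta, start, n_steps, rng):
    """Metropolis-Hastings over item indices; target proportional to exp(theta[i])."""
    current, visited = start, []
    for _ in range(n_steps):
        proposal = rng.randrange(len(theta) - 1)
        if proposal >= current:
            proposal += 1  # uniform over the other items: a symmetric proposal
        if rng.random() < math.exp(min(0.0, theta[proposal] - theta[current])):
            current = proposal
        visited.append(current)
    return visited

def fy_gradient_estimate(theta, target, n_steps, rng):
    """Estimate E[Y] - y_target (one-hot encoding), chain initialized at the target."""
    grad = [0.0] * len(theta)
    for i in mh_samples(theta, target, n_steps, rng):
        grad[i] += 1.0 / n_steps
    grad[target] -= 1.0
    return grad

rng = random.Random(0)
theta = [0.0, 0.0, 0.0, 0.0]
target = 2
for _ in range(200):
    # A single MCMC iteration per update, as in the low-budget regime.
    grad = fy_gradient_estimate(theta, target, n_steps=1, rng=rng)
    theta = [t - 0.5 * g for t, g in zip(theta, grad)]
```

When the chain stays at the target, the estimated gradient is zero. When it moves, the update raises the target's score and lowers the score of the state the chain moved to, so the model gradually concentrates on the target.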

The research team evaluated this method on a large-scale dynamic vehicle routing problem with time windows, a complex, real-world combinatorial optimization task. They showed that their approach handles large instances efficiently and significantly outperforms perturbation-based methods under limited time budgets. For example, with a heuristic-based initialization, their MCMC layer achieved a test cost 5.9% above the anticipative baseline, while the perturbation-based method achieved 6.3% under the same conditions. The gap widens at extremely low time budgets: with a 1 ms time limit, their method reached 7.8% relative cost versus 65.2% for the perturbation-based approach. They also demonstrated that initializing the MCMC chain with ground-truth solutions or heuristic-enhanced states improved learning efficiency and solution quality, especially with a small number of MCMC iterations.

This research demonstrates a principled way to integrate NP-hard combinatorial problems into neural networks without relying on exact solvers. The problem of combining learning with discrete decision-making is addressed by using MCMC layers constructed from local search heuristics, enabling theoretically sound, efficient training. The proposed method bridges the gap between deep learning and combinatorial optimization, providing a scalable and practical solution for complex tasks like vehicle routing.


The post This AI Paper Introduces Differentiable MCMC Layers: A New AI Framework for Learning with Inexact Combinatorial Solvers in Neural Networks appeared first on MarkTechPost.
