Reasoning capabilities are a fundamental component of AI systems. The introduction of OpenAI o1 sparked significant interest in building reasoning models through large-scale reinforcement learning (RL). The open-sourcing of DeepSeek-R1 empowered the community to develop state-of-the-art reasoning models, but its report omitted critical technical details, including data curation strategies and specific RL training recipes. Without these details, researchers struggled to replicate its success, and efforts fragmented across different model sizes, initial checkpoints (including distilled reasoning models), and target domains such as code and physical AI. None of these efforts has produced a conclusive or consistent training recipe.

Prior work on training language models for reasoning has focused on the math and code domains, mainly through pretraining and supervised fine-tuning. Early RL attempts that relied on domain-specific reward models showed limited gains because of the inherent challenges of mathematical and coding tasks. More recent efforts following DeepSeek-R1's release explore rule-based verification: math problems must produce answers in a specific output format so they can be checked accurately, while code problems are scored using compilation and execution feedback. However, these approaches focus on a single domain rather than handling heterogeneous prompts, evaluate only on a narrow set of benchmarks (AIME and LiveCodeBench), and suffer from training instability that requires techniques such as progressively increasing the response length and mitigating entropy collapse.
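To make the rule-based verification idea concrete, here is a minimal Python sketch of two binary rewards, written under stated assumptions rather than taken from any of the works described here. The math reward extracts the last `\boxed{...}` answer and compares it to a reference after light normalization (the `\boxed{}` convention and the normalization rules are assumptions). The code reward runs a Python program on stdin/stdout test cases and returns 1.0 only if every case passes (all-or-nothing scoring is also an assumption). The names `extract_boxed`, `math_reward`, and `code_reward` are hypothetical.

```python
import re
import subprocess
import sys
from typing import Optional


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a response, or None.

    Assumption: the policy is prompted to put its final answer in \\boxed{}.
    Nested braces such as \\frac{1}{2} are handled by tracking brace depth.
    """
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    depth, chars = 1, []
    for ch in text[start + len("\\boxed{"):]:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(chars).strip()
        chars.append(ch)
    return None  # unbalanced braces: treat the answer as unverifiable


def _normalize(answer: str) -> str:
    # Deliberately light normalization: drop whitespace and surrounding $ signs.
    return re.sub(r"\s+", "", answer).strip("$")


def math_reward(response: str, reference: str) -> float:
    """Binary reward: 1.0 if the boxed answer matches the reference after normalization."""
    pred = extract_boxed(response)
    if pred is None:
        return 0.0  # missing or malformed answer format earns no reward
    return 1.0 if _normalize(pred) == _normalize(reference) else 0.0


def code_reward(program: str, tests: list[tuple[str, str]], timeout: float = 5.0) -> float:
    """Binary reward: 1.0 only if the Python program passes every stdin/stdout test.

    Assumption: all-or-nothing scoring. This sketch does no sandboxing,
    which a real RL pipeline running model-generated code would need.
    """
    for stdin, expected in tests:
        try:
            result = subprocess.run(
                [sys.executable, "-c", program],
                input=stdin,
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # exceeding the time limit counts as a failure
        if result.returncode != 0 or result.stdout.strip() != expected.strip():
            return 0.0
    return 1.0
```

For example, `math_reward(r"so the answer is \boxed{\frac{1}{2}}", r"\frac{1}{2}")` returns 1.0, while a response with no `\boxed{}` gets 0.0 regardless of its content, which is why such verifiers require a specific output format. Production verifiers usually also need symbolic equivalence checks (for instance, treating `1/2` and `0.5` as equal), which this sketch omits.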

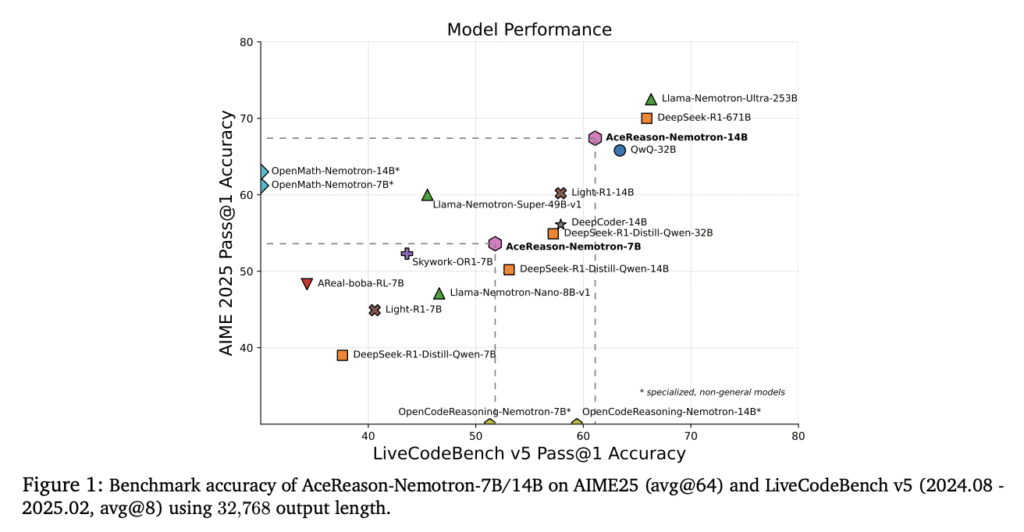

Researchers from NVIDIA demonstrate that large-scale RL can significantly enhance the reasoning capabilities of strong small- and mid-sized models, outperforming state-of-the-art distillation-based approaches. The method employs a simple yet effective sequential training strategy: RL training first on math-only prompts, then on code-only prompts. This reveals that math-only RL not only improves performance on mathematical benchmarks but also improves code reasoning, while extended code-only RL further boosts code performance with minimal degradation in math results. In addition, the researchers develop a robust data curation pipeline that collects challenging prompts with high-quality, verifiable answers and test cases, enabling verification-based RL in both domains.
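The sequential strategy can be pictured as a prompt schedule in which each stage draws only from its own domain and runs to completion before the next one starts. The sketch below is a hypothetical illustration: the `Stage` class, the `sequential_schedule` function, and the step counts are assumptions, not the researchers' code, and the `rl_update` call in the comment stands in for whatever RL algorithm performs the policy update.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class Stage:
    name: str           # e.g. "math-only" or "code-only"
    prompts: list[str]  # prompts from a single domain
    steps: int          # hypothetical number of RL iterations for this stage


def sequential_schedule(stages: list[Stage], batch_size: int) -> Iterator[tuple[str, list[str]]]:
    """Yield (stage_name, batch) pairs, finishing each stage before starting the next.

    Domains are never mixed: every batch in a stage comes only from that stage's
    prompts, cycling through them if the stage runs longer than one pass.
    """
    for stage in stages:
        if not stage.prompts:
            raise ValueError(f"stage {stage.name!r} has no prompts")
        n = len(stage.prompts)
        for step in range(stage.steps):
            start = (step * batch_size) % n
            yield stage.name, [stage.prompts[(start + k) % n] for k in range(batch_size)]


if __name__ == "__main__":
    stages = [
        Stage("math-only", ["m1", "m2", "m3"], steps=2),
        Stage("code-only", ["c1", "c2"], steps=3),
    ]
    for name, batch in sequential_schedule(stages, batch_size=2):
        # In a real pipeline each batch would feed an update such as
        # rl_update(policy, batch), a hypothetical call.
        print(name, batch)
```

The alternative this schedule avoids is mixing math and code prompts in the same batch; the sketch only encodes the ordering and does not by itself reproduce the cross-domain gains reported in the article.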

The method curates separate datasets for math-only RL and code-only RL. For math-only RL, the pipeline merges the DeepScaler and NuminaMath datasets, which cover algebra, combinatorics, number theory, and geometry, and applies 9-gram filtering and strict exclusion rules to remove unsuitable content. DeepSeek-R1 then attempts each question eight times, and only questions whose majority-voted answer is confirmed correct by rule-based verification are retained. The code-only RL dataset is curated from modern competitive programming platforms and includes problems in both function-calling and stdin/stdout formats across a range of algorithmic topics. The researchers filter out incompatible problems, curate comprehensive test cases that cover edge cases, and assign difficulty scores using DeepSeek-R1-671B evaluations, producing 8,520 verified coding problems.
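Two of the math-curation filters can be sketched in a few lines, under loose assumptions. `ngrams` and `overlaps_benchmark` drop any question that shares a whitespace-tokenized 9-gram with a set of reference texts (here assumed to be evaluation benchmarks, a common use of n-gram filtering), and `majority_vote_keep` retains a question only when the most common of eight sampled final answers matches the reference. All function names are hypothetical, and the sampled answers are assumed to be already extracted and normalized, for example by a rule-based verifier.

```python
from collections import Counter


def ngrams(text: str, n: int = 9) -> set[tuple[str, ...]]:
    """Lowercased, whitespace-tokenized n-grams of a text (empty if fewer than n tokens)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlaps_benchmark(question: str, benchmark_ngrams: set[tuple[str, ...]], n: int = 9) -> bool:
    """True if the question shares at least one n-gram with the reference set."""
    return not ngrams(question, n).isdisjoint(benchmark_ngrams)


def majority_vote_keep(sampled_answers: list[str], reference: str, num_samples: int = 8) -> bool:
    """Keep a question only if the most common sampled answer equals the reference.

    Assumption: answers are already extracted and normalized. Ties resolve to the
    answer encountered first, which is how Counter.most_common orders equal counts.
    """
    if len(sampled_answers) != num_samples:
        return False  # assumption: require exactly num_samples attempts
    answer, _ = Counter(sampled_answers).most_common(1)[0]
    return answer == reference


def curate(items: list[dict], benchmark_texts: list[str], n: int = 9) -> list[dict]:
    """Apply the n-gram overlap filter, then the majority-vote filter, to each item."""
    bench = set().union(*(ngrams(t, n) for t in benchmark_texts))
    return [
        item for item in items
        if not overlaps_benchmark(item["question"], bench, n)
        and majority_vote_keep(item["samples"], item["answer"])
    ]
```

Running the cheap n-gram check before the majority vote means the expensive step (sampling eight responses from a large model) only needs to happen for questions that survive the overlap filter, although this sketch takes the samples as given.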

The results show that AceReason-Nemotron-7B improves accuracy over its initial SFT model by 14.5% and 14.6% on AIME 2024 and AIME 2025, respectively, and by 14.2% and 8% on LiveCodeBench v5 and v6. The 14B variant outperforms larger models such as DeepSeek-R1-Distill-Qwen-32B and DeepSeek-R1-Distill-Llama-70B, achieving best-in-class results among open RL-based reasoning models. Compared to state-of-the-art distillation-based models, AceReason-Nemotron-14B outperforms OpenMath-14B/32B by 2.1%/4.4% on the AIME benchmarks and OpenCodeReasoning-14B by 1.7%/0.8% on LiveCodeBench. These results suggest that RL reaches a higher performance ceiling than distillation, while remaining competitive with frontier models such as QwQ-32B and o3-mini.

In this paper, the researchers show that large-scale RL enhances the reasoning capabilities of strong small- and mid-sized SFT models through sequential, domain-specific training. Their approach of performing math-only RL followed by code-only RL reveals that math-only RL significantly boosts performance on both mathematical and coding benchmarks. The data curation pipeline enables verification-based RL across heterogeneous domains by collecting challenging prompts with high-quality, verifiable answers and test cases. The findings show that RL pushes the limits of the model's reasoning, enabling it to solve previously unsolvable problems and setting new performance benchmarks for reasoning model development.


The post NVIDIA AI Introduces AceReason-Nemotron for Advancing Math and Code Reasoning through Reinforcement Learning appeared first on MarkTechPost.
