A prominent area of exploration involves enabling large language models (LLMs) to function collaboratively. Multi-agent systems powered by LLMs are now being examined for their potential to tackle challenging problems by splitting tasks and working on them simultaneously. This direction has gained attention due to its potential to increase efficiency and reduce latency in real-time applications.

A common issue in collaborative LLM systems is agents’ sequential, turn-based communication. In such systems, each agent must wait for others to complete their reasoning steps before proceeding. This slows down processing, especially in situations demanding rapid responses. Moreover, agents often duplicate efforts or generate inconsistent outputs, as they cannot see the evolving thoughts of their peers during generation. This latency and redundancy reduce the practicality of deploying multi-agent LLMs, particularly when time and computation are constrained, such as on edge devices.

Most current solutions have relied on sequential or independently parallel sampling techniques to improve reasoning. Methods like Chain-of-Thought prompting help models to solve problems in a structured way but often come with increased inference time. Approaches such as Tree-of-Thoughts and Graph-of-Thoughts expand on this by branching reasoning paths. However, these approaches still do not allow for real-time mutual adaptation among agents. Multi-agent setups have explored collaborative methods, but mostly through alternating message exchanges, which again introduces delays. Some advanced systems propose complex dynamic scheduling or role-based configurations, which are not optimized for efficient inference.

Researchers at MediaTek Research introduced a new method called Group Think. This approach enables multiple reasoning agents within a single LLM to operate concurrently, observing each other’s partial outputs at the token level. Each reasoning thread adapts to the evolving thoughts of the others mid-generation. This mechanism reduces duplication and enables agents to shift direction if another thread is better positioned to continue a specific line of reasoning. Group Think is implemented through a token-level attention mechanism that lets each agent attend to previously generated tokens from all agents, supporting real-time collaboration.

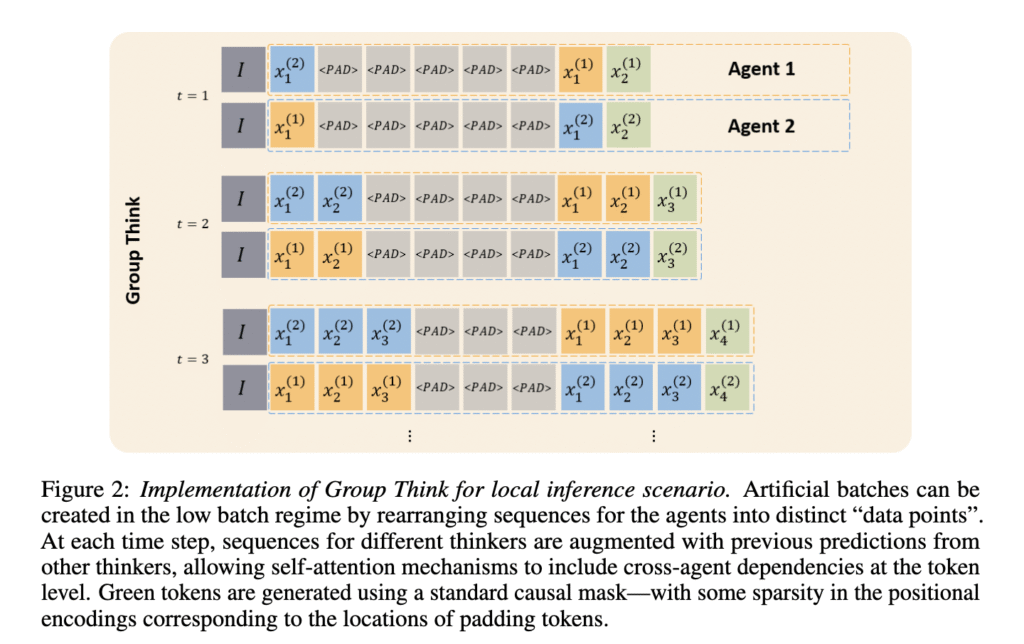

The method works by assigning each agent its own sequence of token indices, allowing their outputs to be interleaved in memory. These interleaved tokens are stored in a shared cache accessible to all agents during generation. This design allows efficient attention across reasoning threads without architectural changes to the transformer model. The implementation works both on personal devices and in data centers. On local devices, it effectively uses idle compute by batching multiple agent outputs, even with a batch size of one. In data centers, Group Think allows multiple requests to be processed together, interleaving tokens across agents while maintaining correct attention dynamics.
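To make the interleaving idea more concrete, here is a minimal Python sketch of how per-agent token indices and a shared-cache attention mask might fit together. It is an illustration under stated assumptions, not the authors' implementation: the function names, the `max_tokens_per_agent` parameter, the contiguous index range reserved for each agent, and the exact visibility rule (a token sees all tokens from earlier generation steps plus itself) are all hypothetical choices made for this example.

```python
from typing import List, Tuple

# (agent, step, position_index) for one token in the shared cache
Slot = Tuple[int, int, int]


def position_index(agent: int, step: int, max_tokens_per_agent: int) -> int:
    """Position index of the token `agent` emits at `step`.

    Assumption: each agent owns a contiguous range of indices of
    length `max_tokens_per_agent`.
    """
    if not 0 <= step < max_tokens_per_agent:
        raise ValueError("step falls outside the agent's reserved index range")
    return agent * max_tokens_per_agent + step


def interleaved_cache_layout(
    num_agents: int, num_steps: int, max_tokens_per_agent: int
) -> List[Slot]:
    """List tokens in the order they would enter the shared cache.

    At every generation step each agent emits one token, so the cache
    holds agent 0's token, then agent 1's, and so on, step after step.
    """
    layout: List[Slot] = []
    for step in range(num_steps):
        for agent in range(num_agents):
            layout.append(
                (agent, step, position_index(agent, step, max_tokens_per_agent))
            )
    return layout


def attention_mask(layout: List[Slot]) -> List[List[bool]]:
    """mask[i][j] is True when cache slot i may attend to cache slot j.

    Assumed rule: a token attends to every token from earlier steps
    (from any agent) and to itself, but not to tokens that other
    agents emit in the same step.
    """
    mask: List[List[bool]] = []
    for agent_i, step_i, _ in layout:
        row = []
        for agent_j, step_j, _ in layout:
            is_self = agent_j == agent_i and step_j == step_i
            row.append(step_j < step_i or is_self)
        mask.append(row)
    return mask


if __name__ == "__main__":
    layout = interleaved_cache_layout(
        num_agents=2, num_steps=2, max_tokens_per_agent=4
    )
    for slot, row in zip(layout, attention_mask(layout)):
        print(slot, row)
```

In this sketch the cache order is interleaved while each agent's position indices stay contiguous, which is one way the mask can be built without touching the transformer architecture itself.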

Performance tests demonstrate that Group Think significantly improves latency and output quality. In enumeration tasks, such as listing 100 distinct names, it achieved near-complete results more rapidly than conventional Chain-of-Thought approaches. The acceleration was proportional to the number of thinkers; for example, four thinkers reduced latency by a factor of about four. In divide-and-conquer problems, using the Floyd–Warshall algorithm on a graph of five nodes, four thinkers reduced the completion time to half that of a single agent. In programming tasks, Group Think solved code generation challenges more effectively than baseline models. With four or more thinkers, the model produced correct code segments much faster than traditional reasoning models.
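For readers unfamiliar with the divide-and-conquer benchmark, the following is a standard Floyd–Warshall implementation in Python, which computes shortest-path distances between every pair of nodes. The five-node graph is made up for illustration and is not the one used in the paper, and the sketch does not show how Group Think's thinkers divide the work among themselves.

```python
import math
from typing import List

INF = math.inf


def floyd_warshall(weights: List[List[float]]) -> List[List[float]]:
    """Return all-pairs shortest-path distances.

    `weights[i][j]` is the edge weight from node i to node j,
    or INF when there is no direct edge.
    """
    n = len(weights)
    dist = [row[:] for row in weights]  # copy so the input is not modified
    for k in range(n):  # allow node k as an intermediate stop
        for i in range(n):
            for j in range(n):
                via_k = dist[i][k] + dist[k][j]
                if via_k < dist[i][j]:
                    dist[i][j] = via_k
    return dist


# Hypothetical directed graph with five nodes (illustrative only).
example_graph = [
    [0, 3, INF, 7, INF],
    [INF, 0, 2, INF, INF],
    [INF, INF, 0, 1, INF],
    [INF, INF, INF, 0, 4],
    [1, INF, INF, INF, 0],
]

if __name__ == "__main__":
    for row in floyd_warshall(example_graph):
        print(row)
```

Each update to a distance entry depends only on values already in the table, which is presumably what makes the task a natural candidate for splitting across several thinkers.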

This research shows that existing LLMs, though not explicitly trained for collaboration, can already demonstrate emergent group reasoning behaviors under the Group Think setup. In experiments, agents naturally diversified their work to avoid redundancy, often dividing tasks by topic or focus area. These findings suggest that Group Think’s efficiency and sophistication could be enhanced further with dedicated training on collaborative data.

The post This AI Paper Introduces Group Think: A Token-Level Multi-Agent Reasoning Paradigm for Faster and Collaborative LLM Inference appeared first on MarkTechPost.