Generative artificial intelligence (AI) applications and relational databases are increasingly being used together to create new solutions across industries. The integration of these technologies allows organizations to use the vast amounts of structured data stored in relational databases to train and refine AI models. AI can then be used to generate insights, predict trends, and even augment database management tasks.

In this post, we describe a solution to integrate generative AI applications with relational databases like Amazon Aurora PostgreSQL-Compatible Edition using RDS Data API (Data API) for simplified database interactions, Amazon Bedrock for AI model access, Amazon Bedrock Agents for task automation and Amazon Bedrock Knowledge Bases for context information retrieval. Data API support is currently available only with Aurora databases. If you intend to use the solution with Amazon Relational Database Service (Amazon RDS), you can customize the integration using conventional database connectivity approaches.

Solution overview

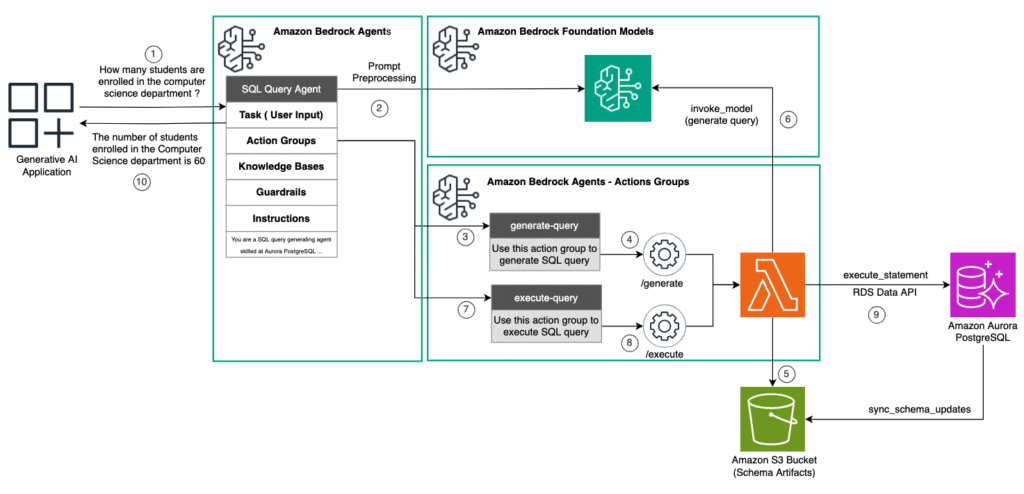

This solution combines the AI capabilities of Amazon Bedrock Agents with the robust database functionality of Aurora PostgreSQL through the Data API. Amazon Bedrock Agents, powered by large language models (LLMs), interprets natural language queries and generates appropriate SQL statements using action groups fulfilled by AWS Lambda function and schema artifacts stored in Amazon Simple Storage Service (Amazon S3). These queries are then run against Aurora PostgreSQL using the Data API, which provides a serverless, connection-free method for database interactions. This enables dynamic data retrieval and analysis without managing direct database connections. For instance, a user request to “show sales data for the last quarter” is transformed into SQL, run through the Data API, and the results are presented in a user-friendly format. The solution is represented in the following architecture diagram.

The detailed steps in this architecture are:

- The generative AI application invokes the Amazon Bedrock agent with natural language input data to orchestrate the integration with the backend relational database.

- The agent invokes the foundational model (FM) on Amazon Bedrock for pre-processing the prompt to determine the actions to be taken.

- The agent then decides to use the generate-query action group.

- The agent invokes the

/generateAPI implemented by the Lambda function. - The Lambda function uses the schema artifacts from the Amazon S3 bucket as context to augment the prompt.

- The Lambda function then invokes an LLM on Amazon Bedrock to generate the SQL query and returns the generated SQL query back to the agent.

- The agent then decides to use the execute-query action group.

- The agent invokes the /execute API implemented by the Lambda function, passing the generated SQL query.

- The Lambda function use Data API with a read-only role to run the SQL query against the Aurora PostgreSQL database.

- The agent finally returns the formatted query results to the application.

While the solution can technically support write operations, allowing AI generated queries to modify the database presents significant risks to data integrity and security. Therefore, production implementations should allow access to read-only operations through proper IAM policies and database role permissions. From a security and data integrity perspective, we strongly recommend implementing this solution exclusively for read-only workloads such as analytics, reporting, and data exploration.

Security guardrails

The solution implements multiple layers of security controls to ensure safe and controlled access to the database. These layered security controls ensure that the solution maintains data integrity while providing the desired natural language query capabilities:

- Agent-level instructions: The Bedrock agents are explicitly configured to support only read-only operations. Instructions embedded in the agent’s prompt prevent it from generating queries that could modify the database (INSERT, UPDATE, DELETE).

- Action group validation: The generate-query function implements additional validation of user input to prevent injection attacks and unauthorized operations. The execute-query function validates the generated SQL queries against an allowlist of operations and syntax patterns. Both functions work in tandem to make sure query safety before they are run.

- Read-only database access: Database interactions are exclusively performed using a read-only role (configured via READONLY_SECRET_ARN). This provides a critical security boundary at the database level, preventing any potential write operations even if other controls fail.

- Bedrock guardrails: Additional security is implemented through Amazon Bedrock Guardrails features. This further allows filtering of specific words or phrases like INSERT, UPDATE, DELETE from user prompts to prevent potentially harmful or unauthorized requests before they reach the query generation stage.

Prerequisites

To follow along with the steps in this post, you need the following resources.

- An AWS account with AWS Identity and Access Management (IAM) permissions to create an Aurora PostgreSQL database and Amazon Bedrock.

- An integrated development environment (IDE) such as Visual Studio Code.

- Python installed with the Boto3 library on your IDE.

- AWS Cloud Development Kit (AWS CDK) installed on your IDE.

Clone the sample Python project from the AWS Samples repository and follow the development environment setup instructions in the readme:

Setting up the database environment

You begin by deploying an Aurora PostgreSQL cluster using the AWS CDK. To deploy the database infrastructure, run the command:

The RDSAuroraStack is an AWS CDK construct that provisions an Aurora PostgreSQL Serverless v2 database in a secure VPC environment. It creates a dedicated VPC with public and private subnets, sets up security groups, and manages database credentials through AWS Secrets Manager. The stack also implements a custom Lambda-based solution to create a read-only database user with appropriate permissions, making it suitable for applications that need segregated database access levels, such as connecting Amazon Bedrock agents to Aurora PostgreSQL databases.

Deploy the agent

Agents orchestrate interactions between foundation models (FMs), data sources, software applications, and user conversations. Also, agents can automatically call APIs to take actions and invoke Amazon Bedrock Knowledge Bases to augment with contextual information. Integrating the agent with Amazon Aurora, you gain the ability to transform natural language inputs into precise SQL queries using generative AI capabilities. Deploy the agent using the AWS CDK command:

The BedrockAgentStack is an AWS CDK construct that creates an Amazon Bedrock agent designed to interact with an Aurora PostgreSQL database through natural language queries. It provisions a Lambda function that can generate and execute SQL queries, implements a comprehensive guardrail system to prevent data modification operations (allowing only SELECT queries), and establishes the necessary IAM roles and permissions for secure communication between Bedrock and Aurora. The stack creates two action groups—one for generating SQL queries from natural language prompts and another for executing those queries—while integrating with the previously deployed Aurora PostgreSQL database using Data API.

After the CDK stack is deployed, you can switch to the Bedrock Agent builder console to review the configurations of the query-agent as shown in the following screenshot.

You will first notice that the agent is configured to use Anthropic’s Claude LLM. Next, from the Agent builder console examine the agent’s instructions because they define the agent’s functionality. The agent also features two key action groups: generate-sql-query and execute-sql-query. An action group is a logical collection of related functions (actions) that an agent can perform to accomplish specific tasks. The generate-sql-query group invokes the generate-query Lambda function, accepting the input user question, and returns the generated SQL query. The execute-sql-query group invokes the execute-query Lambda function, accepting the query and parameter values. We explore these functions in the next sections.

The generate-query function

The generate_sql_query function uses an LLM to create SQL queries from natural language questions and a provided database schema. The function uses a detailed prompt containing instructions for SQL generation, the database schema, and example question-SQL pairs. It then formats this prompt and the user’s question into a structured input for the LLM. The function proceeds to call an invoke_llm method to obtain a response from the LLM. The SQL query is then extracted from the LLM’s output and returned. This method enables dynamic SQL generation based on natural language input while providing the LLM with necessary context about the database structure for accurate query creation. The following code shows the prompt used to invoke the LLM.

In this example, we are embedding the entire schema file as context into the prompt because the schema is small and simple.

For large and complex schemas, you can further enhance the agent’s capabilities by integrating Amazon Knowledge Bases through vector embeddings and semantic search, storing comprehensive schema definitions, table relationships, sample queries, and business context documents. By implementing a retrieval-augmented generation (RAG) approach, the agent first searches the Knowledge Base for contextual information before invoking the generate-query action group function, significantly reducing model inference time.

The execute-query function

The execute_query function uses Data API to execute a SQL query against an Aurora PostgreSQL database, taking the SQL query and parameters as inputs. It returns the response from the database. The Data API eliminates the complexity of managing database connections in serverless architectures by providing a secure HTTPS endpoint that handles connection management automatically, removing the need for VPC configurations and connection pools in Lambda functions.

The lambda_handler processes an incoming event, extracting parameters and their values into a dictionary. It then retrieves a SQL query from these parameters, replacing newlines with spaces for proper formatting. Finally, it calls the execute_query function with the extracted query and parameters to execute the SQL statement. This setup allows for dynamic SQL query execution in a serverless environment, with the ability to pass in different queries and parameters for each invocation of the Lambda function:

Test the solution

You must create a sample schema with data using scripts/create_schema.py before you can proceed with the testing. This script will create a few schemas and tables and ingest sample data. The test is very straightforward. You first send a natural language prompt as input to the agent, which then has to generate the necessary SQL query and execute the query against the configured Aurora PostgreSQL database using Data API. The agent should then return a response that is based on the results queried from the Aurora PostgreSQL database using Data API. Before you run the scripts/create_schema.py script from your IDE, update it with your DB_CLUSTER_ARN, DB_SECRET_ARN, DB_NAME noted from your RDSAuroraStack CDK deployment output.

After you’ve created the schema and loaded the test data, there are few ways you can test the deployed agent. One approach is to use the Amazon Bedrock console, and the other is to make use of the InvokeAgent API.

Using the test window of Amazon Bedrock Agents

To test the solution using the test window, follow these steps:

- On the Amazon Bedrock Agents console, on the panel on the right, open the Test window.

- Enter your test input for the agent, as shown in the following screenshot.

- To troubleshoot and review all the steps the agent has used to generate the response, expand the test window and review the Trace section, as shown in the following screenshot.

Using the Amazon Bedrock InvokeAgent API

Another way to test is by using the AWS SDK for InvokeAgent API. Applications use this API for interacting with the agent. You should find a utility script scripts/test_agent.py in the repository to test the agent and the integration with the Aurora PostgreSQL database. Make sure you update the script with the Amazon Bedrock agent ID before you run the script. The test script provides options for you to run with a single test prompt or run with multiple test prompts. You can also run the test with trace enabled to review the steps and reasoning that the agent used to complete the request.

Run a single test without trace:

Run a single test with trace:

Run all tests without trace:

Run all tests with trace:

The following is the sample output from the test, showing the actual data retrieved from the Aurora PostgreSQL database table:

The following is the sample output with trace enabled showing all the steps of the agent:BEDROCK AGENT TEST RESULTS

Here is another example showing how the agent responds for the input prompt to add data into the database. This solution only allows read operations (SELECT). From a security and data integrity perspective, we do not recommend implementing this solution for write operations. If you need your agent to support inserts and updates of the data, you should instead do this via an API that provides a layer of abstraction with the database. Moreover, you need to also implement validations and controls to make sure your data is consistent.

Considerations and best practices

When integrating Amazon Bedrock Agents with Aurora PostgreSQL and using generative AI capabilities for generating and executing SQL queries, several key considerations should be observed.

- Enable this integration approach only for read-only workloads such as analytics and reporting where you need to provide flexible data querying access to your users using natural language. For read-write and transactional workloads, instead of generating the SQL query, you can use well-defined APIs to interface with the database.

- Database schemas that undergo frequent changes in columns and data types can also benefit from this generative AI based integration approach that generates SQL queries on the fly based on the latest schema. You must make sure the changes to the schema are made visible to the query generation function.

- Implement parameter validation in Amazon Bedrock Agents and the action group Lambda functions to prevent SQL injection and ensure data integrity. Refer to Safeguard your generative AI workloads from prompt injections.

- Implement caching strategies where appropriate to reduce database load for frequently requested information. For more details refer to: Database Caching Strategies Using Redis.

- Implement comprehensive logging and auditing to track interactions between Amazon Bedrock Agents and your database, promoting compliance and facilitating troubleshooting. Regularly monitor and analyze the generated query patterns to identify opportunities for performance tuning. For more details review the blog: Improve visibility into Amazon Bedrock usage and performance with Amazon CloudWatch.

- If the application is multi-tenant, ensure you have the right isolation controls. For details on implementing row-level security with the Data API see Enforce row-level security with the RDS Data API.

- If you are looking for a managed implementation of text to SQL query generation functionality, then you can make use of the GenerateQuery API supported with the Bedrock Knowledge Base.

Clean up

To avoid incurring future charges, delete all the resources created through CDK.

Conclusion

In this post, we demonstrated how to integrate Amazon Bedrock Agents and Aurora PostgreSQL using RDS Data API, enabling natural language interactions with your database. This solution showcases how AWS services can be combined to streamline database interactions through AI-driven interfaces, making data more accessible to nontechnical users. The integration pattern can be extended to support more complex use cases, such as automated reporting, natural language–based data exploration, and intelligent database monitoring.

We encourage you to try this solution in your environment and share your experiences. For additional support and resources, visit our repository.

About the authors

Source: Read More