Language models (LMs) pretrained on vast internet text corpora are strong in-context learners: they can generalize effectively from just a few task examples. Fine-tuning these models for downstream tasks, however, presents significant challenges. It typically requires hundreds to thousands of examples, and the resulting generalization can still be narrow. For example, models fine-tuned on statements like “B’s mother is A” struggle to answer related questions like “Who is A’s son?”, even though the same LMs can handle such reverse relations when the statement is given in context. This raises questions about how in-context learning and fine-tuning differ in the way they generalize, and how those differences should inform adaptation strategies for downstream tasks.

Research into improving LMs’ adaptability has followed several key approaches. In-context learning studies have examined learning and generalization patterns through empirical, mechanistic, and theoretical analyses. Out-of-context learning research explores how models use information that is not explicitly included in the prompt. Data augmentation techniques use LMs to improve performance from limited datasets, with specific solutions targeting issues like the reversal curse through hardcoded augmentations, deductive closure training, and generated reasoning pathways. Synthetic data approaches, meanwhile, have evolved from early hand-designed datasets aimed at improving generalization in domains like linguistics or mathematics to more recent methods that generate data directly from language models.

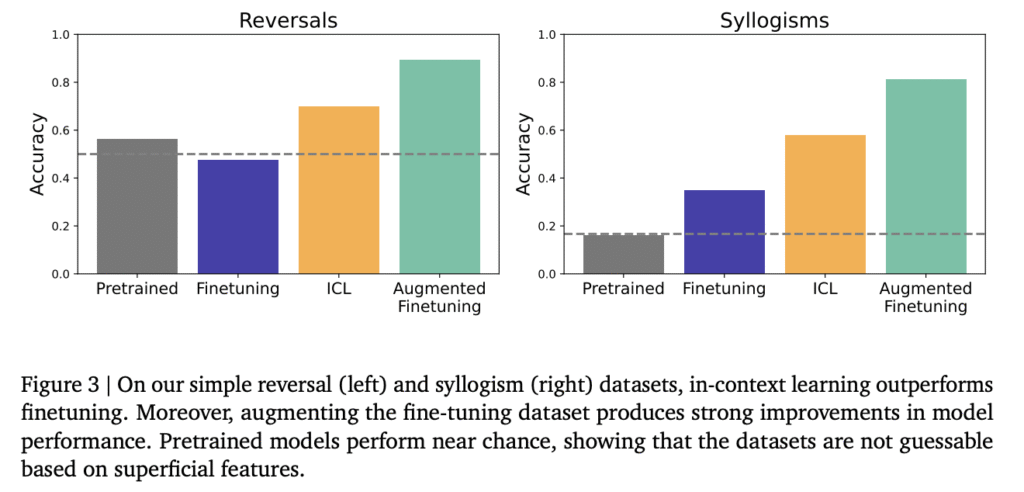

Researchers from Google DeepMind and Stanford University have constructed several datasets whose knowledge is isolated from pretraining data, creating clean tests of generalization. They evaluate performance across various generalization types by exposing pretrained models to controlled subsets of information, either in context or through fine-tuning. Their findings reveal that in-context learning generalizes more flexibly than fine-tuning in data-matched settings, though there are exceptions: fine-tuning can generalize to reversals that sit within larger knowledge structures. Building on these insights, the researchers developed a method that improves fine-tuning generalization by adding in-context inferences to the fine-tuning data.
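A minimal sketch of that last idea, under stated assumptions. The article does not give the authors' prompts or pipeline, so the function name `augment_with_inferences`, the prompt wording, and the `generate` callable (a stand-in for any text-generation model call) are all hypothetical. The sketch shows only the data flow: each training document is placed in context, the model is asked for inferences, and those inferences are appended to the fine-tuning set.

```python
from typing import Callable, List


def augment_with_inferences(
    train_docs: List[str],
    generate: Callable[[str], List[str]],
) -> List[str]:
    """Return the original documents plus model-generated inferences.

    `generate` is a hypothetical stand-in for a language model call: it
    takes a prompt and returns a list of inferred statements.
    """
    augmented = list(train_docs)
    seen = set(train_docs)
    for doc in train_docs:
        # Hypothetical prompt wording; the authors' prompts are not given here.
        prompt = (
            "Here is a statement:\n"
            f"{doc}\n"
            "List statements that follow from it, such as rephrasings or reversals."
        )
        for inference in generate(prompt):
            inference = inference.strip()
            # Skip empty outputs and duplicates of existing training data.
            if inference and inference not in seen:
                seen.add(inference)
                augmented.append(inference)
    return augmented


def toy_generate(prompt: str) -> List[str]:
    """Toy stand-in for a model: reverses "B's mother is A." statements."""
    statement = prompt.splitlines()[1]
    child, parent = statement.rstrip(".").split("'s mother is ")
    return [f"{parent}'s child is {child}."]


if __name__ == "__main__":
    docs = ["B's mother is A."]
    for line in augment_with_inferences(docs, toy_generate):
        print(line)
```

In a real setting `toy_generate` would be replaced by a call to the model being adapted, and the augmented list would then be used as the fine-tuning corpus in place of the original documents.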

The researchers use several datasets designed either to isolate a specific generalization challenge or to embed it within a broader learning context. Evaluation relies on multiple-choice likelihood scoring, with the answer choices not shown in context. The fine-tuning experiments use Gemini 1.5 Flash with batch sizes of 8 or 16. For in-context evaluation, the training documents are combined into the context of the instruction-tuned model; for larger datasets they are randomly subsampled by 8x to minimize interference. The key innovation is a dataset augmentation approach that uses in-context generalization to broaden the coverage of the fine-tuning dataset. It includes local and global strategies, each employing distinct contexts and prompts.
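Multiple-choice likelihood scoring can be sketched as follows. This is an illustration rather than the authors' evaluation code: `token_logprobs` is a hypothetical stand-in for a model call that returns one log-probability per token of a candidate answer given the prompt, and the `length_normalize` flag is an added assumption, since the article does not say whether scores are normalized by answer length. The point is that each candidate is scored separately as a continuation, so the options never appear in the prompt itself.

```python
from typing import Callable, List, Sequence


def score_choices(
    prompt: str,
    choices: Sequence[str],
    token_logprobs: Callable[[str, str], List[float]],
    length_normalize: bool = False,
) -> List[float]:
    """Score each candidate answer by its log-likelihood as a continuation."""
    scores = []
    for choice in choices:
        lps = token_logprobs(prompt, choice)
        total = sum(lps)
        if length_normalize and lps:
            # Optional: average per token so longer answers are not penalized.
            total /= len(lps)
        scores.append(total)
    return scores


def predict(
    prompt: str,
    choices: Sequence[str],
    token_logprobs: Callable[[str, str], List[float]],
    length_normalize: bool = False,
) -> int:
    """Return the index of the highest-scoring candidate."""
    scores = score_choices(prompt, choices, token_logprobs, length_normalize)
    return max(range(len(scores)), key=scores.__getitem__)


def toy_token_logprobs(prompt: str, continuation: str) -> List[float]:
    """Toy stand-in for a model: one log-prob per word, favouring "B"."""
    return [-0.1 if tok == "B" else -2.0 for tok in continuation.split()]


if __name__ == "__main__":
    question = "Who is A's son?"
    options = ["C", "B", "D"]
    best = predict(question, options, toy_token_logprobs)
    print(options[best])
```

Accuracy is then the fraction of test questions for which the predicted index matches the correct answer, and chance level for a question with k options is 1/k.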

On the Reversal Curse dataset, in-context learning achieves near-ceiling performance on reversals, while conventional fine-tuning shows near-zero accuracy because the models favor incorrect celebrity names seen during training. Fine-tuning on data augmented with in-context inferences matches the high performance of pure in-context learning. Tests on simple nonsense reversals reveal similar patterns, though with less pronounced benefits. For simple syllogisms, the pretrained model performs at chance level (indicating no data contamination), and fine-tuning does produce above-chance generalization for certain syllogism types where the logical inference aligns with simple linguistic patterns. Even so, in-context learning outperforms fine-tuning, and augmented fine-tuning shows the best overall results.
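To make the nonsense-reversal setting concrete, here is a small sketch of how such a split might be built. The nonsense words, the relation phrases, and the helper name `build_reversal_split` are made up for illustration and are not taken from the authors' datasets. Forward statements go into the training set, and their reversals are held out as test items, so a model can only answer the test items by generalizing.

```python
from typing import Dict, List, Tuple


def build_reversal_split(
    pairs: List[Tuple[str, str]],
    relation: str,
    inverse: str,
) -> Dict[str, List[str]]:
    """Forward statements are used for training; reversals are held out."""
    train = [f"{a} {relation} {b}." for a, b in pairs]
    test = [f"{b} {inverse} {a}." for a, b in pairs]
    # Sanity check: no held-out reversal may appear verbatim in training.
    assert not set(train) & set(test)
    return {"train": train, "test": test}


if __name__ == "__main__":
    # Made-up nonsense words, so the facts cannot come from pretraining.
    pairs = [("femp", "glon"), ("zorb", "trel")]
    split = build_reversal_split(
        pairs,
        relation="are more dangerous than",
        inverse="are less dangerous than",
    )
    print(split["train"][0])  # femp are more dangerous than glon.
    print(split["test"][0])   # glon are less dangerous than femp.
```

The same training statements can then be supplied either as context or as fine-tuning data, which keeps the two conditions data-matched.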

In conclusion, this paper explores how generalization differs between in-context learning and fine-tuning when LMs face novel information structures. The results show that in-context learning generalizes better for certain inference types, which prompted the researchers to develop methods that improve fine-tuning by incorporating in-context inferences into the training data. Despite these promising outcomes, the study has several limitations. First, it depends on nonsense words and implausible operations. Second, it focuses on specific LMs, which limits the generality of the results. Future research should investigate learning and generalization differences across a wider range of models, especially newer reasoning models, to expand on these findings.


The post Enhancing Language Model Generalization: Bridging the Gap Between In-Context Learning and Fine-Tuning appeared first on MarkTechPost.
