Recent progress in large language models (LLMs) has shown their potential for complex reasoning tasks and for effectively using external tools such as search engines. Even so, teaching models to decide when to rely on internal knowledge and when to search remains a key challenge. Simple prompt-based methods can guide models to invoke tools, but LLMs still struggle with more nuanced behaviors, such as recognizing that an initial search did not return the right information and deciding to search again. Reinforcement learning (RL) has been explored to improve these behaviors by rewarding effective search usage. However, RL often leads to excessive tool use: models issue redundant searches even for simple questions, an inefficiency that needs to be addressed.

Various RL and preference-optimization strategies, including Proximal Policy Optimization (PPO), Direct Preference Optimization (DPO), and Group Relative Policy Optimization (GRPO), have been used to align LLM behavior with human expectations. PPO balances exploration against policy stability by limiting how far each update can move the policy, while DPO simplifies alignment by optimizing the model directly on preference pairs, without training a separate reward model. GRPO scores each response relative to a group of responses sampled for the same prompt, which helps it capture subtle improvements in reasoning without a learned value function. Meanwhile, treating LLMs as autonomous agents that plan and execute multi-step reasoning tasks is gaining traction. Frameworks like AutoGPT and LangChain show how such agents can refine their outputs through iterative reasoning and search. Yet current agent systems often depend on fixed prompts or heuristic-based tool use, which limits their adaptability and efficiency.
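To make the group-relative idea concrete, here is a minimal Python sketch of how GRPO-style advantages are commonly computed: each sampled response's reward is normalized by the mean and standard deviation of the rewards in its group. The function name and the `eps` stabilizer are illustrative choices, and this sketch uses the population standard deviation; real implementations differ in such details, and the article does not describe SEM's exact configuration.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each response's reward against the other responses sampled for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations differ on this detail
    # Responses better than the group average get positive advantages, worse ones negative.
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one prompt, scored 1 (correct) or 0 (incorrect).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))  # approximately [1, -1, -1, 1]
```

Because advantages are centered within each group, a group in which every response earns the same reward contributes no learning signal.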

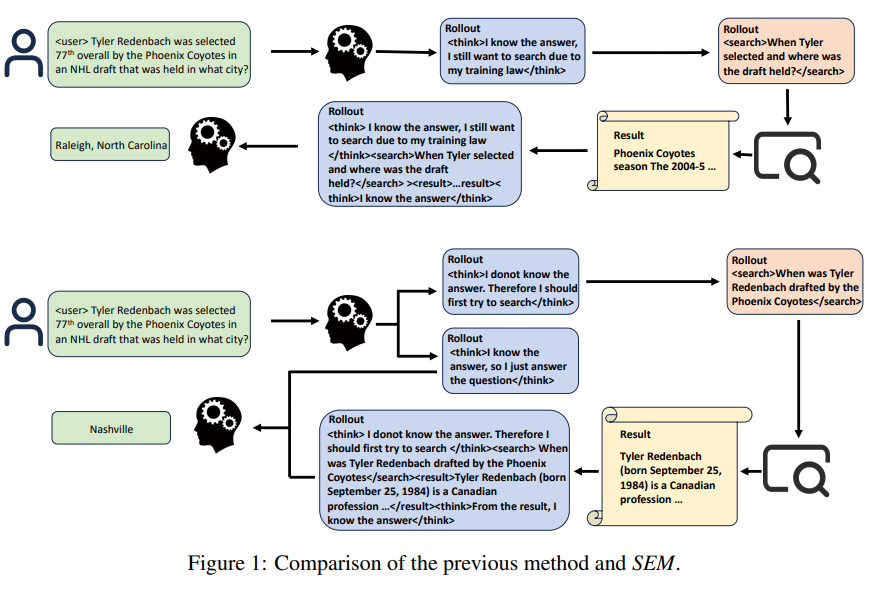

Researchers at Ant Group introduce SEM, a post-training reinforcement learning framework designed to teach LLMs when to use search tools and when to rely on internal knowledge. By training on a balanced dataset combining questions that do and do not require external retrieval, SEM guides the model to issue search requests only when necessary. Using a structured reasoning format and GRPO, the framework rewards accurate answers without search and penalizes unnecessary tool use. Results show that SEM improves response accuracy and efficiency, helping models better judge when external information is needed, thus enhancing reasoning in complex scenarios.
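The reward idea described above can be illustrated with a small, hypothetical scoring function. It is not SEM's published reward: the `Rollout` record, the `needs_search` label, exact-match correctness, and the `search_penalty` value of 0.5 are all assumptions made for illustration. It only shows how a correct answer given without search can score higher than a correct answer that relied on an unnecessary search.

```python
from dataclasses import dataclass


@dataclass
class Rollout:
    """Hypothetical record of one sampled response."""
    answer: str
    gold: str
    num_searches: int
    needs_search: bool  # hypothetical label: True for MuSiQue-style, False for MMLU-style items


def search_aware_reward(r: Rollout, search_penalty: float = 0.5) -> float:
    """Illustrative reward: correctness first, then a penalty for unnecessary searches."""
    correct = r.answer.strip().lower() == r.gold.strip().lower()  # naive exact match
    if not correct:
        return 0.0
    if r.num_searches > 0 and not r.needs_search:
        # Right answer, but the model searched for something it could answer internally.
        return 1.0 - search_penalty
    # Right answer, either without searching or with a search that was warranted.
    return 1.0
```

Under this toy scheme, a wrong answer earns nothing regardless of search behavior, so the penalty only shapes how the model reaches answers it already gets right.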

To integrate search into a model’s reasoning process, SEM uses reinforcement learning to teach the model when and how to search. The training data combines MuSiQue (questions that need external information) and MMLU (questions answerable from prior knowledge), so the model learns to judge when a search is necessary. Under GRPO, the model is rewarded for accurate, efficient answers: unnecessary searches are discouraged, while searches are encouraged when internal knowledge falls short. A structured response format (`<think>`, `<answer>`, `<search>`, `<result>`) standardizes training and allows precise reward assignment, improving both reasoning quality and search decisions.
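A structured format makes it straightforward to inspect a rollout before scoring it. The sketch below is a hypothetical parser that assumes each tag is closed with a matching `</tag>` and that the final answer appears in a single `<answer>` block at the end; `parse_trajectory` and its well-formedness rule are illustrative, not SEM's actual code.

```python
import re

# Assumes every segment is closed with a matching tag, e.g. <search>...</search>.
TAG_RE = re.compile(r"<(think|search|result|answer)>(.*?)</\1>", re.DOTALL)


def parse_trajectory(text: str) -> dict:
    """Hypothetical parser that splits a tagged response into (tag, content) segments."""
    segments = [(tag, body.strip()) for tag, body in TAG_RE.findall(text)]
    searches = [body for tag, body in segments if tag == "search"]
    answers = [body for tag, body in segments if tag == "answer"]
    return {
        "segments": segments,
        "searches": searches,  # queries the model issued; empty means no tool use
        "answer": answers[-1] if answers else None,
        # Illustrative format rule: exactly one answer block, and it comes last.
        "well_formed": len(answers) == 1 and segments[-1][0] == "answer",
    }


example = (
    "<think>I need the capital of France.</think>"
    "<search>capital of France</search>"
    "<result>Paris is the capital of France.</result>"
    "<answer>Paris</answer>"
)
print(parse_trajectory(example)["searches"])  # ['capital of France']
```

In a setup like this, `<result>` content would presumably be filled in by the search tool rather than generated by the model; the parser simply treats it like any other segment.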

The study evaluates whether a trained model can decide when to rely on its internal knowledge and when to use external search. Training combines MuSiQue (unfamiliar questions) and MMLU (familiar questions), and evaluation covers datasets including HotpotQA, GSM8K, and MMLU. SEM outperforms baselines such as Naive RAG and ReSearch in both answer accuracy and search efficiency: it reduces unnecessary searches on questions the model already knows while improving reasoning on unfamiliar ones. Case studies and training curves show that SEM learns stably and makes sound search decisions. Overall, SEM improves both retrieval decisions and internal reasoning in large language models.
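Accuracy and search efficiency can be tracked side by side for each dataset. The helper below is a minimal sketch that reports accuracy and the fraction of responses that issued at least one search; using that search rate as the efficiency measure is an assumption, and the paper's exact metrics may differ. The records in the example are toy values, not results from the study.

```python
from collections import defaultdict
from typing import Iterable


def summarize(records: Iterable[tuple[str, bool, int]]) -> dict[str, dict[str, float]]:
    """Per-dataset accuracy and search rate from (dataset, correct, num_searches) records."""
    totals = defaultdict(lambda: {"n": 0, "correct": 0, "searched": 0})
    for dataset, correct, num_searches in records:
        t = totals[dataset]
        t["n"] += 1
        t["correct"] += int(correct)
        t["searched"] += int(num_searches > 0)  # counts responses with at least one search
    return {
        name: {"accuracy": t["correct"] / t["n"], "search_rate": t["searched"] / t["n"]}
        for name, t in totals.items()
    }


# Toy records for illustration only; these are not results from the paper.
toy = [("MMLU", True, 0), ("MMLU", True, 1), ("HotpotQA", False, 2), ("HotpotQA", True, 1)]
print(summarize(toy))
```

Under this reading, a model that has learned when to search should keep accuracy high on MMLU-style questions while driving their search rate toward zero.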

In conclusion, SEM is a post-training reinforcement learning framework designed to improve how large language models use external search tools. The model is trained on a dataset combining MuSiQue and MMLU, helping it distinguish between questions it can answer internally and those that require external retrieval. SEM uses a structured reasoning approach and a reward function that penalizes unnecessary searches while promoting accurate and efficient retrieval. Experiments on benchmarks like HotpotQA, GSM8K, and MMLU show that SEM reduces redundant searches and improves accuracy. This approach enhances reasoning efficiency and intelligent use of external knowledge in LLMs.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Reinforcement Learning Makes LLMs Search-Savvy: Ant Group Researchers Introduce SEM to Optimize Tool Usage and Reasoning Efficiency appeared first on MarkTechPost.
