This is the second post of a series where we walk through the process of creating an Amazon DynamoDB data model for a multi-tenant SaaS application. This example uses an issue tracking application, but the patterns apply to any multi-tenant application.

In Part 1, we looked at considerations when designing for multi-tenancy and defined our access patterns. In this post, we continue the design process, selecting a partition key design and creating our data schema. We also show how to implement the access patterns using the AWS Command Line Interface (AWS CLI). In Part 3, we will validate our data model and explore how to extend it as new access patterns emerge.

We used NoSQL Workbench to create the data model in this post. You can use NoSQL Workbench to draft your data model, test it with sample data, and commit it to your account.

Selecting a primary key

When creating a new DynamoDB table, you must have a primary key to uniquely identify items stored in the table. You can choose to use a simple primary key, which has a single partition key, or use a composite primary key, which has a partition key and a sort key.

DynamoDB distributes data across partitions based on the partition key portion of the primary key. A simple primary key is sufficient for key-value access patterns, and with a high-cardinality partition key, data is spread evenly across partitions. Alternatively, a composite primary key lets you model a one-to-many relationship between two entities. Items that share the same partition key value are stored together as an item collection, sorted in ascending order by sort key.

In this post, several access patterns must return an item collection. There may be multiple items for the same partition key value (for example, multiple comments for the same ticket), and we need to be able to get all of them. We use a composite primary key to satisfy these “get all” requirements.

When modeling multiple entities in the same table, give the table’s partition key and sort key generic attribute names, such as PK and SK, instead of entity-specific names such as ticketId. This way, the key names stay meaningful for every entity stored in the table. It is also a best practice to avoid long, descriptive attribute names. The maximum item size in DynamoDB is 400 KB, and that limit counts the binary (UTF-8) length of each attribute name as well as its value.
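To show why attribute names matter, here is a rough sketch that estimates the size of an item whose attributes are all strings. It adds the UTF-8 length of each name to the UTF-8 length of its value. Numbers, binaries, lists, and maps have their own sizing rules, which this sketch ignores. The long attribute names are hypothetical and exist only for comparison.

```python
# Rough size estimate for an item whose attributes are ALL strings.
# DynamoDB counts each attribute name's UTF-8 length plus the value size;
# string values are also measured in UTF-8 bytes. Other types are ignored here.

def estimate_string_item_size(item: dict) -> int:
    """Return the approximate size in bytes of an all-string item."""
    return sum(
        len(name.encode("utf-8")) + len(value.encode("utf-8"))
        for name, value in item.items()
    )


short_names = {"PK": "TENANT#1|TICKET#1", "SK": "SUMMARY"}
# Hypothetical descriptive names, used only to compare overhead.
long_names = {
    "tenantTicketPartitionKey": "TENANT#1|TICKET#1",
    "ticketItemSortKey": "SUMMARY",
}

print(estimate_string_item_size(short_names))  # 28
print(estimate_string_item_size(long_names))   # 65
```

The values are identical, but the descriptive names more than double the estimated size. That overhead is repeated in every item and in every read and write.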

Considerations when selecting a primary key for multi-tenancy

Tenant isolation is not optional for multi-tenant applications. It is common practice to include a unique tenant identifier (tenantId) in the primary key and to enforce tenant isolation with AWS Identity and Access Management (IAM). You do this with the dynamodb:LeadingKeys condition key and a StringLike condition operator. Attribute-based access control (ABAC) simplifies this approach. Instead of writing one policy per tenant, a single policy can compare the leading key against a tenant tag on the calling principal.
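Here is a minimal sketch of such a policy, built as a Python dictionary and serialized to JSON. The table ARN, account ID, action list, and principal tag key (tenantId) are hypothetical placeholders. The key prefix TENANT#&lt;id&gt;| follows the key format used in this post.

```python
import json

# Hypothetical placeholders: substitute your own table ARN and tag key.
TABLE_ARN = "arn:aws:dynamodb:us-east-1:111122223333:table/SupportTicket"
TENANT_TAG = "tenantId"  # assumed principal tag that carries the caller's tenant ID


def tenant_isolation_policy(table_arn: str, tag_key: str) -> dict:
    """IAM policy allowing access only to partition keys that start with
    the caller's own tenant prefix, for example TENANT#1|..."""
    leading_key = f"TENANT#${{aws:PrincipalTag/{tag_key}}}|*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                    "dynamodb:DeleteItem",
                ],
                # Cover the table and its indexes (for GSI queries).
                "Resource": [table_arn, f"{table_arn}/index/*"],
                "Condition": {
                    # Every partition key value in the request must match.
                    "ForAllValues:StringLike": {
                        "dynamodb:LeadingKeys": [leading_key]
                    }
                },
            }
        ],
    }


print(json.dumps(tenant_isolation_policy(TABLE_ARN, TENANT_TAG), indent=2))
```

The trailing | before the wildcard matters. It stops a caller tagged with tenant 1 from matching keys such as TENANT#10|TICKET#1.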

When you choose tenantId as the partition key, all the items with the same tenantId are initially stored in one partition. Items with a different tenantId might also be stored in the same partition.

Using tenantId alone as the partition key might seem appealing because it co-locates all of a tenant’s data, but you must consider DynamoDB’s throughput limits. A single partition supports up to 3,000 read capacity units (RCUs) and 1,000 write capacity units (WCUs). Distributing data across multiple partitions lets DynamoDB scale horizontally and deliver higher throughput. Concatenating tenantId with another attribute in the partition key helps spread the load more evenly. For example, combining it with ticketId gives TENANT#1|TICKET#4.
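These hypothetical Python helpers build and parse tenant-scoped partition keys in the TENANT#&lt;tenantId&gt;|TICKET#&lt;ticketId&gt; format shown above. Centralizing key construction makes it harder for application code to build a key that escapes its tenant scope.

```python
# Hypothetical key helpers for the "TENANT#<tenantId>|<ENTITY>#<id>" format.
# The "|" delimiter separates the tenant scope from the rest of the key.

def tenant_prefix(tenant_id: str) -> str:
    """Return the tenant scope, rejecting IDs that could break the format."""
    if not tenant_id or "|" in tenant_id:
        raise ValueError(f"invalid tenant ID: {tenant_id!r}")
    return f"TENANT#{tenant_id}"


def ticket_pk(tenant_id: str, ticket_id: str) -> str:
    """Partition key for one ticket's item collection."""
    return f"{tenant_prefix(tenant_id)}|TICKET#{ticket_id}"


def tenant_of(partition_key: str) -> str:
    """Recover the tenant ID from a tenant-scoped partition key."""
    scope, sep, _ = partition_key.partition("|")
    if not sep or not scope.startswith("TENANT#"):
        raise ValueError(f"not a tenant-scoped key: {partition_key!r}")
    return scope[len("TENANT#"):]


print(ticket_pk("1", "4"))                 # TENANT#1|TICKET#4
print(tenant_of("TENANT#1|TICKET#4"))      # 1
```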

You should design multiple item collections, where the partition key value combines tenantId with another value that is relevant to your application’s access patterns. The DynamoDB adaptive capacity feature instantly boosts capacity for high-traffic partitions to sustain higher throughput and reduce throttling. Even so, you can proactively reduce the likelihood of throttling on a single partition by making your item collections as granular as your access patterns allow. We provide examples of focused item collections later in this post. To dive deep into scaling DynamoDB, see Scaling DynamoDB: How partitions, hot keys, and split for heat impact performance and the later posts in that series.

For high-volume tenants whose traffic approaches DynamoDB’s per-partition limits, you can implement write sharding. You append a suffix to the tenant ID in the partition key (for example, TENANT#1-0 and TENANT#1-1), which spreads writes across multiple partitions. This adds complexity to queries, because reading all of a tenant’s data means querying every shard. In return, it accommodates tenants whose growth would otherwise exceed the throughput of a single partition.
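A minimal sketch of one common variant, calculated-suffix sharding, appears below. The suffix is derived from a hash of the ticket ID, so a writer and a reader that know the ticket ID compute the same shard. A tenant-wide read still fans out to every shard. The shard count and function names are assumptions for illustration. With this scheme, any dynamodb:LeadingKeys pattern would also need to match the shard suffix.

```python
import hashlib

SHARD_COUNT = 4  # assumption: tune per tenant from observed write volume


def shard_for(ticket_id: str, shard_count: int = SHARD_COUNT) -> int:
    """Deterministically map a ticket to a shard number."""
    # sha256 rather than hash(): Python's str hash is randomized per process.
    digest = hashlib.sha256(ticket_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def sharded_tenant_pk(tenant_id: str, ticket_id: str,
                      shard_count: int = SHARD_COUNT) -> str:
    """Write path: TENANT#1-0 ... TENANT#1-(shard_count - 1)."""
    return f"TENANT#{tenant_id}-{shard_for(ticket_id, shard_count)}"


def all_shard_pks(tenant_id: str, shard_count: int = SHARD_COUNT) -> list:
    """Read path: a tenant-wide query must visit every shard and merge results."""
    return [f"TENANT#{tenant_id}-{n}" for n in range(shard_count)]


pk = sharded_tenant_pk("1", "ticket-42")
print(pk, pk in all_shard_pks("1"))
```

A random suffix spreads writes just as well, but single-ticket lookups then have to search every shard. Deriving the suffix from a known attribute avoids that cost.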

Designing our data schema

We now work through our access patterns and show how you can represent each one in your data schema. From a SaaS perspective, our goal is to make queries as efficient as possible. Any inefficiency wastes cost and performance, and that waste grows as the SaaS solution grows.

DynamoDB design best practices are well documented. For SaaS builders, the following are important design approaches we want to aim for:

- Prioritize performance – Performance is the bottleneck in a large, multi-tenant solution. By optimizing our data schema for our access patterns, we get the most efficient performance at scale.

- Avoid filter expressions – A filter expression is applied after a query or scan reads items from the table, and it discards the results that don’t match. You still consume read capacity for the discarded items. That waste scales quickly with growth if you are filtering by a single tenant in a multi-tenant table.

- Use global secondary indexes (GSIs) to explicitly map attributes to access patterns – DynamoDB paginates query results into pages of up to 1 MB. Any attributes a query returns that you don’t need are waste. They can also push the results past a single page, which requires additional query operations.

- Avoid storing large items – Larger items consume more read and write units. To optimize for performance and cost, especially for high traffic access patterns, avoid storing large items in the table and choose the right operation type. To optimize the item size, see Use vertical partitioning to scale data efficiently in Amazon DynamoDB and also Large object storage strategies for DynamoDB.

- Rate limit heavy hitters – A noisy neighbor can degrade even the most efficient data model. As part of our wider SaaS architecture, we want upstream mechanisms, supported by the data model, that ensure fairness at scale.
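The cost of filtering can be checked with simple arithmetic. For a Query, DynamoDB totals the size of all items read, rounds up to the next 4 KB, and charges half for eventually consistent reads. Items that a filter expression discards still count. The scenario below is hypothetical: a Query reads 1,000 items of 1 KB each, but only 10 belong to the requesting tenant.

```python
import math

RCU_BLOCK_BYTES = 4 * 1024  # one strongly consistent RCU covers 4 KB


def query_rcu(bytes_read: int, strongly_consistent: bool = False) -> float:
    """Approximate RCUs for one Query page: total bytes read, rounded up to
    a 4 KB boundary; eventually consistent reads cost half."""
    units = math.ceil(bytes_read / RCU_BLOCK_BYTES)
    return units if strongly_consistent else units / 2


# Hypothetical scenario: 1,000 items of 1 KB each are read, but a filter
# expression keeps only the 10 that belong to the requesting tenant.
items_read, items_kept, item_bytes = 1_000, 10, 1024

consumed = query_rcu(items_read * item_bytes)  # what the query is charged
useful = query_rcu(items_kept * item_bytes)    # what a focused key would cost
print(consumed, useful)  # prints: 125.0 1.5
```

A key design that returns only the tenant’s 10 items would cost about 1.5 RCUs instead of 125.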

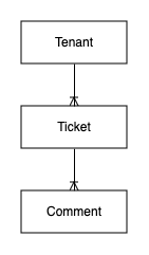

As a reminder, here is the entity relationship diagram from part 1:

Modeling item collections

In this section, we discuss the following access pattern: “As a user, I want to get a single ticket and all comments.”

This access pattern uses only the partition key to look up the ticket’s item collection. The following table shows the tickets for each tenant.

If there were no other access patterns in this application, a simple primary key would suffice for this table. However, we need to use a composite primary key to design the data schema for the remaining access patterns.

To retrieve a specific ticket (for example, partition key: TENANT#1|TICKET#1) from the table (SupportTicket), run a query that provides the exact partition key value.

Here we use the Query API action rather than GetItem because the request returns multiple items from the item collection, not just one specific item.
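Below is a minimal sketch of that query in Python. The parameters follow the boto3 low-level DynamoDB client, whereas the post itself uses the AWS CLI, and the helper names are hypothetical. Results arrive in pages of up to 1 MB, so the sketch also follows LastEvaluatedKey until the whole item collection has been read. The query function is passed in, which lets the loop run without AWS credentials.

```python
from typing import Callable, Iterator


def ticket_collection_query(tenant_id: str, ticket_id: str) -> dict:
    """Query parameters returning one ticket's whole item collection."""
    return {
        "TableName": "SupportTicket",
        "KeyConditionExpression": "PK = :pk",
        "ExpressionAttributeValues": {
            ":pk": {"S": f"TENANT#{tenant_id}|TICKET#{ticket_id}"}
        },
    }


def query_all(query_fn: Callable[..., dict], params: dict) -> Iterator[dict]:
    """Yield every item, following LastEvaluatedKey across 1 MB pages."""
    request = dict(params)
    while True:
        page = query_fn(**request)
        yield from page.get("Items", [])
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return
        request["ExclusiveStartKey"] = last_key


params = ticket_collection_query("1", "1")
# With boto3 installed and credentials configured, you could run:
#   items = list(query_all(boto3.client("dynamodb").query, params))
```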

Modeling summaries

Let’s look at another access pattern: “As a user, I want to view a single ticket summary.”

As you saw in Part 1, a ticket can have zero or more comments. We don’t want to duplicate the top-level ticket information in each comment, so we create a summary item. The summary is displayed in a list view before the user drills down into the full detail view for an individual ticket. This is shown in the following view:

The technique used here is called shallow duplication. It avoids duplicating attributes, which reduces write capacity consumption and cost. With shallow duplication, you store only the ticket status and resolver in the ticket item collection. When detailed information for a ticket is requested, retrieve the whole item collection from the table, as in the previous example.

To decide which additional attributes to include in each item, we consider the frequency and requests-per-second requirements of each access pattern.

To retrieve a single ticket summary from the table (SupportTicket), query with both the partition key value and the sort key value SUMMARY.

As shown in the preceding table, the ticket item collection with the partition key value TENANT#1|TICKET#1 contains three items. The sort key condition narrows the query results to the single item whose sort key value equals SUMMARY. This way, you retrieve only the summary item from the ticket item collection without using filter expressions.
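Here is a hedged sketch of the summary request in the same boto3-style dictionary form (hypothetical helper names). PK and SK together identify exactly one item, so a GetItem request works as well. The sketch shows both forms.

```python
def ticket_summary_query(tenant_id: str, ticket_id: str) -> dict:
    """Query parameters: the key condition on PK and SK selects only SUMMARY."""
    return {
        "TableName": "SupportTicket",
        "KeyConditionExpression": "PK = :pk AND SK = :sk",
        "ExpressionAttributeValues": {
            ":pk": {"S": f"TENANT#{tenant_id}|TICKET#{ticket_id}"},
            ":sk": {"S": "SUMMARY"},
        },
    }


def ticket_summary_get(tenant_id: str, ticket_id: str) -> dict:
    """Equivalent GetItem parameters, since PK + SK is the full primary key."""
    return {
        "TableName": "SupportTicket",
        "Key": {
            "PK": {"S": f"TENANT#{tenant_id}|TICKET#{ticket_id}"},
            "SK": {"S": "SUMMARY"},
        },
    }
```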

Modeling secondary indexes

The access pattern “As a user, I want to get all open tickets” requires accessing multiple item collections. A single query on the table can’t do this, because a query must always specify exactly one partition key value with an equality condition. This is where a global secondary index (GSI) is required.

When creating a GSI, any attribute from the table that has the string, number, or binary scalar data type can be selected as the primary key of the GSI. You only write to the table, and if the item has attributes that are the primary key of a GSI, the item is propagated to the GSI. In a GSI, there might be multiple items with the same primary key, and you can only perform eventually consistent read operations. This might help you decide which item collection should be stored in the table and which one in the GSI.

Consider a GSI as a separate virtual table that has its own assigned partitions. The same rules apply to the GSI as to the base table regarding partition maximum throughput limits. It’s important to choose a partition key that has high cardinality in your GSI.

If the table uses provisioned capacity mode, you provision read and write capacity for the table and for each GSI separately. The GSI can create back pressure that eventually throttles writes to the table. This happens if the GSI is under-provisioned for write capacity, or if write traffic concentrates on a few heavy-hitter keys because the GSI partition key has low cardinality.

When creating your GSI for your application, you explicitly choose which attributes from the table to project into the GSI. Whenever you update any of the projected attributes in the table, the item in the GSI gets updated as well. It is a best practice to only project attributes that you actually need in the GSI. This way, you consume less write capacity, which optimizes the cost, and when performing query operations, more items are returned in each 1 MB page.
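Below is a hedged sketch of the CreateTable parameters (boto3 shape) for the table and a GSI named GSI1. GSI1 uses tenant_status as its partition key and resolver as its sort key, matching the access patterns later in this post. It projects only a chosen list of non-key attributes, and the names passed in are up to you. Items that lack either GSI key attribute, such as comments in this model, aren’t propagated to the index.

```python
from typing import Optional, Tuple


def support_ticket_table(projected: list,
                         capacity: Optional[Tuple[int, int]] = None) -> dict:
    """CreateTable parameters. capacity=None means on-demand; otherwise
    (rcu, wcu) is applied to the table and, separately, to the GSI."""
    gsi = {
        "IndexName": "GSI1",
        "KeySchema": [
            {"AttributeName": "tenant_status", "KeyType": "HASH"},
            {"AttributeName": "resolver", "KeyType": "RANGE"},
        ],
        # Project only the attributes the access pattern needs.
        "Projection": {"ProjectionType": "INCLUDE", "NonKeyAttributes": projected},
    }
    params = {
        "TableName": "SupportTicket",
        "AttributeDefinitions": [
            {"AttributeName": name, "AttributeType": "S"}
            for name in ("PK", "SK", "tenant_status", "resolver")
        ],
        "KeySchema": [
            {"AttributeName": "PK", "KeyType": "HASH"},
            {"AttributeName": "SK", "KeyType": "RANGE"},
        ],
        "GlobalSecondaryIndexes": [gsi],
    }
    if capacity is None:
        params["BillingMode"] = "PAY_PER_REQUEST"
    else:
        rcu, wcu = capacity
        params["BillingMode"] = "PROVISIONED"
        params["ProvisionedThroughput"] = {"ReadCapacityUnits": rcu,
                                           "WriteCapacityUnits": wcu}
        # The GSI has its own capacity; under-provisioning it can throttle table writes.
        gsi["ProvisionedThroughput"] = dict(params["ProvisionedThroughput"])
    return params


# Hypothetical projected attribute; pass the result to boto3 create_table(**...).
table_params = support_ticket_table(["title"])
```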

GSI considerations in a multi-tenant solution

As a SaaS provider, it’s important to keep your tenant isolation model in your GSI. For example, if you’re using tenantId as part of the partition key in your main table for tenant isolation, then tenantId should be part of the partition key in your GSIs.

Let’s consider the following access pattern: “As a user, I want to get all open tickets.”

To model the relationship of ticket status to tenants, we create a GSI with tenant_status as its partition key. We could have used the existing status attribute instead, but then the application would have to enforce tenant isolation by filtering the query, which is error prone. Instead, tenantId is included in the tenant_status attribute (the GSI partition key), so that attribute-based access control can be used. Depending on the use case, you could keep both attributes on the table so the application doesn’t have to split the tenant_status value. For our data model, we chose to drop the original status attribute and keep only the new tenant-scoped attribute. This is shown in the following table:

We retrieve all open tickets for a specific tenant by querying the GSI (GSI1) with the partition key value TENANT#1|OPEN.

We selected the resolver attribute as the sort key for the GSI to satisfy the next access pattern: “As a user, I want to view all open tickets for a given resolver.” This lets you query the GSI’s open-tickets item collection selectively by resolver. You add a sort key condition, as in the previous examples on the base table.
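This sketch builds both GSI queries as boto3-style parameters (hypothetical helper name). Without a resolver it returns all of a tenant’s open tickets; with one, it adds a sort key condition. ExpressionAttributeNames placeholders are used for both key attributes, which is always safe whether or not a name collides with a DynamoDB reserved word.

```python
from typing import Optional


def open_tickets_query(tenant_id: str, resolver: Optional[str] = None) -> dict:
    """GSI1 query for a tenant's open tickets, optionally for one resolver."""
    key_condition = "#ts = :ts"
    names = {"#ts": "tenant_status"}
    values = {":ts": {"S": f"TENANT#{tenant_id}|OPEN"}}
    if resolver is not None:
        key_condition += " AND #r = :r"  # equality on the GSI sort key
        names["#r"] = "resolver"
        values[":r"] = {"S": resolver}
    return {
        "TableName": "SupportTicket",
        "IndexName": "GSI1",
        "KeyConditionExpression": key_condition,
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
    }


all_open = open_tickets_query("1")
alice_open = open_tickets_query("1", "alice")  # "alice" is a hypothetical resolver
```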

Modeling transactions

The next access pattern, “As a user, I want to change the status of multiple tickets atomically,” requires modifying the tenant_status attribute on multiple items. To do this in a transactionally consistent manner, we use a DynamoDB transaction. For example, to change the status of two tickets atomically, you can use the transact-write-items command of the AWS CLI.

The transact-write-items operation executes the updates as a single atomic transaction of up to 100 items. If any part of the transaction fails, the entire transaction is rolled back, ensuring data consistency. For more complex scenarios, DynamoDB transactions can also span multiple tables in the same AWS account and Region.
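A minimal sketch of the transaction parameters in boto3 form, suitable for passing to transact_write_items, is shown below. The helper name is hypothetical. It also assumes the status lives on the item with sort key SUMMARY, which is a guess and is exposed as a parameter. The attribute_exists condition makes the whole transaction fail, and therefore roll back, if any ticket is missing.

```python
def change_status_transaction(tenant_id: str, ticket_ids: list,
                              new_status: str, sort_key: str = "SUMMARY") -> dict:
    """TransactWriteItems parameters updating several tickets atomically.
    sort_key is an assumption about which item holds tenant_status."""
    if not 1 <= len(ticket_ids) <= 100:
        raise ValueError("a transaction holds between 1 and 100 actions")
    actions = []
    for ticket_id in ticket_ids:
        actions.append({
            "Update": {
                "TableName": "SupportTicket",
                "Key": {
                    "PK": {"S": f"TENANT#{tenant_id}|TICKET#{ticket_id}"},
                    "SK": {"S": sort_key},
                },
                "UpdateExpression": "SET #ts = :new",
                # If any ticket doesn't exist, the whole transaction is cancelled.
                "ConditionExpression": "attribute_exists(PK)",
                "ExpressionAttributeNames": {"#ts": "tenant_status"},
                "ExpressionAttributeValues": {
                    ":new": {"S": f"TENANT#{tenant_id}|{new_status}"}
                },
            }
        })
    return {"TransactItems": actions}


params = change_status_transaction("1", ["1", "2"], "CLOSED")
# boto3.client("dynamodb").transact_write_items(**params)
```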

In this scenario, the application updates only one tenant’s data at a time. If the application were asynchronously processing updates as part of a batch job, it might process multiple tenants’ data. Mixing multiple tenants in a single transaction is generally not recommended. It makes it harder to roll back changes for specific tenants, communicate failures, and handle retries. IAM policies with condition keys should restrict access based on tenant identifiers. Batch processing should group operations by tenant and process each tenant’s updates in a separate transaction.
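Here is one way to structure such a batch job, as a hedged sketch with hypothetical names. It groups pending (tenantId, ticketId) updates by tenant and then splits each tenant’s tickets into chunks of at most 100, so no transaction mixes tenants. Atomicity holds within each chunk only: a tenant with more than 100 updates is processed in several independent transactions.

```python
from collections import defaultdict

MAX_TRANSACTION_ITEMS = 100


def per_tenant_batches(updates: list) -> list:
    """Group (tenant_id, ticket_id) pairs by tenant, then chunk each tenant's
    tickets so every batch fits in one single-tenant transaction."""
    by_tenant = defaultdict(list)
    for tenant_id, ticket_id in updates:
        by_tenant[tenant_id].append(ticket_id)
    batches = []
    for tenant_id in sorted(by_tenant):
        tickets = by_tenant[tenant_id]
        for start in range(0, len(tickets), MAX_TRANSACTION_ITEMS):
            batches.append((tenant_id, tickets[start:start + MAX_TRANSACTION_ITEMS]))
    return batches


print(per_tenant_batches([("2", "a"), ("1", "b"), ("2", "c")]))
# [('1', ['b']), ('2', ['a', 'c'])]
```

Each batch can then be turned into its own transaction, and a failure is retried or reported for that one tenant only.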

Modeling aggregations

Finally, consider the following access pattern: “As a tenant admin, I want to view an aggregate of the tickets by status in my tenant.”

This access pattern is for a tenant admin persona: someone who needs to view top-level metrics across their tenant. These metrics are aggregations, such as the count of each entity type, used for tenant insights. Aggregations are expensive to compute on demand, so we want an approach that reduces cost and improves performance. DynamoDB doesn’t have built-in support for aggregations, so we use Amazon DynamoDB Streams and AWS Lambda to maintain them.

The following diagram shows how to count the number of tickets for each tenant by enabling a DynamoDB stream for the table and performing per-tenant aggregations using a Lambda function, which writes back to our table.
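Below is a hedged sketch of the core logic such a Lambda function might run. It assumes the stream is configured with NEW_AND_OLD_IMAGES. It also assumes each aggregate item has partition key TENANT#&lt;id&gt;|AGGREGATE, the status as its sort key, and a count attribute; these names are assumptions, not the post’s confirmed schema. The function computes the net change per tenant_status value from a batch of stream records and turns each change into an UpdateItem with ADD. A status change therefore decrements the old status and increments the new one.

```python
from collections import Counter
from typing import Optional


def _status(image: Optional[dict]) -> Optional[str]:
    """tenant_status from a stream image (DynamoDB JSON), if present."""
    if not image or "tenant_status" not in image:
        return None
    return image["tenant_status"]["S"]


def status_deltas(records: list) -> Counter:
    """Net change in ticket count per tenant_status value for one batch."""
    deltas = Counter()
    for record in records:
        images = record.get("dynamodb", {})
        old = _status(images.get("OldImage"))
        new = _status(images.get("NewImage"))
        if old == new:
            continue  # status unchanged (or not a ticket): nothing to count
        if old is not None:
            deltas[old] -= 1
        if new is not None:
            deltas[new] += 1
    return deltas


def aggregate_update(tenant_status: str, delta: int) -> dict:
    """UpdateItem parameters that apply a delta to the tenant's aggregate."""
    tenant, _, status = tenant_status.partition("|")  # "TENANT#1", "OPEN"
    return {
        "TableName": "SupportTicket",
        "Key": {"PK": {"S": f"{tenant}|AGGREGATE"}, "SK": {"S": status}},
        "UpdateExpression": "ADD #c :d",  # COUNT is a reserved word, hence #c
        "ExpressionAttributeNames": {"#c": "count"},
        "ExpressionAttributeValues": {":d": {"N": str(delta)}},
    }


def updates_for(records: list) -> list:
    """All non-zero aggregate updates for a batch of stream records."""
    return [aggregate_update(k, v) for k, v in status_deltas(records).items() if v]
```

Lambda retries a failed batch, and ADD is not idempotent, so counters maintained this way can drift after retries. Treat them as approximate unless you add idempotency.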

For this aggregation access pattern, we use Lambda event filtering to control which stream records are sent to our function for processing. The resolver attribute exists only on ticket items, so filtering on its existence means we count only tickets, not comments or other items in the collection.
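A sketch of the filter follows, written as the FilterCriteria value accepted by Lambda’s CreateEventSourceMapping and UpdateEventSourceMapping APIs. With boto3 you would pass it as FilterCriteria to create_event_source_mapping. The pattern uses the exists operator on the resolver attribute in the record’s new image. Check it against the Lambda event filtering syntax for DynamoDB before relying on it.

```python
import json

# Deliver a stream record only if its new image has a string attribute
# named "resolver", which in this model exists only on ticket items.
resolver_exists_pattern = {
    "dynamodb": {
        "NewImage": {
            "resolver": {"S": [{"exists": True}]}
        }
    }
}

# Lambda expects each pattern as a JSON string inside "Filters".
filter_criteria = {"Filters": [{"Pattern": json.dumps(resolver_exists_pattern)}]}

print(filter_criteria["Filters"][0]["Pattern"])
```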

The following table shows the resulting entries:

We retain the tenantId as part of the partition key to keep our tenant isolation mechanism.

To retrieve all aggregates for a given tenant from the table (SupportTicket), query with the partition key value TENANT#1|AGGREGATE.
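A short sketch of that query and of reading its results is shown below. The helper names are hypothetical. It also assumes each aggregate item stores the status in SK and the total in a numeric count attribute, which is an assumption about the schema.

```python
def tenant_aggregates_query(tenant_id: str) -> dict:
    """Query parameters returning every aggregate item for one tenant."""
    return {
        "TableName": "SupportTicket",
        "KeyConditionExpression": "PK = :pk",
        "ExpressionAttributeValues": {
            ":pk": {"S": f"TENANT#{tenant_id}|AGGREGATE"}
        },
    }


def counts_by_status(items: list) -> dict:
    """Turn returned aggregate items (DynamoDB JSON) into {status: count}.
    Assumes SK holds the status and "count" holds the total."""
    return {item["SK"]["S"]: int(item["count"]["N"]) for item in items}
```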

Conclusion

In this post, we showed how to select a primary key and model relationships between entities. To summarize the takeaways:

- Prioritize query efficiency to reduce wastage at scale.

- Design multiple item collections where the partition key value is the combination of tenantId and another value that is relevant to the access patterns of your application.

- Maintain your tenant isolation mechanism across all access patterns. If you’re using tenantId with leading keys, make sure you prefix tenantId to all partition keys.

- Use GSIs to explicitly map attributes to access patterns.

We also showed how to implement the access patterns using the AWS CLI. For more information about how to choose the right client option for your use case, see Exploring Amazon DynamoDB SDK clients.

In Part 3, we will show how to validate your data model and extend it in the future.

About the Authors

Dave Roberts is a Senior Solutions Architect in the AWS SaaS Factory team where he helps software vendors to build SaaS and modernize their software delivery. Outside of SaaS, he enjoys building guitar pedals and designing PCBs for said pedals.

Josh Hart is a Principal Solutions Architect at Amazon Web Services. He works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS.

Samaneh Utter is an Amazon DynamoDB Specialist Solutions Architect based in Göteborg, Sweden.
