This is the third post in a series where we walk through the process of creating an Amazon DynamoDB data model for an example multi-tenant issue tracking application.

In Part 1, we defined our access patterns. We then selected a partition key design and created our data schema in Part 2.

In this last part of the series, we explore how to validate the chosen data model from both a performance and a security perspective. Additionally, we cover how to extend the data model as new access patterns and requirements arise.

Validating your data model

Your application depends on your data model being performant and secure. You want to observe the scaling patterns of your data model as it reacts to load while maintaining tenant isolation.

You will need tooling to perform your validation. A distributed load testing approach lets you simultaneously issue requests against your DynamoDB table using parallel test clients. For more details, see Distributed Load Testing on AWS.

Start by introducing a baseline scenario with many active tenants in your application. You then introduce noisy neighbor scenarios to see how your data model reacts. Are you able to scale effectively without affecting the tenant service experience?

Introduce scenarios in series and in parallel, and observe how your data model responds. The scenarios should represent your operations, such as:

- Tenant onboarding and offboarding.

- Backup and restore. Is it possible to restore a single XXL tenant within an SLA?

- Tenant portability between service tiers. Bulk export and import of tenant data.

- Noisy neighbor tenants. Can heavy usage tenants affect others?
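A noisy neighbor scenario can be sketched as a skewed request mix that your test clients replay against the table. The following is a minimal sketch, not a load testing tool: the tenant IDs, the weights, and the `build_request_mix` helper are hypothetical, and the code only decides which tenant each simulated request belongs to.

```python
import random
from collections import Counter


def build_request_mix(tenant_weights, total_requests, seed=0):
    """Return a list of tenant IDs, one per simulated request.

    tenant_weights maps a tenant ID to its relative share of traffic.
    A fixed seed keeps the scenario repeatable between test runs.
    """
    rng = random.Random(seed)
    tenants = list(tenant_weights)
    weights = [tenant_weights[t] for t in tenants]
    return rng.choices(tenants, weights=weights, k=total_requests)


# Baseline: ten hypothetical tenants with equal traffic.
baseline = {f"tenant{i}": 1 for i in range(1, 11)}

# Noisy neighbor: tenant3 sends 50 times the traffic of any other tenant.
noisy = dict(baseline, tenant3=50)

baseline_mix = build_request_mix(baseline, 1000)
noisy_mix = build_request_mix(noisy, 1000)
noisy_counts = Counter(noisy_mix)
```

Running the baseline mix first and the noisy mix second lets you compare latency and throttling for the unchanged tenants between the two runs.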

Monitoring your data model

Metrics are key to data model validation. You want to validate that the provisioned DynamoDB capacity sustains an unpredictable usage pattern.

The monitoring you set up for data model validation forms the basis of your operational monitoring. The validation provides the data to build a set of alarms that indicate an impact on tenant performance. If throttling occurs, it indicates insufficient capacity or potentially inefficient data access patterns that need revisiting. Your operations team will want to be aware of these events.

You should enable Amazon CloudWatch Contributor Insights on the DynamoDB table to capture detailed tenant-level operational metrics. Other useful tools include Amazon CloudWatch and AWS X-Ray.
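As a rough sketch, Contributor Insights can be turned on through the DynamoDB `UpdateContributorInsights` API. The table name `IssueTracker` is a placeholder, and the helper below only builds the request parameters so that it runs without AWS credentials.

```python
def contributor_insights_request(table_name, index_name=None):
    """Build the parameters for the DynamoDB UpdateContributorInsights API."""
    params = {
        "TableName": table_name,
        "ContributorInsightsAction": "ENABLE",
    }
    if index_name is not None:
        # Contributor Insights is enabled separately for each GSI.
        params["IndexName"] = index_name
    return params


# "IssueTracker" is a placeholder table name.
table_request = contributor_insights_request("IssueTracker")
gsi_request = contributor_insights_request("IssueTracker", "GSI1")

# With boto3 installed and credentials configured, the call would be:
#   import boto3
#   boto3.client("dynamodb").update_contributor_insights(**table_request)
```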

The following are key metrics to watch for any anomalies:

- Most accessed keys

- Most throttled keys

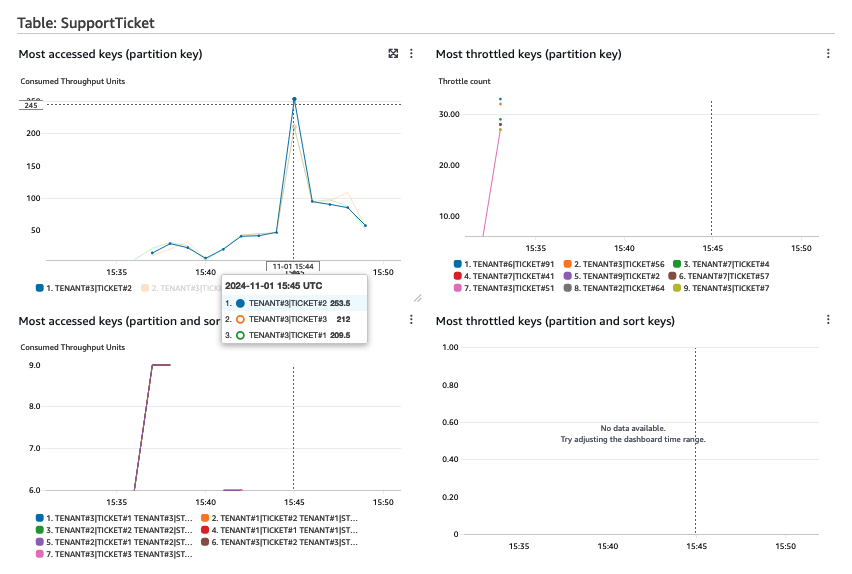

For example, consider the following Contributor Insights dashboard.

Tenant#3 is creating a large volume of requests compared to other tenants. This can have a negative performance impact on other tenants by causing table-level or partition-level throttling. A DynamoDB partition sustains up to 3,000 read capacity units and 1,000 write capacity units per second. When visualized in CloudWatch, the per-key request counts are aggregated per minute. To detect outliers using Contributor Insights, you can create alarms at defined thresholds using the MaxContributorValue metric.

When you turn on Contributor Insights, several rules are automatically created, and you can create alarms on these rules. For example, an alarm can send a notification when a single partition key receives more than 2,000 requests per second. The same logic can be used to detect when a key has any throttled requests.
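One way to express such an alarm is with the CloudWatch `PutMetricAlarm` API and the `INSIGHT_RULE_METRIC` metric math function. This is a sketch under several assumptions: the rule name and the Amazon SNS topic ARN are placeholders you would replace with your own, and the threshold assumes the rule counts requests per key over each one-minute period, so 2,000 requests per second becomes 120,000 per minute.

```python
REQUESTS_PER_SECOND_LIMIT = 2000
PERIOD_SECONDS = 60  # Contributor Insights data is aggregated per minute.


def hot_key_alarm_request(rule_name, sns_topic_arn):
    """Build parameters for the CloudWatch PutMetricAlarm API."""
    expression = f"INSIGHT_RULE_METRIC('{rule_name}', 'MaxContributorValue')"
    return {
        "AlarmName": f"{rule_name}-hot-partition-key",
        "ComparisonOperator": "GreaterThanThreshold",
        "EvaluationPeriods": 1,
        # 2,000 requests per second sustained over a one-minute period.
        "Threshold": REQUESTS_PER_SECOND_LIMIT * PERIOD_SECONDS,
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
        "Metrics": [
            {
                "Id": "hot_key",
                "Expression": expression,
                "Period": PERIOD_SECONDS,
                "ReturnData": True,
            }
        ],
    }


# Placeholder rule name and topic ARN; look up the real values in your account.
alarm_request = hot_key_alarm_request(
    "my-partition-key-rule",
    "arn:aws:sns:eu-west-1:111122223333:ops-alerts",
)

# With boto3: boto3.client("cloudwatch").put_metric_alarm(**alarm_request)
```

For the throttling case, the same request can point at the throttled-keys rule with a threshold of 0.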

Where you encounter hot partition key values, you can mitigate them by write sharding the affected tenant (Tenant#3 in this example) or by moving that tenant to its own silo table so that it does not affect the performance of other tenants.
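Write sharding can be sketched as adding a shard suffix to the tenant's partition key so that writes spread across several partition key values. The `tenant#shard` key format, the shard count, and the helper names below are assumptions for illustration and are not taken from the schema in Part 2.

```python
import hashlib


def shard_for(item_id, shard_count):
    """Map an item ID to a stable shard number in [0, shard_count)."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def sharded_partition_key(tenant_id, item_id, shard_count):
    """Partition key used when writing or reading a single item."""
    return f"{tenant_id}#{shard_for(item_id, shard_count)}"


def all_partition_keys(tenant_id, shard_count):
    """Every partition key to query when reading the whole tenant."""
    return [f"{tenant_id}#{n}" for n in range(shard_count)]


key = sharded_partition_key("tenant3", "issue-42", 4)
fan_out = all_partition_keys("tenant3", 4)
```

Deriving the shard from the item ID keeps single-item reads cheap, because the key can be recomputed. The trade-off is that tenant-wide queries must fan out across every shard.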

The throttled keys show where both the table-level and partition-level throttling limits are exceeded. If the partition-level limits are exceeded, consider caching frequently requested items.

Testing tenant isolation

Tenant isolation is not optional. Your validation will test that tenant isolation works across all your access patterns.

One technique is to create test tenants and issue requests with different AWS Identity and Access Management (IAM) credentials mapped to those tenants. Attempts to access data outside the assigned tenant context should be blocked and should result in access denied errors.
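A common way to scope credentials to a tenant is an IAM policy that uses the `dynamodb:LeadingKeys` condition key. The following sketch builds such a policy document as a Python dictionary. The table ARN and the `tenant#` partition key prefix are placeholders, so adjust the pattern to match your own key design.

```python
import json


def tenant_policy(table_arn, tenant_id):
    """Build an IAM policy document scoped to one tenant's partition keys."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Scan is deliberately left out so full-table reads are not allowed.
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                    "dynamodb:DeleteItem",
                ],
                "Resource": [table_arn],
                "Condition": {
                    "ForAllValues:StringLike": {
                        # Only partition keys starting with this tenant's prefix match.
                        "dynamodb:LeadingKeys": [f"{tenant_id}#*"]
                    }
                },
            }
        ],
    }


# Placeholder account ID, Region, and table name.
policy = tenant_policy(
    "arn:aws:dynamodb:eu-west-1:111122223333:table/IssueTracker", "tenant1"
)
policy_json = json.dumps(policy, indent=2)
```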

The following are test cases to consider:

- Querying the table with a tenant ID not matching the authenticated context

- Performing scans without tenant ID filters

- Creating, updating, deleting items across tenant boundaries

- Varying combinations of resource ARNs and condition keys in policies

It’s important to test for both success and failure scenarios across each access pattern. The system should robustly prevent unauthorized cross-tenant access attempts.
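The success and failure cases can share one small assertion helper. The sketch below assumes boto3-style errors, where the exception carries a `response` dictionary with the error code. `FakeClientError` and the two query functions are stand-ins so the example runs offline; in a real test they would be calls made with tenant-scoped credentials.

```python
def is_access_denied(call):
    """Run call() and report whether it failed with an access denied error.

    Assumes boto3-style errors, where the exception carries a `response`
    dict with the error code under response["Error"]["Code"].
    """
    try:
        call()
    except Exception as exc:  # botocore raises ClientError for service errors
        response = getattr(exc, "response", None) or {}
        return response.get("Error", {}).get("Code") == "AccessDeniedException"
    return False


class FakeClientError(Exception):
    """Stand-in for botocore.exceptions.ClientError."""

    def __init__(self, code):
        super().__init__(code)
        self.response = {"Error": {"Code": code}}


def cross_tenant_query():
    # A real test would query tenant2's keys using tenant1's credentials.
    raise FakeClientError("AccessDeniedException")


def same_tenant_query():
    # A real test would query tenant1's keys using tenant1's credentials.
    return {"Items": []}


cross_tenant_blocked = is_access_denied(cross_tenant_query)
same_tenant_blocked = is_access_denied(same_tenant_query)
```

A failure scenario passes when the helper returns `True`, and a success scenario passes when it returns `False`.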

Extensibility

As you continue through the application lifecycle, you may find new requirements coming from your tenant usage patterns. Although you try to fully document your requirements ahead of time, invariably there will be unexpected developments.

There are three core patterns you can adopt to extend the data model for new access patterns:

- Overload existing GSIs.

- Create additional GSIs.

- Add new tables for new entities. DynamoDB provides a default quota of 2,500 tables per account in each AWS Region, adjustable up to 10,000.

You can use existing global secondary indexes (GSIs) in new ways by designing your sort keys to support additional access patterns. For instance, if you need to add ticket feedback ratings to your system, you could use the existing GSI1 by structuring the index sort key to include both resolver and feedback information. This method is cost-effective because it doesn’t require new indexes, but it can make your sort key patterns more complex.
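To make the idea concrete, the sketch below builds overloaded sort key values for GSI1. The `RESOLVER#` and `FEEDBACK#` prefixes and the attribute layout are hypothetical and not taken from the schema in Part 2. The point is only that distinct prefixes let one index serve several access patterns through a `begins_with` condition.

```python
def resolver_sort_key(resolver_id, ticket_id):
    """Existing pattern: tickets grouped by resolver."""
    return f"RESOLVER#{resolver_id}#TICKET#{ticket_id}"


def feedback_sort_key(resolver_id, rating, ticket_id):
    """New pattern: feedback grouped by resolver, then by rating."""
    return f"FEEDBACK#{resolver_id}#RATING#{rating}#TICKET#{ticket_id}"


def feedback_prefix(resolver_id, rating=None):
    """Prefix for a begins_with condition on the index sort key."""
    prefix = f"FEEDBACK#{resolver_id}#"
    if rating is not None:
        prefix += f"RATING#{rating}#"
    return prefix


ticket_key = resolver_sort_key("agent7", "T-100")
rating_key = feedback_sort_key("agent7", 5, "T-100")
```

A query for all five-star feedback for one resolver would use `feedback_prefix("agent7", 5)`, which matches `rating_key` but not `ticket_key`.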

When new access patterns emerge that can’t be satisfied by existing indexes or table structures, adding new GSIs is a viable solution. For example, if you need to support time-based reporting or agent performance tracking, you could add new GSIs specifically designed for these purposes. While this approach provides optimal query performance for new access patterns, it does increase costs and write capacity requirements.
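Adding an index to an existing table goes through the DynamoDB `UpdateTable` API. The sketch below builds the request parameters for one new GSI. The table, index, and attribute names are placeholders, and it assumes an on-demand table; a provisioned table would also need a `ProvisionedThroughput` entry inside `Create`.

```python
def add_gsi_request(table_name, index_name, pk_attr, sk_attr):
    """Build parameters for the DynamoDB UpdateTable API that create one GSI."""
    return {
        "TableName": table_name,
        # Declare the attributes used as keys of the new index.
        "AttributeDefinitions": [
            {"AttributeName": pk_attr, "AttributeType": "S"},
            {"AttributeName": sk_attr, "AttributeType": "S"},
        ],
        "GlobalSecondaryIndexUpdates": [
            {
                "Create": {
                    "IndexName": index_name,
                    "KeySchema": [
                        {"AttributeName": pk_attr, "KeyType": "HASH"},
                        {"AttributeName": sk_attr, "KeyType": "RANGE"},
                    ],
                    # KEYS_ONLY keeps the index small; project more if queries need it.
                    "Projection": {"ProjectionType": "KEYS_ONLY"},
                }
            }
        ],
    }


# Placeholder names for a time-based reporting index.
gsi_request = add_gsi_request("IssueTracker", "GSI2", "GSI2PK", "GSI2SK")

# With boto3: boto3.client("dynamodb").update_table(**gsi_request)
```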

When new features require significantly different data structures or access patterns, creating new tables might be the best solution. For example, if you want to add a knowledge base feature to your support ticket system, you could create a separate KnowledgeBase table while maintaining the same tenant isolation principles. This approach provides clear separation of concerns and independent scaling, though it does increase management overhead.
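A separate table can keep the same tenant-prefixed partition key convention. The sketch below shows `CreateTable` parameters for a `KnowledgeBase` table and a key builder. The `PK` and `SK` attribute names, the `#KB` suffix, and the on-demand billing mode are assumptions for illustration.

```python
def knowledge_base_table_request():
    """Build parameters for the DynamoDB CreateTable API."""
    return {
        "TableName": "KnowledgeBase",
        "BillingMode": "PAY_PER_REQUEST",
        "AttributeDefinitions": [
            {"AttributeName": "PK", "AttributeType": "S"},
            {"AttributeName": "SK", "AttributeType": "S"},
        ],
        "KeySchema": [
            {"AttributeName": "PK", "KeyType": "HASH"},
            {"AttributeName": "SK", "KeyType": "RANGE"},
        ],
    }


def article_key(tenant_id, article_id):
    """Primary key for one article; the tenant ID leads the partition key."""
    return {
        "PK": {"S": f"{tenant_id}#KB"},
        "SK": {"S": f"ARTICLE#{article_id}"},
    }


table_request = knowledge_base_table_request()
key = article_key("tenant1", "reset-password")
```

Because the tenant ID still leads the partition key, the same style of leading-key IAM condition used for the main table can be applied to the new table.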

Cost considerations

- Table per tenant vs. shared table: While a single table simplifies management, it can lead to noisy neighbor issues. Conversely, separate tables per tenant increase operational overhead and deployment complexity, but allow simpler isolation approaches.

- GSI proliferation costs: Each GSI increases the write costs since writes to the base table are replicated to indexes. Consider this when adding indexes for tenant-specific access patterns.

- On-demand vs. provisioned capacity: For multi-tenant applications with unpredictable workloads across tenants, on-demand capacity mode often provides better efficiency, as it prevents over-provisioning for peak utilization.

- Storage optimization: Consider how you store large attribute values, and implement Time to Live (TTL) for temporary data to minimize storage costs.
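For the TTL point above, DynamoDB expects the expiry time as a number attribute holding epoch seconds. The following sketch computes that value and builds the `UpdateTimeToLive` request. The attribute name `expiresAt`, the table name, and the seven-day retention period are placeholders.

```python
import time

SECONDS_PER_DAY = 86400


def expiry_epoch(days_to_keep, now=None):
    """Return the expiry time as epoch seconds, the format DynamoDB TTL expects."""
    now = time.time() if now is None else now
    return int(now) + days_to_keep * SECONDS_PER_DAY


def ttl_request(table_name, attribute_name="expiresAt"):
    """Build parameters for the DynamoDB UpdateTimeToLive API."""
    return {
        "TableName": table_name,
        "TimeToLiveSpecification": {
            "Enabled": True,
            "AttributeName": attribute_name,
        },
    }


# A temporary item that should expire seven days after this fixed timestamp.
expires_at = expiry_epoch(7, now=1_700_000_000)
request = ttl_request("IssueTracker")

# With boto3: boto3.client("dynamodb").update_time_to_live(**request)
```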

Conclusion

In this three-part series, we covered the full process for developing a scalable, secure, and extensible DynamoDB data model for multi-tenancy.

We arrived at the best data model by working backwards from our access patterns. This was validated through load testing while enforcing tenant isolation. Finally, we explored options for extending the model to accommodate new features. The result is a flexible database architecture that scales with growth, while maintaining tenant isolation.

DynamoDB offers many advantages to customers who use it as the primary persistence layer for multi-tenant applications. Creating a data model is just the first step; a robust tenant isolation mechanism is the next consideration. We recommend the AWS SaaS Operations workshop as a hands-on example of how to implement, test, and audit tenant isolation using DynamoDB in a multi-tenant application.

About the Authors

Dave Roberts is a Senior Solutions Architect in the AWS SaaS Factory team where he helps software vendors to build SaaS and modernize their software delivery. Outside of SaaS, he loves to listen to the guitar of Duane Allman and spends too much time troubleshooting why his self-made guitar pedals don’t work as expected.

Josh Hart is a Principal Solutions Architect at Amazon Web Services. He works with ISV customers in the UK to help them build and modernize their SaaS applications on AWS.

Samaneh Utter is an Amazon DynamoDB Specialist Solutions Architect based in Göteborg, Sweden.
