AWS announced Amazon CloudWatch Database Insights in December, 2024 for Amazon Aurora (PostgreSQL- and MySQL-compatible editions), as well as for Amazon Relational Database Service (Amazon RDS) for PostgreSQL, MySQL, MariaDB, SQL Server, and Oracle. CloudWatch Database Insights serves as a database observability solution offering a tailored experience for DevOps engineers, application developers, and database administrators. This tool is designed to accelerate database troubleshooting processes and address issues across entire database fleets, enhancing overall operational efficiency.

In this post, we show how you can use CloudWatch Database Insights for troubleshooting your RDS and Aurora resources. Our goal is to equip you with the knowledge and skills necessary to navigate complex database issues with confidence and efficiency.

Solution overview

We will demonstrate three scenarios that represent common troubleshooting challenges faced by database administrators. These demos will help you understand how to navigate and interact with CloudWatch Database Insights. Before diving into these scenarios, we recommend you review the post New Amazon CloudWatch Database Insights: Comprehensive database observability from fleets to instances for an introduction to CloudWatch Database Insights.

Prerequisites

In order to access CloudWatch Database Insights in your Management Console, you must first enable it. To do this, turn on the Advanced Mode of CloudWatch Database Insights for your RDS DB instances or Aurora DB clusters. Note that there is a cost associated with enabling this feature. Review the CloudWatch pricing page for more details.

There are two modes of operation in CloudWatch Database Insights for Amazon RDS and Aurora. The Standard Mode supports basic features around database metrics. The Advanced Mode offers additional features such as execution plan and lock analysis for SQL queries, query statistics, fleet-wide monitoring dashboards, viewing Amazon RDS events in CloudWatch, and much more. See the Modes for Database Insights documentation for a detailed comparison.

Accessing the CloudWatch Database Insights console

You can access CloudWatch Database Insights through the Amazon CloudWatch console:

- In the CloudWatch console navigation pane, choose Insights, then choose Database Insights.

- Select “Database Instance” on the left hand panel.

- Then select the RDS instance you want to further investigate.

Demo #1: Query performance issue due to a missing index

CloudWatch Database Insights can help you identify suboptimal queries and provide tuning and optimization clues. In this example, we demonstrate a query that’s experiencing slow execution due to the lack of a suitable index in the table.

Our test setup uses an Aurora PostgreSQL writer instance (t3.medium) with a sample data set. The test table, employees, contains 52 million rows and is approximately 10 GB in size.

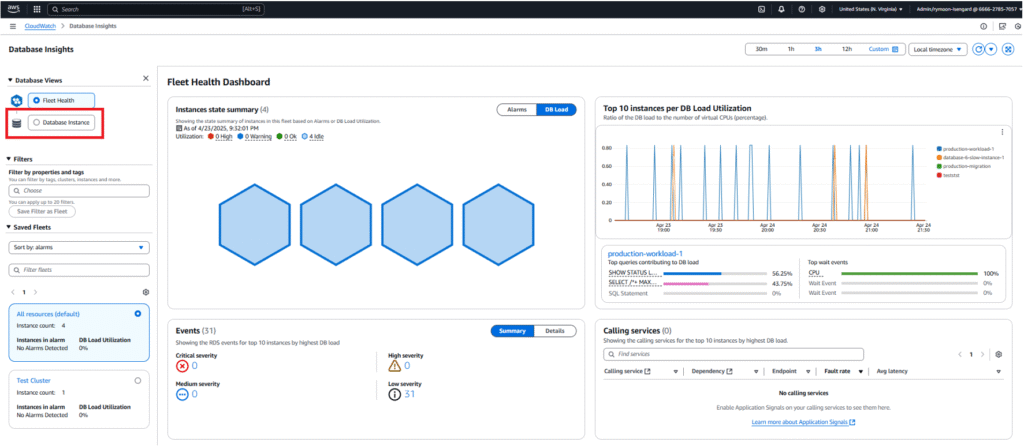

The application using this database is experiencing elevated response times. To troubleshoot this issue, we start by opening the CloudWatch Database Insights Fleet Health Dashboard, which displays one of our monitored Aurora PostgreSQL instances. One of them is experiencing a high DB load, indicated by the red hexagon.

Selecting the red hexagon gives us further information about the instance, as shown below. This includes the DB instance name, the DB load utilization metric, and additional metadata such as the engine version and compute configuration.

The Fleet Health Dashboard not only alerts us about high load in our fleet, but also displays the top queries contributing to the load. As you can see in the next view, we have a read query that is inducing the vast majority of the load.

To further analyze this query, let’s select Database Instance on the left panel under Database Views. After selecting the Database Instance view, we can see the database load metric expressed as the number of Average Active Sessions (AAS). The AAS number is well above the dashed vCPU line indicated by the arrow in the below screenshot. The active sessions are predominately utilizing the CPU as indicated by the green color within the graph. This indicates that the number of active database sessions exceeds the number of vCPUs on our database instance (t3.medium), or to put it simply, that the volume of workload is greater than what our instance can handle due to its heavy CPU utilization.

We can also observe key wait events like CPU and IO:DataFilePreFetch causing the majority of the load. These wait events are included in the key at the bottom of the graph:

Although each database engine uses unique naming conventions for the wait events, they all follow similar themes and are instrumental in diagnosing workload issues. As discussed in the wait event documentation, this particular wait event indicates that the database is either active on CPU or waiting for CPU. We can scroll further down the page to identify which query is causing this issue.

Based on the preceding view, we can confirm the information we initially saw on the Fleet Health Dashboard: the increase in load is caused by a read query. For select engines within CloudWatch Database Insights, you can review the query’s execution plan. Let’s look at the execution plan for the suboptimal query:

Based on the plan, this query has a very high cost of 1,483,029 [#1] and execution time of 139,339 milliseconds [#2]. Additionally, it is sorting through nearly 13 million rows [#3] just to get a result set of 100 rows [#4]. To further confirm that this query exhibits poor performance, we can review the statement-level metrics below:

Using the settings icon in the top right of the “Top SQL” tab, we selected additional metrics to each query. This includes Storage blk read time (ms)/call and Read time (ms)/call). It is worth noting that these metrics are aggregated over the selected time period but can still help identify the time each query is spending reading blocks from disk, in total and per each call (execution) during the problematic time range. For more information on these SQL statistics in Aurora PostgreSQL-Compatible databases, see SQL statistics for Aurora PostgreSQL.

This particular query is taking over 143 seconds per call, and as we can see based on Storage blk read time (ms)/call it is spending most of the time performing block reads from the storage. By selecting “SQL metrics” we can review the historical data for these metrics over time for a particular SQL query. Below we can see calls/sec over time for the offending read query:

You can scroll further down to see all metrics collected for the SQL query. Below we can see the storage blk read metrics which as mentioned previously are extremely high for this query.

Based on the information we gathered so far, we could likely benefit from an index on the salary column for the employees table. As shown earlier, the query uses a predicate on this column. By adding an index, we could help the database find a better execution plan for this and other queries using that column. Using DBeaver, we can review the indexes that currently exist in this table.

As we can see, the table only contains a primary key but no secondary keys (indexes). We will add an index on the salary column using the following statement, and see if this helps alleviate the pressure on our Aurora PostgreSQL instance.

After adding the index, we can see a sharp decline in the DB load utilization on our Fleet Health Dashboard. Additionally, we can observe the DB load summary has changed to Ok, indicated by the green hexagon.

We can choose the Database Instance view and see that after adding the index on March 16 at 19:56 UTC, the AAS fell below the dashed vCPU line.

Next, we can check on the problematic query to see if it has improved.

As we can see below, the query plan is showing much better performance with the newly added index. The cost has drastically decreased to .56 and the time of the query is now 10 milliseconds.

Thanks to the new secondary index, we can see the SQL metrics improve as well. We are now able to call this query much more frequently (5 calls per second) and the disk read time fell by 99.99%.

By using CloudWatch Database Insights to troubleshoot the issue, we quickly and effectively mitigated the performance problem on the database caused by the missing index on the table. It still required a small amount of database knowledge, but it was Database Insights that alerted us about the issue and provided all the data we needed to solve it as well as metrics to verify the fix.

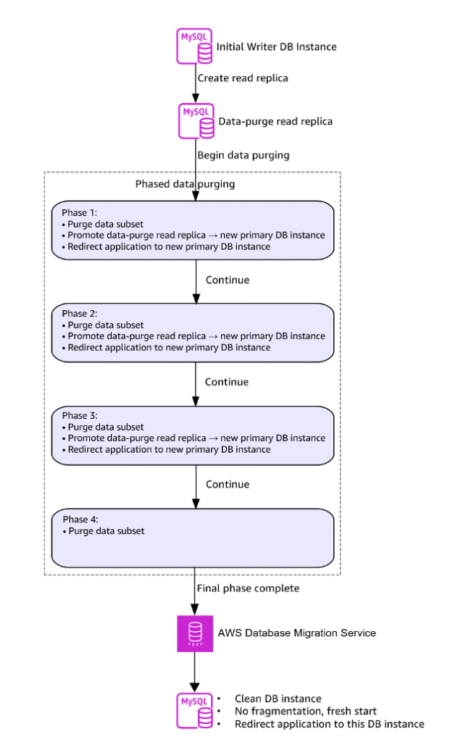

Demo #2: Reaching storage capacity limits during a database migration

Managing storage capacity is a critical aspect of database administration that, if overlooked, can lead to severe performance issues or even application downtime. Databases often grow rapidly, and without proper monitoring and management, they can unexpectedly reach storage limits. This scenario, known as a storage full state, can cause application failures, data insertion errors, and significant performance degradation. We will simulate a real-world scenario where an RDS for MySQL instance reaches its storage capacity limit during a data migration that involves downtime. We will explore how CloudWatch Database Insights can help identify and alert administrators to impending storage issues.

In this example, a development team is migrating a 35 GB MySQL database from on premises to RDS for MySQL. However, during the migration, they are alerted of application-level failures with errors similar to the following:

ERROR 2002 (HY000): Can't connect to MySQL server on 'production-migration.xxxxxx.us-east-1.rds.amazonaws.com' (115)

ERROR 1053 (08S01): Server shutdown in progress

Let’s navigate to the Fleet Health Dashboard within CloudWatch Database Insights to investigate this issue. Similar to the previous scenario, one of our instances is shown in red. However, this time the red hexagon indicates the instance is within an alarm state rather than a high DB load. This means that a previously configured CloudWatch alarm is breaching the defined threshold. To learn how to configure CloudWatch alarms within Amazon RDS, see Creating CloudWatch alarms to monitor Amazon RDS.

We can scroll further down the page and see that across our fleet, we have a total of 24 RDS events, 2 of medium severity and 22 of low severity. These events can help give us additional clues or context on what was happening on the fleet during the selected timeframe.

Let’s look at these events:

Free storage capacity for DB instance production-migration is low at 1% of the allocated storage [Allocated storage: 19.27 GB, Free storage: 176.68 MB]. The database will be shut down to prevent corruption if free storage is lower than 300.01 MB. You can increase the allocated storage to address this issue.

Diving deeper into these events reveals that our RDS instance production-migration has reached its storage capacity and will perform a shutdown to prevent corruption on the underlying Amazon Elastic Block Store (Amazon EBS) volume.

Let’s review the instance-level view to see which CloudWatch alarm was breached and check the current status of the database.

We can see that our CloudWatch alarm, which is configured on the FreeStorageSpace metric, has breached the defined threshold set at 3 GB. We recommend setting up a CloudWatch alarm on this metric if you haven’t done do already to be alerted when your storage is low. This confirms that we exhausted all allocated storage as previously suggested by the RDS events.

By navigating to Database Telemetry, we can also confirm that after the storage exhaustion, other Amazon EBS-related metrics like read IOPS and write IOPS decreased to 0, which is expected if our database has been shut down.

Lastly, before mitigating the issue, we can check the Events section to see all the events that occurred on this instance (production-migration). By doing this, we can confirm that the database was indeed shut down.

DB instance has reached the storage-full threshold, and the database has been shut down. You can increase the allocated storage to address this issue.

These details explain why the application is now displaying connection errors. As mentioned by the RDS event, we have to increase the allocated storage on the database instance to allow it to start up. We can navigate to the Amazon RDS console and select the instance production-migration, which is currently in the storage-full status.

The next step is to increase the storage by at least 10% in order for the modification to be successful. In this example, let’s increase the storage from 20 GiB to 40 GiB to allow the migration of approximately 35 GB data set to complete without exhausting the database’s allocated storage. For more details on the process and considerations for storage resizing, see Increasing DB instance storage capacity.

After performing the modification, we can use CloudWatch Database Insights to confirm that our RDS MySQL instance production-migration is up and running, and the alarm state is gone.

By checking the Database Telemetry view, we can also review storage-related CloudWatch metrics and confirm that there’s a healthy amount of free storage on the instance. Other metrics, such as CPU utilization and freeable memory, also look nominal.

Demo #3: Database restarts due to out-of-memory issues

Stability issues due to out-of-memory (OOM) errors are another common scenario many DBAs will be familiar with. We will simulate this issue and demonstrate techniques to identify and mitigate it. Let’s start by reviewing the Fleet Health Dashboard.

As seen on the Fleet Health Dashboard, we have an RDS instance that shows a high DB load warning, indicated by the red hexagon. In the right pane (“Top 10 instances …”), we can see that the instance production-workload-1 is experiencing extremely high levels of DB load. Let’s navigate to the alarms portion of the Fleet Health Dashboard.

In the context of this issue, a red hexagon indicates that the instance has one or more active CloudWatch alarms. In our example, the instance production-workload-1 has an active alarm on the EngineUptime CloudWatch metric, which has breached the defined threshold. If you would like to be alerted when there is an availability impacting event to your database than we recommend configuring a CloudWatch alarm on the EngineUptime metric. The breach of this alarm indicates availability issues on the instance, which can be anything that causes the database to restart and thus reset the uptime metric. To confirm the potential cause of this issue, let’s scroll further down the page to review the instance’s memory and CPU metrics and see if this can give us any clues.

As you can see in the following screenshot, the blue line on the Freeable Memory graph indicates that the memory levels are jumping between extreme values (from memory almost full to memory almost empty). This is a potential symptom of repeated reboots. To confirm this, we can select the Database Instance view in the left pane.

We can see an increase in database load and we notice that the CloudWatch alarm ProductionClusterUptime is in the alarm state, as previously seen on the Fleet Health Dashboard. Scrolling further down, we can see the top queries that are driving database load.

It appears that two different types of SELECT queries are the main load contributors in this database. Additionally, we can navigate to the Database Telemetry tab to review metrics for this instance and gather more details on how these queries are impacting the database.

Similar to what we saw on the Fleet Health Dashboard, the memory is frequently dropping to levels close to 0. We are also experiencing missing data points within other metrics, which is another potential indication of stability issues. To confirm that low memory is causing the issue, we can navigate to the Events tab of Database Telemetry to see the instance’s specific RDS events.

As seen in the events, the database is restarting repeatedly, and we have periodic messages warning us about the database process being stopped by the operating system due to excessive memory consumption. To temporarily alleviate this issue, we will create an instance using a larger instance class (db.r8g.24xlarge) and fail over to it to provide the workload with more resources. As seen in the following screenshot, this adds an additional instance to our fleet (production-workload-2). After the failover, it immediately switches to the Ok state, indicating that the database load is acceptable.

Additionally, in the preceding graphs, we can observe that the DB load for the original instance production-workload-1 decreases after the failover. To confirm that this new instance is not experiencing OOM reboots, we can navigate to the Database Instance view.

As observed in the preceding screenshot, we’re still running the same queries, but the load is much lower due to the increase in resources (vCPU and memory). We can navigate to the Database Telemetry tab to confirm that the memory is no longer dropping to near-zero.

We now have an acceptable amount of memory, and this instance size change should be able to properly serve the workload until further investigation is done with the application team to determine the cause of the increase in traffic. When the load returns to normal and it’s safe to downsize the instance, we can perform a failover back to our original instance (production-workload-1) and delete the large instance (production-workload-2).

Of course, although increasing the instance size is a good temporary solution for a query issue such as this, looking into query optimization might be the logical next step.

Conclusion

In this post, we demonstrated how you can troubleshoot your databases using CloudWatch Database Insights. We recommend exploring CloudWatch Database Insights further to familiarize yourself with this feature.

About the Authors

Nirupam Datta is a Senior Cloud Support DBE at AWS. He has been with AWS for over 5 years. With over 12 years of experience in database engineering and infra-architecture, Nirupam is also a subject matter expert in the Amazon RDS core systems, Amazon RDS for SQL Server, and Amazon Aurora MySQL. He provides technical assistance to customers, guiding them to migrate, optimize, and navigate their journey in the AWS Cloud.

Nirupam Datta is a Senior Cloud Support DBE at AWS. He has been with AWS for over 5 years. With over 12 years of experience in database engineering and infra-architecture, Nirupam is also a subject matter expert in the Amazon RDS core systems, Amazon RDS for SQL Server, and Amazon Aurora MySQL. He provides technical assistance to customers, guiding them to migrate, optimize, and navigate their journey in the AWS Cloud.

Ryan Moore is a Cloud Support DBE II who has worked within the AWS database team for 2 years. Ryan is an Aurora MySQL and RDS MySQL subject matter expert that specializes in enabling customers to build performant, scalable, and secure architectures within the AWS Cloud.

Ryan Moore is a Cloud Support DBE II who has worked within the AWS database team for 2 years. Ryan is an Aurora MySQL and RDS MySQL subject matter expert that specializes in enabling customers to build performant, scalable, and secure architectures within the AWS Cloud.

Cade Kettner is a Cloud Support Engineer who has worked with the AWS Database Team for nearly 2 years. In his role, he provides technical assistance with AWS Services including RDS MySQL, RDS MariaDB, RDS SQL Server, Aurora MySQL, and AWS DMS to help troubleshoot technical issues and provide tailored solutions for customers.

Cade Kettner is a Cloud Support Engineer who has worked with the AWS Database Team for nearly 2 years. In his role, he provides technical assistance with AWS Services including RDS MySQL, RDS MariaDB, RDS SQL Server, Aurora MySQL, and AWS DMS to help troubleshoot technical issues and provide tailored solutions for customers.

Source: Read More