AI models today are expected to handle complex tasks such as solving mathematical problems, interpreting logical statements, and assisting with enterprise decision-making. Building such models demands the integration of mathematical reasoning, scientific understanding, and advanced pattern recognition. As the demand for intelligent agents in real-time applications, like coding assistants and business automation tools, continues to grow, there is a pressing need for models that combine strong performance with efficient memory and token usage, making them viable for deployment in practical hardware environments.

A central challenge in AI development is the resource intensity of large-scale reasoning models. Despite their strong capabilities, these models often require significant memory and compute, which creates a gap between what advanced models can achieve and what users can realistically deploy. Even well-resourced enterprises may find it unsustainable to run models that demand dozens of gigabytes of memory or incur high inference costs. The issue is not just building smarter models, but ensuring they are efficient enough to deploy on real-world platforms.

High-performing models such as QWQ-32b, o1-mini, and EXAONE-Deep-32b excel at mathematical reasoning and academic benchmarks. However, their dependence on high-end GPUs and their high token consumption limit their use in production settings. These models highlight the ongoing trade-off in AI deployment: high accuracy at the cost of scalability and efficiency.

To address this gap, researchers at ServiceNow introduced Apriel-Nemotron-15b-Thinker. The model has 15 billion parameters, a relatively modest size compared to its high-performing counterparts, yet it performs on par with models roughly twice its size. Its primary advantages are its memory footprint and token efficiency: while delivering competitive results, it requires nearly half the memory of QWQ-32b and EXAONE-Deep-32b. This improves operational efficiency in enterprise environments and makes it feasible to integrate high-performance reasoning models into real-world applications without large-scale infrastructure upgrades.
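The "nearly half the memory" figure is consistent with simple arithmetic on parameter counts. The sketch below is a back-of-the-envelope estimate, not a measurement from the article: it assumes 16-bit weights (2 bytes per parameter) and counts weights only, ignoring the KV cache, activations, and runtime overhead. The function name `weight_memory_gb` is illustrative.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Assumption: 2 bytes per parameter (bf16/fp16). Real deployments also
    need memory for the KV cache and activations, which is not counted here.
    """
    return num_params * bytes_per_param / 1e9


# Parameter counts are read off the model names (15b and 32b).
apriel_gb = weight_memory_gb(15e9)
baseline_gb = weight_memory_gb(32e9)
ratio = apriel_gb / baseline_gb

print(f"15B weights: ~{apriel_gb:.0f} GB")
print(f"32B weights: ~{baseline_gb:.0f} GB")
print(f"15B / 32B memory ratio: {ratio:.2f}")
```

Under these assumptions the 15B model needs a little under half the weight memory of a 32B model, which matches the article's claim in spirit. Quantization or a different precision would change the absolute numbers but not the ratio.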

Apriel-Nemotron-15b-Thinker was developed with a structured three-stage training approach, with each stage designed to enhance a specific aspect of the model’s reasoning capabilities. In the initial stage, Continual Pre-training (CPT), the model was exposed to over 100 billion tokens. These tokens were not generic text but carefully selected examples from domains that require deep reasoning, such as mathematical logic, programming challenges, scientific literature, and logical deduction tasks. This exposure provided the model’s foundational reasoning capabilities. The second stage, Supervised Fine-Tuning (SFT), used 200,000 high-quality demonstrations. These examples further calibrated the model’s responses to reasoning challenges, improving performance on tasks that require accuracy and attention to detail. The final stage, GRPO (Group Relative Policy Optimization), a reinforcement learning method, refined the model’s outputs by optimizing alignment with expected results across key tasks. Together, the three stages build reasoning ability first, then calibrate responses, and finally align outputs with expected results.
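To make the GRPO stage more concrete, here is a minimal sketch of the group-relative advantage computation that gives the method its name: several responses are sampled for the same prompt, each is scored, and each score is normalized against the group's mean and standard deviation. This illustrates the general technique only. The article does not describe ServiceNow's reward functions, group size, or hyperparameters, so the rewards, the `eps` term, and the use of the population standard deviation below are all assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own sampling group.

    Responses that score above the group mean get a positive advantage,
    those below get a negative one. `eps` (an assumed stabilizer) avoids
    division by zero when every response receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt
# (1.0 = matched the expected result, 0.0 = did not).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Because the baseline comes from the group itself, this formulation does not need a separately trained value model, which is one reason it is attractive for reasoning tasks with checkable answers.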

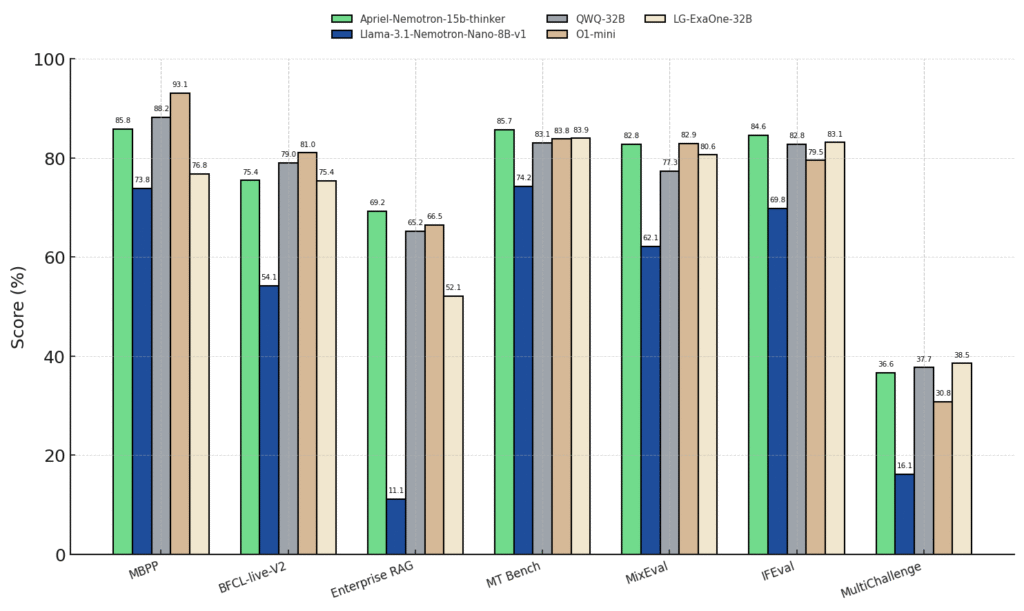

On enterprise-oriented benchmarks such as MBPP, BFCL, Enterprise RAG, MT Bench, MixEval, IFEval, and Multi-Challenge, the model delivered competitive or superior performance compared to larger models. In terms of production efficiency, it consumed 40% fewer tokens than QWQ-32b, significantly lowering inference costs. It achieved this with approximately 50% of the memory needed by QWQ-32b and EXAONE-Deep-32b, a substantial improvement in deployment feasibility. On academic benchmarks such as AIME-24, AIME-25, AMC-23, MATH-500, and GPQA, the model also held its own, often equaling or surpassing larger models while being significantly lighter in computational demand.
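The cost impact of the 40% token reduction can be sketched with simple arithmetic. Only the 40% figure comes from the article; the per-request token count and the price per million tokens below are hypothetical placeholders, and the sketch assumes cost scales linearly with generated tokens.

```python
TOKEN_REDUCTION = 0.40  # from the article: 40% fewer tokens than QWQ-32b


def inference_cost(output_tokens: int, price_per_million: float) -> float:
    """Cost of generating `output_tokens` at a flat per-token price."""
    return output_tokens / 1_000_000 * price_per_million


# Hypothetical workload: neither number is reported in the article.
baseline_tokens = 10_000      # tokens a larger model spends on one task
price_per_million = 2.0       # assumed price per million generated tokens

apriel_tokens = round(baseline_tokens * (1 - TOKEN_REDUCTION))
baseline_cost = inference_cost(baseline_tokens, price_per_million)
apriel_cost = inference_cost(apriel_tokens, price_per_million)
savings = 1 - apriel_cost / baseline_cost

print(f"baseline: {baseline_tokens} tokens, cost {baseline_cost:.4f}")
print(f"apriel:   {apriel_tokens} tokens, cost {apriel_cost:.4f}")
print(f"savings:  {savings:.0%}")
```

At equal per-token prices the savings simply mirror the token reduction. In practice a smaller model may also be cheaper per token, so the real difference could be larger.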

Several Key Takeaways from the Research on Apriel-Nemotron-15b-Thinker:

- Apriel-Nemotron-15b-Thinker has 15 billion parameters, significantly smaller than QWQ-32b or EXAONE-Deep-32b, but performs competitively.

- Uses a three-stage training pipeline: 100B+ tokens in CPT, 200K demonstrations in SFT, and a final GRPO refinement.

- Consumes around 50% less memory than QWQ-32b, allowing for easier deployment on enterprise hardware.

- Uses 40% fewer tokens in production tasks than QWQ-32b, reducing inference cost and increasing speed.

- Outperforms or equals larger models on MBPP, BFCL, Enterprise RAG, and academic tasks like GPQA and MATH-500.

- Optimized for Agentic and Enterprise tasks, suggesting utility in corporate automation, coding agents, and logical assistants.

- Designed specifically for real-world use, avoiding over-reliance on lab-scale compute environments.

Check out the model on Hugging Face.

The post ServiceNow AI Released Apriel-Nemotron-15b-Thinker: A Compact Yet Powerful Reasoning Model Optimized for Enterprise-Scale Deployment and Efficiency appeared first on MarkTechPost.
