Building serverless AI agents has recently become a lot simpler. With the Langbase Docs MCP server, you can instantly connect AI models to Langbase documentation – making it easy to build composable, agentic AI systems with memory without complex infrastructure.

In this guide, you’ll learn how to set up the Langbase Docs MCP server inside Cursor (an AI code editor), and build a summary AI agent that uses Langbase docs as live, on-demand context.

Prerequisites

Before we begin creating the agent, you’ll need to have a few things set up and some tools ready to go.

In this tutorial, I’ll be using the following tech stack:

Langbase – the platform to build and deploy your serverless AI agents.

Langbase SDK – a TypeScript AI SDK, designed to work with JavaScript, TypeScript, Node.js, Next.js, React, and the like.

Cursor – an AI code editor, similar to VS Code.

You’ll also need to:

- Sign up on Langbase to get access to the API key.

What is Model Context Protocol (MCP)?

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide external context to large language models (LLMs). With MCP, developers can connect AI models to various tools and data sources like documentation, APIs, and databases – in a clean, consistent way.

Instead of relying solely on prompts, MCP allows LLMs to call custom tools (like documentation fetchers or API explorers) during a conversation.
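To make the idea of a tool call concrete, here is a minimal sketch of the kind of message an MCP client sends. MCP uses JSON-RPC 2.0 as its wire format, and tools are invoked with the `tools/call` method. The tool name below is one that appears later in this guide; the `query` argument and the `buildToolCall` helper are hypothetical and only there for illustration.

```typescript
// Minimal shape of a JSON-RPC 2.0 request, the message format MCP builds on.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

// Hypothetical helper: build a "tools/call" request for a named tool.
function buildToolCall(
  id: number,
  toolName: string,
  args: Record<string, unknown>
): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

// The "query" argument is an assumption; a real tool defines its own input schema.
const request = buildToolCall(1, "docs_route_finder", { query: "memory API" });
console.log(JSON.stringify(request, null, 2));
```

In practice the editor builds and sends these messages for you; the sketch only shows what sits underneath a tool call.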

MCP General Architecture

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers.

Here’s what the general architecture looks like:

The Model Context Protocol architecture lets AI clients (like Claude, IDEs, and developer tools) securely connect to multiple local or remote data sources in real time. MCP clients communicate with one or more MCP servers, which act as bridges to structured data – whether from local files, databases, or remote APIs.

This setup allows AI models to retrieve fresh, relevant context from different sources seamlessly, without embedding data directly into the model.
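The host-to-many-servers relationship can be sketched with a small in-memory model. This is not the MCP SDK: `MockServer`, `MockHost`, and the tool names `fetch_doc` and `query_api` are all hypothetical stand-ins, and real servers would run as separate processes or remote services rather than plain objects.

```typescript
// A tool is an async function from string arguments to text.
type ToolHandler = (args: Record<string, string>) => Promise<string>;

// Stand-in for an MCP server: a name plus the tools it exposes.
interface MockServer {
  name: string;
  tools: Map<string, ToolHandler>;
}

// Stand-in for the host: it holds several servers and routes calls by tool name.
class MockHost {
  private servers: MockServer[] = [];

  connect(server: MockServer): void {
    this.servers.push(server);
  }

  // Collect the tool names of every connected server, in connection order.
  listTools(): string[] {
    const names: string[] = [];
    for (const server of this.servers) {
      server.tools.forEach((_handler, toolName) => names.push(toolName));
    }
    return names;
  }

  // Invoke the tool on the first connected server that exposes it.
  async callTool(tool: string, args: Record<string, string>): Promise<string> {
    for (const server of this.servers) {
      const handler = server.tools.get(tool);
      if (handler) return handler(args);
    }
    throw new Error(`No connected server exposes tool "${tool}"`);
  }
}

const docsServer: MockServer = {
  name: "docs",
  tools: new Map<string, ToolHandler>([
    ["fetch_doc", async (args) => `doc for ${args.topic}`],
  ]),
};

const apiServer: MockServer = {
  name: "api",
  tools: new Map<string, ToolHandler>([
    ["query_api", async (args) => `api result for ${args.path}`],
  ]),
};

const host = new MockHost();
host.connect(docsServer);
host.connect(apiServer);

host.callTool("fetch_doc", { topic: "memory" }).then((text) => console.log(text));
```

The point of the design is that the model only sees a flat list of tools, while the host decides which server actually handles each call.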

Anthropic’s Role in Launching MCP

Anthropic introduced MCP as part of their vision to make LLMs tool-augmented by default. MCP was originally built to expand Claude’s capabilities, but it’s now available more broadly and supported in developer-friendly environments like Cursor and Claude Desktop.

By standardizing how tools integrate into LLM workflows, MCP makes it easier for developers to extend AI systems without custom plugins or API hacks.

Cursor AI Code Editor

Cursor is a developer-first AI code editor that integrates LLMs (like Claude, GPT, and more) directly into your IDE. Cursor supports MCP, meaning you can quickly attach custom tool servers – like the Langbase Docs MCP server – and make them accessible as AI-augmented tools while you code.

Think of Cursor as VS Code meets AI agents – with built-in support for smart tools like docs fetchers and code examples retrievers.

What is Langbase and Why is its Docs MCP Server Useful?

Langbase is a powerful serverless AI platform for building AI agents with memory. It helps developers build AI-powered apps and assistants by connecting LLMs directly to their data, APIs, and documentation.

The Langbase Docs MCP Server provides access to the Langbase documentation and API reference. This server allows you to use the Langbase documentation as context for your LLMs.

By connecting this server to Cursor (or any MCP-supported IDE), you can make Langbase documentation available to your AI agents on demand. This means less context-switching, faster workflows, and smarter assistance when building serverless agentic applications.

How to Set Up the Langbase Docs MCP Server in Cursor

Let’s walk through setting up the server step-by-step.

1. Open Cursor Settings

Launch Cursor and open Settings. From the left sidebar, select MCP.

2. Add a New MCP Server

Click the yellow + Add new global MCP server button.

3. Configure the Langbase Docs MCP Server

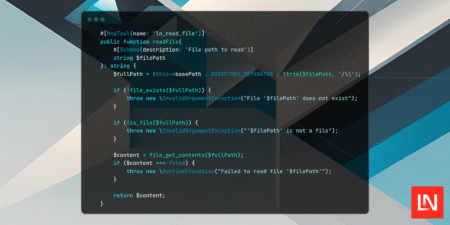

Paste the following configuration into the mcp.json file:

    {
      "mcpServers": {
        "Langbase": {
          "command": "npx",
          "args": ["@langbase/cli", "docs-mcp-server"]
        }
      }
    }
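If Cursor does not pick up the server, a malformed config file is a common culprit. The following is a rough sketch of a sanity check for the two fields used in the configuration in this step (`command` and `args`). The `checkMcpConfig` function is hypothetical, not part of Cursor or the Langbase CLI, and it checks only these two fields; Cursor may accept other fields that this sketch ignores.

```typescript
// Shape of one entry under "mcpServers", limited to the fields this guide uses.
interface McpServerEntry {
  command: string;
  args: string[];
}

// Hypothetical helper: return a list of problems; an empty list means
// the config has the expected basic shape.
function checkMcpConfig(raw: string): string[] {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch (err) {
    return ["file is not valid JSON"];
  }
  if (typeof parsed !== "object" || parsed === null) {
    return ["top level must be an object"];
  }
  const servers = (parsed as { mcpServers?: unknown }).mcpServers;
  if (typeof servers !== "object" || servers === null) {
    return ['missing "mcpServers" object'];
  }
  const problems: string[] = [];
  const byName = servers as Record<string, unknown>;
  for (const name of Object.keys(byName)) {
    const entry = byName[name] as Partial<McpServerEntry> | null;
    if (!entry || typeof entry.command !== "string") {
      problems.push(`${name}: "command" must be a string`);
    }
    if (
      !entry ||
      !Array.isArray(entry.args) ||
      !entry.args.every((arg) => typeof arg === "string")
    ) {
      problems.push(`${name}: "args" must be an array of strings`);
    }
  }
  return problems;
}

const sampleConfig = JSON.stringify({
  mcpServers: {
    Langbase: { command: "npx", args: ["@langbase/cli", "docs-mcp-server"] },
  },
});

const issues = checkMcpConfig(sampleConfig);
console.log(issues.length === 0 ? "config looks fine" : issues.join("\n"));
```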

4. Start the Langbase Docs MCP Server

In your terminal, run:

pnpm add @langbase/cli

And then run this command:

pnpm dlx @langbase/cli docs-mcp-server

5. Enable the MCP Server in Cursor

In the MCP settings, make sure the Langbase server is toggled to Enabled.

How to Use Langbase Docs MCP Server in Cursor AI

Once everything’s all set up, Cursor’s AI agent can now call Langbase docs tools like:

- docs_route_finder

- sdk_documentation_fetcher

- examples_tool

- guide_tool

- api_reference_tool

For example, you can ask the Cursor agent:

“Show me the API reference for Langbase Memory”

or

“Find a code example of creating an AI agent pipe in Langbase”

The AI will use the Docs MCP server to fetch precise documentation snippets – directly inside Cursor.

Use Case: Build a Summary AI Agent with Langbase Docs MCP Server

Let’s build a summary agent that summarizes context using the Langbase SDK, powered by the Langbase Docs MCP server inside the Cursor AI code editor.

1. Open an empty folder in Cursor and launch the chat panel (Cmd+Shift+I on Mac or Ctrl+Shift+I on Windows).

2. Switch to Agent mode from the mode selector and pick your preferred LLM (we’ll use Claude 3.5 Sonnet for this demo).

3. In the chat input, enter the following prompt:

“In this directory, using Langbase SDK, create the summary pipe agent. Use TypeScript and pnpm to run the agent in the terminal.”

Cursor will automatically invoke MCP calls, generate the required files and code using Langbase Docs as context, and suggest changes. Accept the changes, and your summary agent will be ready. You can run the agent using the commands provided by Cursor and view the results.
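The code Cursor generates will vary from run to run, so the following is only a rough sketch of how a summary agent is typically structured, not the output you should expect. It deliberately avoids calling the Langbase SDK: `AgentRunner` is a hypothetical abstraction, and `stubRunner` is a placeholder that lets the sketch run offline. In a real project the runner would wrap the Langbase SDK client, with the exact method names taken from the Langbase docs that the MCP server retrieves.

```typescript
// One chat message in the common role/content shape used by chat-style LLM APIs.
interface Message {
  role: "system" | "user";
  content: string;
}

// Hypothetical abstraction over whatever actually runs the agent.
type AgentRunner = (messages: Message[]) => Promise<string>;

// Build the messages for a summary request: instructions first, then the text.
function buildSummaryMessages(text: string): Message[] {
  return [
    {
      role: "system",
      content: "You summarize the user's text in two sentences or fewer.",
    },
    { role: "user", content: text },
  ];
}

// Validate the input, then hand the messages to the runner.
async function summarize(run: AgentRunner, text: string): Promise<string> {
  if (text.trim().length === 0) {
    throw new Error("Nothing to summarize");
  }
  return run(buildSummaryMessages(text));
}

// Placeholder runner (no LLM involved): returns the first sentence of the user text.
const stubRunner: AgentRunner = async (messages) => {
  const userText = messages
    .filter((message) => message.role === "user")
    .map((message) => message.content)
    .join(" ");
  return userText.split(". ")[0];
};

summarize(
  stubRunner,
  "MCP standardizes tool access. It follows a client-server design."
).then((summary) => console.log(summary));
```

Keeping the runner behind a small function type makes it easy to swap the stub for a real client later without touching the prompt-building logic.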

Here’s a demo video of creating this summary agent with a single prompt and Langbase Docs MCP server:

By combining Langbase’s Docs MCP server with Cursor AI, you’ve learned how to build serverless AI agents in minutes – all without leaving your IDE.

If you’re building AI agents, tools, or apps with Langbase, this is one of the fastest ways to simplify your development process.

Happy building! 🚀

Connect with me by 🙌:

Subscribing to my YouTube channel, if you want to learn about AI and agents.

Subscribing to my free newsletter “The Agentic Engineer”, where I share the latest AI and agents news, trends, jobs, and much more.

Following me on X (Twitter).

Source: freeCodeCamp Programming Tutorials: Python, JavaScript, Git & More