Deploying large language model (LLM)-based agents in production often exposes critical reliability issues. Identifying why agents fail and building in proactive self-correction mechanisms is essential. A recent analysis by Atla of the publicly available τ-Bench benchmark offers granular insight into agent failures, moving beyond traditional aggregate success metrics and highlighting Atla’s EvalToolbox approach.

Conventional evaluation practices typically rely on aggregate success rates, which offer little actionable insight into why an agent fails. Diagnosing issues then requires manually reviewing extensive logs, an approach that becomes impractical as deployments scale. A success rate of, say, 50% says nothing about the nature of the unsuccessful half of the interactions, which complicates troubleshooting.

To address these evaluation gaps, Atla conducted a detailed analysis of τ-Bench, a benchmark designed to examine tool-agent-user interactions. The analysis systematically identified and categorized agent workflow failures within τ-retail, a subset focused on retail customer service interactions.


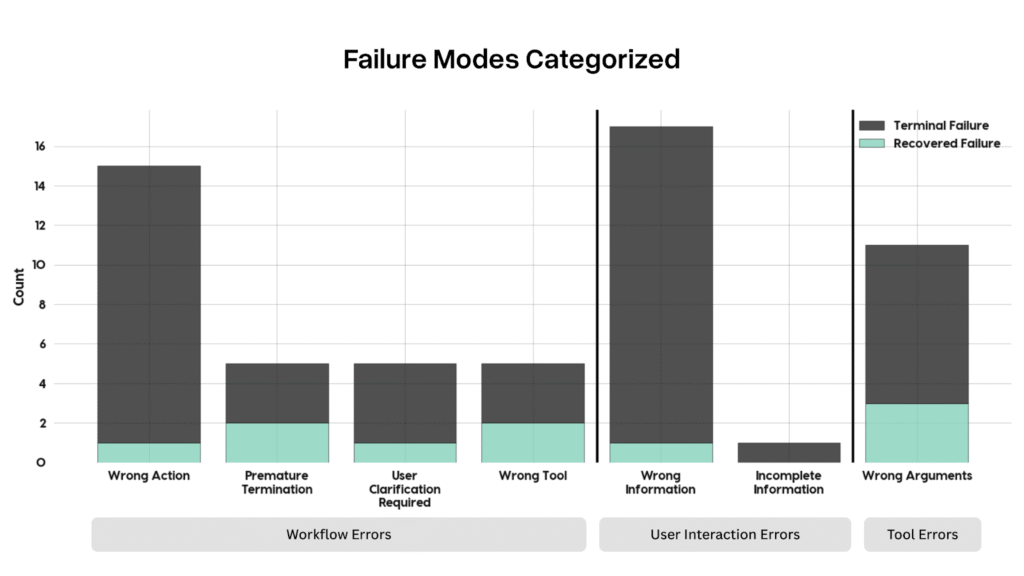

A detailed evaluation of τ-retail highlighted key failure categories:

- Workflow Errors, predominantly characterized by “Wrong Action” scenarios, where agents failed to execute necessary tasks.

- User Interaction Errors, particularly the provision of “Wrong Information,” emerged as the most frequent failure type.

- Tool Errors, where correct tools were utilized incorrectly due to erroneous parameters, constituted another significant failure mode.

A critical distinction drawn in this analysis is between terminal (irrecoverable) failures and recoverable failures. Terminal failures significantly outnumber recoverable ones, which illustrates the limits of agent self-correction without guided intervention.
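To make the taxonomy concrete, here is a minimal sketch of how labeled failures could be tallied alongside the aggregate success rate. The class and function names (`FailureCategory`, `Failure`, `Episode`, `summarize`) are hypothetical and are not part of τ-Bench or EvalToolbox; they only mirror the categories and the terminal/recoverable split described above.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class FailureCategory(Enum):
    # Hypothetical labels mirroring the three categories named in the article.
    WORKFLOW = "workflow"                  # e.g. "Wrong Action"
    USER_INTERACTION = "user_interaction"  # e.g. "Wrong Information"
    TOOL = "tool"                          # e.g. correct tool, erroneous parameters


@dataclass(frozen=True)
class Failure:
    category: FailureCategory
    label: str
    recoverable: bool  # False means the failure was terminal


@dataclass(frozen=True)
class Episode:
    succeeded: bool
    failures: Tuple[Failure, ...] = ()


def summarize(episodes):
    """Return the aggregate success rate plus a per-category failure breakdown."""
    total = len(episodes)
    success_rate = sum(e.succeeded for e in episodes) / total if total else 0.0
    by_category = Counter()
    by_recoverability = Counter()
    for episode in episodes:
        for failure in episode.failures:
            by_category[(failure.category, failure.label)] += 1
            key = "recoverable" if failure.recoverable else "terminal"
            by_recoverability[key] += 1
    return {
        "success_rate": success_rate,
        "by_category": by_category,
        "by_recoverability": by_recoverability,
    }
```

Two runs with the same success rate can produce very different `by_category` and `by_recoverability` counts, which is the information an aggregate metric alone does not surface.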

Here’s an example in which an agent commits a “Wrong Information” failure:

To address these challenges, Atla integrated Selene, an evaluation model embedded directly into agent workflows. Selene monitors each interaction step, identifying and correcting errors in real time. Practical demonstrations show marked improvements: agents using Selene promptly corrected their initial errors, improving overall accuracy and user experience.

Illustratively, in scenarios involving “Wrong Information”:

- Agents operating without Selene consistently failed to recover from initial errors, resulting in low user satisfaction.

- Selene-equipped agents effectively identified and rectified errors, significantly enhancing user satisfaction and accuracy of responses.

EvalToolbox thus transitions from manual, retrospective error assessments toward automated, immediate detection and correction. It accomplishes this through:

- Automated categorization and identification of common failure modes.

- Real-time, actionable feedback upon detecting errors.

- Dynamic self-correction facilitated by incorporating real-time feedback directly into agent workflows.
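The loop described above can be sketched roughly as follows. This is an illustrative pattern only, not Selene's or EvalToolbox's actual API, which the article does not show: `generate` and `evaluate` are hypothetical callables standing in for the agent and the evaluation model, and `Verdict` and `max_retries` are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Verdict:
    ok: bool
    critique: str = ""  # feedback passed back to the agent when ok is False


def run_step_with_evaluation(
    generate: Callable[[str, Optional[str]], str],  # hypothetical agent call
    evaluate: Callable[[str, str], Verdict],        # hypothetical evaluator call
    user_message: str,
    max_retries: int = 2,
) -> Tuple[str, int]:
    """Generate a response, evaluate it, and regenerate with feedback if needed.

    Returns the final response and the number of regenerations performed.
    """
    feedback: Optional[str] = None
    response = generate(user_message, feedback)
    for retries_used in range(max_retries):
        verdict = evaluate(user_message, response)
        if verdict.ok:
            return response, retries_used
        # Feed the evaluator's critique back into the next generation attempt.
        feedback = verdict.critique
        response = generate(user_message, feedback)
    # Retry budget exhausted: return the last attempt without re-evaluating it.
    return response, max_retries
```

Capping the number of retries is a design choice made here to keep the sketch bounded; a production system would also need to decide what to do when the budget runs out, for example escalating to a human.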

Future enhancements include broader applicability across diverse agent functions such as coding tasks, specialized domain implementations, and the establishment of standardized evaluation-in-the-loop protocols.

Integrating evaluation directly within agent workflows through τ-Bench analysis and EvalToolbox represents a practical, automated approach to mitigating reliability issues in LLM-based agents.

Note: Thanks to the Atla AI team for the thought leadership and resources for this article. The Atla AI team supported this content.

The post Diagnosing and Self-Correcting LLM Agent Failures: A Technical Deep Dive into τ-Bench Findings with Atla’s EvalToolbox appeared first on MarkTechPost.
