Large language models can generate fluent responses, emulate tone, and even follow complex instructions; however, they struggle to retain information across multiple sessions. This limitation becomes more pressing as LLMs are integrated into applications that require long-term engagement, such as personal assistance, health management, and tutoring. In real-life conversations, people recall preferences, infer behaviors, and construct mental maps over time. A person who mentioned their dietary restrictions last week expects those to be taken into account the next time food is discussed. Without mechanisms to store and retrieve such details across conversations, AI agents fail to offer consistency and reliability, undermining user trust.

The central challenge with today’s LLMs lies in their inability to persist relevant information beyond the boundaries of a conversation’s context window. These models operate within a fixed token budget, sometimes as large as 128K or 200K tokens, but when interactions span days or weeks, even these expanded windows fall short. More critically, the quality of attention degrades over distant tokens, making it harder for models to locate or use earlier context effectively. A user may bring up personal details, switch to a completely different topic, and return to the original subject much later. Without a robust memory system, the AI will likely overlook the previously mentioned facts. This creates friction, especially in scenarios where continuity is crucial. The issue is not just forgetting information, but also retrieving the wrong information from irrelevant parts of the conversation history due to token overflow and thematic drift.

Several attempts have been made to tackle this memory gap. Some systems rely on retrieval-augmented generation (RAG) techniques, which utilize similarity searches to retrieve relevant text chunks during a conversation. Others employ full-context approaches that simply refeed the entire conversation into the model, which increases latency and token costs. Proprietary memory solutions and open-source alternatives try to improve upon these by storing past exchanges in vector databases or structured formats. However, these methods often lead to inefficiencies, such as retrieving excessive irrelevant information or failing to consolidate updates in a meaningful manner. They also lack effective mechanisms to detect conflicting data or prioritize newer updates, leading to fragmented memories that hinder reliable reasoning.
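To make the RAG-style baseline concrete, here is a minimal sketch of similarity-based retrieval over past conversation chunks. It is an illustration only: the `embed`, `cosine`, and `retrieve` helpers are hypothetical names, and the bag-of-words vector stands in for the learned embedding model a real system would use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (assumption: stands in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k stored chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


# Hypothetical conversation history spanning several topics.
history = [
    "I am vegetarian and allergic to peanuts.",
    "Can you recommend a good science fiction novel?",
    "My flight to Lisbon leaves on Friday morning.",
]
top = retrieve("What vegetarian food should I cook tonight?", history, k=1)
print(top)
```

Note that this kind of retrieval returns raw chunks as they were said. Nothing in it merges duplicates, resolves contradictions, or prefers newer statements, which is the gap described above.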

A research team from Mem0.ai developed a new memory-focused system called Mem0. This architecture introduces a dynamic mechanism to extract, consolidate, and retrieve information from conversations as they happen. The design enables the system to selectively identify useful facts from interactions, evaluate their relevance and uniqueness, and integrate them into a memory store that can be consulted in future sessions. The researchers also proposed a graph-enhanced version, Mem0g, which builds upon the base system by structuring information in relational formats. Both systems were tested using the LOCOMO benchmark and compared against six categories of baselines, including memory-augmented agents, RAG methods with varying configurations, full-context approaches, and both open-source and proprietary memory tools. According to the researchers, Mem0 consistently achieved superior performance across the reported metrics.

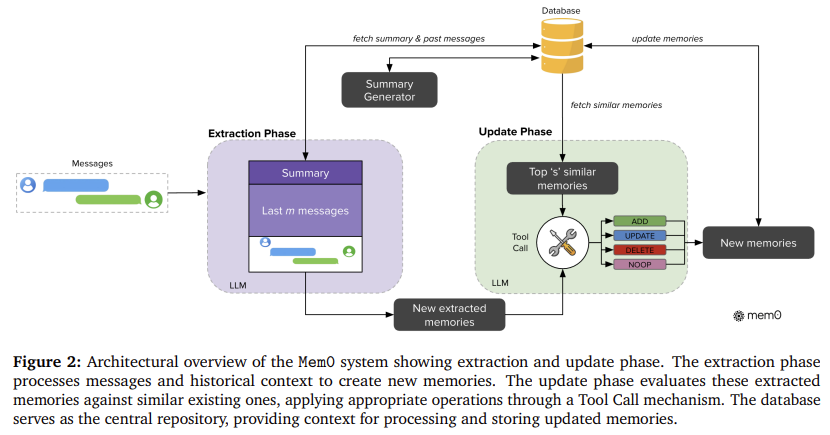

The core of the Mem0 system involves two operational phases. In the first phase, the system processes a pair of messages, typically a user’s question and the assistant’s response, together with context from the wider conversation. A global conversation summary combined with the last 10 messages serves as the input for a language model that extracts salient facts. In the second phase, each extracted fact is compared with similar existing memories in a vector database. The top 10 most similar memories are retrieved, and a decision mechanism, referred to as a ‘tool call’, determines whether the fact should be added as a new memory, used to update or delete an existing one, or ignored. These decisions are made by the LLM itself rather than by a separate classifier, which streamlines memory management and avoids redundant entries.
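The two phases can be sketched roughly as follows. This is not Mem0's actual code or API: `MemoryStore`, `toy_extract`, `toy_decide`, and `process_turn` are hypothetical names, the operation labels `ADD`/`UPDATE`/`DELETE`/`NOOP` are assumed for illustration, word overlap stands in for vector search, and the two toy functions stand in for the LLM calls that perform extraction and the tool-call decision.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemoryStore:
    memories: dict[int, str] = field(default_factory=dict)
    next_id: int = 0

    def similar(self, fact: str, k: int = 10) -> list[tuple[int, str]]:
        """Top-k memories by word overlap (assumption: stand-in for vector search)."""
        words = set(fact.lower().split())
        ranked = sorted(
            self.memories.items(),
            key=lambda item: len(words & set(item[1].lower().split())),
            reverse=True,
        )
        return ranked[:k]

    def apply(self, op: str, fact: str, target: Optional[int] = None) -> None:
        """Apply one memory operation; "NOOP" leaves the store unchanged."""
        if op == "ADD":
            self.memories[self.next_id] = fact
            self.next_id += 1
        elif op == "UPDATE" and target is not None:
            self.memories[target] = fact
        elif op == "DELETE" and target is not None:
            del self.memories[target]


def toy_extract(summary: str, recent: list[str], user_msg: str, assistant_msg: str) -> list[str]:
    """Phase 1 stand-in: keep first-person sentences; a real system prompts an LLM
    with the summary, recent messages, and the new message pair."""
    sentences = re.split(r"(?<=[.!?])\s+", user_msg.strip())
    return [s for s in sentences if s.startswith("I ")]


def toy_decide(fact: str, candidates: list[tuple[int, str]]) -> tuple[str, Optional[int]]:
    """Phase 2 stand-in for the LLM tool call. This toy rule never emits DELETE."""
    for mem_id, text in candidates:
        if text == fact:
            return "NOOP", None          # already known
        if text.split()[:2] == fact.split()[:2]:
            return "UPDATE", mem_id      # same subject and verb: newer fact wins
    return "ADD", None


def process_turn(store, summary, recent, user_msg, assistant_msg,
                 extract=toy_extract, decide=toy_decide) -> None:
    context = recent[-10:]               # last 10 messages, as described above
    for fact in extract(summary, context, user_msg, assistant_msg):
        candidates = store.similar(fact, k=10)
        op, target = decide(fact, candidates)
        store.apply(op, fact, target)


store = MemoryStore()
process_turn(store, "", [], "I live in Paris. I like tea.", "Noted!")
process_turn(store, "", [], "I live in Berlin.", "Updated.")
process_turn(store, "", [], "I like tea.", "Yes, you mentioned that.")
print(store.memories)
```

In this run the second turn overwrites the stale location instead of storing a conflicting duplicate, and the third turn is ignored because the fact is already present. That consolidation step is what distinguishes this design from plain chunk retrieval.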

The advanced variant, Mem0g, takes the memory representation a step further. It translates conversation content into a structured graph format, where entities, such as people, cities, or preferences, become nodes, and relationships, such as “lives in” or “prefers,” become edges. Each entity is labeled, embedded, and timestamped, while the relationships form triplets that capture the semantic structure of the dialogue. This format supports more complex reasoning across interconnected facts, allowing the model to trace relational paths across sessions. The conversion process uses LLMs to identify entities, classify them, and build the graph incrementally. For example, if a user discusses travel plans, the system creates nodes for cities, dates, and companions, thereby building a detailed and navigable structure of the conversation.
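A minimal sketch of such a graph memory is shown below, assuming a simple in-memory adjacency list. The `Entity` and `GraphMemory` classes and the relation names are hypothetical, the per-entity embedding mentioned above is omitted for brevity, and in a real system the entities and triplets would be produced by an LLM rather than added by hand.

```python
from collections import deque
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Entity:
    name: str
    label: str  # entity type, e.g. "person" or "city"
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class GraphMemory:
    def __init__(self) -> None:
        self.entities: dict[str, Entity] = {}
        # Directed edges: source name -> list of (relation, target name)
        self.edges: dict[str, list[tuple[str, str]]] = {}

    def add_entity(self, name: str, label: str) -> Entity:
        """Create the node if it is new; otherwise return the existing one."""
        if name not in self.entities:
            self.entities[name] = Entity(name, label)
        return self.entities[name]

    def add_triplet(self, source: str, relation: str, target: str) -> None:
        """Store a (source, relation, target) triplet between known entities."""
        if source not in self.entities or target not in self.entities:
            raise KeyError("both entities must be added before linking them")
        self.edges.setdefault(source, []).append((relation, target))

    def path(self, start: str, goal: str):
        """Breadth-first search; returns the triplets linking start to goal, or None."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            node, trail = queue.popleft()
            if node == goal:
                return trail
            for relation, nxt in self.edges.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, trail + [(node, relation, nxt)]))
        return None


graph = GraphMemory()
graph.add_entity("Alice", "person")
graph.add_entity("Lisbon", "city")
graph.add_entity("Portugal", "country")
graph.add_triplet("Alice", "lives_in", "Lisbon")        # possibly from one session
graph.add_triplet("Lisbon", "located_in", "Portugal")   # possibly from another
hops = graph.path("Alice", "Portugal")
print(hops)
```

The two-hop path illustrates the kind of relational tracing described above: neither stored fact alone answers "which country does Alice live in?", but following the edges does.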

The performance metrics reported by the research team underscore the strength of both systems. Mem0 showed a 26% improvement over OpenAI’s memory system when evaluated using the “LLM-as-a-Judge” metric. Mem0g, with its graph-enhanced design, achieved an additional 2% gain, pushing the total improvement to 28%. In terms of efficiency, Mem0 demonstrated 91% lower p95 latency than full-context methods and more than 90% savings in token cost. This balance between performance and practicality matters for production use cases, where response times and computational expenses are critical. Both systems also handled a wide range of question types, from single-hop factual lookups to multi-hop and open-domain queries, and the team reports that they outperformed the other approaches in accuracy across categories.
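For readers less familiar with these metrics, the sketch below shows how a p95 latency and a percentage reduction are typically computed, assuming the reported percentages are relative changes against a baseline. The latency samples are invented for illustration and are not the paper's measurements; `p95` and `relative_change` are hypothetical helper names.

```python
import math


def p95(samples: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def relative_change(new: float, baseline: float) -> float:
    """Signed change of `new` relative to `baseline` (e.g. -0.5 means 50% lower)."""
    return (new - baseline) / baseline


# Hypothetical per-query latencies in seconds (NOT figures from the paper).
full_context = [10.0] * 18 + [20.0, 25.0]
memory_based = [1.0] * 18 + [1.8, 2.5]

reduction = -relative_change(p95(memory_based), p95(full_context))
print(f"p95 full-context: {p95(full_context):.1f}s")
print(f"p95 memory-based: {p95(memory_based):.1f}s")
print(f"p95 latency reduction: {reduction:.0%}")
```

A tail metric such as p95 is useful here because full-context prompting tends to be slowest on exactly the long conversations where memory matters most, and an average would hide those cases.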

Key takeaways from the research on Mem0 include:

- Mem0 uses a two-step process to extract and manage salient conversation facts, combining recent messages and global summaries to form a contextual prompt.

- Mem0g builds memory as a directed graph of entities and relationships, offering superior reasoning over complex information chains.

- Mem0 surpassed OpenAI’s memory system with a 26% improvement on LLM-as-a-Judge, while Mem0g added an extra 2% gain, achieving 28% overall.

- Mem0 achieved a 91% reduction in p95 latency and saved over 90% in token usage compared to full-context approaches.

- These architectures maintain fast, cost-efficient performance even when handling multi-session dialogues, making them suitable for deployment in production settings.

- The system is ideal for AI assistants in tutoring, healthcare, and enterprise settings where continuity of memory is essential.


The post Mem0: A Scalable Memory Architecture Enabling Persistent, Structured Recall for Long-Term AI Conversations Across Sessions appeared first on MarkTechPost.
