Despite the remarkable progress in large language models (LLMs), critical challenges remain. Many models exhibit limitations in nuanced reasoning, multilingual proficiency, and computational efficiency. Often, models are either highly capable in complex tasks but slow and resource-intensive, or fast but prone to superficial outputs. Furthermore, scalability across diverse languages and long-context tasks continues to be a bottleneck, particularly for applications requiring flexible reasoning styles or long-horizon memory. These issues limit the practical deployment of LLMs in dynamic real-world environments.

Qwen3 Just Released: A Targeted Response to Existing Gaps

Qwen3, the latest release in the Qwen family of models developed by Alibaba Group, aims to systematically address these limitations. Qwen3 introduces a new generation of models specifically optimized for hybrid reasoning, multilingual understanding, and efficient scaling across parameter sizes.

The Qwen3 series expands upon the foundation laid by earlier Qwen models, offering a broader portfolio of dense and Mixture of Experts (MoE) architectures. Designed for both research and production use cases, Qwen3 models target applications that require adaptable problem-solving across natural language, coding, mathematics, and broader multimodal domains.

Technical Innovations and Architectural Enhancements

Qwen3 distinguishes itself with several key technical innovations:

- Hybrid Reasoning Capability: A core innovation is the model's ability to switch between "thinking" and "non-thinking" modes. In "thinking" mode, Qwen3 works through a problem step by step, which matters for tasks like mathematical proofs, complex coding, or scientific analysis. In "non-thinking" mode, it answers simpler queries directly, reducing latency where extended reasoning is not needed.

- Extended Multilingual Coverage: Qwen3 significantly broadens its multilingual capabilities, supporting over 100 languages and dialects and improving accessibility and accuracy across diverse linguistic contexts.

- Flexible Model Sizes and Architectures: The Qwen3 lineup includes models ranging from 0.6 billion parameters (dense) to 235 billion parameters (MoE). The flagship model, Qwen3-235B-A22B, activates only 22 billion parameters per token, enabling high performance while keeping computational costs manageable.

- Long Context Support: Certain Qwen3 models support context windows of up to 128,000 tokens, improving their ability to process lengthy documents, codebases, and multi-turn conversations.

- Advanced Training Dataset: Qwen3 leverages a refreshed, diversified corpus with improved data quality control, aiming to minimize hallucinations and enhance generalization across domains.
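To make the two reasoning modes more concrete, here is a minimal Python sketch of how a client might separate a reasoning trace from the final answer. It rests on an assumption the article does not state: that thinking-mode output wraps the reasoning in `<think>...</think>` tags ahead of the answer. The function name `split_thinking`, the `ParsedReply` type, and the sample strings are hypothetical and only serve the illustration.

```python
import re
from typing import NamedTuple


class ParsedReply(NamedTuple):
    thinking: str  # reasoning trace; empty when the model answered directly
    answer: str    # text intended for the end user


# Assumption: the reasoning trace is wrapped in <think>...</think> tags.
_THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thinking(raw: str) -> ParsedReply:
    """Separate an optional <think> block from the final answer."""
    match = _THINK_BLOCK.search(raw)
    if match is None:
        # Non-thinking mode: the whole reply is the answer.
        return ParsedReply(thinking="", answer=raw.strip())
    thinking = match.group(1).strip()
    # Everything outside the first <think> block is treated as the answer.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return ParsedReply(thinking=thinking, answer=answer)


if __name__ == "__main__":
    # Hypothetical sample replies, not real model output.
    thinking_reply = "<think>17 * 3 = 51, then 51 + 4 = 55.</think>\nThe result is 55."
    direct_reply = "Paris."
    print(split_thinking(thinking_reply))
    print(split_thinking(direct_reply))
```

Keeping the trace separate lets an application log or hide the reasoning while showing only the answer, which is one plausible way to use both modes behind a single interface.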

Additionally, the Qwen3 models are released as open weights under the Apache 2.0 license, enabling the research and open-source community to experiment and build upon them.

Empirical Results and Benchmark Insights

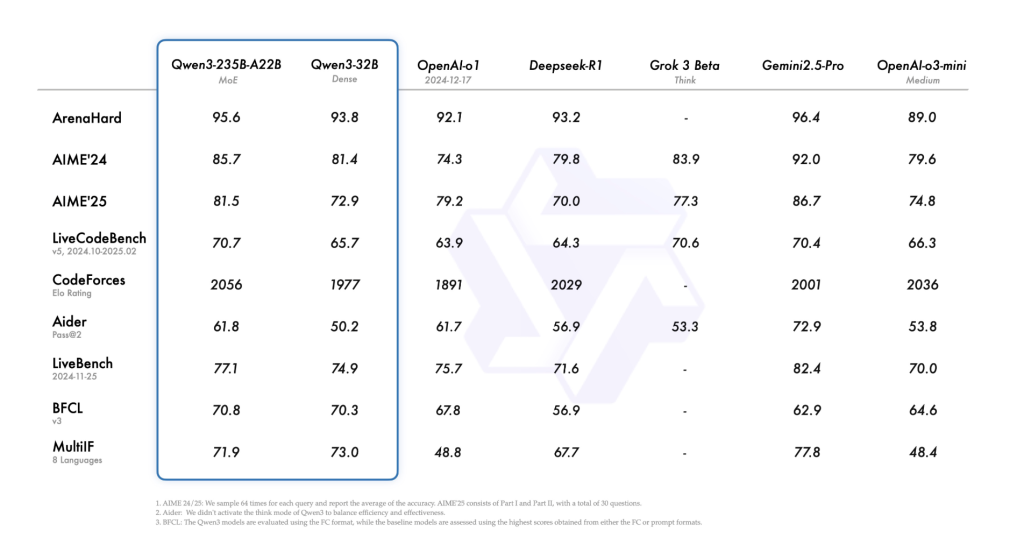

Benchmarking results illustrate that Qwen3 models perform competitively against leading contemporaries:

- The Qwen3-235B-A22B model achieves strong results across coding (HumanEval, MBPP), mathematical reasoning (GSM8K, MATH), and general knowledge benchmarks, rivaling DeepSeek-R1 and Gemini 2.5 Pro series models.

- The dense Qwen3 models demonstrate solid instruction-following and chat capabilities, showing significant improvements over the earlier Qwen1.5 and Qwen2 series.

- Notably, Qwen3-30B-A3B, a smaller MoE variant with 3 billion active parameters, outperforms QwQ-32B, a dense model with roughly ten times as many active parameters, on multiple standard benchmarks, demonstrating improved efficiency without a trade-off in accuracy.
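The efficiency argument for the MoE variants comes down to simple arithmetic on the figures quoted in this article: only a fraction of the total parameters runs for each token. The sketch below computes that fraction. The helper name `active_fraction` is hypothetical, and the parameter counts are the rounded values implied by the model names (235B/22B and 30B/3B), not exact figures.

```python
def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Share of parameters used per token; both counts are in billions."""
    if total_params_b <= 0 or not 0 < active_params_b <= total_params_b:
        raise ValueError("expected 0 < active <= total")
    return active_params_b / total_params_b


# Rounded counts taken from the model names in this article.
MODELS = {
    "Qwen3-235B-A22B": (235.0, 22.0),
    "Qwen3-30B-A3B": (30.0, 3.0),
}

if __name__ == "__main__":
    for name, (total, active) in MODELS.items():
        share = active_fraction(total, active)
        print(f"{name}: {share:.1%} of parameters active per token")
```

This ratio speaks to per-token compute only. All experts still have to be stored, so memory requirements follow the total parameter count rather than the active one.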

Early evaluations also indicate that Qwen3 models exhibit lower hallucination rates and more consistent multi-turn dialogue performance compared to previous Qwen generations.

Conclusion

Qwen3 represents a thoughtful evolution in large language model development. By integrating hybrid reasoning, scalable architecture, multilingual robustness, and efficient computation strategies, Qwen3 addresses many of the core challenges that continue to affect LLM deployment today. Its design emphasizes adaptability, making it suitable for academic research, enterprise solutions, and future multimodal applications.

Rather than offering incremental improvements, Qwen3 redefines several important dimensions in LLM design, setting a new reference point for balancing performance, efficiency, and flexibility in increasingly complex AI systems.


The post Alibaba Qwen Team Just Released Qwen3: The Latest Generation of Large Language Models in Qwen Series, Offering a Comprehensive Suite of Dense and Mixture-of-Experts (MoE) Models appeared first on MarkTechPost.
