The future of AI-powered search

The role of the modern database is evolving. AI-powered applications require more than just fast, scalable, and durable data management: they need highly accurate data retrieval and intelligent ranking, which are enabled by the ability to extract meaning from large volumes of unstructured inputs like text, images, and video. Retrieval-augmented generation (RAG) is now the default for LLM-powered applications, making accuracy in AI-driven search and retrieval a critical priority for developers. Meanwhile, customers in industries like healthcare, legal, and finance need highly reliable answers to power the applications their users rely on.

MongoDB Atlas Search already combines keyword and vector search through its hybrid capabilities. However, to truly meet developers’ needs and expectations, we are expanding our focus to integrating best-in-class embedding and reranking models into Atlas to ensure optimal performance and superior outcomes. These models enable search systems to understand meaning beyond exact words in text, and to recognize semantic similarities across images, video, and audio. Embedding models and rerankers empower customer support teams to quickly match queries with pertinent documents, assist legal professionals in surfacing key clauses within long contracts, and optimize RAG pipelines by retrieving contextually significant information that addresses users’ queries.

MongoDB is actively building this future. In February, we announced the acquisition of Voyage AI, a pioneer in state-of-the-art embedding and reranking models. With Voyage’s leading models and Atlas Search, developers will get a unified, production-ready stack for semantic retrieval.

Why embedding and reranking matter

Embedding and reranking models are core components of modern information retrieval, providing the link between natural language and accurate results:

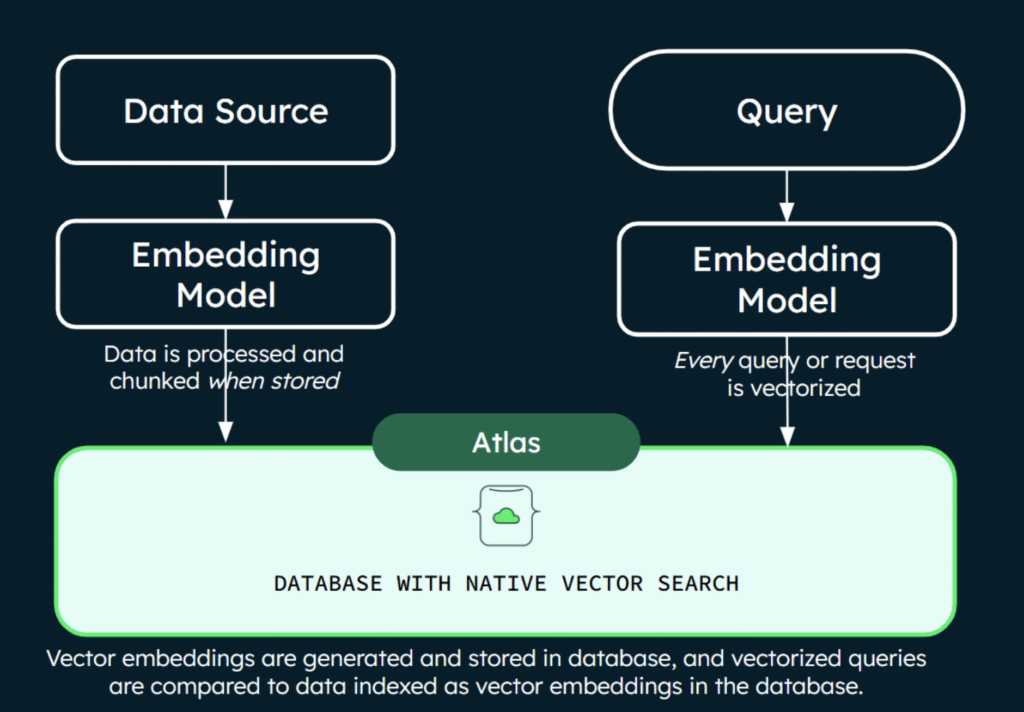

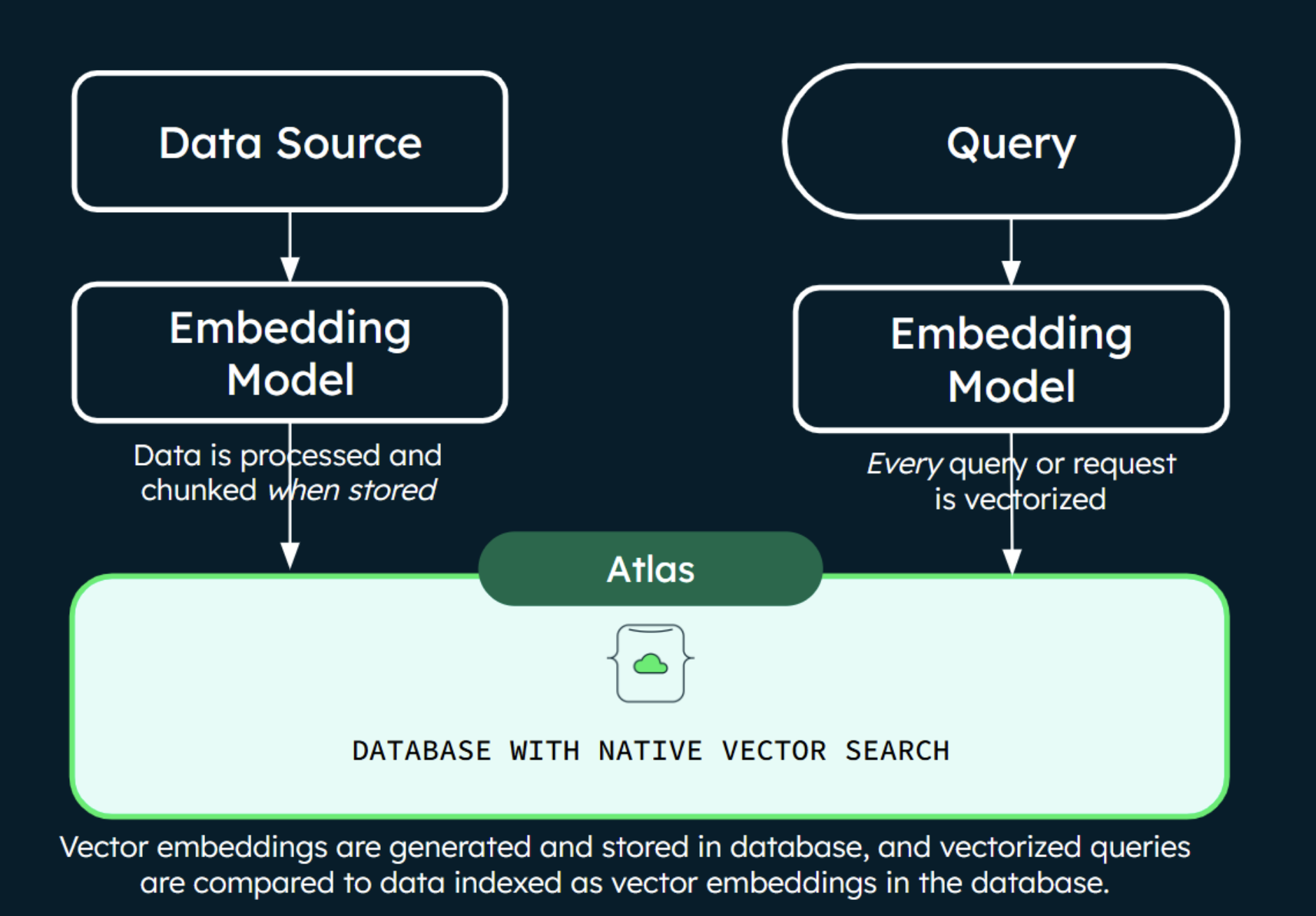

Embedding models transform data into vector representations that capture meaning and context, enabling searches based on semantic similarity rather than just keyword matches.

Reranking models improve search accuracy by scoring a smaller candidate set (e.g., the top 1,000 documents) against the query and reordering it by relevance, ensuring the most meaningful results appear first.

A typical system uses an embedding model to project documents into a vector space that encodes semantics. A nearest neighbor search provides a list of documents close to a given query. These results are processed with a reranking model that enables deeper, clause-by-clause comparison between the queries and the nearest neighbors.

This combination can greatly improve retrieval accuracy. For example, the system processing a user query for “holiday cookie recipes without tree nuts” may first retrieve a set of holiday recipes with the nearest neighbor search. In reranking, the query would be fully compared to each retrieved document to ensure each recipe does not contain any nuts.
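The two-stage flow above can be sketched in a few lines of Python. This is a toy illustration, not how Voyage's models work internally: the three-dimensional vectors are hand-written stand-ins for real embeddings, and `toy_rerank` is a hypothetical placeholder that checks each candidate's full text for excluded ingredients, where a real reranking model would score the query and document jointly.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def nearest_neighbors(query_vec, docs, k):
    """Stage 1: return the k documents whose vectors are closest to the query."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d["vector"]), reverse=True)
    return ranked[:k]

def toy_rerank(excluded_terms, candidates):
    """Stage 2 (hypothetical stand-in for a reranker): read each candidate's
    full text and move any that mention an excluded term to the bottom."""
    def score(doc):
        text = doc["text"].lower()
        return 0.0 if any(term in text for term in excluded_terms) else 1.0
    # sorted() is stable, so candidates with equal scores keep their stage-1 order.
    return sorted(candidates, key=score, reverse=True)

# Hand-made vectors: [holiday-ness, cookie-ness, savory-ness]. Assumed values.
DOCS = [
    {"id": "pecan-snowballs", "text": "Holiday snowball cookies with pecans", "vector": [0.9, 0.9, 0.0]},
    {"id": "gingerbread", "text": "Classic holiday gingerbread cookies", "vector": [0.8, 0.9, 0.1]},
    {"id": "sugar-cookies", "text": "Frosted holiday sugar cookies", "vector": [0.7, 0.9, 0.0]},
    {"id": "roast-turkey", "text": "Holiday roast turkey with herbs", "vector": [0.9, 0.0, 0.9]},
]

query_vector = [1.0, 1.0, 0.0]  # stands in for "holiday cookie recipes without tree nuts"
candidates = nearest_neighbors(query_vector, DOCS, k=3)
results = toy_rerank(["pecan", "walnut", "almond"], candidates)
print([doc["id"] for doc in results])
```

The nearest neighbor stage alone ranks the pecan recipe first because it is the closest match on "holiday" and "cookie"; only the second stage, which looks at the full text, moves it below the nut-free recipes.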

Voyage AI’s embedding and reranking models

Voyage offers a suite of embedding models that support both general-purpose use cases and domain-specific needs. General models like voyage-3, voyage-3-large, and voyage-3-lite handle diverse text inputs. For specialized applications, Voyage provides models tailored to domains like code (voyage-code-3), legal (voyage-law-2), and finance (voyage-finance-2), offering higher accuracy by capturing the context and semantics unique to each field. Voyage also offers a multimodal model (voyage-multimodal-3) capable of processing interleaved text and images. In addition, Voyage provides reranking models in standard and lite versions, each focused on optimizing relevance while keeping latency and computational load under control.

Voyage’s embedding models are designed to optimize the two distinct workloads required for each application, and our inference platform is purpose-built to support both scenarios efficiently:

Document embeddings are created for all documents in a database whenever they are added or updated, capturing the semantic meaning of the documents an application has access to. Typically generated in batch, they are optimized for scale and throughput.

Query embeddings enable the system to effectively interpret the user’s intent for relevant results. Produced for a user’s search query at the moment it’s made, they are optimized for low latency and high precision.
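A rough sketch of how an application might separate these two workloads follows. Everything here is illustrative: `embed_fn` is a hypothetical callable standing in for whatever embedding client the application uses, the `"document"` and `"query"` labels are an assumed way of telling the model which workload a text belongs to, and `fake_embed` exists only so the example runs without a network call.

```python
from typing import Callable, Iterator, List, Sequence

Vector = List[float]
# Hypothetical signature: (texts, input_type) -> one vector per text.
EmbedFn = Callable[[Sequence[str], str], List[Vector]]

def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]

def embed_documents(docs: Sequence[str], embed_fn: EmbedFn, batch_size: int = 128) -> List[Vector]:
    """Throughput-oriented path: send documents in batches at insert or update time."""
    vectors: List[Vector] = []
    for batch in batched(docs, batch_size):
        vectors.extend(embed_fn(batch, "document"))
    return vectors

def embed_query(query: str, embed_fn: EmbedFn) -> Vector:
    """Latency-oriented path: embed one query at the moment it is made."""
    return embed_fn([query], "query")[0]

def fake_embed(texts: Sequence[str], input_type: str) -> List[Vector]:
    """Placeholder model: [text length, 1.0 for queries / 0.0 for documents]."""
    flag = 1.0 if input_type == "query" else 0.0
    return [[float(len(text)), flag] for text in texts]

doc_vectors = embed_documents(["a", "bb", "ccc"], fake_embed, batch_size=2)
query_vec = embed_query("hi", fake_embed)
print(doc_vectors, query_vec)
```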

Voyage AI’s embedding and reranking models consistently outperform leading production-grade models across industry benchmarks. For example, the general-purpose voyage-3-large model shows up to 20% improved retrieval accuracy over widely adopted production models across 100 datasets spanning domains like law, finance, and code. Alongside that accuracy, it requires 200x less storage when using binary quantized embeddings. Domain-specific models like voyage-code-2 also outperform general-purpose models by up to 15% on code tasks.
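To make the storage claim concrete, here is a small sketch of binary quantization and the arithmetic behind it. Binary quantization keeps one bit per dimension instead of a 32-bit float, which by itself is a 32x reduction at equal dimensionality. This post does not state the baseline behind the 200x figure, so the dimensions below (a 3072-dimension float32 baseline against a 512-dimension binary vector) are assumptions chosen to show how a ratio of that size can arise.

```python
def binary_quantize(vector):
    """Keep only the sign of each dimension, packed 8 dimensions per byte."""
    packed = bytearray((len(vector) + 7) // 8)
    for i, value in enumerate(vector):
        if value > 0:
            packed[i // 8] |= 1 << (7 - i % 8)  # most significant bit first
    return bytes(packed)

def float32_bytes(dims):
    """Storage for one float32 vector: 4 bytes per dimension."""
    return dims * 4

def binary_bytes(dims):
    """Storage for one binary vector: 1 bit per dimension, rounded up to bytes."""
    return (dims + 7) // 8

def storage_ratio(baseline_dims, binary_dims):
    """How many times smaller a binary vector is than a float32 baseline."""
    return float32_bytes(baseline_dims) / binary_bytes(binary_dims)

# Same dimensionality: 4096 bytes vs 128 bytes.
print(storage_ratio(1024, 1024))
# Assumed comparison: 3072-dim float32 baseline vs 512-dim binary vector.
print(storage_ratio(3072, 512))
# Eight dimensions collapse into a single byte.
print(binary_quantize([0.5, -0.1, 0.3, -0.7, 0.0, 0.2, -0.9, 0.4]))
```

Under those assumed dimensions the ratio works out to 192x, which is in the neighborhood of the figure quoted above.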

On the reranking side, rerank-lite-1 and rerank-1 deliver gains of up to 14% in precision and recall across over 80 multilingual and vertical-specific datasets. These improvements translate directly into better relevance, faster inference, and more efficient RAG pipelines at scale.

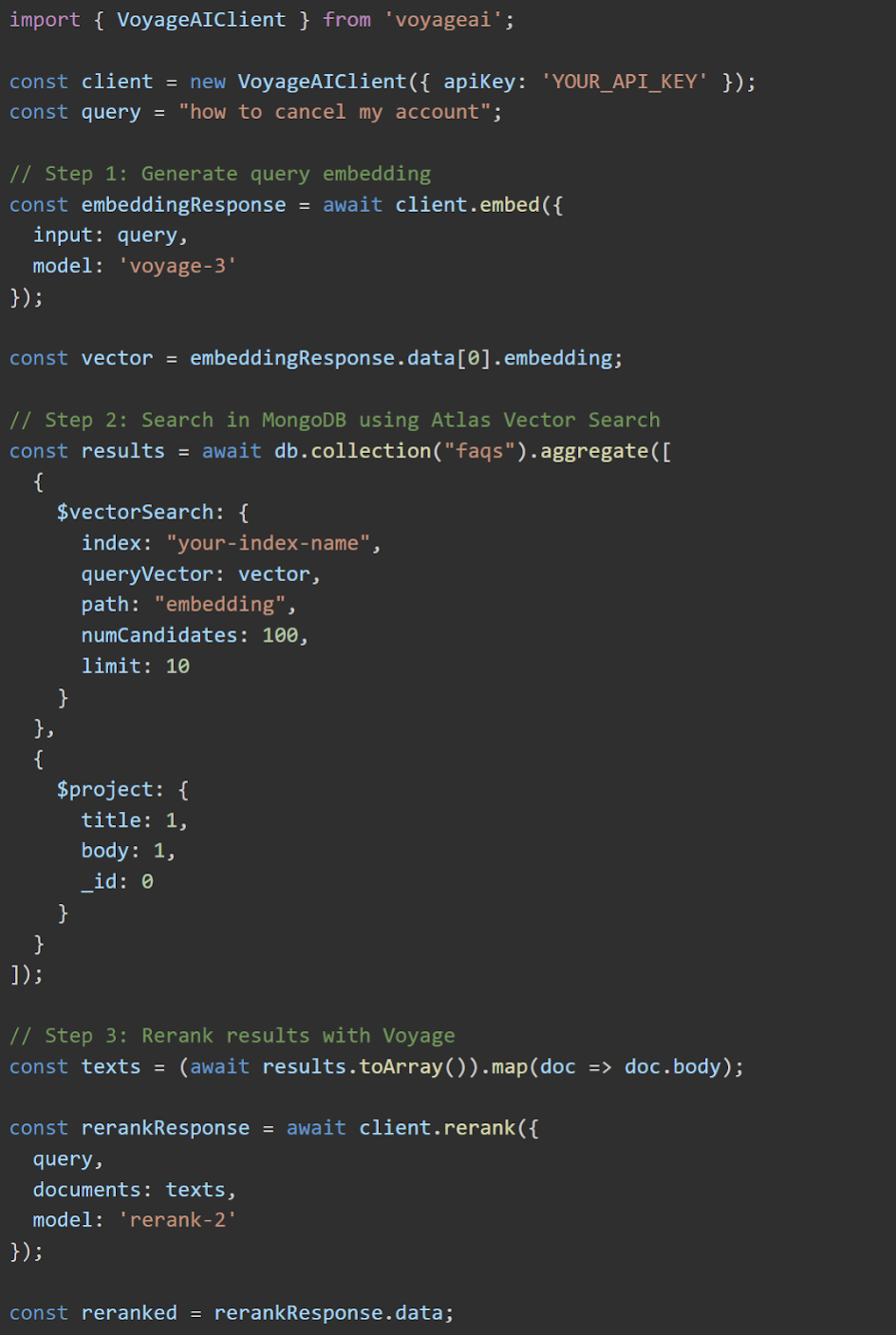

MongoDB Atlas Search + Voyage AI models today

MongoDB Atlas Vector Search enables powerful semantic retrieval with a wide range of embedding and reranking models. Developers can benefit from using Voyage models with Atlas Vector Search today, even before the deeper integration arrives.
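As a minimal sketch of what that looks like in practice, the function below builds an aggregation pipeline around the `$vectorSearch` stage. The index name, the `embedding` and `text` field names, and the default limits are assumptions for illustration; the query vector would come from embedding the user's query with the same model used for the stored documents.

```python
def build_vector_search_pipeline(query_vector, index_name="vector_index",
                                 path="embedding", limit=10, num_candidates=100):
    """Build an Atlas Vector Search aggregation pipeline as plain Python data.

    index_name and path are assumed names; they must match the vector index
    and the document field that holds the stored embeddings.
    """
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": path,
                "queryVector": list(query_vector),
                "numCandidates": num_candidates,  # candidates considered during the search
                "limit": limit,                   # documents returned
            }
        },
        {
            # Return the text and the similarity score for each match.
            "$project": {"_id": 1, "text": 1, "score": {"$meta": "vectorSearchScore"}}
        },
    ]

# The three-element vector is a placeholder for a real query embedding.
pipeline = build_vector_search_pipeline([0.12, -0.03, 0.88], limit=5)
print(pipeline)
```

With a driver such as PyMongo, the pipeline would be passed to `collection.aggregate(pipeline)`. The returned documents could then be sent to a reranking model in a separate call, which is the extra step the native integration described later is meant to remove.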

“AI-powered search”, not “AI Search”

Not all AI search experiences are created equal. As we begin integrating Voyage AI models directly into MongoDB Atlas, it’s worth sharing how we’re approaching this work.

The best solutions today blend traditional information retrieval with modern AI techniques, improving relevance while keeping systems explainable and tunable.

AI-powered search in MongoDB Atlas enhances traditional search techniques with modern AI models. Embeddings improve semantic understanding, and reranking models refine relevance. But unlike opaque AI stacks, this approach remains transparent, customizable, and efficient:

More control: Developers can tune search logic and ranking strategies based on their domain.

More flexibility: Models can be updated or swapped to improve on an industry-specific corpus of data.

More efficiency: MongoDB handles both storage and retrieval, optimizing cost and performance at scale.

With Voyage’s models integrated directly into Atlas workflows, developers gain powerful semantic capabilities without sacrificing clarity or maintainability.

Building the MongoDB + Voyage AI “better together” story

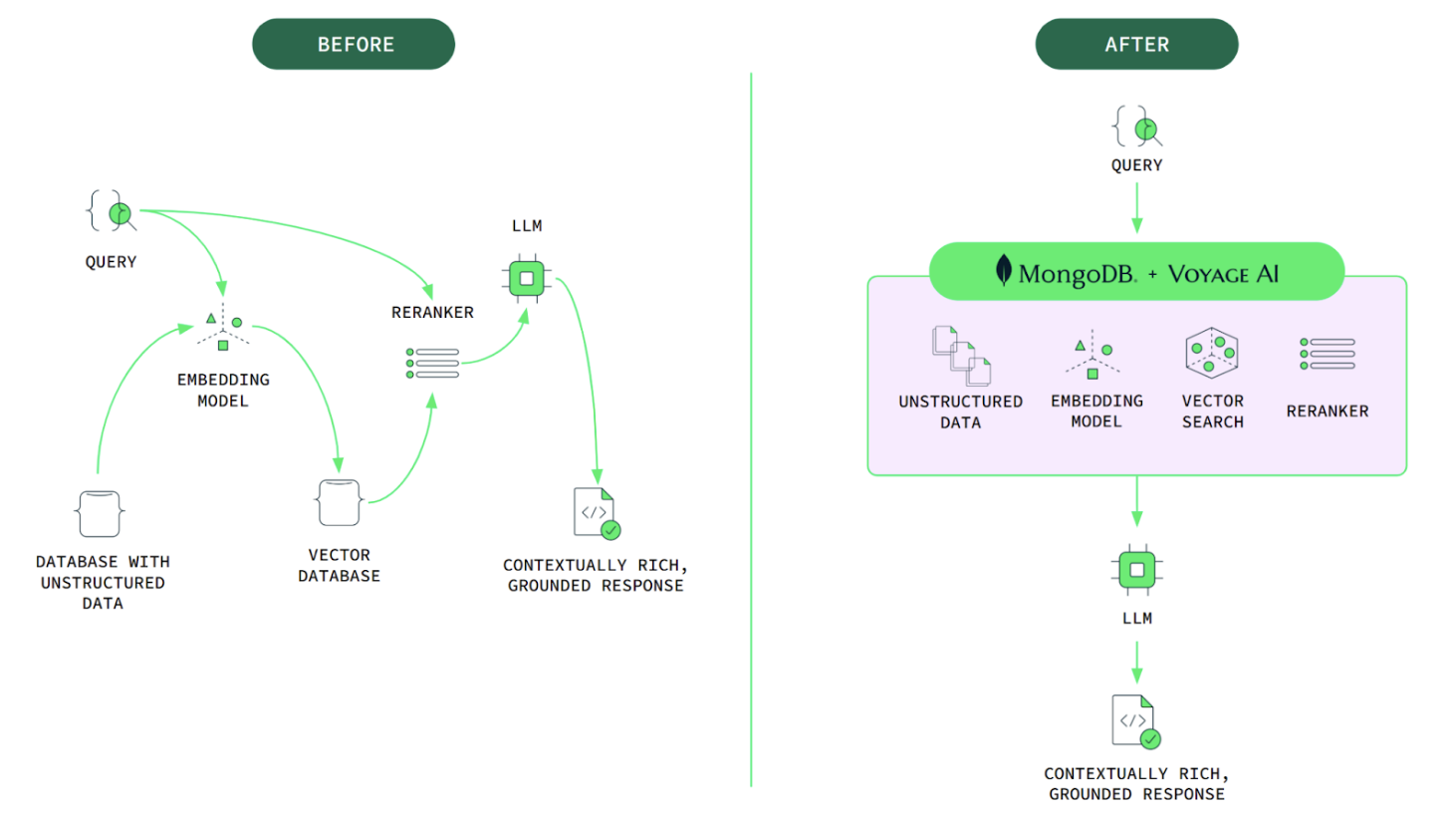

While MongoDB’s flexible query language unlocks powerful capabilities, Atlas Vector Search can require thoughtful setup, especially for advanced use cases. Users must select and fine-tune embedding models to fit specific use cases. Additionally, they must either rely on serverless model APIs or build and maintain infrastructure to host models themselves. Every insert of new data and every search query requires its own API call to the embedding model, adding operational overhead. As applications scale or when models need updating, managing these new data types in clusters introduces additional friction. Finally, integrating rerankers further complicates the workflow by requiring separate API calls and custom handling for reordering results.

By natively bringing Voyage AI’s industry-leading models to MongoDB Atlas, we will eliminate these burdens and introduce new capabilities that empower customers to deliver highly relevant query results with simplicity.

MongoDB is actively integrating Voyage’s embedding and reranking models into Atlas to deliver a truly native experience. These deep integrations will not only simplify the developer workflow but will also enhance accuracy, performance, and cost efficiency, all without the usual complexity of tuning disparate systems. Our ongoing commitment to partnering with innovative companies across AI and tech ensures that models from various providers remain supported within a collaborative ecosystem. Even so, adopting the native Voyage models lets developers focus on building their applications while achieving the highest quality of information retrieval.

As we work on these native integrations, we’re actively exploring advanced capabilities to further enhance the Atlas platform. Our investigations focus on:

Defining the optimal approach to multi-modal information retrieval, integrating diverse inputs like text and images for richer results.

Developing instruction-tuned retrieval, which allows concise prompts to precisely guide model interpretations, ensuring searches align closely with user intent. For example, enabling a search for “shoes” to prioritize sneakers or dress shoes, depending on user behavior and preferences.

Determining the best ways to integrate domain-specific models tailored to the unique needs and use cases of industries such as legal, finance, and healthcare to achieve superior retrieval accuracy.

Making it easy to update and change models without impacting availability.

Bringing additional AI capabilities into our expressive aggregation pipeline language.

Improving the ability to automatically assess model performance, with the potential to offer this capability to customers.

Building the future of AI-powered search

From RAG pipelines to AI-powered customer experiences, information retrieval is the backbone of real-world AI applications. Voyage’s models strengthen this foundation by surfacing better documents and improving final LLM outputs.

We are building this future around four core principles, with accuracy at the forefront:

Accurate: ensuring the precision of information retrieval is always our top priority, empowering applications to achieve production-grade quality and mass adoption.

Seamless: built into existing developer workflows.

Scalable: optimized for performance and cost.

Composable: open, flexible, and deeply integrated.

By embedding Voyage into Atlas, MongoDB offers the best of both worlds: industry-leading retrieval models inside a fully managed, developer-friendly platform. This unified platform allows models and data to work together seamlessly, empowering developers to build scalable, high-performance AI applications with precision at their core.

Join our MongoDB Community to learn about upcoming events, hear stories from MongoDB users, and connect with community members from around the world.
