- What are General-Purpose AI (GPAI) Models?

- Identifying the GPAI Model with Systemic Risk

- Regulatory Requirements for GPAI Models Posing Systemic Risk

- How Does Tx Ensure Your AI Models’ Compliance with AI Regulations?

AI is transforming industries at an unprecedented pace, with general-purpose AI (GPAI) models like Gemini and ChatGPT leading the change. However, this progress also brings significant challenges. By some industry estimates, around 80-85% of AI projects fail to meet their goals because of poor data quality, insufficient risk controls, and other issues. In response, regulatory bodies are stepping in to ensure responsible and ethical AI development and deployment. The EU Artificial Intelligence Act (AI Act) introduces obligations for GPAI models, especially those posing systemic risk.

This blog discusses the criteria for identifying GPAI models with systemic risk, the EU regulatory requirements for such models, and how AI models can comply with evolving regulations.

What are General-Purpose AI (GPAI) Models?

The EU AI Act defines GPAI models as AI models, typically trained on large amounts of data using self-supervision at scale, that display significant generality and can competently perform a wide range of distinct tasks. These models are not tied to a single function: they can be adapted and fine-tuned to support different applications, including content creation, coding, translation, data analytics, and decision-making. Generally, there are three types of GPAI models:

Foundational Models

Models trained on large-scale data and then fine-tuned for downstream tasks, for example GPT-4, Gemini, and Llama.

Multimodal Models

Models that handle and integrate multiple types of input and can generate text, images, audio, and more.

Instruction-Tuned Models

Fine-tuned to follow human instructions across general tasks.

Under the EU AI Act, however, the more important distinction is between two categories of GPAI models:

GPAI Models Without Systemic Risk

These models are broadly capable of diverse tasks such as code assistance, content creation, and translation, but they do not meet the criteria for systemic impact. They must still follow the transparency and usage requirements set out in the regulation but are not subject to the stricter obligations that apply to systemic-risk models.

GPAI Models with Systemic Risk

These powerful models can significantly affect the economy, business operations, and society. If they are left unchecked or unregulated, the consequences could be severe. Systemic risk may emerge from:

Model Scale and Capabilities

Trained on extremely large datasets, the models gain advanced autonomy and can generate compelling content, making them harder to predict or control. For instance, GPT-4 or Gemini can write software code, simulate human conversations, or generate legal documents.

Deployment Reach

Organizations are increasingly integrating GPAI models into their critical systems, which multiplies the models’ impact. For instance, a general-purpose model embedded in a cloud platform, a financial service tool, or a government chatbot could affect millions of users if it generates biased or harmful outputs.

Risk of Misuse

The more advanced the model, the higher the risk that it is used unethically or maliciously. Bad actors might use an AI model to write phishing emails, create deepfakes, exploit security gaps within an infrastructure, spread misinformation during a nationwide event, or manipulate stock markets.

Identifying the GPAI Model with Systemic Risk

GPAI models with systemic risk can affect people’s lives and business operations at scale. A single negative AI incident could disturb the whole technology value chain, disrupt business operations, and harm the end users who rely on it. That potential for high impact is what places a GPAI model in the systemic-risk category. The question, then, is: how is impact capability measured?

A GPAI model is presumed to have high-impact capabilities when the cumulative amount of computation used for its training exceeds 10²⁵ floating-point operations (FLOPs). This is a total count of operations over the whole training run, not FLOPS (floating-point operations per second), which measures a computer’s processing speed. More training compute generally means a more capable model, which in turn raises the risk. Training compute is also not the only indicator: the European Commission’s AI Office can apply additional criteria and benchmarks to identify and assess systemic risk.
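To make the threshold concrete, the sketch below estimates training compute with a common rule of thumb of roughly 6 floating-point operations per parameter per training token and compares the result with the 10²⁵ figure. The 6 × parameters × tokens approximation is a heuristic from the scaling-law literature, not a method prescribed by the AI Act, and the function names and example model sizes are hypothetical.

```python
# Presumption threshold: cumulative training compute greater than
# 10^25 floating-point operations (a total count, not a per-second rate).
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(num_parameters: float, num_tokens: float) -> float:
    """Rough estimate of total training compute.

    Assumption: about 6 floating-point operations per parameter per
    training token (forward plus backward pass). This is a rule of
    thumb, not a legal calculation method.
    """
    return 6.0 * num_parameters * num_tokens


def exceeds_compute_threshold(training_flops: float) -> bool:
    """Return True if the compute figure is strictly above the threshold."""
    return training_flops > SYSTEMIC_RISK_THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical model sizes, for illustration only.
    small = estimate_training_flops(7e9, 2e12)    # 7B parameters, 2T tokens
    large = estimate_training_flops(1e12, 1e13)   # 1T parameters, 10T tokens
    print(f"{small:.1e}", exceeds_compute_threshold(small))
    print(f"{large:.1e}", exceeds_compute_threshold(large))
```

Under these assumptions, the smaller hypothetical model lands far below the threshold, while the larger one exceeds it. A real assessment would rely on the provider's measured training compute rather than an estimate like this.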

The AI Office supervises and enforces the AI Act’s rules for GPAI model providers (Article 88 AI Act). It works alongside national authorities in EU countries to help them check whether AI systems meet the required standards. It can ask companies to provide information about their models, carry out evaluations, and, if needed, demand changes or even have a model removed from the market entirely. GPAI model providers that don’t comply face fines of up to 3% of their worldwide annual turnover or 15 million euros, whichever is higher.
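The fine ceiling is a "whichever is higher" rule, which the short sketch below illustrates. The function name is hypothetical, and the result is only the upper bound described above, not a prediction of any actual penalty.

```python
FIXED_CAP_EUR = 15_000_000   # 15 million euros
PERCENT_OF_TURNOVER = 3      # 3% of worldwide annual turnover


def max_gpai_fine_eur(worldwide_annual_turnover_eur: float) -> float:
    """Upper bound of the fine: 3% of turnover or EUR 15 million, whichever is higher."""
    if worldwide_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    percentage_cap = worldwide_annual_turnover_eur * PERCENT_OF_TURNOVER / 100
    return max(percentage_cap, FIXED_CAP_EUR)


if __name__ == "__main__":
    # Hypothetical turnover figures, for illustration only.
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        print(turnover, max_gpai_fine_eur(turnover))
```

The two caps meet at a turnover of 500 million euros: below that, the fixed 15 million euro figure is the higher one, and above it the 3% figure takes over.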

Regulatory Requirements for GPAI Models Posing Systemic Risk

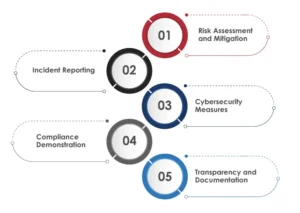

Providers of GPAI models identified as posing systemic risk must adhere to the following obligations:

Risk Assessment and Mitigation

Evaluate models using standardized protocols and tools that reflect the current state of the art, including adversarial testing, to identify and mitigate systemic risks.

Incident Reporting

Track, document, and report serious incidents and relevant information, including possible corrective measures, to the AI Office and, where necessary, to national competent authorities without undue delay.

Cybersecurity Measures

Ensure adequate cybersecurity for the model and its physical infrastructure to prevent unauthorized access and other security threats.

Compliance Demonstration

Until harmonised standards are published, providers can rely on codes of practice to demonstrate compliance with the AI Act’s requirements.

Transparency and Documentation

Maintain detailed records of the model’s development and testing processes. Provide necessary information to downstream providers integrating the model into their AI systems while safeguarding intellectual property rights.
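One way a team might keep track of these obligation areas internally is a simple evidence checklist. The sketch below is purely illustrative: the class, method names, and evidence references are hypothetical, and it is not a tool or format defined by the AI Act or the AI Office.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# The five obligation areas named in the list above.
OBLIGATIONS = (
    "Risk Assessment and Mitigation",
    "Incident Reporting",
    "Cybersecurity Measures",
    "Compliance Demonstration",
    "Transparency and Documentation",
)


@dataclass
class ComplianceChecklist:
    """Records which obligation areas have evidence attached (hypothetical helper)."""

    model_name: str
    evidence: Dict[str, List[str]] = field(default_factory=dict)

    def add_evidence(self, obligation: str, reference: str) -> None:
        """Attach a reference (e.g. a report name) to one obligation area."""
        if obligation not in OBLIGATIONS:
            raise ValueError(f"unknown obligation: {obligation}")
        self.evidence.setdefault(obligation, []).append(reference)

    def outstanding(self) -> List[str]:
        """Obligation areas with no evidence recorded yet, in list order."""
        return [o for o in OBLIGATIONS if not self.evidence.get(o)]


if __name__ == "__main__":
    checklist = ComplianceChecklist("example-model")
    checklist.add_evidence("Incident Reporting", "incident-runbook-v1")
    print(checklist.outstanding())
```

Recording evidence per obligation area makes gaps visible early, though what counts as sufficient evidence is a legal question that a checklist like this cannot answer.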

How Does Tx Ensure Your AI Models’ Compliance with AI Regulations?

As AI adoption increases, a critical gap emerges in ensuring that AI models are reliable, authentic, ethical, and responsible. At Tx, we understand these challenges and lead the way in AI quality engineering by diving deep into the functionality of your general-purpose AI models. Our years of experience in ensuring compliance with region-specific regulatory requirements enable our clients to build GPAI models that are robust, secure, trustworthy, and scalable.

General-purpose AI models, like GPT-4 and Gemini, are increasingly powerful and widely used, but they also pose risks when left unchecked. The EU AI Act sets strict rules for models with systemic impact—those using massive computing power or influencing critical sectors. These rules include mandatory risk assessments, incident reporting, and cybersecurity measures. The AI Office enforces these obligations. Tx supports organizations by helping ensure their AI models meet compliance standards through in-depth testing, governance, and responsible deployment practices. Contact our AI QE experts now to find out how Tx can assist with your AI projects.

The post How General-Purpose AI (GPAI) Models Are Regulated first appeared on TestingXperts.
