Rethinking the Problem of Collaboration in Language Models

Large language models (LLMs) have demonstrated remarkable capabilities in single-agent tasks such as question answering and structured reasoning. However, the ability to reason collaboratively, where multiple agents interact, disagree, and align on solutions, remains underdeveloped. This form of interaction is central to many human tasks, from academic collaboration to decision-making in professional contexts. Yet most LLM training pipelines and benchmarks focus on isolated, single-turn outputs, overlooking the social dimensions of problem-solving such as assertiveness, perspective-taking, and persuasion. A primary obstacle to advancing collaborative capabilities is the lack of scalable, high-quality, multi-turn dialogue datasets designed for reasoning tasks.

Meta AI Introduces Collaborative Reasoner: A Multi-Agent Evaluation and Training Framework

To address this limitation, Meta AI introduces Collaborative Reasoner (Coral), a framework specifically designed to evaluate and enhance collaborative reasoning skills in LLMs. Coral reformulates traditional reasoning problems into multi-agent, multi-turn tasks in which two agents must not only solve a problem but also reach consensus through natural conversation. These interactions emulate real-world social dynamics, requiring agents to challenge incorrect conclusions, negotiate conflicting viewpoints, and arrive at joint decisions.
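The consensus-seeking interaction described above can be sketched as a simple turn loop. This is a minimal illustration under stated assumptions, not Coral's implementation: the `Agent` callable interface, the exact-string agreement rule, the `max_turns` budget, and the stub agents `stubborn` and `persuadable` are all hypothetical stand-ins for LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Turn:
    speaker: str            # "A" or "B"
    message: str            # what the agent said
    answer: Optional[str]   # the answer the agent holds after this turn


# Hypothetical interface: (problem, history) -> (message, current answer).
# In practice each call would be an LLM generation plus answer extraction.
Agent = Callable[[str, List[Turn]], Tuple[str, Optional[str]]]


def collaborate(problem: str, agent_a: Agent, agent_b: Agent,
                max_turns: int = 10) -> Tuple[List[Turn], Optional[str]]:
    """Alternate turns between two agents until their latest answers agree."""
    history: List[Turn] = []
    agents = [("A", agent_a), ("B", agent_b)]
    latest: Dict[str, Optional[str]] = {"A": None, "B": None}
    for t in range(max_turns):
        name, agent = agents[t % 2]
        message, answer = agent(problem, history)
        history.append(Turn(name, message, answer))
        latest[name] = answer
        # Consensus: both agents have committed to the same answer.
        if latest["A"] is not None and latest["A"] == latest["B"]:
            return history, latest["A"]
    return history, None  # no agreement within the turn budget


# Hypothetical stub agents, used only to exercise the loop.
def stubborn(problem: str, history: List[Turn]) -> Tuple[str, Optional[str]]:
    return "I still think the answer is 42.", "42"


def persuadable(problem: str, history: List[Turn]) -> Tuple[str, Optional[str]]:
    # Switches to the partner's answer after hearing it twice.
    partner = [t.answer for t in history if t.speaker == "A"]
    if len(partner) >= 2:
        return f"You have convinced me: {partner[-1]}.", partner[-1]
    return "I think the answer is 41.", "41"


history, agreed = collaborate("What is 6 * 7?", stubborn, persuadable)
print(len(history), agreed)  # 4 42
```

Exact string matching is the simplest possible agreement check; a real system would need to normalize answers (for example, multiple-choice letters or equivalent math expressions) before comparing them.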

The framework spans five benchmarks across three domains: mathematics (MATH), STEM multiple-choice questions (MMLU-Pro, GPQA), and social cognition (ExploreToM, HiToM). These tasks serve as testbeds for evaluating whether models can apply their reasoning abilities in a cooperative, dialogue-driven context.

Methodology: Synthetic Collaboration and Infrastructure Support

Coral defines new evaluation metrics tailored to multi-agent settings. At the conversation level, agreement correctness measures whether the agents converge on the correct solution. At the turn level, social behaviors such as persuasiveness (the ability to influence another agent) and assertiveness (the ability to maintain one’s position) are explicitly quantified.
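The article does not spell out how these metrics are computed, so the following is only one plausible operationalization, offered as a sketch. It assumes each turn records the answer its speaker holds afterward. Agreement correctness scores 1 when the agents converge on the gold answer. For a given agent, assertiveness is taken as the fraction of its turns (while the partner disagrees) in which it keeps its answer, and persuasiveness as the fraction of partner turns (while they disagree) in which the partner adopts the agent's answer. The function names and these exact definitions are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Turn:
    speaker: str            # "A" or "B"
    answer: Optional[str]   # the answer the speaker holds after this turn


def agreement_correctness(agreed: Optional[str], gold: str) -> float:
    """Conversation level: 1.0 if the agents converged on the gold answer, else 0.0."""
    return 1.0 if agreed is not None and agreed == gold else 0.0


def social_rates(turns: List[Turn], agent: str) -> Dict[str, float]:
    """Turn level rates for `agent` (hypothetical definitions):
    assertiveness  = share of the agent's turns, while in disagreement, where it keeps its answer
    persuasiveness = share of the partner's turns, while in disagreement, where it adopts the agent's answer
    """
    latest: Dict[str, Optional[str]] = {}
    kept = challenged = adopted = opportunities = 0
    for turn in turns:
        me = turn.speaker
        other = "B" if me == "A" else "A"
        prev_me, prev_other = latest.get(me), latest.get(other)
        # Only count turns where both sides had stated answers and they disagreed.
        if prev_me is not None and prev_other is not None and prev_me != prev_other:
            if me == agent:
                challenged += 1
                kept += turn.answer == prev_me          # agent held its position
            else:
                opportunities += 1
                adopted += turn.answer == prev_other    # partner switched to agent's answer
        latest[me] = turn.answer
    return {
        "assertiveness": kept / challenged if challenged else float("nan"),
        "persuasiveness": adopted / opportunities if opportunities else float("nan"),
    }


turns = [Turn("A", "42"), Turn("B", "41"), Turn("A", "42"), Turn("B", "42")]
print(agreement_correctness("42", gold="42"))  # 1.0
print(social_rates(turns, "A"))  # {'assertiveness': 1.0, 'persuasiveness': 1.0}
print(social_rates(turns, "B"))  # {'assertiveness': 0.0, 'persuasiveness': 0.0}
```

Returning NaN when an agent never faces disagreement keeps "no opportunity" distinct from a true rate of zero.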

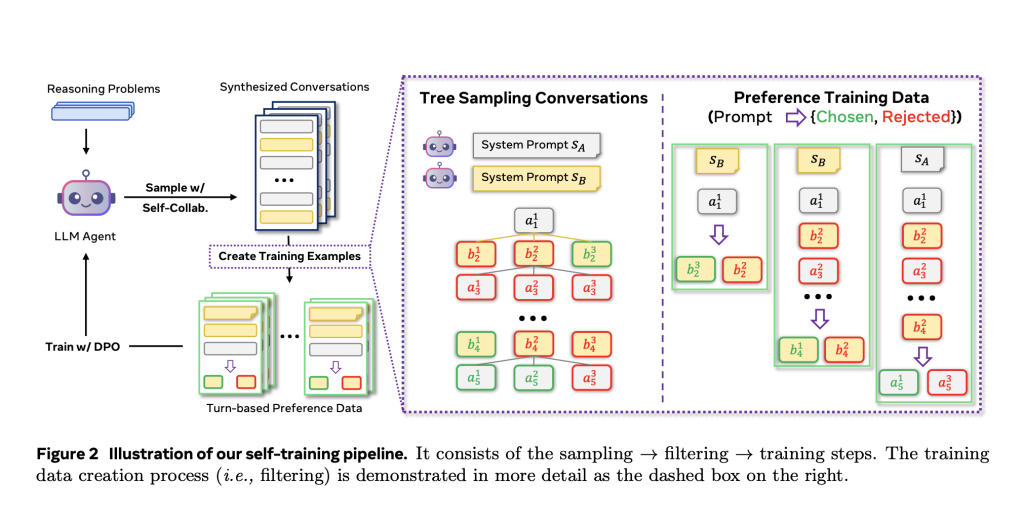

To address the data bottleneck, Meta AI proposes a self-collaboration approach, where a single LLM plays both roles in a conversation. These synthetic conversations are used to generate training data through a pipeline involving tree sampling, belief filtering, and preference fine-tuning using Direct Preference Optimization (DPO).
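The article names the pipeline stages without detailing them. The sketch below illustrates one plausible reading, under explicit assumptions: several candidate next turns are sampled from the same conversation prefix (the branches of the sampling tree), each candidate's belief is its extracted answer, belief filtering drops candidates with no extractable belief, and candidates whose belief matches the gold answer are paired as "chosen" against those that do not as "rejected". The `Candidate` type, `preference_pairs`, and `max_pairs` are hypothetical; `dpo_loss` implements the standard per-pair DPO objective, computed from sequence log-probabilities under the policy being trained and a frozen reference model.

```python
import math
from dataclasses import dataclass
from itertools import product
from typing import List, Optional, Tuple


@dataclass
class Candidate:
    text: str               # a sampled next turn (one branch of the tree)
    belief: Optional[str]   # answer extracted from that turn, if any


def preference_pairs(prefix: str, candidates: List[Candidate], gold: str,
                     max_pairs: int = 4) -> List[Tuple[str, str, str]]:
    """Hypothetical belief filtering: pair branches whose belief matches `gold`
    (chosen) with branches whose belief does not (rejected)."""
    with_belief = [c for c in candidates if c.belief is not None]
    chosen = [c for c in with_belief if c.belief == gold]
    rejected = [c for c in with_belief if c.belief != gold]
    pairs = [(prefix, c.text, r.text) for c, r in product(chosen, rejected)]
    return pairs[:max_pairs]


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one pair:
    -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])."""
    z = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # Numerically stable -log(sigmoid(z)), i.e. softplus(-z).
    return math.log1p(math.exp(-z)) if z >= 0 else -z + math.log1p(math.exp(z))


cands = [Candidate("a", "42"), Candidate("b", "41"),
         Candidate("c", None), Candidate("d", "42")]
print(preference_pairs("prefix", cands, gold="42"))
# [('prefix', 'a', 'b'), ('prefix', 'd', 'b')]
print(round(dpo_loss(0.0, 0.0, 0.0, 0.0), 4))  # 0.6931 (log 2: policy equals reference)
```

Pairing candidates that share a prefix means the chosen and rejected turns differ only in the sampled continuation, which is the kind of pairwise comparison DPO is built to learn from.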

To support data generation at scale, Meta introduces Matrix, a high-performance serving framework. Matrix supports a variety of backends, employs gRPC for efficient networking, and integrates with Slurm and Ray for large-scale orchestration. Empirical comparisons show that Matrix achieves up to 1.87x higher throughput than comparable systems like Hugging Face’s llm-swarm, making it suitable for high-volume conversational training.

Empirical Results: Performance Gains and Generalization

Evaluation across five benchmarks reveals that collaboration, when properly modeled and trained, yields measurable gains. Fine-tuned Coral models significantly outperform baseline single-agent chain-of-thought (CoT) approaches. For instance, Llama-3.1-8B-Instruct shows a 47.8% improvement on ExploreToM after Coral+DPO training. The Llama-3.1-70B model fine-tuned on Coral surpasses GPT-4o and o1 on collaborative versions of key tasks such as MMLU-Pro and ExploreToM.

Notably, models trained via Coral exhibit improved generalization. When tested on unseen tasks (e.g., GPQA and HiToM), Coral-trained models demonstrate consistent gains—indicating that learned collaborative behaviors can transfer across domains.

Despite the improvements, Coral-trained models still underperform CoT-trained baselines on complex mathematical problems (e.g., MATH), suggesting that collaboration alone may not suffice in domains requiring deep symbolic reasoning.

Conclusion: Toward Generalist Social Reasoning Agents

Collaborative Reasoner provides a structured and scalable pathway to evaluate and improve multi-agent reasoning in language models. Through synthetic self-dialogue and targeted social metrics, Meta AI presents a novel approach to cultivating LLMs capable of effective collaboration. The integration of Coral with the Matrix infrastructure further enables reproducible and large-scale experimentation.

As LLMs become increasingly embedded in human workflows, the ability to collaborate, rather than simply perform, is likely to be a defining capability. Coral is a step in that direction, offering a foundation for future research on social agents capable of navigating complex, multi-agent environments.

The post Meta AI Introduces Collaborative Reasoner (Coral): An AI Framework Specifically Designed to Evaluate and Enhance Collaborative Reasoning Skills in LLMs appeared first on MarkTechPost.
