Transformers have become foundational tools in machine learning, underpinning models that operate on sequential and structured data. One critical challenge is giving the model a sense of token position, since self-attention on its own has no mechanism for encoding order. Rotary Position Embedding (RoPE) became a popular solution, especially in language and vision tasks, because it encodes absolute positions as rotations in such a way that attention scores depend only on relative offsets. As these models grow in complexity and are applied across modalities, making RoPE more expressive and more flexible in the number of dimensions it can handle has become increasingly important.

A significant challenge arises when scaling RoPE from simple 1D sequences to multidimensional spatial data. The difficulty lies in preserving two essential properties: relativity, meaning the interaction between two encoded positions depends only on their offset, and reversibility, meaning the original position can be uniquely recovered from its encoding. Current designs often treat each spatial axis independently and so fail to capture interdependence between dimensions. The result is an incomplete positional understanding in multidimensional settings, which restricts the model's performance in complex spatial or multimodal environments.
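The two properties can be checked numerically in the familiar 1D case. The following is a minimal sketch, not code from the paper: the frequency `FREQ` and the helper names (`rot2`, `rope_1d`, `close`) are assumptions made for illustration.

```python
import math

def rot2(theta):
    """2x2 rotation matrix for an angle theta in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def close(a, b, tol=1e-9):
    """Entrywise comparison of two matrices."""
    return all(abs(x - y) < tol
               for ra, rb in zip(a, b) for x, y in zip(ra, rb))

FREQ = 0.1  # hypothetical rotation frequency, chosen only for this example

def rope_1d(pos):
    """1D RoPE on a single 2D plane: rotate by pos * FREQ."""
    return rot2(pos * FREQ)

# Relativity: R(m)^T R(n) depends only on the offset n - m.
m, n = 3, 11
relativity_holds = close(matmul(transpose(rope_1d(m)), rope_1d(n)),
                         rope_1d(n - m))

# Reversibility holds within one period: nearby positions map to
# different rotations, but the encoding repeats after 2*pi / FREQ.
period = 2 * math.pi / FREQ
distinct_within_period = not close(rope_1d(3), rope_1d(4))
repeats_after_period = close(rope_1d(0), rope_1d(period))
```

The last check is why reversibility is only meaningful within one cycle of the embedding: a single rotation plane cannot distinguish positions that differ by a full period.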

Efforts to extend RoPE have generally involved duplicating 1D operations along multiple axes or incorporating learnable rotation frequencies. A common example is standard 2D RoPE, which independently applies 1D rotations across each axis using block-diagonal matrix forms. While maintaining computational efficiency, these techniques cannot represent diagonal or mixed-directional relationships. Recently, learnable RoPE formulations, such as STRING, attempted to add expressiveness by directly training the rotation parameters. However, these lack a clear mathematical framework and do not guarantee that the fundamental constraints of relativity and reversibility are satisfied.
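As an illustration of the block-diagonal construction described above, here is a minimal sketch of axis-wise 2D RoPE on a 4-dimensional vector. The frequencies `FREQ_X` and `FREQ_Y`, the sample vectors, and all function names are hypothetical and chosen only for this example.

```python
import math

def block_diag_rotation(angles):
    """Block-diagonal matrix with one 2x2 rotation per angle."""
    d = 2 * len(angles)
    mat = [[0.0] * d for _ in range(d)]
    for i, theta in enumerate(angles):
        c, s = math.cos(theta), math.sin(theta)
        r = 2 * i
        mat[r][r], mat[r][r + 1] = c, -s
        mat[r + 1][r], mat[r + 1][r + 1] = s, c
    return mat

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

FREQ_X, FREQ_Y = 0.5, 0.3  # hypothetical per-axis frequencies

def rope_2d(x, y):
    """Axis-wise 2D RoPE: x rotates dims (0, 1), y rotates dims (2, 3)."""
    return block_diag_rotation([x * FREQ_X, y * FREQ_Y])

def score(q, q_pos, k, k_pos):
    """Attention logit between a rotated query and a rotated key."""
    return dot(matvec(rope_2d(*q_pos), q), matvec(rope_2d(*k_pos), k))

q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.25]

# Two query/key pairs with the same offset (3, 4) give the same score.
s1 = score(q, (1, 2), k, (4, 6))
s2 = score(q, (10, 20), k, (13, 24))

# The x-plane and y-plane never interact: the off-diagonal blocks are zero.
cross_block_entry = rope_2d(1, 2)[0][2]
```

The zero off-diagonal blocks are the limitation in concrete form: the x coordinate only ever touches the first pair of features and the y coordinate only the second, so no single rotation plane responds to a combination of both axes.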

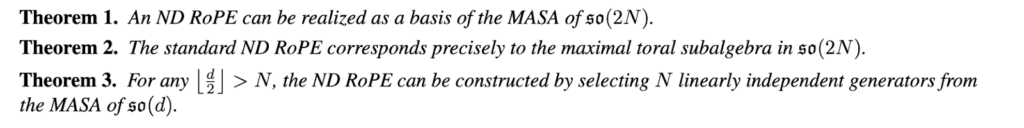

Researchers from the University of Manchester introduced a new method that systematically extends RoPE to N dimensions using Lie group and Lie algebra theory. Their approach defines valid RoPE constructions as those whose generators lie within a maximal abelian subalgebra (MASA) of the special orthogonal Lie algebra so(d), where d is the embedding dimension. This gives RoPE design a theoretical footing it previously lacked and ensures that the positional encodings meet the relativity and reversibility requirements. Rather than stacking 1D operations, their framework constructs a basis for position-dependent transformations that can flexibly adapt to higher dimensions while maintaining mathematical guarantees.
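A short derivation shows why an abelian (commuting) set of generators is the natural requirement. The notation below is an assumption made for illustration and may differ from the paper's: a position vector $\mathbf{x} \in \mathbb{R}^N$, generators $B_1, \dots, B_N$ in the special orthogonal Lie algebra, and $R_{\mathbf{x}}$ for the resulting rotation.

$$
R_{\mathbf{x}} = \exp\Big(\sum_{i=1}^{N} x_i B_i\Big), \qquad B_i^\top = -B_i, \qquad [B_i, B_j] = B_i B_j - B_j B_i = 0 .
$$

$$
R_{\mathbf{x}}^\top R_{\mathbf{y}}
= \exp\Big(-\sum_{i=1}^{N} x_i B_i\Big)\,\exp\Big(\sum_{i=1}^{N} y_i B_i\Big)
= \exp\Big(\sum_{i=1}^{N} (y_i - x_i)\, B_i\Big)
= R_{\mathbf{y}-\mathbf{x}} .
$$

The first equality uses skew-symmetry, since $\exp(A)^\top = \exp(A^\top) = \exp(-A)$. The second uses commutativity, since $\exp(A)\exp(B) = \exp(A+B)$ holds when $A$ and $B$ commute. Linear independence of the $B_i$ is needed for reversibility: if some nonzero $\mathbf{x}$ gave $\sum_i x_i B_i = 0$, that position would be encoded identically to the origin.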

The core methodology defines the RoPE transformation as a matrix exponential of skew-symmetric generators within the Lie algebra so(d). For the standard 1D and 2D cases, these generators produce the traditional rotation matrices. The novelty comes in generalizing to N dimensions, where the researchers select a linearly independent set of N generators from a MASA of so(d). This ensures that the resulting transformation matrix encodes all N spatial dimensions while satisfying both relativity and reversibility. The authors prove that the standard ND RoPE corresponds to the maximal toral subalgebra, a structure that splits the input space into mutually orthogonal two-dimensional rotation planes. To enable interactions between dimensions, the researchers incorporate a learnable orthogonal matrix, Q, which changes the basis without disrupting the mathematical properties of the RoPE construction. Multiple strategies for learning Q are proposed, including the Cayley transform, the matrix exponential, and Givens rotations, each with different trade-offs between interpretability and computational cost.
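The effect of the orthogonal change of basis can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses the identity exp(Q A Qᵀ) = Q exp(A) Qᵀ for orthogonal Q, so the rotation is built as Q · blockdiag · Qᵀ rather than through an explicit matrix exponential. Q is parameterized by Givens rotations, one of the options listed above, and the fixed angles stand in for learned parameters. The frequencies `FREQS` and all function names are hypothetical.

```python
import math

def identity(d):
    return [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def block_diag_rotation(angles):
    """Standard ND RoPE core: one 2x2 rotation block per positional axis."""
    d = 2 * len(angles)
    mat = [[0.0] * d for _ in range(d)]
    for i, theta in enumerate(angles):
        c, s = math.cos(theta), math.sin(theta)
        r = 2 * i
        mat[r][r], mat[r][r + 1] = c, -s
        mat[r + 1][r], mat[r + 1][r + 1] = s, c
    return mat

def givens(d, i, j, angle):
    """Rotation by `angle` in the (i, j) coordinate plane of R^d."""
    g = identity(d)
    c, s = math.cos(angle), math.sin(angle)
    g[i][i], g[j][j] = c, c
    g[i][j], g[j][i] = -s, s
    return g

def make_q(d, params):
    """Orthogonal matrix as a product of Givens rotations (i, j, angle)."""
    q = identity(d)
    for i, j, angle in params:
        q = matmul(q, givens(d, i, j, angle))
    return q

FREQS = [0.5, 0.3, 0.2]  # hypothetical frequencies: N = 3 axes, d = 6
# Fixed angles standing in for parameters that would be learned.
Q = make_q(6, [(0, 2, 0.4), (1, 4, -0.7), (3, 5, 1.1)])

def rope_nd(pos):
    """R(x) = Q * blockdiag(rotations) * Q^T for a position tuple `pos`."""
    core = block_diag_rotation([p * f for p, f in zip(pos, FREQS)])
    return matmul(matmul(Q, core), transpose(Q))

# Relativity survives the change of basis: R(a)^T R(b) == R(b - a).
a, b = (1, 2, 3), (4, 0, 5)
lhs = matmul(transpose(rope_nd(a)), rope_nd(b))
rhs = rope_nd(tuple(y - x for x, y in zip(a, b)))
max_err = max(abs(l - r)
              for row_l, row_r in zip(lhs, rhs)
              for l, r in zip(row_l, row_r))

# Unlike the block-diagonal case, entries outside the 2x2 blocks are nonzero.
mixing_entry = rope_nd(a)[0][2]
```

Because Q is orthogonal, the conjugated generators still commute and remain linearly independent, which is why the guarantees carry over while features that belonged to different axes become coupled.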

The contribution is theoretical: the authors prove that the constructed RoPE remains injective within each cycle (period) of the embedding. When the embedding dimension d equals 2N, twice the number of positional dimensions N, the standard basis assigns each positional axis its own two-dimensional rotation plane, with no overlap. For larger values of d, more flexible generators can be chosen to better accommodate multimodal data. The researchers showed that matrices like B₁ and B₂ within so(6) could represent orthogonal and independent rotations across six-dimensional space. Although no empirical results were reported for downstream task performance, the proofs establish that both key properties, relativity and reversibility, are preserved even when learned inter-dimensional interactions are introduced.

This research from the University of Manchester offers a mathematically principled answer to the limitations of current RoPE approaches. The work closes a significant gap in positional encoding by grounding its method in algebraic theory and offering a path to learning inter-dimensional relationships without sacrificing the foundational properties. The framework applies to traditional 1D and 2D inputs and scales to more complex N-dimensional data, making it a foundational step toward more expressive Transformer architectures.


The post Transformers Gain Robust Multidimensional Positional Understanding: University of Manchester Researchers Introduce a Unified Lie Algebra Framework for N-Dimensional Rotary Position Embedding (RoPE) appeared first on MarkTechPost.
