Artificial intelligence systems have made significant strides in simulating human-style reasoning, particularly in mathematics and logic. These models don’t just generate answers; they walk through a series of logical steps to reach conclusions, offering insight into how and why those answers are produced. This step-by-step reasoning, often called Chain-of-Thought (CoT), has become central to how machines handle complex problem-solving tasks.

A common problem researchers encounter with these models is inefficiency during inference. Reasoning models often continue processing even after reaching a correct conclusion. This overthinking results in the unnecessary generation of tokens, increasing computational cost. Whether these models have an internal sense of correctness remains unclear—do they realize when an intermediate answer is right? If they could identify this internally, the models could halt processing earlier, becoming more efficient without losing accuracy.

Many current approaches measure a model’s confidence through verbal prompts or by analyzing multiple outputs. These black-box strategies ask the model to report how sure it is of its answer, or estimate that from several sampled outputs. However, they are often imprecise and computationally expensive. White-box methods, by contrast, investigate a model’s internal hidden states to extract signals that may correlate with answer correctness. Prior work shows that a model’s internal states can indicate the validity of final answers, but applying this to intermediate steps in long reasoning chains is still an underexplored direction.

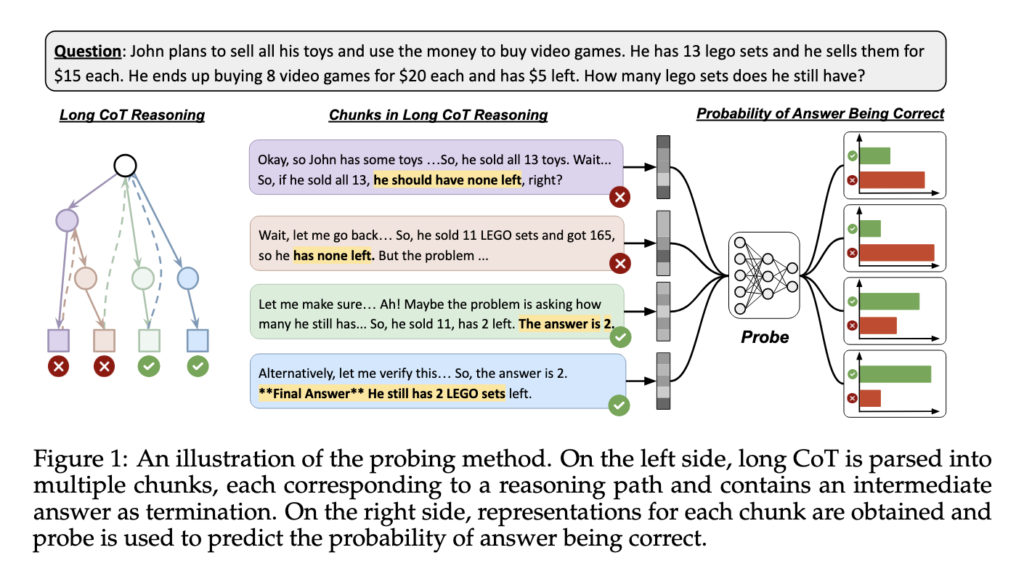

Researchers from New York University and NYU Shanghai tackled this gap by designing a lightweight probe, a simple two-layer neural network, that inspects a model’s hidden states at intermediate reasoning steps. The models used for experimentation included the DeepSeek-R1-Distill series and QwQ-32B, known for their step-by-step reasoning capabilities. These models were tested across various datasets involving mathematical and logical tasks. The researchers trained their probe to read the internal state associated with each chunk of reasoning and predict whether the current intermediate answer was correct.
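
To make the idea of a two-layer probe concrete, here is a minimal sketch in plain Python. It only illustrates the shape of the computation: a hidden-state vector goes in, and a probability that the intermediate answer is correct comes out. The ReLU activation, the sigmoid output, the layer sizes, and the names `make_probe` and `probe_confidence` are assumptions for illustration, not details confirmed by the article, and the weights here are random placeholders rather than trained values.

```python
import math
import random

def make_probe(input_dim, hidden_dim, seed=0):
    """Create an untrained two-layer probe with small random weights (placeholders)."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(input_dim)] for _ in range(hidden_dim)]
    b1 = [0.0] * hidden_dim
    w2 = [rng.gauss(0.0, 0.1) for _ in range(hidden_dim)]
    b2 = 0.0
    return w1, b1, w2, b2

def probe_confidence(hidden_state, probe):
    """Map one hidden-state vector to a probability that the answer is correct."""
    w1, b1, w2, b2 = probe
    # Layer 1: affine map followed by ReLU (the activation choice is an assumption).
    activations = [
        max(0.0, sum(w * x for w, x in zip(row, hidden_state)) + b)
        for row, b in zip(w1, b1)
    ]
    # Layer 2: affine map to a single logit, squashed to (0, 1) with a sigmoid.
    logit = sum(w * a for w, a in zip(w2, activations)) + b2
    return 1.0 / (1.0 + math.exp(-logit))
```

In practice the input would be the hidden state of a reasoning model, which has thousands of dimensions, and the weights would be learned from labeled chunks with a standard deep learning library.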

To construct their approach, the researchers first segmented each long CoT output into smaller chunks, using markers like “wait” or “verify” to identify breaks in reasoning. They used the hidden state of the last token in each chunk as its representation and paired it with a correctness label, which was assigned by another model. These pairs were then used to train the probe as a binary classifier. The probe’s hyperparameters, such as learning rate and hidden layer size, were tuned with grid search. For most models the search settled on a linear probe, suggesting that correctness information is often linearly encoded in the hidden states. The probe worked for fully formed answers and could also predict correctness before an answer was even completed, hinting at look-ahead capabilities.
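
The segmentation step described above can be sketched roughly as follows. This is one plausible reading, not the researchers’ actual code: it splits the text into sentences and starts a new chunk whenever a sentence contains a marker word. Only “wait” and “verify” come from the article; the sentence-splitting rule and the function name `split_into_chunks` are assumptions.

```python
import re

# "wait" and "verify" are the markers named in the article; the real list may be longer.
MARKERS = ("wait", "verify")

def split_into_chunks(cot_text, markers=MARKERS):
    """Group sentences into chunks, opening a new chunk at each marker sentence."""
    # Naive sentence split: break on whitespace that follows ., ! or ?
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", cot_text) if s.strip()]
    marker_re = re.compile(
        r"\b(?:" + "|".join(re.escape(m) for m in markers) + r")\b",
        re.IGNORECASE,
    )
    chunks = []
    for sentence in sentences:
        if chunks and not marker_re.search(sentence):
            # No marker: the sentence continues the current chunk.
            chunks[-1] += " " + sentence
        else:
            # First sentence, or a sentence with a marker: start a new chunk.
            chunks.append(sentence)
    return chunks
```

Each chunk would then be represented by the hidden state at its last token and paired with a correctness label to form one training example for the probe.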

The probes performed well on the reported metrics. They achieved ROC-AUC scores exceeding 0.9 on some datasets, such as AIME, when using models like R1-Distill-Qwen-32B. Expected Calibration Error (ECE) remained under 0.1, indicating that the probe’s confidence scores were well calibrated. For example, R1-Distill-Qwen-32B had an ECE of just 0.01 on GSM8K and 0.06 on MATH. In application, the probe was used to implement a confidence-based early exit strategy during inference: the reasoning process was stopped once the probe’s confidence in an answer exceeded a threshold. At a confidence threshold of 0.85, accuracy remained at 88.2% while the inference token count was reduced by 24%. At a threshold of 0.9, accuracy was 88.6%, with a 19% token reduction. Compared to static exit methods, this dynamic strategy achieved up to 5% higher accuracy using the same or fewer tokens.
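
The early-exit rule can be illustrated with a short sketch. It assumes the reasoning has already been split into chunks and that a probe has assigned each chunk a confidence score; in a real system this check would run while the model is still generating. The function names and the whitespace-based token count are illustrative assumptions, and only the 0.85 default threshold comes from the article.

```python
def early_exit(chunks, confidences, threshold=0.85):
    """Keep chunks up to the first one whose probe confidence exceeds the threshold.

    Returns (kept_chunks, exited_early).
    """
    for index, confidence in enumerate(confidences):
        if confidence > threshold:
            return chunks[: index + 1], True
    # The threshold was never crossed, so the full reasoning chain is kept.
    return list(chunks), False

def token_reduction(all_chunks, kept_chunks):
    """Fraction of tokens saved, using whitespace-separated words as a stand-in for tokens."""
    def count(parts):
        return sum(len(part.split()) for part in parts)
    total = count(all_chunks)
    return 0.0 if total == 0 else 1.0 - count(kept_chunks) / total
```

The trade-off reported in the article follows from this rule: a lower threshold stops sooner and saves more tokens, while a higher threshold waits for stronger evidence before stopping.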

This study offers an efficient, integrated way for reasoning models to self-verify during inference. It also highlights a gap: the models’ hidden states appear to encode whether an intermediate answer is right, yet the models don’t act on that information and keep reasoning. By probing these internal representations, the research points toward smarter, more efficient reasoning systems, and shows that tapping into what the model already “knows” can meaningfully improve both performance and resource use.


The post Reasoning Models Know When They’re Right: NYU Researchers Introduce a Hidden-State Probe That Enables Efficient Self-Verification and Reduces Token Usage by 24% appeared first on MarkTechPost.
