In today’s enterprise landscape, especially in insurance and customer support, voice and audio data are more than just recordings; they are valuable touchpoints that can transform operations and customer experiences. With AI audio processing, organizations can automate transcription with high accuracy, surface critical insights from conversations, and power natural, engaging voice interactions. Using these capabilities, businesses can boost efficiency, uphold compliance standards, and build deeper connections with customers while meeting the high expectations of these demanding industries.

Boson AI introduces Higgs Audio Understanding and Higgs Audio Generation, two robust solutions for building custom AI agents across a wide range of audio applications. Higgs Audio Understanding focuses on listening and contextual comprehension, while Higgs Audio Generation excels at expressive speech synthesis. Both are currently optimized for English, with support for additional languages on the way. Together, they let enterprises power real-world audio applications whose interactions closely resemble natural human conversation.

Higgs Audio Understanding: Listening Beyond Words

Higgs Audio Understanding is Boson AI’s advanced solution for audio comprehension. It goes beyond traditional speech-to-text systems by capturing context, speaker traits, emotions, and intent. The model tightly integrates audio processing with a large language model (LLM): it converts audio inputs into rich contextual embeddings that encode speech tone, background sounds, and speaker identity, then processes those embeddings alongside text tokens. This joint processing enables the nuanced interpretation that tasks such as meeting transcription, contact center analytics, and media archiving require.
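To make "processing audio embeddings alongside text tokens" concrete, here is a minimal sketch assuming a common multimodal-LLM design: an audio encoder emits one feature vector per frame, a learned linear adapter projects each vector into the LLM's embedding space, and the projected frames are spliced between text-token embeddings. The dimensions, random weights, and function names are hypothetical; Boson AI's actual encoder and adapter are not described in this article.

```python
import random

# Illustrative sizes only; not Higgs Audio's real dimensions.
D_AUDIO = 4   # width of each audio-encoder frame feature
D_MODEL = 6   # width of the LLM's token embeddings
VOCAB = 10    # toy text vocabulary size

rng = random.Random(0)

def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Hypothetical learned parameters: a text embedding table and a linear
# adapter mapping audio features into the LLM's embedding space.
text_embedding = rand_matrix(VOCAB, D_MODEL)
audio_projection = rand_matrix(D_AUDIO, D_MODEL)

def project_audio(frames):
    """Map each D_AUDIO-dim frame to a D_MODEL-dim vector (frame @ W)."""
    return [
        [sum(f[i] * audio_projection[i][j] for i in range(D_AUDIO)) for j in range(D_MODEL)]
        for f in frames
    ]

def build_input_sequence(prefix_ids, audio_frames, suffix_ids):
    """Splice projected audio frames between text-token embeddings so the
    LLM attends over one mixed audio-and-text sequence."""
    prefix = [text_embedding[t] for t in prefix_ids]
    audio = project_audio(audio_frames)
    suffix = [text_embedding[t] for t in suffix_ids]
    return prefix + audio + suffix

frames = rand_matrix(3, D_AUDIO)  # stand-in for 3 encoder frames
seq = build_input_sequence([1, 2], frames, [3, 4, 5])
print(len(seq), len(seq[0]))      # 8 6
```

Because every position ends up the same width, the LLM's attention layers can relate a spoken phrase to the surrounding instruction text without any special handling.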

A key strength is chain-of-thought audio reasoning: the model analyzes audio in a structured, step-by-step manner, which lets it solve complex tasks such as counting word occurrences, interpreting humor from tone, or applying external knowledge to audio contexts in real time. In Boson AI’s tests, Higgs Audio Understanding leads standard speech recognition benchmarks (e.g., Common Voice for English) and outperforms competitors such as Qwen-Audio, Gemini, and GPT-4o-audio on holistic audio reasoning evaluations, reaching a top average score of 60.3 on AirBench Foundation with its reasoning enhancements. This real-time, contextual comprehension can give enterprises much deeper insight into their audio data.
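Chain-of-thought reasoning is easiest to picture on the word-counting task mentioned above. The toy sketch below assumes the audio has already been turned into timestamped transcript segments (a hypothetical format) and simply makes each intermediate step explicit in a trace. It illustrates the shape of step-by-step reasoning, not how Higgs Audio Understanding reasons internally.

```python
import re

def count_word_with_trace(segments, target):
    """Count occurrences of `target` across (start, end, text) transcript
    segments, logging one reasoning step per segment plus a final answer."""
    target = target.lower()
    trace = []
    total = 0
    for start, end, text in segments:
        # Step 1: normalize the segment into lowercase word tokens.
        words = re.findall(r"[a-z']+", text.lower())
        # Step 2: count exact matches and update the running total.
        n = words.count(target)
        total += n
        trace.append(f"{start:.1f}-{end:.1f}s: {n} match(es), running total {total}")
    # Step 3: state the final answer.
    trace.append(f"answer: {total}")
    return total, trace

segments = [
    (0.0, 2.1, "Thanks for calling, how can I help?"),
    (2.1, 5.0, "I need help with a claim. Help me file it."),
]
total, trace = count_word_with_trace(segments, "help")
for step in trace:
    print(step)
```

Keeping the intermediate steps visible is what makes this style of answer checkable: a wrong total can be traced back to the segment where the count went astray.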

Higgs Audio Generation: Speaking with Human-Like Nuance

Higgs Audio Generation, Boson AI’s advanced speech synthesis model, enables AI to produce highly expressive, human-like speech essential for virtual assistants, automated services, and customer interactions. Unlike traditional text-to-speech (TTS) systems that often sound robotic, Higgs Audio Generation leverages an LLM at its core, enabling nuanced comprehension and expressive output closely aligned with textual context and intended emotions.

By incorporating deep contextual understanding into speech generation, Boson AI addresses common limitations of legacy TTS: monotone delivery, emotional flatness, mispronunciation of unfamiliar terms, and difficulty handling multi-speaker interactions.

The unique capabilities of Higgs Audio Generation include:

- Emotionally Nuanced Speech: It naturally adjusts tone and emotion based on textual context, creating more engaging and context-appropriate interactions.

- Multi-Speaker Dialogue Generation: It simultaneously generates distinct, realistic voices for multi-character conversations, as shown in Boson AI’s Magic Broom Shop demo. This makes it well suited to audiobooks, interactive training, and dynamic storytelling.

- Accurate Pronunciation and Accent Adaptation: Precisely pronounces uncommon names, foreign words, and technical jargon, adapting speech dynamically for global and diverse scenarios.

- Real-Time Generation with Contextual Reasoning: This technology produces coherent, real-time speech outputs responsive to conversational shifts, suitable for interactive applications like customer support chatbots or live voice assistants.
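Multi-speaker generation needs some way of telling the model who says what. Below is a minimal sketch, assuming a plain-text script in which every line starts with a bracketed speaker tag; the tag syntax, voice IDs, and helper names are hypothetical and are not Boson AI's input format. It produces the ordered per-turn (voice, text) pairs one would then hand to a multi-speaker synthesis step.

```python
import re
from dataclasses import dataclass

@dataclass
class Turn:
    speaker: str
    text: str

# Hypothetical script convention: every line begins with a [SPEAKER] tag.
TAG = re.compile(r"^\[(?P<speaker>[^\]]+)\]\s*(?P<text>.+)$")

def parse_dialogue(script: str) -> list[Turn]:
    """Split a tagged script into ordered (speaker, text) turns."""
    turns = []
    for line in script.strip().splitlines():
        line = line.strip()
        if not line:
            continue  # allow blank lines between turns
        m = TAG.match(line)
        if m is None:
            raise ValueError(f"line has no speaker tag: {line!r}")
        turns.append(Turn(m["speaker"], m["text"]))
    return turns

def assign_voices(turns: list[Turn], voice_pool: list[str]) -> dict[str, str]:
    """Map each distinct speaker to a voice in order of first appearance,
    so a character keeps the same voice for the whole dialogue."""
    voices: dict[str, str] = {}
    for turn in turns:
        if turn.speaker not in voices:
            if len(voices) == len(voice_pool):
                raise ValueError("more speakers than available voices")
            voices[turn.speaker] = voice_pool[len(voices)]
    return voices

script = """
[NARRATOR] The shop bell rang as Alice stepped inside.
[ALICE] Hello? Is anyone here?
[NARRATOR] Somewhere in the back, a kettle began to whistle.
"""
turns = parse_dialogue(script)
voices = assign_voices(turns, ["voice_a", "voice_b", "voice_c"])  # hypothetical voice IDs
for turn in turns:
    print(voices[turn.speaker], turn.text)
```

Assigning voices by first appearance keeps the mapping deterministic, which matters for audiobooks and training content where a character must sound the same in every chapter.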

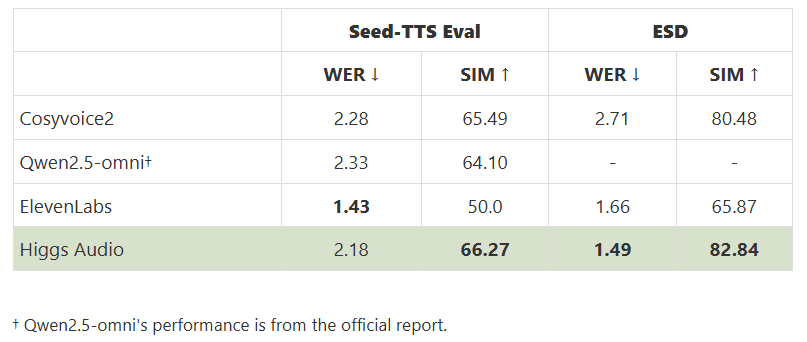

Benchmark results show Higgs Audio outperforming top competitors, including CosyVoice2, Qwen2.5-Omni, and ElevenLabs. On standard tests such as Seed-TTS and the Emotional Speech Dataset (ESD), Higgs Audio achieved significantly higher emotional accuracy while matching or beating competitors on word error rate (approximately 1.5–2%). These results demonstrate Higgs Audio’s clarity, expressiveness, and realism, setting a new benchmark for audio generation.
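For context on the roughly 1.5–2% figure: word error rate is the word-level edit distance between a reference and a hypothesis, divided by the number of reference words. In TTS evaluation the hypothesis is usually an ASR transcript of the synthesized audio and the reference is the input text. The sketch below is a minimal version; real benchmarks also apply text normalization (punctuation, numbers, casing rules) that this example reduces to lowercasing and whitespace splitting.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    via a word-level Levenshtein (edit distance) dynamic program."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the ref prefix processed so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,          # delete ref[i-1]
                          curr[j - 1] + 1,      # insert hyp[j-1]
                          prev[j - 1] + cost)   # substitute (or match)
        prev = curr
    return prev[-1] / len(ref)

# Input text vs. an ASR transcript of the synthesized audio: one substitution in five words.
print(word_error_rate("please file my claim today", "please file a claim today"))  # 0.2
```

On this scale, a WER of 0.015 means about 1.5 word errors per 100 words of input text.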

Under the Hood: LLMs, Audio Tokenizers, and In‑Context Learning

Boson AI’s Higgs Audio models combine LLMs with innovative audio processing techniques. At their core are pretrained LLMs whose language understanding, contextual awareness, and reasoning abilities are extended to audio tasks. Boson AI achieves this integration by training the LLMs end-to-end on extensive paired text–audio datasets, so the models learn both the semantic content of speech and its acoustic nuances.

Boson AI’s custom audio tokenizer is a critical element: it efficiently compresses raw audio into discrete tokens using residual vector quantization (RVQ). The tokens preserve linguistic information along with subtle acoustic details such as tone and timbre, and the tokenizer balances token granularity for optimal speed and quality. These audio tokens feed into the LLM alongside text, so audio and textual context are processed together.

Higgs Audio also incorporates in-context learning, which lets the models adapt quickly without retraining. Given a simple prompt, such as a brief reference audio sample, Higgs Audio Generation can instantly perform zero-shot voice cloning that matches the reference speaking style. Similarly, Higgs Audio Understanding can rapidly customize its outputs (e.g., speaker labeling or domain-specific terminology) with minimal prompting.
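The RVQ tokenization described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: tiny hand-picked 2-D codebooks and a single made-up frame, whereas real tokenizers learn codebooks with many entries over high-dimensional encoder frames (Boson AI's codebook sizes and stage counts are not given here). Each stage quantizes the residual left by the earlier stages and emits one discrete token.

```python
# Toy 2-D codebooks, hand-picked for illustration. Each includes a zero vector,
# so adding a stage can never make the reconstruction worse.
CODEBOOKS = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0], [1.0, -1.0]],          # stage 1: coarse
    [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [-0.25, -0.25]],      # stage 2: finer
    [[0.0, 0.0], [0.0625, 0.0], [0.0, 0.0625], [-0.0625, 0.0]],  # stage 3: finest
]

def nearest(codebook, vec):
    """Index of the codebook entry closest to vec (squared Euclidean distance)."""
    return min(range(len(codebook)),
               key=lambda k: sum((c - v) ** 2 for c, v in zip(codebook[k], vec)))

def rvq_encode(vec, codebooks):
    """Quantize vec stage by stage; each stage encodes the residual left by
    the previous ones and contributes one discrete token."""
    residual = list(vec)
    codes = []
    for cb in codebooks:
        k = nearest(cb, residual)
        codes.append(k)
        residual = [r - c for r, c in zip(residual, cb[k])]
    return codes

def rvq_decode(codes, codebooks):
    """Reconstruct by summing the selected entry from every stage."""
    out = [0.0] * len(codebooks[0][0])
    for k, cb in zip(codes, codebooks):
        out = [o + c for o, c in zip(out, cb[k])]
    return out

frame = [1.3, 1.2]  # stand-in for one encoder frame
codes = rvq_encode(frame, CODEBOOKS)
print(codes, rvq_decode(codes, CODEBOOKS))  # [1, 1, 2] [1.25, 1.0625]
```

Decoding with only the first stage gives a coarse approximation; each added stage shrinks the error but costs one more token per frame. That is the granularity trade-off between speed and quality the paragraph refers to.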

Boson AI’s approach integrates transformer-based architectures, multimodal learning, and chain-of-thought (CoT) reasoning to improve interpretability and accuracy in both audio comprehension and generation. By combining the strengths of LLMs with sophisticated audio tokenization and flexible prompting, Higgs Audio delivers performance, speed, and adaptability that significantly surpass traditional audio AI solutions.

Benchmark Performance: Outpacing Industry Leaders

Boson AI extensively benchmarked Higgs Audio against top industry models, and the results show it leading or highly competitive in both audio understanding and generation.

In audio understanding, Higgs Audio matched or surpassed models such as OpenAI’s GPT-4o-audio and Google’s Gemini-2.0 Flash. It delivered top-tier speech recognition accuracy, with state-of-the-art results on Mozilla Common Voice (English), robust performance on challenging tasks such as Chinese speech recognition, and strong results on benchmarks such as LibriSpeech and FLEURS.

Higgs Audio Understanding truly differentiates itself, however, on complex audio reasoning tasks. On comprehensive tests such as the AirBench Foundation and MMAU benchmarks, it outperformed Alibaba’s Qwen-Audio, GPT-4o-audio, and Gemini models, scoring an average of 59.45, which rose above 60 with CoT reasoning. This demonstrates the model’s ability to understand nuanced audio scenarios, including dialogues with background noise, and to interpret audio context logically.

On the audio generation side, Higgs Audio was evaluated against specialized TTS models, including ElevenLabs, Qwen2.5-Omni, and CosyVoice2. Higgs Audio consistently led or closely matched competitors on key benchmarks:

- Seed-TTS Eval: Higgs Audio achieved the lowest word error rate (WER), indicating highly intelligible speech, and the highest similarity to reference voices. ElevenLabs, by comparison, had slightly lower intelligibility and notably weaker voice similarity.

- Emotional Speech Dataset (ESD): Higgs Audio achieved the highest emotional similarity scores (over 80 versus mid-60s for ElevenLabs), excelling in emotionally nuanced speech generation.
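Voice similarity and emotional similarity scores like those above are commonly computed the same way in TTS evaluation: embed the generated clip and its reference with a pretrained model (a speaker-verification model for voice similarity, an emotion model for emotional similarity) and take the cosine similarity of the two vectors. The sketch below assumes that setup and a 0 to 100 scaling; the exact embedding models and scaling behind the reported numbers are not stated in this article, and the vectors are toy values.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(a, b) = a·b / (|a| |b|), a value in [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / norm

def similarity_score(generated: list[list[float]], references: list[list[float]]) -> float:
    """Mean pairwise cosine similarity, scaled to a 0-100 style score."""
    if not generated or len(generated) != len(references):
        raise ValueError("need equally many generated and reference embeddings")
    sims = [cosine_similarity(g, r) for g, r in zip(generated, references)]
    return 100.0 * sum(sims) / len(sims)

# Toy 3-d "embeddings"; a real evaluation would take these from a pretrained
# speaker-verification or emotion-recognition model.
gen = [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]]
ref = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(round(similarity_score(gen, ref), 1))
```

Cosine similarity ignores vector length, so the score reflects whether two clips point in the same direction in the embedding space (same voice or same emotion) rather than differences in loudness-like magnitude.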

Boson AI also introduced EmergentTTS-Eval, a benchmark that uses advanced audio-understanding models (including competitors’ models such as Gemini 2.0) as evaluators. In these evaluations, Higgs Audio was consistently preferred over ElevenLabs in complex scenarios involving emotional expression, pronunciation accuracy, and nuanced intonation. Overall, the benchmarks show a comprehensive advantage for Higgs Audio, giving users who adopt Boson AI’s models superior audio quality and insightful understanding capabilities.
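Judge-based evaluation of this kind usually reduces to pairwise preferences aggregated into a win rate. Below is a minimal sketch, assuming a `judge` callable (hypothetical; in practice a call to an audio-capable model such as the Gemini model mentioned above) that compares two clips and answers "first", "second", or "tie". Querying in both presentation orders and counting only consistent verdicts is a common guard against position bias; whether EmergentTTS-Eval scores comparisons exactly this way is not stated here.

```python
from collections import Counter
from typing import Callable

Verdict = str  # "first", "second", or "tie", as returned by the judge

def judged_pair(judge: Callable[[str, str], Verdict], clip_a: str, clip_b: str) -> str:
    """Query the judge in both presentation orders; award a win only when
    both orders agree, otherwise record a tie (guards against position bias)."""
    forward = judge(clip_a, clip_b)
    backward = judge(clip_b, clip_a)
    if forward == "first" and backward == "second":
        return "A"
    if forward == "second" and backward == "first":
        return "B"
    return "tie"

def win_rate(outcomes: list[str], system: str = "A") -> float:
    """Fraction of comparisons won by `system`, counting each tie as half a win."""
    if not outcomes:
        raise ValueError("no comparisons")
    counts = Counter(outcomes)
    return (counts[system] + 0.5 * counts["tie"]) / len(outcomes)

# Toy judge standing in for a model call: prefers any clip ID containing "sys_a".
def toy_judge(x: str, y: str) -> Verdict:
    if "sys_a" in x and "sys_a" not in y:
        return "first"
    if "sys_a" in y and "sys_a" not in x:
        return "second"
    return "tie"

outcomes = [judged_pair(toy_judge, f"sys_a_{i}", f"sys_b_{i}") for i in range(4)]
print(win_rate(outcomes))
```

A judge that always answers "first" regardless of content produces only ties under this scheme, so pure position bias cannot inflate either system's win rate.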

Enterprise Deployment and Use Case: Bringing Higgs Audio to Business

Higgs Audio Understanding and Generation function on a unified platform, enabling end-to-end voice AI pipelines that listen, reason, and respond, all in real time.
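The listen, reason, respond loop can be sketched as a small control-flow skeleton. This is a minimal illustration under stated assumptions: `Listener` and `Speaker` are hypothetical interfaces standing in for the understanding and generation models (they are not Boson AI's API), and the stubs return canned values so the flow runs end to end. The generator receives the caller's words as context, mirroring the article's point that the current model infers tone from context rather than from explicit style settings.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol

@dataclass
class Heard:
    """What the listening stage extracts from one caller utterance."""
    transcript: str
    urgency: str                                   # e.g. "low" or "high"
    details: dict[str, str] = field(default_factory=dict)

class Listener(Protocol):                          # stands in for an understanding model
    def understand(self, audio: bytes) -> Heard: ...

class Speaker(Protocol):                           # stands in for a generation model
    def speak(self, text: str, context: str) -> bytes: ...

def handle_turn(audio: bytes, listener: Listener, speaker: Speaker,
                reason: Callable[[Heard], str]) -> bytes:
    """One listen -> reason -> respond cycle."""
    heard = listener.understand(audio)                      # listen
    reply = reason(heard)                                   # reason
    return speaker.speak(reply, context=heard.transcript)   # respond, with caller's words as context

# Stub implementations so the control flow runs without any model.
class StubListener:
    def understand(self, audio: bytes) -> Heard:
        return Heard("I was just in a car accident.", "high", {"incident": "car accident"})

class StubSpeaker:
    def speak(self, text: str, context: str) -> bytes:
        return text.encode("utf-8")                # a real model would return audio

def claims_reasoner(heard: Heard) -> str:
    incident = heard.details.get("incident", "issue")
    opener = f"I'm sorry to hear about the {incident}."
    if heard.urgency == "high":
        return opener + " Let's start your claim right away."
    return opener + " How can I help?"

print(handle_turn(b"\x00\x01", StubListener(), StubSpeaker(), claims_reasoner))
```

Keeping the three stages behind narrow interfaces means the stubs can later be swapped for real model clients without touching the control flow.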

- Customer Support: At a company like Chubb, a virtual claims agent powered by Higgs Audio can transcribe customer calls with high accuracy, detect stress or urgency, and identify key claim details. It separates speakers automatically and interprets context (e.g., recognizing a car accident scenario). Higgs Audio Generation responds in an empathetic, natural voice, even adapting to the caller’s accent. This improves resolution speed, reduces staff workload, and boosts customer satisfaction.

- Media & Training Content: Enterprises producing e-learning or training materials can use Higgs Audio Generation to create multi-voice, multilingual narrations without hiring voice actors. Higgs Audio Understanding ensures quality control by verifying script adherence and emotional tone. Teams can also transcribe and analyze meetings for speaker sentiment and key takeaways, streamlining internal knowledge management.

- Compliance & Analytics: In regulated industries, Higgs Audio Understanding can monitor conversations for compliance by recognizing intent beyond keywords. It detects deviations from approved scripts, flags sensitive disclosures, and surfaces customer trends or pain points over thousands of calls, enabling proactive insights and regulatory adherence.
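Because the compliance use case depends on recognizing intent rather than matching keywords, the sketch below assumes the understanding model has already labeled each turn with a speaker and an intent (the labels and the tuple format are hypothetical). It shows only the downstream rule check: required script steps are present and in order, and no forbidden intents appear.

```python
def check_script(labeled_turns, required_steps, forbidden=frozenset()):
    """labeled_turns: (speaker, intent) pairs, assumed to come from an upstream
    audio-understanding step. Returns a dict of compliance findings."""
    agent_intents = [intent for speaker, intent in labeled_turns if speaker == "agent"]
    missing = [step for step in required_steps if step not in agent_intents]
    # Order check: positions of first occurrence, in the order the script requires.
    positions = [agent_intents.index(step) for step in required_steps if step in agent_intents]
    out_of_order = positions != sorted(positions)
    flagged = [intent for intent in agent_intents if intent in forbidden]
    return {"missing": missing, "out_of_order": out_of_order, "flagged": flagged}

call = [
    ("agent", "greeting"),
    ("customer", "report_claim"),
    ("agent", "recording_disclosure"),
    ("agent", "verify_identity"),
    ("agent", "guarantee_payout"),
]
required = ["greeting", "verify_identity", "recording_disclosure"]
print(check_script(call, required, forbidden={"guarantee_payout"}))
```

Running the same check over thousands of labeled calls turns individual findings into the trend and pain-point reporting the paragraph describes.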

Boson AI offers flexible deployment options (API, cloud, on-premises, or licensing), and its models adapt through prompt-based customization. Using in-context learning, enterprises can tailor outputs to domain-specific terms or workflows and build intelligent voice agents that match their internal vocabulary and tone. From multilingual chatbots to automated meeting summaries, Higgs Audio delivers conversational AI that feels truly human, raising the quality and capability of enterprise voice applications.
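In-context customization amounts to putting the domain knowledge into the prompt instead of into the weights. Below is a minimal sketch, assuming a plain-text instruction prompt; the layout, glossary entries, and intent labels are hypothetical, and in a real request the call audio would accompany this text in whatever format the deployed API expects.

```python
def build_context_prompt(glossary: dict[str, str],
                         examples: list[tuple[str, str]],
                         task: str) -> str:
    """Assemble a text prompt that steers a model with domain terms and
    worked examples rather than retraining (in-context learning)."""
    lines = ["Use these domain terms exactly as written:"]
    lines += [f"- {term}: {meaning}" for term, meaning in glossary.items()]
    for i, (example_in, example_out) in enumerate(examples, start=1):
        lines.append(f"Example {i} input: {example_in}")
        lines.append(f"Example {i} output: {example_out}")
    lines.append(f"Task: {task}")
    return "\n".join(lines)

prompt = build_context_prompt(
    glossary={"FNOL": "first notice of loss",
              "subrogation": "recovering claim costs from a liable third party"},
    examples=[("Caller: I want to report that my car was hit.",
               "[CUSTOMER] intent=FNOL")],
    task="Label each speaker turn in the attached call with an intent.",
)
print(prompt)
```

Updating the glossary or examples changes the model's behavior on the next request, which is why this style of customization needs no retraining cycle.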

Future Outlook and Strategic Takeaways

Boson AI’s roadmap for Higgs Audio points to a strong pipeline of features that deepen audio understanding and generation. A key upcoming capability is multi-voice cloning: the model would learn multiple voice profiles from short samples and generate natural conversations among those speakers. This goes beyond current single-speaker cloning, and Boson AI’s TTS demo already hints at its arrival. It would enable use cases such as AI-powered cast recordings or consistent virtual voices across customer touchpoints.

Another development is explicit control over style and emotion. While the current model infers emotion from context, future versions may let users specify parameters such as “cheerful” or “formal,” improving brand consistency and user experience. The Smart Voice feature previewed in Boson AI’s demos suggests an intelligent voice-selection system that matches a voice to a script’s tone and intent.

On the understanding side, future updates may enhance comprehension with features like long-form conversation summarization, deeper reasoning via expanded chain-of-thought capabilities, and real-time streaming support. These advancements could enable applications like live analytics for support calls or AI-driven meeting insights.

Strategically, Boson AI positions Higgs Audio as a unified enterprise audio AI solution. By adopting Higgs Audio, companies can access the frontier of voice AI with tools that understand, reason, and speak with human-level nuance. Its dual strength in understanding and generation, built on shared infrastructure, allows seamless integration and continuous improvement. Enterprises can benefit from a consistent platform where models evolve together, one that adapts easily and stays ahead of the curve. Boson AI offers a future-proof foundation for enterprise innovation in a world increasingly shaped by audio interfaces.

Sources

- https://boson.ai/

- https://boson.ai/blog/higgs-audio/

- https://boson.ai/demo/shop

- https://boson.ai/demo/tts

Thanks to the Boson AI team for the thought leadership and resources for this article. The Boson AI team has financially supported us for this content/article.

The post Boson AI Introduces Higgs Audio Understanding and Higgs Audio Generation: An Advanced AI Solution with Real-Time Audio Reasoning and Expressive Speech Synthesis for Enterprise Applications appeared first on MarkTechPost.
