LLMs have demonstrated strong general-purpose performance across many tasks, including mathematical reasoning and automation. However, they struggle in domain-specific applications where specialized knowledge and nuanced reasoning are essential. These difficulties arise primarily because long-tail domain knowledge is hard to represent accurately within a finite parameter budget, which leads to hallucinations and weak domain-specific reasoning. Conventional approaches to domain adaptation, such as fine-tuning or continual pretraining, often leave knowledge untraceable and raise training costs. Retrieval-augmented generation (RAG) helps supplement knowledge, but it typically falls short in teaching models how to reason with that information. A key research challenge, therefore, is to separate the learning of domain knowledge from the learning of reasoning, so that models can prioritize cognitive skill development under limited resources.

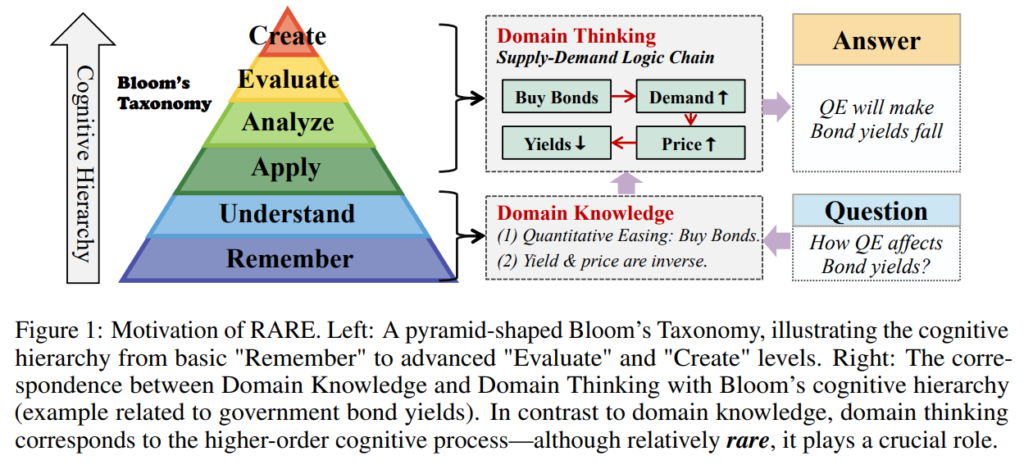

Education theory, particularly Bloom’s Taxonomy, suggests that building advanced reasoning skills requires more than memorizing knowledge. Higher-order cognitive abilities such as analysis, evaluation, and synthesis are often hindered when models are burdened with memorizing extensive domain facts. This raises the question of whether reasoning capabilities can be enhanced independently of large-scale knowledge internalization. In practice, many existing methods focus heavily on storing knowledge within model parameters, which complicates updates and increases the risk of outdated or incorrect outputs. Even retrieval-based techniques treat retrieved documents as inputs to answer from rather than as material for learning reasoning processes. The future of domain-specific intelligence may therefore depend on approaches that reduce reliance on internal memorization and instead use external knowledge sources as scaffolds for developing reasoning skills, enabling smaller models to solve complex tasks more efficiently.

Researchers from Peking University, Shanghai Jiao Tong University, Northeastern University, Nankai University, the Institute for Advanced Algorithms Research (Shanghai), OriginHub Technology, MemTensor, and the Shanghai Artificial Intelligence Laboratory have introduced a new paradigm called Retrieval-Augmented Reasoning Modeling (RARE). Inspired by Bloom’s Taxonomy, RARE separates knowledge storage from reasoning: domain knowledge lives in external databases, while training focuses the model on reasoning over the retrieved context. This lets models bypass memory-heavy factual learning and prioritize cognitive skill development. Experiments show that lightweight RARE-trained models outperform larger models such as GPT-4 on domain-specific benchmarks, offering a scalable and efficient approach to domain-specific intelligence.

The proposed framework shifts the focus from memorizing domain knowledge to developing reasoning skills. By combining retrieved external knowledge with step-by-step reasoning, models generate responses grounded in understanding and application rather than recall. The framework treats each response as a sequence of knowledge tokens and reasoning tokens, and its training objective targets both the integration of retrieved information and contextual inference. Training data are built by distilling responses from expert models, with an adaptive refinement step to improve correctness. Grounded in cognitive theories such as contextual learning, the approach enables lightweight models to achieve strong domain-specific performance through fine-tuning and reasoning-centric training.
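To make this training setup more concrete, here is a minimal Python sketch, built on our own assumptions, of how a RARE-style training example might be assembled. Retrieved passages go into the prompt, an expert "teacher" model supplies a rationale, that rationale is kept only if its final answer matches the gold label (a simple stand-in for the adaptive refinement step), and the loss is averaged only over the rationale-and-answer tokens. The `Example` class, the `teacher` callable, the prompt template, and the retry-with-feedback loop are all hypothetical illustrations. They are not the paper's code or its exact objective.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Example:
    question: str
    answer: str
    # Passages from a hypothetical domain retriever; they live in the prompt, not in the weights.
    retrieved: List[str] = field(default_factory=list)
    # Reasoning chain, filled in by distillation from an expert (teacher) model.
    rationale: str = ""


def build_prompt(ex: Example) -> str:
    """Context the student conditions on: retrieved knowledge plus the question."""
    knowledge = "\n".join(f"- {doc}" for doc in ex.retrieved)
    return f"Knowledge:\n{knowledge}\nQuestion: {ex.question}\n"


def build_target(ex: Example) -> str:
    """What the student is trained to generate: the reasoning, then the answer."""
    return f"Reasoning: {ex.rationale}\nAnswer: {ex.answer}"


# Hypothetical teacher interface: prompt -> (rationale, predicted_answer).
Teacher = Callable[[str], Tuple[str, str]]


def distill_with_refinement(
    ex: Example, teacher: Teacher, max_attempts: int = 3
) -> Optional[Example]:
    """Keep a teacher rationale only if its answer matches the gold label.

    On a wrong answer, re-prompt with a note about the failed attempt
    (a simple stand-in for adaptive refinement). Returns None if every
    attempt fails, so the example is dropped from the training set.
    """
    prompt = build_prompt(ex)
    for _ in range(max_attempts):
        rationale, predicted = teacher(prompt)
        if predicted.strip().lower() == ex.answer.strip().lower():
            return Example(ex.question, ex.answer, list(ex.retrieved), rationale)
        prompt = build_prompt(ex) + f"Note: a previous attempt answered '{predicted}', which is wrong.\n"
    return None


def masked_nll(token_logprobs: List[float], loss_mask: List[int]) -> float:
    """Mean negative log-likelihood over target positions only (mask == 1).

    Prompt positions, including the retrieved knowledge, get mask 0, so the
    loss scores the reasoning and answer, never the recall of the facts.
    """
    if len(token_logprobs) != len(loss_mask):
        raise ValueError("token_logprobs and loss_mask must have the same length")
    n_target = sum(loss_mask)
    if n_target == 0:
        raise ValueError("loss_mask selects no target tokens")
    return -sum(lp for lp, m in zip(token_logprobs, loss_mask) if m) / n_target


if __name__ == "__main__":
    ex = Example(
        question="Deficiency of which vitamin causes scurvy?",
        answer="vitamin C",
        retrieved=["Scurvy results from a prolonged lack of ascorbic acid (vitamin C)."],
    )
    # Scripted toy teacher: deliberately wrong on the first try, right on the second.
    attempts = iter([
        ("Scurvy affects the gums, so it must be vitamin D.", "vitamin D"),
        ("The passage ties scurvy to ascorbic acid, which is vitamin C.", "Vitamin C"),
    ])

    def toy_teacher(prompt: str) -> Tuple[str, str]:
        return next(attempts)

    kept = distill_with_refinement(ex, toy_teacher)
    if kept is not None:
        print(build_prompt(kept) + build_target(kept))
    # Three tokens: the first is prompt (masked out), the last two are target.
    print(masked_nll([-1.0, -2.0, -0.5], [0, 1, 1]))  # 1.25
```

The design choice to notice is the mask. The retrieved facts sit on the masked-out prompt side, so the loss never asks the model to reproduce them from memory. It only scores the reasoning that uses them. In a real pipeline, the per-token log-probabilities would come from the student model's forward pass over the tokenized prompt and target, not from a hand-written list.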

The study evaluates the RARE framework on five healthcare-focused QA datasets that require multi-hop reasoning. Lightweight models such as Llama-3.1-8B, Qwen-2.5-7B, and Mistral-7B were compared against chain-of-thought (CoT) prompting, supervised fine-tuning (SFT), and RAG baselines. RARE consistently outperforms these baselines across all tasks, with notable gains in medical diagnosis and scientific reasoning. RARE-trained models also achieved higher accuracy than DeepSeek-R1-Distill-Llama-8B and GPT-4, exceeding GPT-4 by over 20% on some tasks. These findings suggest that training models for domain-specific reasoning through structured, contextual learning is more effective than merely increasing model size or relying on retrieval alone.

In conclusion, the study presents RARE, a framework that enhances domain-specific reasoning in LLMs by separating knowledge storage from reasoning development. Drawing on Bloom’s Taxonomy, RARE avoids parameter-heavy memorization by retrieving external knowledge at inference time and injecting it into training prompts, which encourages contextual reasoning. This shift allows lightweight models to outperform larger ones such as GPT-4 on medical tasks, achieving up to 20% higher accuracy. By pairing maintainable knowledge bases with efficient, reasoning-focused models, RARE offers a scalable path to domain-specific intelligence. Future work will explore reinforcement learning, data curation, and applications to multi-modal and open-domain tasks.

The post RARE (Retrieval-Augmented Reasoning Modeling): A Scalable AI Framework for Domain-Specific Reasoning in Lightweight Language Models appeared first on MarkTechPost.

[Register Now] miniCON Virtual Conference on OPEN SOURCE AI: FREE REGISTRATION + Certificate of Attendance + 3 Hour Short Event (April 12, 9 am- 12 pm PST) + Hands on Workshop [Sponsored]