Reinforcement Learning with Verifiable Rewards (RLVR) has proven effective at improving the reasoning and coding abilities of LLMs, particularly in domains where structured reference answers allow clear-cut verification. The approach relies on reference-based signals that indicate whether a model’s response agrees with a known correct answer, typically as binary correctness labels or graded scores. So far, RLVR has mainly been applied to math and coding, where rule-based or tool-assisted verification is straightforward. Extending it to more complex, less structured tasks has been difficult because responses are hard to verify against open-ended or ambiguous reference answers. Generative models and closed-source LLMs such as GPT-4o have been explored as verifiers, but these solutions often remain domain-specific and require extensive annotated datasets for training.
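To make the verification problem concrete, here is a minimal sketch of a rule-based binary reward, assuming a simple normalized exact-match check. The function names and the normalization rules are illustrative assumptions, not the checker used in any particular RLVR system.

```python
def normalize(text: str) -> str:
    """Lowercase, collapse whitespace, and drop a trailing period."""
    return " ".join(text.lower().split()).rstrip(".")


def rule_based_reward(response: str, reference: str) -> int:
    """Hypothetical rule-based verifier: 1 on normalized exact match, else 0."""
    return int(normalize(response) == normalize(reference))


# A short, structured answer is easy to verify.
math_reward = rule_based_reward("  3/4 ", "3/4")

# A correct paraphrase of a free-form reference gets no credit.
free_form_reward = rule_based_reward(
    "Insulin lowers blood glucose.",
    "It reduces the level of sugar in the blood",
)
```

The second call scores a semantically correct answer as wrong, which is the kind of mismatch that makes string rules a poor fit for free-form domains.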

Recent work aims to broaden RLVR through generative reward modeling, in which an LLM uses its generative abilities to produce judgments and justifications. Such models can be trained without detailed rationales, relying instead on the confidence of the verifier’s outputs to produce stable reward signals, which supports reinforcement learning on tasks with noisy or ambiguous labels. Researchers are also exploring RLVR in a wider range of domains using more free-form reference answers (sourced from expert annotations and pretraining data, or generated by LLMs), moving beyond narrowly defined tasks like math and logic puzzles. These efforts are a significant step toward scalable, domain-general RLVR training.
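One way to read "confidence of the verifier’s outputs" is as the probability the verifier assigns to a "correct" judgment. The sketch below assumes the verifier exposes two logits at the judgment position, one for the token meaning "correct" and one for "incorrect"; the function names and the two-token renormalization are assumptions for illustration, not the paper’s exact formulation.

```python
import math


def soft_reward(logit_correct: float, logit_incorrect: float) -> float:
    """Probability of the 'correct' judgment, renormalized over the two
    judgment tokens (a numerically stable two-way softmax)."""
    m = max(logit_correct, logit_incorrect)
    e_correct = math.exp(logit_correct - m)
    e_incorrect = math.exp(logit_incorrect - m)
    return e_correct / (e_correct + e_incorrect)


def binary_reward(logit_correct: float, logit_incorrect: float) -> int:
    """Hard 0/1 judgment: 1 if 'correct' is the more likely token."""
    return int(logit_correct > logit_incorrect)


# Hypothetical logits from a verifier that leans toward "correct".
confident = soft_reward(2.0, 0.0)
uncertain = soft_reward(0.0, 0.0)
```

The soft variant keeps the verifier’s uncertainty in the reward, while the binary variant discards it, so a borderline response and a clearly correct one receive the same score.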

Researchers from Tencent AI Lab and Soochow University extend RLVR to complex, unstructured domains such as medicine, chemistry, and education. They show that binary correctness judgments remain consistent across LLMs when expert-written references are available. To address the limitations of binary rewards in free-form tasks, they introduce soft, generative model-based reward signals. Using compact 7B models, they train cross-domain reward verifiers without requiring extensive domain-specific annotation. Their RLVR framework significantly outperforms top open-source models on reasoning tasks and scales effectively. They also release a 570k-example dataset to support further research in multi-domain RLVR.

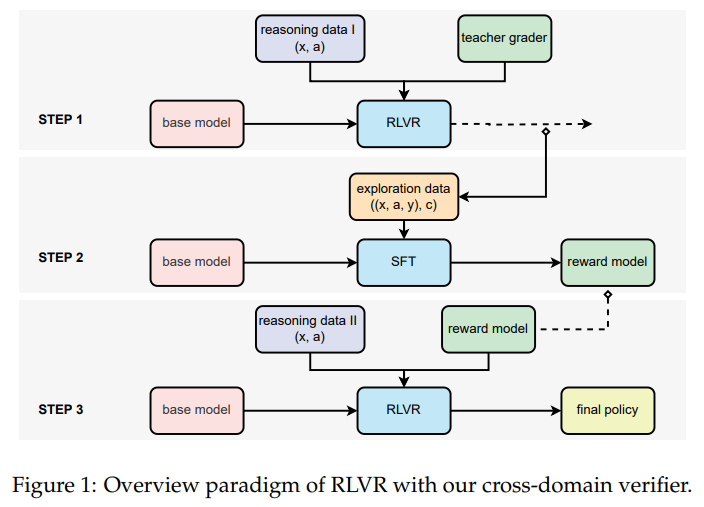

The method uses expert-written reference answers to guide reward estimation for reinforcement learning. Each response is evaluated by a generative LLM verifier, which outputs either a binary (0/1) reward or a soft reward based on the likelihood of correctness. Rewards are then z-score normalized for more stable training and better learning dynamics. To avoid relying solely on large models, the authors train a compact 7B generative reward model on judgments collected during RL exploration: binary labels are obtained from a larger LLM and used to fine-tune the smaller verifier. This approach balances performance and efficiency while increasing robustness to noise and formatting variations.
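The z-score normalization step can be sketched as follows. This is a minimal illustration assuming rewards are normalized over one batch of responses (for example, the samples drawn for a single prompt); the batching choice, the function name, and the `eps` stabilizer are assumptions rather than details from the paper.

```python
from statistics import fmean, pstdev


def zscore_normalize(rewards, eps=1e-6):
    """Shift rewards to zero mean and scale to roughly unit variance.

    `eps` (an assumed stabilizer) avoids division by zero when every
    response in the batch received the same reward.
    """
    mean = fmean(rewards)
    std = pstdev(rewards)  # population standard deviation of the batch
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical verifier rewards for four sampled responses.
normalized = zscore_normalize([1.0, 0.0, 1.0, 0.0])

# If all responses score the same, no response is preferred.
flat = zscore_normalize([1.0, 1.0, 1.0])
```

After normalization, responses scored above the batch mean get a positive signal and those below get a negative one, regardless of whether the raw rewards were binary or soft.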

The study uses two large-scale Chinese QA datasets: one with 773k free-form math questions across school levels, and another with 638k multi-subject, college-level questions from ExamQA. Both feature complex, unstructured answers that challenge rule-based reward methods. The researchers trained a 7B reward model (RM-7B) on 160k distilled samples and tested various RL approaches. Results show that RL with model-based rewards outperforms rule-based methods and supervised fine-tuning (SFT), especially on reasoning tasks. Notably, RM-7B achieves performance close to the larger 72B model, highlighting its efficiency. Binary rewards outperform soft rewards in rule-based settings due to semantic mismatch issues.

In conclusion, the study simplifies reward modeling by training a generative model to output binary scores (1 or 0) without relying on chain-of-thought (CoT) reasoning. While CoT aids reasoning, whether it is necessary for verifying semantic similarity remains unclear. Unlike past work that relied on format-based scoring, this approach avoids strict answer formatting, reducing manual effort. The research extends RLVR beyond structured domains to areas like medicine and economics, where reference answers are less well defined. Using a 7B model, it shows that soft, model-based rewards enhance performance in free-form tasks, outperforming larger models and improving RLVR’s adaptability and scalability.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Scalable Reinforcement Learning with Verifiable Rewards: Generative Reward Modeling for Unstructured, Multi-Domain Tasks appeared first on MarkTechPost.
