Sparse autoencoders are central tools in analyzing how large language models function internally. Translating complex internal states into interpretable components allows researchers to break down neural activations into parts that make sense to humans. These methods support tracing logic paths and identifying how particular tokens or phrases influence model behavior. Sparse autoencoders are especially valuable for interpretability applications, including circuit analysis, where understanding what each neuron contributes is crucial to ensuring trustworthy model behavior.

A pressing issue with sparse autoencoder training lies in aligning training objectives with how performance is measured during model inference. Traditionally, training uses mean squared error (MSE) on precomputed model activations. However, this doesn’t optimize for cross-entropy loss, which is used to judge performance when reconstructed activations replace the originals. This mismatch results in reconstructions that perform poorly in real inference settings. More direct methods that train on both MSE and KL divergence solve this issue, but they demand considerable computation, which limits their adoption in practice.

Several approaches have attempted to improve sparse autoencoder training. Full end-to-end training combining KL divergence and MSE losses offers better reconstruction quality. Still, it comes with a high computational cost of up to 48× higher due to multiple forward passes and lack of activation amortization. An alternative involves using LoRA adapters to fine-tune the base language model around a fixed autoencoder. While efficient, this method modifies the model itself, which isn’t ideal for applications that require analyzing the unaltered architecture.

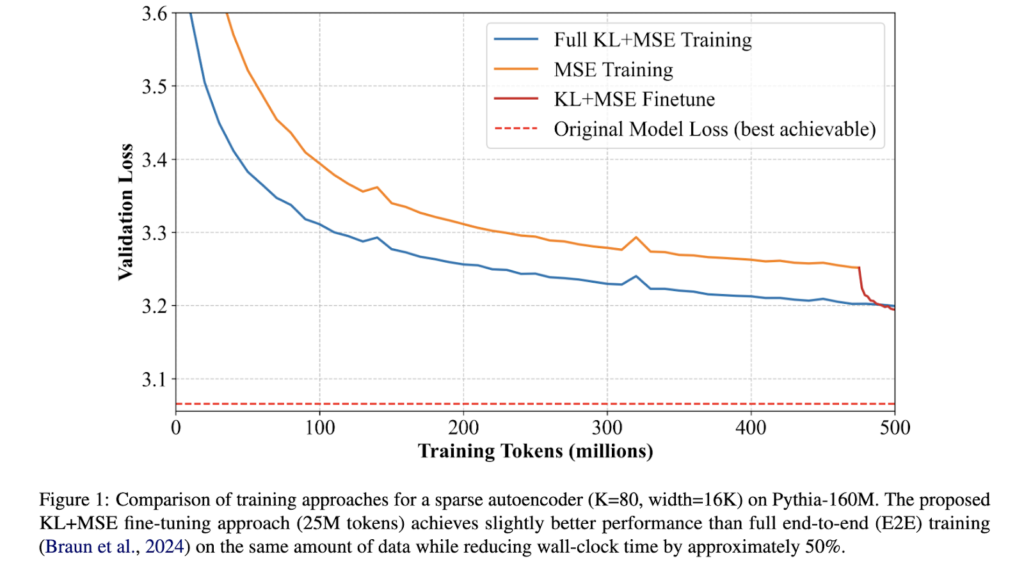

An independent researcher from Deepmind has introduced a new solution that applies a brief KL+MSE fine-tuning step at the tail end of the training, specifically for the final 25 million tokens—just 0.5–10% of the usual training data volume. The models come from the Gemma team and Pythia project. It avoids altering the model architecture and minimizes complexity while achieving performance similar to full end-to-end training. It also allows training time savings of up to 90% in scenarios with large models or amortized activation collection without requiring additional infrastructure or algorithmic changes.

To implement this, the training begins with standard MSE on shuffled activations, followed by a short KL+MSE fine-tuning phase. This phase uses a dynamic balancing mechanism to adjust the weight of KL divergence relative to MSE loss. Instead of manually tuning a fixed β parameter, the system recalculates the KL scaling factor per training batch. The formula ensures the total combined loss maintains the same scale as the original MSE loss. This dynamic control prevents the need for additional hyperparameters and simplifies transfer across model types. Fine-tuning is executed with a linear decay of the learning rate from 5e-5 to 0 over the 25M token window, aligning the process with practical compute budgets and preserving sparsity settings from earlier training.

Performance evaluations show that this approach reduced the cross-entropy loss gap by 20% to 50%, depending on the sparsity setting. For example, on Pythia-160M with K=80, the KL+MSE fine-tuned model performed slightly better than a full end-to-end model, requiring 50% less wall-clock time. At higher sparsity (K=160), the fine-tuned MSE-only model achieved similar or marginally better outcomes than KL+MSE, possibly due to the simplicity of the objective. Tests with LoRA and linear adapters revealed that their benefits do not stack, as each method corrects a shared error source in MSE-trained autoencoders. Even very low-rank LoRA adapters (rank 2) captured over half the performance gains of full fine-tuning.

Although cross-entropy results consistently favored the fine-tuned method, interpretability metrics showed mixed trends. On SAEBench, ReLU-based sparse autoencoders saw improvements in sparse probing and RAVEL metrics, while performance on spurious correlation and targeted probe tasks dropped. TopK-based models showed smaller, more inconsistent changes. These results suggest that fine-tuning may yield reconstructions better aligned with model predictions but may not always enhance interpretability, depending on the specific evaluation task or architecture type.

This research underscores a meaningful advancement in sparse autoencoder training: a computationally light, technically simple method that improves reconstruction accuracy without modifying base models. It addresses key alignment issues in training objectives and delivers practical results across models and sparsity levels. While not uniformly superior in all interpretability metrics, it offers a favorable trade-off between performance and simplicity for tasks like circuit-level analysis.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, feel free to follow us on Twitter and don’t forget to join our 85k+ ML SubReddit.

The post This AI Paper Introduces a Short KL+MSE Fine-Tuning Strategy: A Low-Cost Alternative to End-to-End Sparse Autoencoder Training for Interpretability appeared first on MarkTechPost.

Source: Read MoreÂ

[Register Now] miniCON Virtual Conference on OPEN SOURCE AI: FREE REGISTRATION + Certificate of Attendance + 3 Hour Short Event (April 12, 9 am- 12 pm PST) + Hands on Workshop [Sponsored]

[Register Now] miniCON Virtual Conference on OPEN SOURCE AI: FREE REGISTRATION + Certificate of Attendance + 3 Hour Short Event (April 12, 9 am- 12 pm PST) + Hands on Workshop [Sponsored]