Large language models have transformed how machines comprehend and generate text, especially in complex problem-solving areas such as mathematical reasoning. One class of these systems, known as R1-like models, is designed to emulate slow, deliberate thought processes. Their key strength is handling intricate tasks that require step-by-step reasoning across long sequences. This makes them valuable for applications such as solving Olympiad-level math problems or logical reasoning tasks, where depth and coherence of reasoning are essential.

A significant challenge in training these models is the heavy computation that reinforcement learning with long context windows requires. Tasks that need multi-step logic force models to produce long outputs, which consume more resources and slow down learning. Moreover, not all long responses contribute meaningfully to accuracy; many include redundant reasoning. These inefficiencies in response generation, together with high GPU usage, make it difficult to scale training effectively, even for models with 1.5 billion parameters.

Previous attempts to address this issue include DeepScaleR, which uses a staged context-length extension strategy during training. DeepScaleR starts with an 8K context window and expands gradually to 24K over three training phases. This approach helps the model manage longer reasoning chains efficiently: where training at long context from the start would demand approximately 70,000 A100 GPU hours, DeepScaleR's progressive strategy reduces that to 3,800 hours. It still requires considerable hardware, however, including setups with up to 32 GPUs in some stages. This shows that while improvements are possible, the solution remains costly and complex.

Researchers at Tencent introduced a method called FASTCURL to overcome the inefficiencies of traditional reinforcement learning training. This method presents a curriculum-based strategy aligned with context window expansion. FASTCURL splits the dataset based on input prompt length into short, long, and combined categories. The training progresses in four stages, each using a different dataset and context window setting. This approach ensures the model learns simple reasoning before advancing to longer, more complex reasoning steps. The researchers emphasize that the entire training process runs on a single node with just 8 GPUs, reducing setup complexity.
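The length-based split described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `split_by_prompt_length`, the use of whitespace-separated words as a stand-in for token counts, and the default threshold (the mean prompt length) are all assumptions made for the example, since the article does not state how the cutoff is chosen.

```python
from statistics import mean


def split_by_prompt_length(prompts, threshold=None):
    """Split prompts into short, long, and combined subsets.

    Hypothetical helper: length is approximated by whitespace-separated
    words, and the default threshold is the mean length. Both choices are
    assumptions for illustration only.
    """
    lengths = [len(p.split()) for p in prompts]
    if threshold is None:
        threshold = mean(lengths)
    short = [p for p, n in zip(prompts, lengths) if n <= threshold]
    long_ = [p for p, n in zip(prompts, lengths) if n > threshold]
    # The combined subset is simply every prompt, short and long together.
    return {"short": short, "long": long_, "combined": list(prompts)}


if __name__ == "__main__":
    demo = ["Solve x + 1 = 2.", "Let a, b, c be positive reals with a + b + c = 1. Prove the inequality."]
    for name, subset in split_by_prompt_length(demo).items():
        print(name, len(subset))
```

In practice a real tokenizer would replace the word count, but the structure (two disjoint subsets plus their union) stays the same.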

The approach deliberately segments data by input length, driven by the hypothesis that longer prompts usually lead to longer and more complex outputs. The model first learns from short prompts under an 8K window. As training proceeds, it moves to the combined dataset with a 16K window, then to the long dataset with the same window size, and finally revisits the combined data. Each stage is trained for one iteration, and FASTCURL requires about 860 training steps in total. Compared with DeepScaleR’s 1,750 steps, that is roughly a 50% reduction in training steps, with a corresponding saving in training time and resources, while maintaining effectiveness.
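The four-stage schedule and the step comparison can be written down as plain data to make the arithmetic explicit. The sketch below is illustrative only: the `Stage` class and `step_reduction` helper are hypothetical names, "combined" stands for the mixed short-plus-long dataset, and the fourth stage's window is left as `None` because the article does not state it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stage:
    dataset: str                      # "short", "long", or "combined"
    context_window_k: Optional[int]   # window size in K tokens, None if not stated


# Stage order as described in the article. The final window is not given
# there, so it is deliberately left unspecified rather than guessed.
FASTCURL_STAGES = [
    Stage("short", 8),
    Stage("combined", 16),
    Stage("long", 16),
    Stage("combined", None),
]


def step_reduction(new_steps: int, baseline_steps: int) -> float:
    """Fraction of training steps saved relative to a baseline."""
    return 1.0 - new_steps / baseline_steps


if __name__ == "__main__":
    for i, stage in enumerate(FASTCURL_STAGES, start=1):
        print(f"stage {i}: {stage.dataset}, window={stage.context_window_k}")
    # Step counts quoted in the article: about 860 versus 1,750.
    print(f"step reduction: {step_reduction(860, 1750):.1%}")
```

With the quoted step counts the saving works out to just over half, which is where the "roughly 50%" figure comes from.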

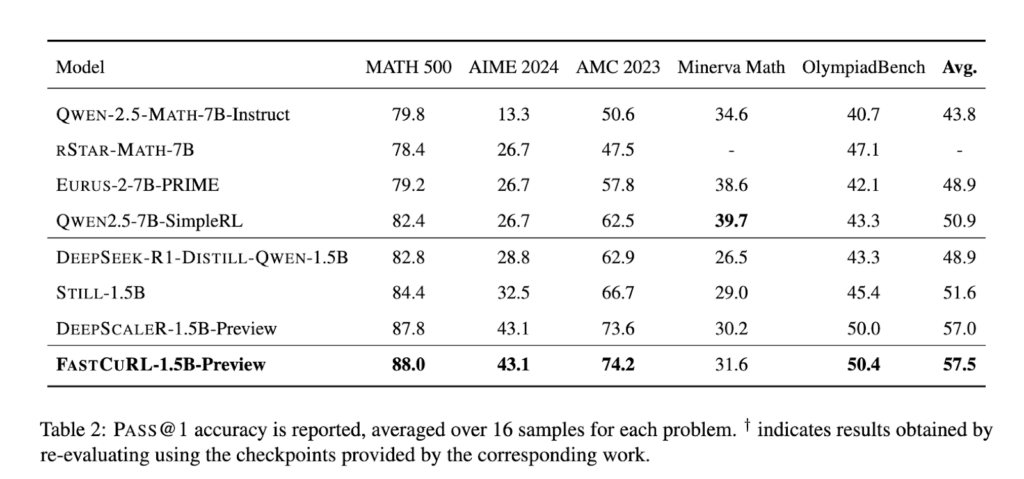

In performance evaluations, FASTCURL-1.5B-Preview showed improvements over other models across five benchmarks. It scored 88.0 on MATH 500, 43.1 on AIME 2024, 74.2 on AMC 2023, 31.6 on Minerva Math, and 50.4 on OlympiadBench, for an average pass@1 score of 57.5. Compared to DeepScaleR-1.5B-Preview, which averaged 57.0, FASTCURL performed better on four of the five benchmarks. These results indicate that FASTCURL can match or slightly outperform existing techniques while consuming significantly fewer resources. The model also showed better generalization, particularly on AMC 2023 and Minerva Math, indicating robustness.
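As a quick sanity check, the reported average can be recomputed from the five per-benchmark scores quoted above. The variable names are arbitrary; only the numbers come from the article.

```python
# Per-benchmark pass@1 scores for FASTCURL-1.5B-Preview as quoted in the article.
fastcurl_scores = {
    "MATH 500": 88.0,
    "AIME 2024": 43.1,
    "AMC 2023": 74.2,
    "Minerva Math": 31.6,
    "OlympiadBench": 50.4,
}

# Unweighted mean across the five benchmarks.
fastcurl_average = sum(fastcurl_scores.values()) / len(fastcurl_scores)

# Average reported for DeepScaleR-1.5B-Preview in the article.
deepscaler_average = 57.0

if __name__ == "__main__":
    print(f"FASTCURL average: {fastcurl_average:.1f}")
    print(f"gap vs DeepScaleR: {fastcurl_average - deepscaler_average:.1f}")
```

The unweighted mean rounds to the 57.5 stated in the text, about half a point above DeepScaleR's average.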

The research clearly outlines a computational problem in training R1-like reasoning models and offers a curriculum strategy as a solution. By combining input-based data segmentation with context expansion, the method provides an efficient and practical training framework. FASTCURL delivers strong performance using fewer steps and limited hardware, suggesting that strategic training design can be as powerful as raw computational scale.


The post This AI Paper Introduces FASTCURL: A Curriculum Reinforcement Learning Framework with Context Extension for Efficient Training of R1-like Reasoning Models appeared first on MarkTechPost.
