Process-supervised reward models (PRMs) offer fine-grained, step-wise feedback on model responses, aiding in selecting effective reasoning paths for complex tasks. Unlike output reward models (ORMs), which evaluate responses based on final outputs, PRMs provide detailed assessments at each step, making them particularly valuable for reasoning-intensive applications. While PRMs have been extensively studied in language tasks, their application in multimodal settings remains largely unexplored. Most vision-language reward models still rely on the ORM approach, highlighting the need for further research into how PRMs can enhance multimodal learning and reasoning.

Existing reward benchmarks primarily focus on text-based models, with some specifically designed for PRMs. In the vision-language domain, evaluation methods generally assess broad model capabilities, including knowledge, reasoning, fairness, and safety. VL-RewardBench is the first benchmark incorporating reinforcement learning preference data to refine knowledge-intensive vision-language tasks. Additionally, Multimodal RewardBench expands evaluation criteria beyond standard visual question answering (VQA) tasks, using expert annotations to cover six key areas: correctness, preference, knowledge, reasoning, safety, and VQA. These benchmarks provide a foundation for developing more effective reward models for multimodal learning.

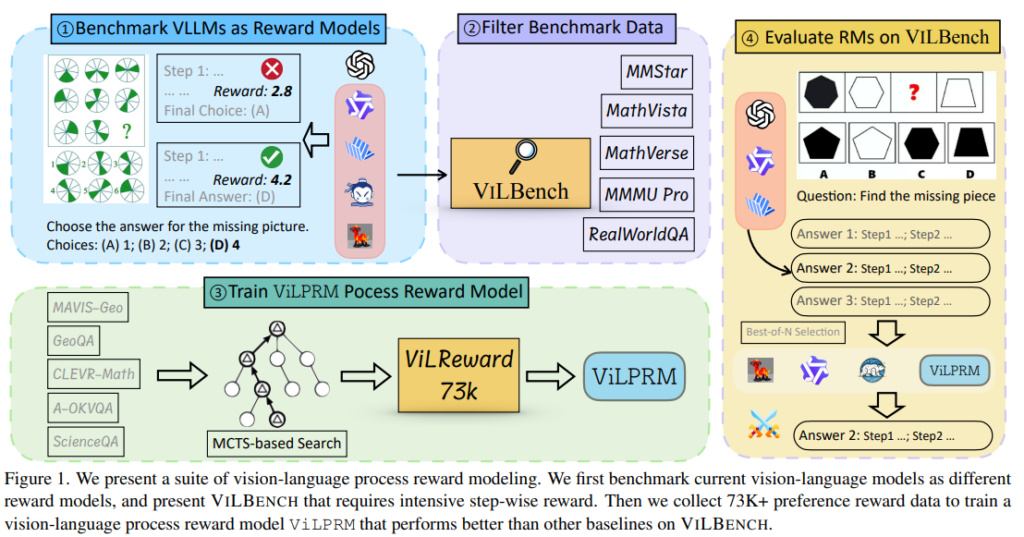

Researchers from UC Santa Cruz, UT Dallas, and Amazon Research benchmarked vision large language models (VLLMs) as ORMs and PRMs across multiple tasks, revealing that neither consistently outperforms the other. To address evaluation gaps, they introduced VILBENCH, a benchmark requiring step-wise reward feedback, where GPT-4o with Chain-of-Thought achieved only 27.3% accuracy. Additionally, they collected 73.6K vision-language reward samples using an enhanced tree-search algorithm and used them to train a 3B PRM that improved evaluation accuracy by 3.3%. Their study provides insights into vision-language reward modeling and highlights challenges in multimodal step-wise evaluation.

VLLMs are increasingly effective across various tasks, particularly when evaluated for test-time scaling. Seven models were benchmarked using the LLM-as-a-judge approach to analyze their step-wise critique abilities on five vision-language datasets. A Best-of-N (BoN) setting was used, where VLLMs scored responses generated by GPT-4o. Key findings reveal that ORMs outperform PRMs in most cases except for real-world tasks. Additionally, stronger VLLMs do not always excel as reward models, and a hybrid approach between ORM and PRM is optimal. Moreover, VLLMs benefit from text-heavy tasks more than visual ones, underscoring the need for specialized vision-language reward models.
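The Best-of-N setup described above can be illustrated with a minimal sketch. It assumes each candidate response already carries a single outcome score (ORM-style) and a list of per-step scores (PRM-style) produced by some judge model. The `Candidate` class, the mean aggregation, the `alpha` mixing weight, and the example numbers are all hypothetical choices for illustration, not the scoring scheme used in the study.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    answer: str
    outcome_score: float      # hypothetical ORM score for the whole response
    step_scores: List[float]  # hypothetical PRM scores, one per reasoning step


def orm_reward(c: Candidate) -> float:
    """ORM-style reward: judge only the response as a whole."""
    return c.outcome_score


def prm_reward(c: Candidate) -> float:
    """PRM-style reward: average the step-wise scores (an assumed aggregation)."""
    return sum(c.step_scores) / len(c.step_scores)


def hybrid_reward(c: Candidate, alpha: float = 0.5) -> float:
    """Assumed linear mix of ORM and PRM rewards; alpha is a made-up knob."""
    return alpha * orm_reward(c) + (1.0 - alpha) * prm_reward(c)


def best_of_n(candidates: List[Candidate],
              reward: Callable[[Candidate], float]) -> Candidate:
    """Return the candidate with the highest reward among the N samples."""
    return max(candidates, key=reward)


# Illustrative candidates with invented scores.
candidates = [
    Candidate("A", outcome_score=0.9, step_scores=[0.9, 0.2, 0.3]),
    Candidate("B", outcome_score=0.7, step_scores=[0.8, 0.9, 0.7]),
    Candidate("C", outcome_score=0.4, step_scores=[0.5, 0.6, 0.4]),
]

print(best_of_n(candidates, orm_reward).answer)
print(best_of_n(candidates, prm_reward).answer)
print(best_of_n(candidates, hybrid_reward).answer)
```

With these invented scores, the ORM-style reward and the PRM-style reward select different candidates, which is the kind of disagreement a Best-of-N comparison is meant to surface.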

To assess the effectiveness of ViLPRM, the 3B PRM trained on the collected reward data, experiments were conducted on VILBENCH using different RMs and solution samplers. The study compared performance across multiple VLLMs, including Qwen2.5-VL-3B, InternVL-2.5-8B, GPT-4o, and o1. On this benchmark, PRMs generally outperform ORMs, improving accuracy by 1.4%, though o1’s responses showed minimal difference due to limited detail. ViLPRM surpassed other PRMs, including URSA, by 0.9%, demonstrating superior consistency in response selection. Additionally, findings suggest that existing VLLMs are not robust enough as reward models, highlighting the need for specialized vision-language PRMs that perform well beyond math reasoning tasks.

In conclusion, vision-language PRMs perform well when reasoning steps are clearly segmented, as seen in structured tasks like mathematics. However, in tasks with unclear step divisions, PRMs can reduce accuracy, particularly in visual-dominant cases. Prioritizing key steps rather than treating all steps equally improves performance. Additionally, current multimodal reward models struggle with generalization, as PRMs trained on specific domains often fail in others. Enhancing training by incorporating diverse data sources and adaptive reward mechanisms is crucial. The introduction of ViLReward-73K improves PRM accuracy by 3.3%, but further advancements in step segmentation and evaluation frameworks are needed for robust multimodal models.
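One simple way to picture "prioritizing key steps" is a weighted average of step scores in place of a uniform one. The sketch below is only an assumption about how such weighting could look. The function name `weighted_step_reward` and the weights are hypothetical, and the article does not specify how key steps are identified or weighted.

```python
from typing import Sequence


def weighted_step_reward(step_scores: Sequence[float],
                         weights: Sequence[float]) -> float:
    """Weighted mean of step scores; larger weights mark steps assumed to be key."""
    if len(step_scores) != len(weights):
        raise ValueError("step_scores and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(step_scores, weights)) / total


# Uniform weights treat every step equally.
uniform = weighted_step_reward([1.0, 0.0], [1, 1])
# Emphasizing the first step makes its score count three times as much.
emphasized = weighted_step_reward([1.0, 0.0], [3, 1])
print(uniform, emphasized)
```

Setting all weights equal recovers the plain average, so the uniform treatment is a special case of this form.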

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Advancing Vision-Language Reward Models: Challenges, Benchmarks, and the Role of Process-Supervised Learning appeared first on MarkTechPost.
