Large Language Models (LLMs) rely on attention mechanisms to retrieve relevant information from their context. Standard attention, however, is single-token attention: each attention weight is computed from a single pair of query and key vectors. This design limits the model’s ability to identify contexts that can only be recognized by combining signals from several tokens, which in turn limits its effectiveness on complex linguistic dependencies. For example, finding sentences that contain both “Alice” and “rabbit” is difficult, because conventional attention cannot easily combine the two separate attention signals without substantially increasing model complexity.

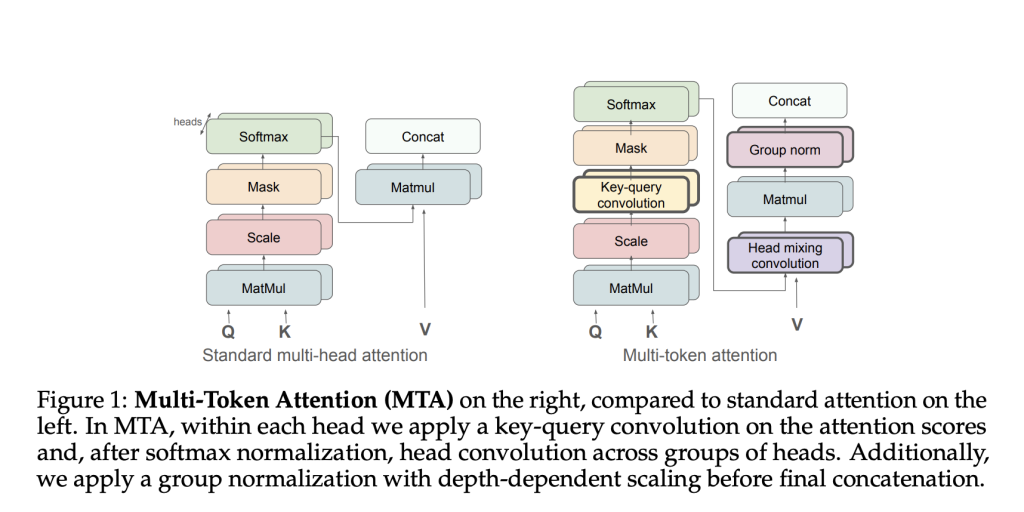

Meta AI addresses this limitation by introducing Multi-Token Attention (MTA), an advanced attention mechanism that conditions attention weights simultaneously on multiple query and key vectors. MTA integrates convolution operations over queries, keys, and attention heads, thus enhancing the precision and efficiency of contextual information retrieval. Specifically, the MTA framework consists of two convolutional components: key-query convolution, which aggregates multiple token signals within individual attention heads, and head mixing convolution, which facilitates information sharing among different attention heads. Additionally, the implementation employs group normalization with depth-dependent scaling to stabilize gradient flow, further improving model training stability and efficacy.
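To make the idea of head mixing more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the function name `head_mixing`, the grouping of heads into fixed-size groups, and the shape of the mixing weights `w` are all hypothetical choices made for this example. Each output head is simply a learned linear combination of the attention maps of the heads in its group.

```python
import numpy as np

def head_mixing(attn, w):
    """Mix attention maps across heads within groups (illustrative sketch).

    attn: array of shape (H, T, T), one attention map per head.
    w:    array of shape (G, c_h, c_h), one mixing matrix per group of
          c_h heads, with H == G * c_h. (Assumed layout, not from the source.)
    Returns an array of shape (H, T, T).
    """
    H, T, _ = attn.shape
    G, c_h, _ = w.shape
    if H != G * c_h:
        raise ValueError("number of heads must equal groups * group size")
    # Split the head axis into (group, head-within-group).
    grouped = attn.reshape(G, c_h, T, T)
    # New head a in group g = sum over heads b in the same group of w[g, a, b] * old head b.
    mixed = np.einsum("gab,gbij->gaij", w, grouped)
    return mixed.reshape(H, T, T)

# Usage: 4 heads in 2 groups of 2; identity weights leave the maps unchanged.
rng = np.random.default_rng(0)
attn = rng.random((4, 5, 5))
identity_w = np.stack([np.eye(2), np.eye(2)])
same = head_mixing(attn, identity_w)
```

With non-identity weights, a head can amplify a pattern found by a neighbouring head or subtract it out, which is one way to read the "information sharing" described above.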

At a technical level, MTA modifies the conventional attention calculation by applying a two-dimensional convolution to the attention logits before softmax normalization. This convolution lets neighboring queries and keys influence one another's attention scores, so the attention mechanism can identify contextual relationships that involve multiple tokens more precisely. As a result, the model aggregates local token interactions without substantially increasing the number of parameters or the dimensionality of the attention vectors. In addition, the head mixing convolution transfers information among attention heads, selectively amplifying relevant context signals while suppressing less pertinent ones. Together, these changes yield a more robust attention mechanism capable of capturing complex multi-token interactions.
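The key-query convolution described above can be sketched for a single head in NumPy. This is a simplified illustration, not the authors' code: the function name `key_query_conv_attention`, the kernel layout (the query axis looks back over previous queries, the key axis is a window around each key), and the masking order (zero out future positions before the convolution, mask with negative infinity afterwards) are assumptions made for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax; -inf entries become exactly 0.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def key_query_conv_attention(q, k, theta):
    """Causal attention weights with a 2D convolution over the logits.

    q, k:  arrays of shape (T, d) for one head.
    theta: kernel of shape (c_q, c_k); hypothetical layout, see lead-in.
    Returns attention weights of shape (T, T).
    """
    T, d = q.shape
    c_q, c_k = theta.shape
    causal = np.tril(np.ones((T, T), dtype=bool))
    logits = q @ k.T / np.sqrt(d)
    # Zero out future keys so they cannot leak through the convolution.
    logits = np.where(causal, logits, 0.0)
    # Pad so that shifted windows can be taken by plain slicing.
    padded = np.pad(logits, ((c_q - 1, 0), (c_k, c_k)))
    half = c_k // 2
    mixed = np.zeros_like(logits)
    for dq in range(c_q):            # offsets over previous queries
        for dk in range(c_k):        # offsets over neighbouring keys
            shift_k = dk - half
            r0 = c_q - 1 - dq
            c0 = c_k - shift_k
            # shifted[i, j] == logits[i - dq, j - shift_k] (0 outside range)
            shifted = padded[r0:r0 + T, c0:c0 + T]
            mixed += theta[dq, dk] * shifted
    # Re-apply the causal mask before normalizing.
    mixed = np.where(causal, mixed, -np.inf)
    return softmax(mixed, axis=-1)

# Usage: a kernel with a single 1 at the "no shift" position
# reduces to ordinary causal softmax attention.
rng = np.random.default_rng(0)
q = rng.normal(size=(6, 4))
k = rng.normal(size=(6, 4))
theta = np.zeros((2, 3))
theta[0, 1] = 1.0
weights = key_query_conv_attention(q, k, theta)
```

Because the kernel has only `c_q * c_k` entries per head, this kind of mixing adds very few parameters, which is consistent with the claim that MTA does not substantially increase model size.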

Empirical evaluations validate the efficacy of MTA across several benchmarks. In a structured motivating task explicitly designed to illustrate the shortcomings of single-token attention mechanisms, MTA demonstrated near-perfect performance, achieving an error rate of only 0.1%, in contrast to standard Transformer models that exhibited error rates above 50%. Further large-scale experiments involving an 880M-parameter model trained on 105 billion tokens showed MTA consistently outperforming baseline architectures. MTA achieved superior validation perplexity scores across datasets such as arXiv, GitHub, and Wikipedia. Specifically, in tasks requiring extended context comprehension, such as Needle-in-the-Haystack and BabiLong benchmarks, MTA significantly exceeded the performance of standard Transformer models. In the Needle-in-the-Haystack task with 4K token contexts containing multiple needles, MTA attained accuracies ranging from 67% to 97.6%, surpassing standard models by substantial margins.

In summary, Multi-Token Attention (MTA) addresses a fundamental limitation of traditional single-token attention. By using convolutional operations to combine multiple query-key interactions, MTA improves the ability of language models to handle intricate contextual dependencies. The gains are most visible in scenarios involving complex token interactions and long-range contextual understanding. Through targeted modifications to the standard attention mechanism, MTA contributes to the development of more accurate and computationally efficient language models.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Meta AI Proposes Multi-Token Attention (MTA): A New Attention Method which Allows LLMs to Condition their Attention Weights on Multiple Query and Key Vectors appeared first on MarkTechPost.
