Large language models are powering a new wave of digital agents designed to handle sophisticated web-based tasks. These agents are expected to interpret user instructions, navigate interfaces, and execute complex commands in ever-changing environments. The difficulty lies not in understanding language but in translating that understanding into precise, sequenced actions while adapting to dynamic contexts. Success on long-horizon tasks such as booking travel or retrieving specific web data depends on managing a sequence of steps that evolves with each action. Despite major progress in language capabilities, building agents that can plan effectively and adapt at each step remains an unsolved problem.

Decomposing broad goals into actionable steps is a major challenge in building such agents. When a user requests “follow the top contributor of this GitHub project,” the agent must interpret the command, navigate to the project’s contributors section, identify the relevant person, and then click to follow them. The task becomes even more complex in dynamic environments where page content may shift between executions. Without a clear strategy for planning and updating plans, agents can make inconsistent decisions or fail entirely. The scarcity of training data showing how to plan and execute long tasks correctly adds another layer of difficulty.

Previous attempts to address these issues relied either on single-agent strategies or on reinforcement learning to guide actions. Single-agent systems such as ReAct merge reasoning and execution in one model, but they often falter because that model is overwhelmed by having to think and act at the same time. Reinforcement learning approaches showed promise but proved unstable and highly sensitive to environment-specific tuning. Collecting training data for these methods also required extensive interaction with the environment, which is time-consuming and impractical to scale. Both families of methods struggled to maintain consistent performance when tasks changed mid-process.

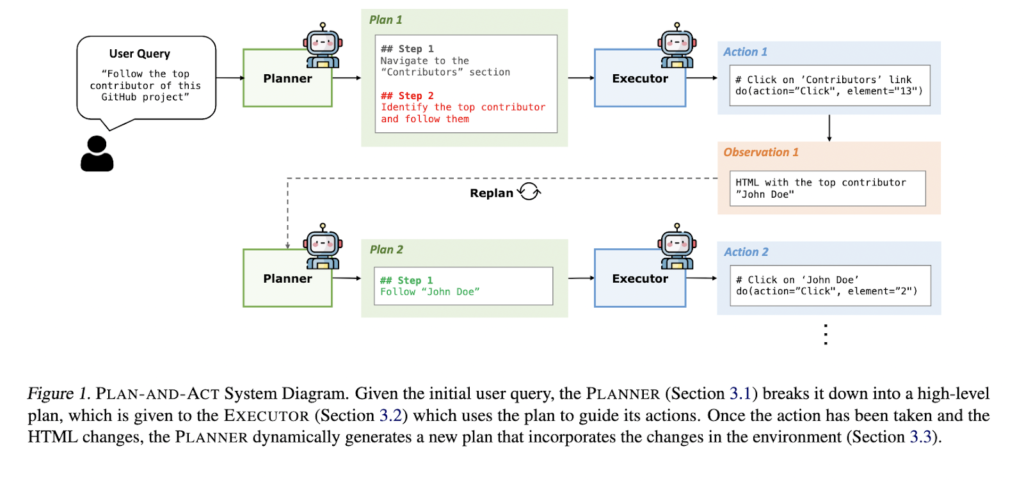

Researchers from UC Berkeley, the University of Tokyo, and ICSI introduced PLAN-AND-ACT, a new agent framework. Companies including Apple, Nvidia, Microsoft, and Intel supported the work. The framework splits task planning and execution into two modules: a PLANNER and an EXECUTOR. The PLANNER creates a structured plan from the user’s request, outlining the steps that need to be taken. The EXECUTOR then translates each step into environment-specific actions. Separating these responsibilities lets the PLANNER focus on strategy while the EXECUTOR handles low-level interaction, which improves the reliability of both components. This modular design is a clear departure from the single-agent approaches that preceded it.
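To make the division of labor concrete, here is a minimal Python sketch of a plan-then-execute loop. It is not the authors’ implementation: the `planner` and `executor` functions, the `Action` type, and their keyword rules are hypothetical stand-ins for the LLM calls the real modules would make, applied to the GitHub example from earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """One low-level, environment-specific action (hypothetical format)."""
    kind: str    # e.g. "click", "read", "exit"
    target: str  # element description or payload


def planner(request: str) -> list[str]:
    """Hypothetical PLANNER stand-in: turns a user request into high-level steps.

    A real PLANNER would prompt an LLM; this keyword rule is for illustration only.
    """
    if "top contributor" in request:
        return [
            "Open the Contributors page",
            "Identify the contributor with the most commits",
            "Follow that contributor",
        ]
    return ["Report that the task is unsupported"]


def executor(step: str) -> list[Action]:
    """Hypothetical EXECUTOR stand-in: grounds one plan step into concrete actions."""
    if step.startswith("Open"):
        return [Action("click", "Contributors tab")]
    if step.startswith("Identify"):
        return [Action("read", "first row of the contributor list")]
    if step.startswith("Follow"):
        return [Action("click", "Follow button")]
    return [Action("exit", step)]


def plan_and_act(request: str) -> list[Action]:
    """Plan once, then execute each step in order (no replanning)."""
    actions: list[Action] = []
    for step in planner(request):
        actions.extend(executor(step))
    return actions


if __name__ == "__main__":
    for action in plan_and_act("follow the top contributor of this GitHub project"):
        print(action)
```

Because the two functions share only the list of plan steps, either one could be replaced by a different model without touching the other, which is the property a modular PLANNER/EXECUTOR split relies on.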

The methodology behind PLAN-AND-ACT focuses heavily on scalable training. Because human-annotated planning data is limited, the researchers introduced a synthetic data generation pipeline. They began by collecting action trajectories from simulated agents: sequences of clicks, inputs, and responses. Large language models then analyzed these trajectories to reconstruct high-level plans grounded in actual outcomes. For example, a plan step might specify identifying the top contributor, while the actions linked to it include clicking the “Contributors” tab and parsing the resulting HTML. The team expanded the dataset with 10,000 additional synthetic plans and then generated 5,000 more targeted plans based on an analysis of where the agent failed. This synthetic approach saved annotation time and produced high-quality data that reflected real execution needs.
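The plan-reconstruction step can be sketched as follows, under loud assumptions: the annotator is a keyword rule (`label_action`, hypothetical) standing in for the LLM that reads a trajectory, and consecutive actions that receive the same label are merged into a single plan step that stays grounded in the actions that realized it. The action strings are made-up examples, not the benchmark’s action format.

```python
from itertools import groupby


def label_action(action: str) -> str:
    """Hypothetical annotator: names the high-level step an action belongs to.

    In the pipeline described above an LLM does this by reading the trajectory;
    these keyword rules are placeholders.
    """
    if "Contributors" in action:
        return "Navigate to the contributors list"
    if "Follow" in action:
        return "Follow the top contributor"
    return "Identify the top contributor"


def reconstruct_plan(trajectory: list[str]) -> list[dict]:
    """Merge consecutive actions with the same label into one grounded plan step."""
    return [
        {"step": label, "actions": list(group)}
        for label, group in groupby(trajectory, key=label_action)
    ]


if __name__ == "__main__":
    trajectory = [
        'click("Contributors tab")',
        'scroll("down")',
        'read("contributor list")',
        'click("Follow", user="alice")',
    ]
    for entry in reconstruct_plan(trajectory):
        print(entry)
```

Each entry pairs a plan step with the actions that carried it out, which is the kind of (plan, grounding) pair a synthetic pipeline like this one would feed into training. The failure-targeted generation of extra plans is not modeled here.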

In testing, PLAN-AND-ACT achieved a task success rate of 53.94% on the WebArena-Lite benchmark, surpassing the previous best result of 49.1% from WebRL. Without any planner, a base executor achieved only 9.85%. Adding a non-finetuned planner boosted performance to 29.63%, while finetuning on 10,000 synthetic plans raised it to 44.24%. Incorporating dynamic replanning added a final 10.31% performance gain. Across all experiments, most of the improvement came from enhancing the PLANNER rather than the EXECUTOR. Even with a base EXECUTOR, a strong PLANNER led to substantial increases in success rate, supporting the researchers’ hypothesis that separating planning from execution yields better task outcomes.
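Dynamic replanning can be sketched as a loop that calls the planner again after every executed step, so later steps can use what the agent has just observed. The `run_with_replanning`, `toy_replan`, and `toy_execute` names below are hypothetical, and the toy functions replace the LLM-backed PLANNER and a real browser environment; this is an illustration of the idea, not the paper’s code.

```python
from typing import Callable

# Hypothetical interfaces (not from the paper):
#   replan(goal, completed_steps, latest_observation) -> remaining steps
#   execute(step) -> observation produced by running that step
Replanner = Callable[[str, list[str], str], list[str]]
StepExecutor = Callable[[str], str]


def run_with_replanning(goal: str, replan: Replanner, execute: StepExecutor,
                        max_steps: int = 10) -> list[str]:
    """Execute one step at a time, regenerating the remaining plan after each."""
    completed: list[str] = []
    observation = ""
    remaining = replan(goal, completed, observation)
    while remaining and len(completed) < max_steps:
        step = remaining[0]
        observation = execute(step)
        completed.append(step)
        # Dynamic replanning: the rest of the plan is rebuilt from the new observation.
        remaining = replan(goal, completed, observation)
    return completed


def toy_replan(goal: str, completed: list[str], observation: str) -> list[str]:
    """Toy PLANNER: the first plan is generic; later plans use what was observed."""
    if not completed:
        return ["Open the Contributors page", "Follow the top contributor"]
    if observation.startswith("top contributor:"):
        name = observation.split(":", 1)[1].strip()
        return [f"Follow {name}"]
    return []  # nothing left to do


def toy_execute(step: str) -> str:
    """Toy environment: opening the page reveals who the top contributor is."""
    if step == "Open the Contributors page":
        return "top contributor: alice"
    return f"done: {step}"


if __name__ == "__main__":
    print(run_with_replanning("follow the top contributor", toy_replan, toy_execute))
```

In this toy run the generic step “Follow the top contributor” is rewritten as “Follow alice” once the contributors page reveals the name, the kind of mid-task update a plan fixed in advance cannot make. The `max_steps` cap guards against a planner that never returns an empty plan.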

In conclusion, this paper shows how addressing the gap between goal understanding and environment interaction can lead to more effective AI systems. By focusing on structured planning and scalable data generation, the researchers proposed a method that not only solves a specific problem but also demonstrates a framework that can extend to broader applications. PLAN-AND-ACT shows that effective planning, not just execution, is critical to the success of AI agents in complex environments.


The post This AI Paper Introduces PLAN-AND-ACT: A Modular Framework for Long-Horizon Planning in Web-Based Language Agents appeared first on MarkTechPost.
