As AWS Solutions Architects, we regularly work with customers to optimize their Amazon DynamoDB deployments. Through these engagements, we’ve identified several common misconceptions about on-demand throughput mode that often prevent teams from making optimal choices for their workloads. While on-demand capacity mode can significantly reduce operational complexity, questions persist about its cost-effectiveness, performance, and operational characteristics.

In this post, we examine the realities behind common myths about DynamoDB on-demand capacity mode across three key areas: cost implications and efficiency, operational overhead and management, and performance considerations. We provide practical guidance to help you make informed decisions about throughput management.

Examining cost-related misconceptions

There are three common myths about DynamoDB on-demand capacity costs that we frequently encounter:

- On-demand tables are always expensive.

- It is always cheaper to use provisioned mode with auto-scaling.

- On-demand mode charges you for unused capacity.

Let’s examine each of these myths. The first myth about on-demand tables being expensive oversimplifies the cost structure. While the per-request price is higher than provisioned capacity, the true cost of running DynamoDB tables extends beyond simple request pricing. Teams must consider engineering time for capacity management, the cost of over-provisioning for peak demands, and ongoing operational overhead for monitoring and adjusting auto-scaling settings. When you factor in these operational costs, on-demand mode often proves more economical, especially for variable workloads or development environments where precise capacity planning is challenging. Learn more about the DynamoDB on-demand throughput price reduction in November 2024.

In addition to these total cost of ownership (TCO) factors, the second myth overlooks key aspects of provisioned capacity with auto-scaling. You pay for allocated capacity whether you use it or not, and scaling down unused capacity isn’t immediate: it requires at least 15 minutes of lower usage, with up to 30 minutes between scale-downs. This delay means that you continue paying for unnecessary capacity even after your workload decreases.

The third myth—that on-demand mode charges for unused capacity—is incorrect. The pricing model for on-demand capacity is straightforward: you pay only for the actual read and write requests your application performs. There are no charges for maintaining scaling capability or idle capacity, and no minimum capacity requirements.
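To make the comparison between the two pricing models concrete, the following is a minimal sketch of the arithmetic, not an official calculator. The `Prices` values are hypothetical placeholders rather than actual AWS prices, and the function names are ours. The sketch relies on one write capacity unit covering one standard write per second, and it ignores the engineering and operational costs discussed earlier.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # rough average, used only for estimation


@dataclass(frozen=True)
class Prices:
    """Hypothetical unit prices; replace with the figures for your Region."""
    on_demand_per_million_writes: float
    provisioned_wcu_per_hour: float


def on_demand_monthly_cost(writes_per_month: int, prices: Prices) -> float:
    """On-demand: pay only for the write requests actually made."""
    return writes_per_month / 1_000_000 * prices.on_demand_per_million_writes


def provisioned_monthly_cost(provisioned_wcu: int, prices: Prices) -> float:
    """Provisioned: pay for allocated capacity every hour, used or not."""
    return provisioned_wcu * HOURS_PER_MONTH * prices.provisioned_wcu_per_hour


def break_even_utilization(prices: Prices) -> float:
    """Average utilization of provisioned capacity at which both modes cost the same.

    Below this fraction, on-demand is cheaper for the same traffic.
    """
    writes_per_wcu_hour = 3600  # one WCU fully used for one hour
    on_demand_cost_per_full_wcu_hour = (
        writes_per_wcu_hour / 1_000_000 * prices.on_demand_per_million_writes
    )
    return prices.provisioned_wcu_per_hour / on_demand_cost_per_full_wcu_hour


# Placeholder prices for illustration only.
example_prices = Prices(on_demand_per_million_writes=1.0, provisioned_wcu_per_hour=0.001)
print(f"Break-even utilization: {break_even_utilization(example_prices):.0%}")
```

With these placeholder prices, a provisioned table would need to keep its allocated capacity busy more than roughly a quarter of the time, on average, before it became the cheaper option on request pricing alone. Your own break-even point depends on the current prices for your Region.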

To optimize costs in either mode, Amazon CloudWatch provides comprehensive metrics for monitoring usage patterns. You can configure maximum throughput settings to control upper limits and implement AWS Budgets for cost tracking. For read-heavy workloads, consider implementing DynamoDB Accelerator (DAX) to reduce costs through caching.

Performance and scaling myths

While cost considerations are important, performance and scaling capabilities often raise equally important questions. When discussing DynamoDB performance, we often hear three misconceptions:

- On-demand tables have a slower response time.

- On-demand tables won’t scale higher than 40,000 read and write capacity units.

- You can’t scale beyond twice your previous peak.

The first misconception—that on-demand tables have slower response times—is based on a misunderstanding of the architecture of DynamoDB. In reality, DynamoDB delivers consistent single-digit millisecond latency regardless of your chosen throughput mode. When you select on-demand mode, you’re not changing the underlying infrastructure or performance characteristics—you are adjusting how you manage and pay for capacity. Both modes use the same high-performance infrastructure and offer identical enterprise-ready features.

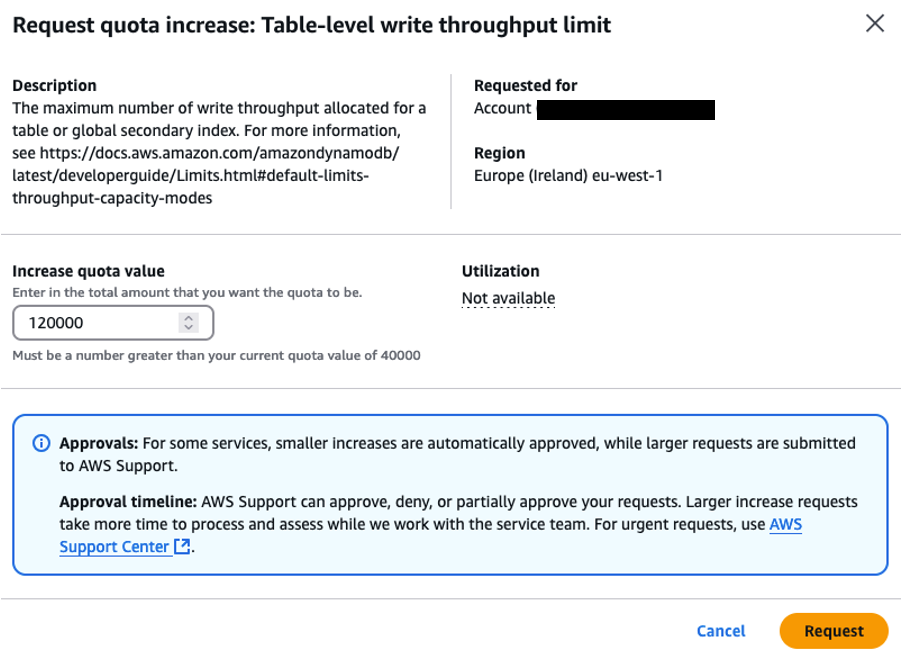

The second myth concerns the initial table and account quotas. While new tables start with a default quota of 40,000 read capacity units (RCUs) and write capacity units (WCUs), this isn’t a hard limit. Through the Service Quotas console, you can request increases to the level you need. We regularly work with customers operating at hundreds of thousands or even millions of capacity units. The following figure is an example of a quota increase for write capacity units from the default quota of 40,000 WCU to 120,000 WCU.

The third myth—about scaling beyond twice your previous peak—requires understanding how on-demand scaling works. New on-demand tables start with capacity for 4,000 writes per second and 12,000 reads per second. From there, DynamoDB automatically accommodates up to double your previous peak traffic within a 30-minute window. For example, if your previous peak was 50,000 reads per second, DynamoDB instantly handles up to 100,000 reads per second. Once you sustain that new level, it becomes your peak, enabling scaling to 200,000 reads per second.
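The doubling behavior described above can be sketched as a simple schedule. This is a simplified model under our own assumptions (traffic is sustained at each new level, and the supported rate doubles once per 30-minute window); `scaling_schedule` is a hypothetical helper, not part of any AWS SDK.

```python
def scaling_schedule(previous_peak: int, target: int, window_minutes: int = 30):
    """Return (minutes_elapsed, supported_rate) steps until the target is covered.

    Simplified model: the table supports double the previous peak right away,
    and each sustained window doubles the supported rate again.
    """
    if previous_peak <= 0 or target <= 0:
        raise ValueError("previous_peak and target must be positive")
    minutes = 0
    supported = previous_peak * 2
    steps = [(minutes, supported)]
    while supported < target:
        minutes += window_minutes
        supported *= 2
        steps.append((minutes, supported))
    return steps


# Previous peak of 50,000 reads per second, aiming for 200,000.
for minutes, rate in scaling_schedule(50_000, 200_000):
    print(f"after {minutes:>3} min: up to {rate:,} requests per second")
```

A target within twice the previous peak produces a single step at minute 0, which is the case where no planning is needed.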

If you know you’ll need to scale beyond twice your previous peak, you can set your desired warm throughput value ahead of time, as shown in the following figure. This eliminates the need to gradually scale up and makes sure that your table can immediately handle your target workload. For unplanned scaling beyond twice your previous peak, DynamoDB continues to add capacity as your traffic increases, but you should plan to spread this growth over at least 30 minutes to avoid throttling.
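Besides the console, warm throughput can also be set programmatically. The sketch below only builds the request parameters, so it runs without credentials. The table name and values are hypothetical, and we assume the parameters are passed to the `update_table` call of the AWS SDK for Python (Boto3), as noted in the comment.

```python
def warm_throughput_request(table_name: str,
                            read_units_per_second: int,
                            write_units_per_second: int) -> dict:
    """Build UpdateTable parameters that set a table's warm throughput."""
    if read_units_per_second <= 0 or write_units_per_second <= 0:
        raise ValueError("warm throughput values must be positive")
    return {
        "TableName": table_name,
        "WarmThroughput": {
            "ReadUnitsPerSecond": read_units_per_second,
            "WriteUnitsPerSecond": write_units_per_second,
        },
    }


# Hypothetical table and targets for an upcoming event.
params = warm_throughput_request("GameSessions", 250_000, 100_000)
print(params)

# Assumed usage with Boto3 (not executed here):
#   import boto3
#   boto3.client("dynamodb").update_table(**params)
```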

CloudWatch metrics are available to help you understand your application’s scaling patterns and plan for growth. Work with your AWS account team to plan for maintaining consistent performance during larger scaling events.

Operational myths

After addressing performance and scaling, let’s examine three persistent myths about operational aspects of on-demand tables:

- You can’t control on-demand consumption.

- On-demand tables need to be pre-warmed frequently.

- Changing table capacity requires downtime.

The first myth, that you can’t control on-demand consumption, misunderstands the management capabilities available. While DynamoDB automatically handles scaling in on-demand mode, you retain significant control over your table’s operation. Through the AWS Management Console, you can set maximum throughput limits to protect downstream systems and control costs. Consider an account with the following write request unit quotas:

- Default limit: 40,000 write request units

- Additional approved capacity: 80,000 write request units

- Total available capacity: 120,000 write request units

In this example, although the account has been approved for up to 120,000 write request units, we’re setting a maximum limit of 100,000 units to establish predictable cost boundaries while maintaining substantial scaling headroom, as shown in the following figure.
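The same limit can be expressed as request parameters. This is a minimal sketch using the numbers from the example above. The table name and the `approved_write_quota` check are our own illustrative additions, and we assume the result is passed to Boto3’s `update_table`.

```python
def max_write_throughput_request(table_name: str,
                                 max_write_units: int,
                                 approved_write_quota: int) -> dict:
    """Build UpdateTable parameters that cap on-demand write throughput."""
    if max_write_units <= 0:
        raise ValueError("max_write_units must be positive")
    if max_write_units > approved_write_quota:
        # Local sanity check only: a cap above the approved quota has no effect.
        raise ValueError("maximum exceeds the approved account quota")
    return {
        "TableName": table_name,
        "OnDemandThroughput": {"MaxWriteRequestUnits": max_write_units},
    }


DEFAULT_QUOTA = 40_000
ADDITIONAL_APPROVED = 80_000
approved_total = DEFAULT_QUOTA + ADDITIONAL_APPROVED  # 120,000

# Hypothetical table name; cap writes at 100,000 units.
params = max_write_throughput_request("Orders", 100_000, approved_total)
print(params)

# Assumed usage with Boto3 (not executed here):
#   import boto3
#   boto3.client("dynamodb").update_table(**params)
```

Keeping the cap below the approved quota leaves the remaining 20,000 units available if you later decide to raise the limit.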

The second myth is that you need frequent pre-warming. While DynamoDB automatically maintains appropriate capacity based on observed traffic patterns, pre-warming can be crucial when you expect a significant traffic spike. Here’s the key difference: with pre-warming, your table is ready to handle the anticipated load as soon as you complete the pre-warming activity. Without it, scaling happens gradually. For example, if your gaming application typically handles 50,000 requests per second and you need to handle 250,000 requests per second during an event, your table would need to scale in steps: first to 100,000 requests per second, then to 200,000 after 30 minutes, and finally to 400,000 after another 30 minutes. That’s a full hour before reaching the needed capacity. The good news is that after you pre-warm once, DynamoDB maintains that capacity for future similar traffic patterns, including regular weekly events at that scale. You won’t need to pre-warm again unless you expect traffic significantly higher than your new peak.

The third myth—that changing capacity modes causes downtime—is particularly persistent but incorrect. You can switch between provisioned and on-demand modes once every 24 hours without experiencing any downtime. During the transition, which typically takes several minutes, your table remains fully available for read and write operations. The switch preserves all your table configurations, including global secondary indexes, monitoring settings, and backup configurations.

One of the key benefits of on-demand mode is the reduced need for active monitoring and management. Your teams can focus on building features and improving applications rather than frequently reviewing and adjusting capacity settings.

Common implementation misconceptions

After addressing operational concerns, let’s examine two final misconceptions about on-demand table usage:

- On-demand tables will fix my throttling.

- On-demand mode is only for spiky workloads.

The first myth—that on-demand mode automatically eliminates all throttling—oversimplifies how DynamoDB partitions work. While on-demand mode significantly reduces throttling concerns through automated capacity management, certain fundamental limits still apply. DynamoDB maintains partition-level throughput limits—3,000 RCUs and 1,000 WCUs per partition—to ensure consistent performance across all tables. These hardware-level limits apply regardless of your chosen capacity mode.

In our customer engagements, we frequently encounter throttling caused by partition key design, both in base tables and global secondary indexes (GSIs). For example, an order processing system might use timestamps as partition keys in the base table, while a GSI tracks order status using a small set of possible values such as pending, processing, and completed. During high-traffic events such as flash sales, both the base table and the GSI can experience throttling as writes independently concentrate on single partitions. The solution involves implementing distributed partition key strategies—such as combining timestamps with product categories in the base table and adding more granular values to GSI keys to improve their distribution.
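One way to add more granular values to a low-cardinality key, such as the order status in the GSI above, is to append a calculated suffix. The following sketch is an assumption-laden illustration of that write-sharding idea rather than the exact design described in this post. The helper names, the `#` separator, and the 50 percent headroom are hypothetical choices, and spreading keys across suffixes doesn’t by itself guarantee that they land on separate partitions.

```python
import hashlib
import math

PARTITION_WCU_LIMIT = 1_000  # per-partition write limit cited above


def shards_needed(peak_writes_per_second: int, headroom: float = 0.5) -> int:
    """Estimate how many key suffixes keep each one under a share of the limit."""
    if not 0 < headroom <= 1:
        raise ValueError("headroom must be in (0, 1]")
    usable_per_shard = PARTITION_WCU_LIMIT * headroom
    return max(1, math.ceil(peak_writes_per_second / usable_per_shard))


def sharded_key(base_key: str, item_id: str, shard_count: int) -> str:
    """Append a deterministic suffix derived from item_id to the base key."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    shard = int.from_bytes(digest[:4], "big") % shard_count
    return f"{base_key}#{shard}"


# Hypothetical flash sale: 5,000 status writes per second at peak.
count = shards_needed(5_000)
print(count, sharded_key("pending", "order-1001", count))
```

The trade-off is on the read side: a query for all pending orders has to fan out across every suffix (`pending#0` through `pending#9` in this example) and merge the results.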

The second myth—that on-demand mode is only suitable for spiky workloads—unnecessarily limits its applicability. While on-demand capacity excels at handling variable workloads, its benefits extend to many different usage patterns. Consider an ecommerce platform where order processing handles variable traffic, customer profiles maintain steady access patterns, and inventory updates run as periodic batch processes. On-demand mode effectively serves all these components, reducing operational overhead across the entire application.

Development and operations teams benefit from this flexibility throughout the application lifecycle. During development and testing, teams can focus on building features instead of managing capacity. In production, teams spend less time on capacity planning and more time improving application functionality. The key advantage isn’t just handling spiky workloads; it’s the overall reduction in operational complexity and the ability to automatically adapt to your application’s needs.

Conclusion and next steps

Throughout this post, we’ve examined several common myths about Amazon DynamoDB on-demand throughput mode and provided clarity about its actual capabilities. While the per-request price might be higher than provisioned capacity, the total cost of ownership often proves more economical when considering operational overhead and elimination of over-provisioning. On-demand tables provide the same consistent, single-digit millisecond performance as provisioned capacity, with the added benefit of automatic scaling beyond default quotas. You maintain significant control over your tables while eliminating the complexity of capacity management and pre-warming activities.

Based on our experience helping customers across various industries optimize their DynamoDB implementations, we recommend evaluating capacity modes based on your specific workload characteristics and operational requirements. Successful implementations focus on workload patterns, operational efficiency, and actual usage rather than common assumptions about capacity modes.

To get started with DynamoDB on-demand capacity mode, visit the Amazon DynamoDB console to create your first on-demand table, or review the DynamoDB Developer Guide to learn about capacity mode best practices. Read the Amazon DynamoDB re:Invent 2024 recap on the AWS Database Blog to see interesting presentations you might have missed.

About the Author

Esteban Serna, Principal DynamoDB Specialist Solutions Architect, is a database enthusiast with 15 years of experience. From deploying contact center infrastructure to falling in love with NoSQL, Esteban’s journey led him to specialize in distributed computing. Today, he helps customers design massive-scale applications with single-digit millisecond latency using DynamoDB. Passionate about his work, Esteban loves nothing more than sharing his knowledge with others.
