Large language models (LLMs) have made remarkable advances in reasoning on complex tasks. While models like OpenAI’s o1 and DeepSeek’s R1 have significantly improved results on challenging reasoning benchmarks such as competition math, competitive coding, and GPQA, critical limitations remain in evaluating their true reasoning potential. Current reasoning datasets focus on problem-solving tasks but fail to cover domains that require open-ended reasoning. Moreover, these datasets offer limited diversity in both scale and difficulty, making it hard to evaluate and enhance the reasoning capabilities of LLMs across different domains and complexity levels.

Previous attempts to enhance LLM reasoning capabilities mostly follow two approaches: synthetic data generation and unsupervised self-training. In synthetic data generation, methods such as STaR and MetaMath augment existing datasets with new chain-of-thought rationales and question variations, but they depend heavily on pre-existing high-quality datasets. Approaches like OpenMathInstruct-2, NuminaMath, and Xwin-Math generate new data from seed examples, yet they struggle to scale to novel domains. In unsupervised self-training, most methods rely on human-annotated final answers or external reward models, which makes them resource-intensive and costly, particularly for complex multi-step problems that require human evaluation of LLM outputs.

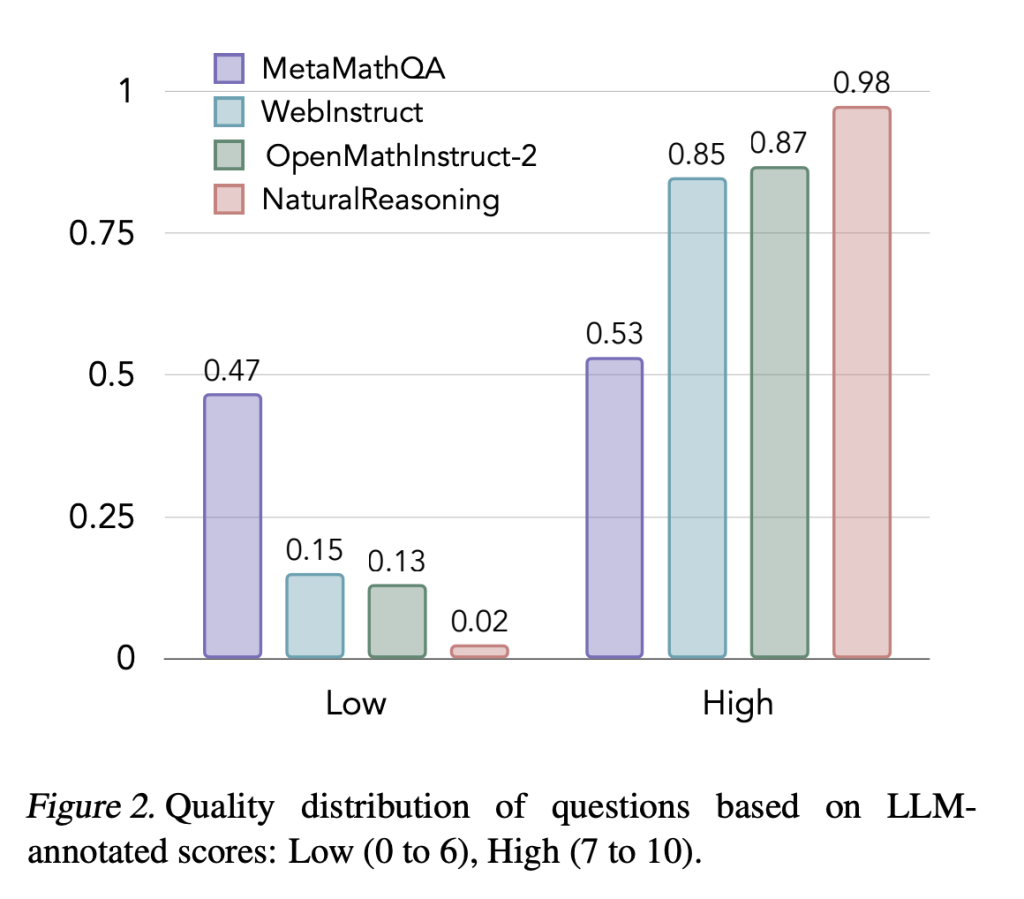

Researchers from Meta and New York University have proposed NATURALREASONING, a comprehensive dataset of 2.8 million reasoning questions extracted from pretraining corpora. The dataset spans diverse fields including Mathematics, Physics, Computer Science, and Economics & Business. Unlike synthetic datasets such as MetaMathQA and OpenMathInstruct-2, NATURALREASONING captures authentic real-world reasoning problems through backtranslation from pretraining corpora. It combines verifiable and open-ended questions, including theorem proving, which makes it valuable for developing algorithms that enhance LLMs’ reasoning abilities beyond simple verification tasks and for enabling knowledge distillation from stronger to weaker models.

The researchers demonstrate the efficacy of NATURALREASONING for enhancing reasoning capabilities in two ways. First, the dataset is used for knowledge distillation and supervised finetuning, where it achieves steeper scaling trends than existing datasets. Second, it serves as a source for domain-specific seed data extraction. To target science reasoning benchmarks like GPQA, the method samples 250 benchmark questions and, for each one, retrieves 1K similar decontaminated questions from NATURALREASONING using cosine similarity between question embeddings. The retrieved questions are then deduplicated and clustered into 15K groups. The evaluation protocol uses zero-shot testing across benchmarks including MATH, GPQA, GPQA-Diamond, and MMLU-Pro, with greedy decoding for consistent performance measurement.
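The retrieval step described above can be illustrated with a minimal sketch. This is not the authors' pipeline: the 3-dimensional embeddings, the question ids, and the function names `cosine_similarity` and `retrieve_similar` are all hypothetical, real embeddings would come from an embedding model, and the clustering step is left out. The sketch only shows ranking a question pool by cosine similarity to each seed question and deduplicating the union of the top-k hits.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def retrieve_similar(seed_embeddings, pool, k):
    """Collect the k most similar pool questions for every seed question.

    `pool` maps a question id to its embedding. The result is a list of
    question ids, deduplicated across seeds and kept in first-seen order.
    """
    selected = []
    seen = set()
    for seed in seed_embeddings:
        ranked = sorted(
            pool,
            key=lambda qid: cosine_similarity(seed, pool[qid]),
            reverse=True,
        )
        for qid in ranked[:k]:
            if qid not in seen:  # deduplicate across seed questions
                seen.add(qid)
                selected.append(qid)
    return selected


# Toy data (assumption): hand-written vectors standing in for embeddings.
pool = {
    "physics_q": [0.9, 0.1, 0.0],
    "chemistry_q": [0.8, 0.3, 0.1],
    "history_q": [0.0, 0.1, 0.9],
    "biology_q": [0.6, 0.6, 0.1],
}
seeds = [[1.0, 0.0, 0.0], [0.7, 0.5, 0.0]]

result = retrieve_similar(seeds, pool, k=2)
print(result)
```

In the article's setting, `seeds` would hold the 250 sampled benchmark questions and `k` would be 1K. At that scale a vectorized or approximate nearest-neighbor search would likely replace the per-seed sort used here.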

The evaluation results show that with just 1.5 million training examples, models trained on NATURALREASONING outperform Llama3.1-8B-Instruct, whereas other datasets such as OpenMathInstruct-2 and WebInstruct fail to reach comparable performance even with 2.8 million data points. Math-specific datasets like OpenMathInstruct-2 perform strongly on math benchmarks (improving from 50.83 to 59.25 on MATH) but struggle to generalize, with GPQA accuracy plateauing around 26-27% and inconsistent MMLU-Pro performance. Datasets like WebInstruct show diminishing returns, with GPQA performance peaking at 29.02% at 500K samples and then declining to 26.12% at 2.8M samples.

In conclusion, the researchers introduced NATURALREASONING, a dataset that represents a significant advance toward comprehensive reasoning datasets for LLMs. Its 2.8 million questions span multiple domains including mathematics, physics, computer science, economics, and social sciences. The results show that using NATURALREASONING for knowledge distillation leads to consistent improvements in reasoning benchmark performance as data size increases. The dataset also enables unsupervised self-training of LLMs through external reward models and self-rewarding techniques, marking a step forward in enhancing LLMs’ reasoning capabilities across diverse domains.

The post Meta AI Releases ‘NATURAL REASONING’: A Multi-Domain Dataset with 2.8 Million Questions To Enhance LLMs’ Reasoning Capabilities appeared first on MarkTechPost.