Virtual meetings have become a cornerstone of modern work, but reviewing lengthy recordings can be time-consuming. In this tutorial you'll learn how to automatically generate summaries of meeting recordings in fewer than 10 lines of code using AssemblyAI's API.

Getting started

First, sign up for a free AssemblyAI API key. The free tier includes hundreds of hours of speech-to-text and summarization.

You’ll need to have Python installed on your system to follow along, so install it if you haven’t already. Then install AssemblyAI’s Python SDK, which will allow you to call the API from your Python code:

```bash
pip install -U assemblyai
```

Basic implementation

It’s time to set up your summarization workflow in Python. First, import the AssemblyAI SDK and configure your API key. While we set the API key inline here for simplicity, you should store it securely in a configuration file or environment variable in production code, and never check it into source control.

```python
import assemblyai as aai

aai.settings.api_key = "YOUR_API_KEY"
```
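Following the advice above to keep the key out of source code, here is a minimal sketch that reads it from the ASSEMBLYAI_API_KEY environment variable (the same name the polling example later in this tutorial uses). The load_api_key helper is hypothetical and not part of the AssemblyAI SDK; it simply fails loudly if the variable is missing instead of sending an empty key.

```python
import os


def load_api_key(env_var: str = "ASSEMBLYAI_API_KEY") -> str:
    """Read the AssemblyAI API key from an environment variable.

    Hypothetical helper: raises a RuntimeError with a readable message
    instead of silently using a missing or blank key.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your AssemblyAI API key.")
    return key


# Usage with the SDK (requires the assemblyai package):
# aai.settings.api_key = load_api_key()
```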

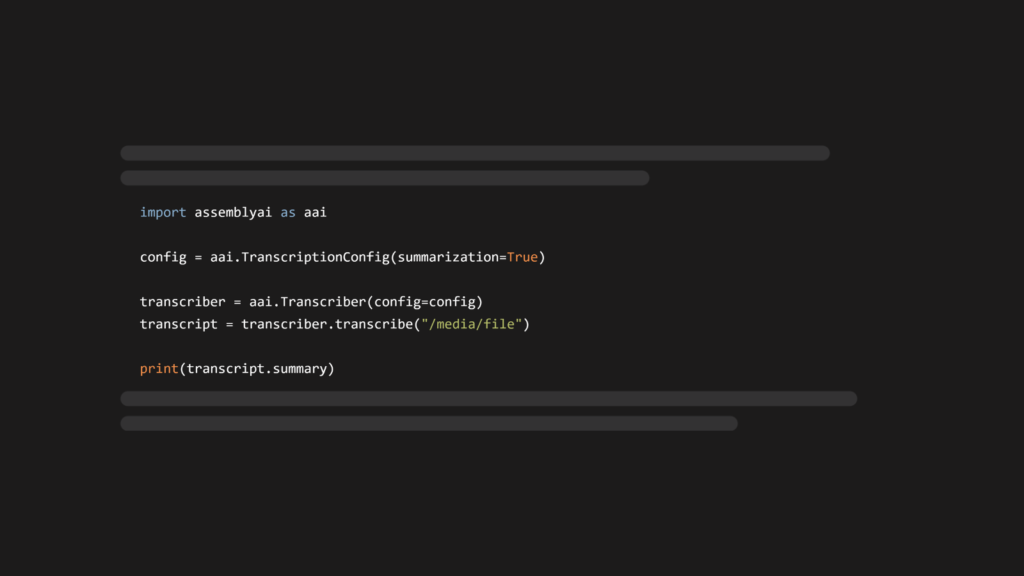

Next, create a transcription configuration object like the one below. This TranscriptionConfig object tells AssemblyAI to enable summarization when you submit a file for speech-to-text, and specifies which model to use for summarization and what type (or format) of summary to create:

```python
config = aai.TranscriptionConfig(
    summarization=True,
    summary_model=aai.SummarizationModel.informative,
    summary_type=aai.SummarizationType.bullets,
)
```

Next, create a transcriber object, which will handle the transcription of audio files. Passing in the TranscriptionConfig defined above applies these settings to any transcription created by this transcriber.

```python
transcriber = aai.Transcriber(config=config)
```

Now you can submit files for transcription using the transcriber's transcribe method. You can pass either a local file path or the URL of a publicly accessible audio or video file:

```python
# Example remote file
transcript = transcriber.transcribe("https://storage.googleapis.com/aai-web-samples/ravenel_bridge.opus")

# Or use a local file
# transcript = transcriber.transcribe("path/to/your/audio.mp3")
```
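Because transcribe accepts either a URL or a local path, a typo in a local path only surfaces once the SDK tries to read the file. A minimal pre-flight sketch, assuming you want to catch that early: the hypothetical resolve_audio_input helper passes http(s) URLs through unchanged and checks that local files exist. It does not check whether a URL is actually publicly reachable.

```python
from pathlib import Path
from urllib.parse import urlparse


def resolve_audio_input(source: str) -> str:
    """Return `source` if it is an http(s) URL or an existing local file.

    Hypothetical helper: raises FileNotFoundError for a missing local path.
    URLs are not fetched or validated beyond their scheme.
    """
    if urlparse(source).scheme in ("http", "https"):
        return source
    path = Path(source).expanduser()
    if not path.is_file():
        raise FileNotFoundError(f"No such audio file: {path}")
    return str(path)


# Usage with the transcriber created above:
# transcript = transcriber.transcribe(resolve_audio_input("path/to/your/audio.mp3"))
```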

You can then access the summary information through the summary attribute of the resulting transcript:

```python
print(transcript.summary)
```

Here’s the output for the example file above:

- The Arthur Ravenel Jr Bridge opened in 2005 and is the longest cable stayed bridge in the Western Hemisphere. The design features two diamond shaped towers that span the Cooper river and connect downtown Charleston with Mount Pleasant. The bicycle pedestrian paths provide unparalleled views of the harbor.
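With summary_type set to bullets, transcript.summary is a plain string in which each point starts with "- ", as the output above shows. If you want the points as a Python list (for example, to post them to a chat tool), a small hypothetical helper can split them. Treating unprefixed lines as continuations of the previous bullet is an assumption about how longer points might wrap.

```python
def parse_bullets(summary: str) -> list[str]:
    """Split a bullet-style summary string into a list of bullet texts.

    Lines starting with "- " begin a new bullet; other non-empty lines are
    appended to the previous bullet (assumed wrapping behavior).
    """
    bullets: list[str] = []
    for raw_line in summary.splitlines():
        line = raw_line.strip()
        if not line:
            continue  # skip blank lines between bullets
        if line.startswith("- "):
            bullets.append(line[2:].strip())
        elif bullets:
            bullets[-1] += " " + line
        else:
            bullets.append(line)  # text before any bullet marker
    return bullets


# Usage with the SDK result (summary may be None if summarization was off):
# points = parse_bullets(transcript.summary or "")
```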

Using Python’s requests library

If you prefer to use the requests library instead of AssemblyAI's SDK, you can send a POST request to the API endpoint directly. Using cURL, a request for a transcript with summarization looks like this:

```bash
curl https://api.assemblyai.com/v2/transcript \
  --header "Authorization: <YOUR_API_KEY>" \
  --header "Content-Type: application/json" \
  --data '{
    "audio_url": "YOUR_AUDIO_URL",
    "summarization": true,
    "summary_model": "informative",
    "summary_type": "bullets"
  }'
```

Here’s how to make such a request in Python using the requests library:

```python
import requests

url = "https://api.assemblyai.com/v2/transcript"

headers = {
    "Authorization": "<YOUR_API_KEY>",
    "Content-Type": "application/json",
}

data = {
    "audio_url": "YOUR_AUDIO_URL",
    "summarization": True,
    "summary_model": "informative",
    "summary_type": "bullets",
}

response = requests.post(url, json=data, headers=headers)
```

The response contains a transcript ID that you can use to retrieve the transcript once it has finished processing. You can use webhooks to get notified when the transcript is ready, or you can poll the API to check its status. Here's a complete example that submits a file and then polls until the transcript is ready:

```python
import os
import time

import requests

URL = "https://api.assemblyai.com/v2/transcript"

HEADERS = {
    # Read the key from the environment; raises KeyError if it is not set
    "Authorization": os.environ["ASSEMBLYAI_API_KEY"],
    "Content-Type": "application/json",
}

DATA = {
    "audio_url": "https://storage.googleapis.com/aai-web-samples/ravenel_bridge.opus",
    "summarization": True,
    "summary_model": "informative",
    "summary_type": "bullets",
}

# Submit the transcription request
response = requests.post(URL, json=DATA, headers=HEADERS)

if response.status_code == 200:
    transcript_id = response.json()["id"]
    polling_endpoint = f"{URL}/{transcript_id}"

    # Poll until the transcript is completed or fails
    while True:
        polling_response = requests.get(polling_endpoint, headers=HEADERS)
        transcript = polling_response.json()
        status = transcript["status"]

        if status == "completed":
            print("Transcription completed!")
            print("Summary:", transcript.get("summary", ""))
            break
        elif status == "error":
            print("Error:", transcript["error"])
            break

        print("Transcript processing ...")
        time.sleep(3)
else:
    print(f"Error: {response.status_code}")
    print(response.text)
```
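The loop above keeps polling indefinitely if a transcript never leaves the queued or processing state. A minimal sketch of a bounded alternative, assuming you pass in a function that fetches the transcript JSON: wait_for_transcript and its 600-second default timeout are illustrative choices, not part of the AssemblyAI API. Injecting the sleep function makes it easy to test without network access.

```python
import time
from typing import Callable


def wait_for_transcript(
    fetch: Callable[[], dict],
    interval: float = 3.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call `fetch` until the transcript is completed, fails, or `timeout` expires.

    Returns the completed transcript JSON, raises RuntimeError on an
    "error" status, and raises TimeoutError if the deadline passes first.
    """
    deadline = time.monotonic() + timeout
    while True:
        transcript = fetch()
        status = transcript.get("status")
        if status == "completed":
            return transcript
        if status == "error":
            raise RuntimeError(f"Transcription failed: {transcript.get('error')}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Transcript still {status!r} after {timeout} seconds")
        sleep(interval)


# Usage with the variables from the polling example above (requires network access):
# transcript = wait_for_transcript(lambda: requests.get(polling_endpoint, headers=HEADERS).json())
```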

Customizing summaries

AssemblyAI offers different models and summary types to suit various use cases:

Summary models

- informative (default): Optimized for single-speaker content like presentations
- conversational: Best for two-person dialogues like interviews
- catchy: Designed for creating engaging media titles

Summary types

- bullets (default): Key points in bullet format
- bullets_verbose: Comprehensive bullet-point summary
- gist: Brief summary in a few words
- headline: Single-sentence summary
- paragraph: Paragraph-form summary
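When you build request bodies by hand with requests, a misspelled model or type is only caught once the API rejects the request. A minimal sketch, assuming the value sets listed above are current (check the AssemblyAI docs before relying on them): the hypothetical summary_options helper validates each value and returns the summarization fields for the request body. It checks each value on its own; the API may not accept every model/type combination.

```python
# Values copied from the lists above; confirm against the current AssemblyAI docs.
SUMMARY_MODELS = {"informative", "conversational", "catchy"}
SUMMARY_TYPES = {"bullets", "bullets_verbose", "gist", "headline", "paragraph"}


def summary_options(model: str = "informative", summary_type: str = "bullets") -> dict:
    """Return the summarization fields for a /v2/transcript request body.

    Raises ValueError for an unknown model or type. Does not check whether
    the API supports this particular model/type combination.
    """
    if model not in SUMMARY_MODELS:
        raise ValueError(f"Unknown summary_model {model!r}; expected one of {sorted(SUMMARY_MODELS)}")
    if summary_type not in SUMMARY_TYPES:
        raise ValueError(f"Unknown summary_type {summary_type!r}; expected one of {sorted(SUMMARY_TYPES)}")
    return {"summarization": True, "summary_model": model, "summary_type": summary_type}


# Usage when building the request body for requests.post:
# DATA = {"audio_url": "YOUR_AUDIO_URL", **summary_options("conversational", "paragraph")}
```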

Best practices and troubleshooting

Choosing the right model and summary type is important for optimal results. For clear recordings with two speakers, use the conversational model. If you’re working with shorter content, the gist or headline types will serve you better, while longer recordings are best handled by bullets_verbose or paragraph formats.
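The rules of thumb above can be encoded as a small lookup. This is a sketch under stated assumptions: suggest_summary_settings is hypothetical, its 5-minute short_threshold is an arbitrary stand-in for "shorter content", and it picks headline rather than gist for short recordings because not every model accepts every summary type (check the docs for supported combinations).

```python
def suggest_summary_settings(
    num_speakers: int,
    duration_minutes: float,
    short_threshold: float = 5.0,
) -> tuple[str, str]:
    """Map the guidance above to a (summary_model, summary_type) pair.

    Two speakers -> conversational; anything else falls back to the default
    informative model. `short_threshold` is a hypothetical cut-off in minutes.
    """
    model = "conversational" if num_speakers == 2 else "informative"
    if duration_minutes < short_threshold:
        summary_type = "headline"
    else:
        summary_type = "bullets_verbose"
    return model, summary_type
```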

If you’re having trouble getting good results, first verify your audio quality meets these criteria:

- Speakers’ voices are distinct and easily distinguishable

- Background noise is minimized

- Audio input is clear and well-recorded

And finally, some technical considerations to keep in mind:

- The Summarization model cannot run simultaneously with Auto Chapters

- Summarization must be explicitly enabled in your configuration

- Processing times may vary based on your audio length and complexity
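The first two considerations can be checked before a request is sent. A minimal sketch for the requests approach, assuming auto_chapters is the request field that enables Auto Chapters; the check_summary_payload helper is hypothetical and returns a list of problems rather than raising, so you can log all of them at once.

```python
def check_summary_payload(payload: dict) -> list[str]:
    """Return problems with a /v2/transcript request body (empty list if none).

    Covers the two configuration rules listed above: summarization must be
    enabled explicitly, and it cannot be combined with Auto Chapters.
    """
    problems = []
    if payload.get("summarization") is not True:
        problems.append('summarization is not enabled; set "summarization": True')
    if payload.get("summarization") and payload.get("auto_chapters"):
        problems.append("summarization and auto_chapters cannot be enabled in the same request")
    return problems


# Usage before submitting the request:
# for problem in check_summary_payload(DATA):
#     print("Config problem:", problem)
```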

Next steps

To learn more about how to use our API and the features it offers, check out our Docs, or browse our cookbooks repository for solutions to common use cases. Alternatively, check out our blog for tutorials and deep-dives on AI theory, like our Introduction to LLMs, or our YouTube channel for project tutorials and more, like one on building an AI voice agent in Python with DeepSeek R1.
