Vision-language models (VLMs) have long promised to bridge image understanding and natural language processing, yet practical challenges persist. Traditional VLMs often struggle with varying image resolutions, contextual nuance, and the complexity of turning visual data into accurate text. A model may produce a serviceable caption for a simple image but falter when asked to describe a complex scene, read text in an image, or localize multiple objects precisely. These shortcomings have limited VLM adoption in applications such as optical character recognition (OCR), document understanding, and detailed image captioning. Google’s new release tackles these issues head-on with a flexible, multi-task approach that makes the models easier to fine-tune and improves performance across a range of vision-language tasks. This matters most in industries that depend on precise image-to-text translation, such as autonomous vehicles, medical imaging, and multimedia content analysis.

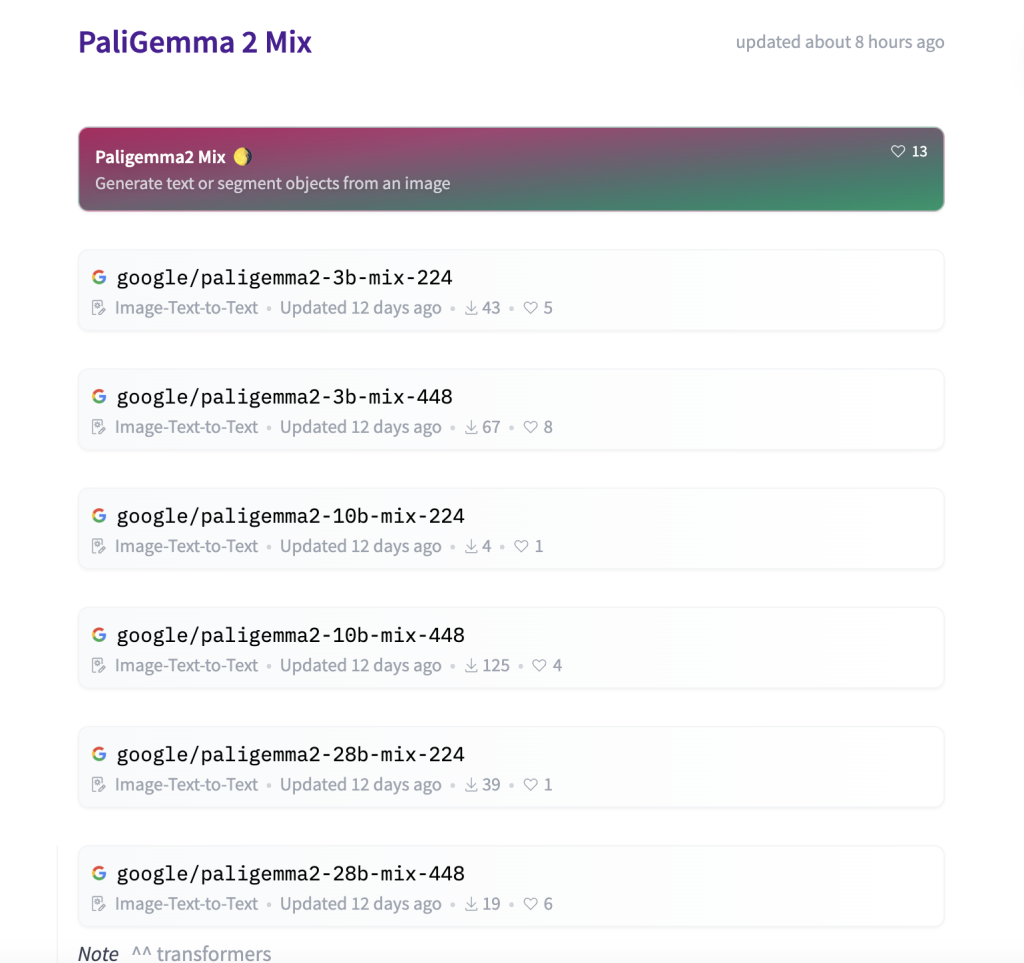

Google DeepMind has unveiled PaliGemma 2 Mix, a new set of PaliGemma 2 checkpoints tailored for applications such as OCR and image captioning. The checkpoints come in several sizes, from 3B up to 28B parameters, and are released as open-weight models. They are fully integrated with the Hugging Face Transformers ecosystem, so they can be used immediately through popular libraries. Whether you run inference with the Transformers API or adapt a model through further fine-tuning, the new checkpoints promise a streamlined workflow for developers and researchers alike. Because the family spans multiple parameter scales and input resolutions (224×224, 448×448, and even 896×896), practitioners can choose the balance between computational cost and accuracy that their task requires.
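To make the resolution trade-off concrete, here is a small sketch. It assumes Hub ids follow the pattern `google/paligemma2-{size}-mix-{resolution}` (check the model pages for which size and resolution combinations are actually published), and it derives the image-token count from the 14-pixel patches of the SigLIP So400m/14 encoder that PaliGemma uses. Both helper names are hypothetical.

```python
"""Sketch: map (size, resolution) to an assumed Hub id and an image-token count."""

# SigLIP So400m/14 splits the image into 14x14-pixel patches, one token per patch.
PATCH_SIZE = 14

# Sizes and resolutions mentioned in the article.
SIZES = ("3b", "10b", "28b")
RESOLUTIONS = (224, 448, 896)


def image_tokens(resolution: int) -> int:
    """Image tokens produced for a square input of `resolution` pixels per side."""
    if resolution % PATCH_SIZE != 0:
        raise ValueError(f"{resolution} is not a multiple of the {PATCH_SIZE}-pixel patch size")
    patches_per_side = resolution // PATCH_SIZE
    return patches_per_side * patches_per_side


def mix_checkpoint(size: str, resolution: int) -> str:
    """Hypothetical helper: build a Hub id, ASSUMING the naming pattern below.

    Verify on the Hugging Face Hub that the id exists before loading it.
    """
    if size not in SIZES:
        raise ValueError(f"unknown size {size!r}; expected one of {SIZES}")
    if resolution not in RESOLUTIONS:
        raise ValueError(f"unknown resolution {resolution}; expected one of {RESOLUTIONS}")
    return f"google/paligemma2-{size}-mix-{resolution}"


if __name__ == "__main__":
    for res in RESOLUTIONS:
        print(f"{res}x{res}: {image_tokens(res)} image tokens, e.g. {mix_checkpoint('3b', res)}")
```

At 224, 448, and 896 pixels this gives 256, 1,024, and 4,096 image tokens respectively. Each doubling of resolution quadruples the sequence the decoder must process, which helps explain why higher resolutions cost noticeably more compute.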

Technical Details and Benefits

At its core, PaliGemma 2 Mix builds on the pre-trained PaliGemma 2 models, which pair the SigLIP image encoder with the Gemma 2 text decoder. The “Mix” models are fine-tuned on a mixture of vision-language tasks so that a single checkpoint performs robustly across all of them. Tasks are selected with open-ended prompt formats such as “caption {lang}”, “describe {lang}”, and “ocr”, which gives the models considerable flexibility. Beyond improving task-specific performance, this fine-tuning also provides a baseline that indicates how well the models are likely to perform once adapted to downstream tasks.
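The prompt-format scheme can be sketched as a small prompt builder. Only “caption {lang}”, “describe {lang}”, and “ocr” come from the text above; the `detect`, `segment`, and `answer` forms follow the prefix conventions documented for earlier PaliGemma models and should be treated as assumptions for the Mix checkpoints. The function name `build_prompt` is hypothetical.

```python
"""Sketch: build PaliGemma-style task prompts from a task name and arguments."""
from typing import Optional, Sequence


def build_prompt(
    task: str,
    *,
    lang: str = "en",
    objects: Optional[Sequence[str]] = None,
    question: Optional[str] = None,
) -> str:
    """Return a task-prefix prompt string (hypothetical helper)."""
    if task in ("caption", "describe"):
        # e.g. "caption en", "describe fr"
        return f"{task} {lang}"
    if task == "ocr":
        return "ocr"
    if task in ("detect", "segment"):
        if not objects:
            raise ValueError(f"{task!r} needs at least one object name")
        if task == "segment" and len(objects) != 1:
            raise ValueError("this sketch segments one object per prompt")
        # Multiple detection classes are joined with " ; " (assumed convention).
        return f"{task} " + " ; ".join(objects)
    if task == "answer":
        if not question:
            raise ValueError("'answer' needs a question")
        return f"answer {lang} {question}"
    raise ValueError(f"unsupported task: {task!r}")
```

Keeping task selection in one function makes it easy to route a single checkpoint to different tasks from application code, which is the point of a multi-task Mix model.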

The checkpoints are available for both HF Transformers and JAX, and they can run at different precisions (for example bfloat16, or 4-bit quantization with bitsandbytes) to suit various hardware configurations. Multi-resolution support is another significant technical benefit: the same model family can handle coarse tasks (such as simple captioning) and fine-grained ones (such as reading small details in OCR) simply by selecting a checkpoint with a suitable input resolution. Finally, because the checkpoints are open-weight, they slot directly into research pipelines and allow rapid iteration without proprietary restrictions.
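A minimal loading-and-inference sketch with Hugging Face Transformers follows. It assumes a recent `transformers` release with PaliGemma support, plus `torch`, `accelerate` (for `device_map="auto"`), `Pillow`, and, for 4-bit mode, `bitsandbytes` on a CUDA GPU. The helper names `load_mix` and `run_prompt` are hypothetical, heavy imports are kept inside the functions so the file imports on machines without these packages, and the JAX path is not shown.

```python
"""Sketch: load a PaliGemma 2 Mix checkpoint with Transformers and run one prompt."""


def load_mix(model_id: str, four_bit: bool = False):
    """Load model and processor in bfloat16, optionally with 4-bit bitsandbytes weights."""
    # Local imports: the module stays importable without torch/transformers installed.
    import torch
    from transformers import (
        BitsAndBytesConfig,
        PaliGemmaForConditionalGeneration,
        PaliGemmaProcessor,
    )

    # bfloat16 for all non-quantized layers, matching the bfloat16 pixel values below.
    kwargs = {"torch_dtype": torch.bfloat16, "device_map": "auto"}
    if four_bit:
        # Linear weights are stored in 4 bits; matmuls are computed in bfloat16.
        kwargs["quantization_config"] = BitsAndBytesConfig(
            load_in_4bit=True, bnb_4bit_compute_dtype=torch.bfloat16
        )
    model = PaliGemmaForConditionalGeneration.from_pretrained(model_id, **kwargs).eval()
    processor = PaliGemmaProcessor.from_pretrained(model_id)
    return model, processor


def run_prompt(model, processor, image, prompt: str, max_new_tokens: int = 64) -> str:
    """Greedy-decode a response to a task prompt such as "ocr" or "caption en"."""
    import torch

    inputs = processor(text=prompt, images=image, return_tensors="pt")
    # BatchFeature.to casts only floating-point tensors (pixel_values) to bfloat16.
    inputs = inputs.to(torch.bfloat16).to(model.device)
    prompt_len = inputs["input_ids"].shape[-1]
    with torch.inference_mode():
        output = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Drop the echoed prompt tokens and keep only the newly generated text.
    return processor.decode(output[0][prompt_len:], skip_special_tokens=True)
```

For example, `model, processor = load_mix("google/paligemma2-3b-mix-448", four_bit=True)` (an assumed checkpoint id) followed by `run_prompt(model, processor, Image.open("ticket.jpg").convert("RGB"), "ocr")` would read the text on a hypothetical ticket image. Switching `four_bit` trades some accuracy for a much smaller memory footprint without changing the calling code.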

Performance Insights and Benchmark Results

Early benchmarks of the PaliGemma 2 Mix models are promising. Across general vision-language tasks, document understanding, localization, and text recognition, the model variants show consistent improvements over their predecessors. In detailed image description, for instance, both the 3B and 10B checkpoints produced accurate, nuanced captions that correctly identified objects and their spatial relations in complex urban scenes.

In OCR tasks, the fine-tuned models extracted text robustly, accurately reading dates, prices, and other details from challenging ticket images. For localization tasks such as object detection and segmentation, the outputs include precise bounding-box coordinates and segmentation masks. The models have been evaluated on standard benchmarks with metrics such as CIDEr for captioning and Intersection over Union (IoU) for segmentation. The results show that performance scales with parameter count and resolution: larger checkpoints generally perform better, at the cost of higher computational requirements. This scalability, together with strong results on both quantitative benchmarks and qualitative real-world examples, positions PaliGemma 2 Mix as a versatile tool for a wide range of applications.
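Localization outputs arrive as text, so they need parsing before they can be scored. The sketch below assumes the PaliGemma convention of four `<locNNNN>` tokens per detection (y_min, x_min, y_max, x_max, each a bin index out of 1024 spanning the image) followed by a label, with detections separated by " ; ". It then computes box IoU. Segmentation IoU applies the same intersection-over-union ratio to pixel masks; decoding PaliGemma's mask tokens into masks requires a separate decoder that is not shown. Function names are hypothetical.

```python
"""Sketch: parse PaliGemma-style <locNNNN> detection text and compare boxes by IoU."""
import re
from typing import List, Tuple

# (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]

# Four location tokens (y_min, x_min, y_max, x_max) followed by an optional label.
LOC_RE = re.compile(r"<loc(\d{4})><loc(\d{4})><loc(\d{4})><loc(\d{4})>\s*([^<;]*)")


def parse_detections(text: str, width: int, height: int) -> List[Tuple[str, Box]]:
    """Convert each detection in `text` to (label, pixel box) for a width x height image."""
    detections = []
    for match in LOC_RE.finditer(text):
        # Each token is a bin index out of 1024 along its axis (assumed convention).
        y0, x0, y1, x1 = (int(v) / 1024 for v in match.groups()[:4])
        box = (x0 * width, y0 * height, x1 * width, y1 * height)
        detections.append((match.group(5).strip(), box))
    return detections


def box_iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

For example, on a 640×480 image the output `<loc0256><loc0128><loc0768><loc0896> car` maps to the pixel box (80.0, 120.0, 560.0, 360.0), which can then be compared against a ground-truth box with `box_iou`.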

Conclusion

Google’s release of the PaliGemma 2 Mix checkpoints marks a significant milestone in the evolution of vision-language models. By addressing long-standing challenges such as resolution sensitivity, context-rich captioning, and multi-task adaptability, these models let developers deploy AI solutions that are both flexible and highly performant. Whether the task is OCR, detailed image description, or object detection, the open-weight, Transformers-compatible PaliGemma 2 Mix offers an accessible platform that integrates readily into a variety of applications. As the AI community continues to push the boundaries of multimodal processing, tools like these will be critical in bridging raw visual data and meaningful language interpretation.

Check out the technical details and the model on Hugging Face. All credit for this research goes to the researchers of this project.

The post Google DeepMind Releases PaliGemma 2 Mix: New Instruction Vision Language Models Fine-Tuned on a Mix of Vision Language Tasks appeared first on MarkTechPost.
