Vision Language Models (VLMs) mark a milestone in the development of language models, addressing shortcomings of earlier text-only pre-trained LLMs such as LLaMA and GPT. VLMs move beyond a single modality by combining text with image and video inputs. By widening the representational boundaries of the input, they gain a better grasp of visual-spatial relationships and support a richer view of the world. New opportunities bring new challenges, and VLMs are no exception: researchers around the world are working through these challenges one at a time. Based on a survey by researchers from the University of Maryland and the University of Southern California, this article gives a glimpse of what is happening in the field and what we can expect from vision language models in the future.

This article walks through the survey’s structured examination of VLMs developed over the past five years, covering architectures, training methodologies, benchmarks, applications, and the challenges inherent in the field. To begin with, let’s familiarize ourselves with some of the state-of-the-art (SOTA) VLMs and where they come from: CLIP by OpenAI, BLIP by Salesforce, Flamingo by DeepMind, and Gemini by Google. These are the big fish in this domain, which is expanding rapidly to support multimodal user interaction.

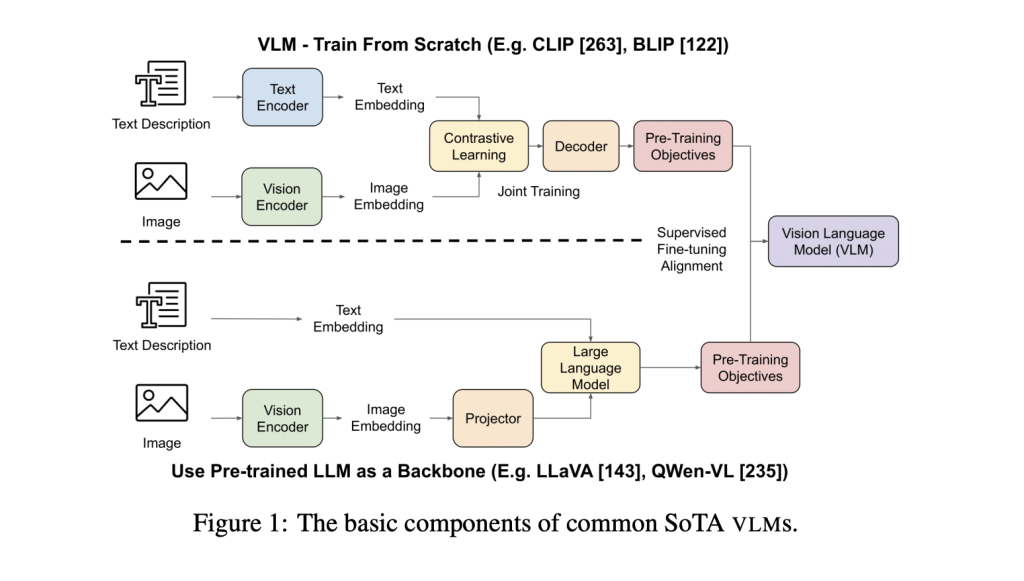

When we dissect a VLM to understand its structure, we find that certain blocks are fundamental, irrespective of a model’s features or capabilities: the vision encoder, the text encoder, and the text decoder. The cross-attention mechanism, which integrates information across modalities, appears in fewer models. The architecture of VLMs is also evolving, as developers now use pre-trained large language models as the backbone instead of training from scratch. When training from scratch, self-supervised methods such as masked image modeling and contrastive learning have been prevalent. When a pre-trained LLM serves as the backbone, the most common ways to align visual features with the LLM’s text features are a projector, joint training, and freezing parts of the model during certain training stages.
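To make the contrastive learning idea concrete, here is a minimal sketch of a CLIP-style symmetric contrastive loss in plain Python. It is an illustration under stated assumptions, not the implementation of any model named in this article: the function names, the temperature of 0.07, and the toy embeddings are all hypothetical, and it assumes each image embedding at index k is paired with the text embedding at index k and that no embedding is the zero vector.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length (assumes a non-zero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_logits(image_embs, text_embs, temperature=0.07):
    """Pairwise cosine similarities between images and texts, divided by a temperature."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]

def cross_entropy(rows):
    """Mean cross-entropy where the correct class for row k is column k."""
    total = 0.0
    for k, row in enumerate(rows):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum - row[k]
    return total / len(rows)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Average of the image-to-text and text-to-image cross-entropy losses."""
    logits = cosine_logits(image_embs, text_embs, temperature)
    transposed = [list(col) for col in zip(*logits)]
    return 0.5 * (cross_entropy(logits) + cross_entropy(transposed))

# Toy batch: matched pairs should score a much lower loss than mismatched pairs.
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

The loss pulls each image toward its own caption and pushes it away from the other captions in the batch, which is why the matched toy batch scores far lower than the shuffled one.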

Another interesting development is how the latest models treat visual features as tokens. In addition, Transfusion processes discrete text tokens and continuous image vectors in parallel by introducing strategic breakpoints.
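One simple way to picture visual features as tokens is a linear projector that maps each image patch feature into the language model’s embedding space, after which the projected vectors are placed in the same sequence as the text token embeddings. The sketch below assumes exactly that setup. All names, shapes, and values are hypothetical, and it does not reproduce Transfusion or any other specific model.

```python
def project(patch_features, weight, bias):
    """Linear projector: maps each patch vector (size d_vision) to size d_model.

    weight has one row per output dimension, so its shape is d_model x d_vision.
    """
    return [
        [sum(w * x for w, x in zip(row, patch)) + b for row, b in zip(weight, bias)]
        for patch in patch_features
    ]

def embed_text(token_ids, embedding_table):
    """Look up one embedding vector per text token id."""
    return [embedding_table[t] for t in token_ids]

def build_input_sequence(patch_features, token_ids, weight, bias, embedding_table):
    """Place projected visual tokens before the text tokens in one sequence."""
    visual_tokens = project(patch_features, weight, bias)
    text_tokens = embed_text(token_ids, embedding_table)
    return visual_tokens + text_tokens

# Toy example: two image patches (d_vision = 3) and two text tokens (d_model = 2).
patches = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
bias = [0.5, -0.5]
embedding_table = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]

sequence = build_input_sequence(patches, [2, 1], weight, bias, embedding_table)
```

In this toy setup the language model would receive four vectors of equal width, two from the image and two from the text. In practice the projector weights are learned, and the ordering of visual and text tokens varies between models.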

Next, we turn to the major categories of benchmarks that evaluate the capabilities of a VLM. Most of the datasets are created through synthetic generation or human annotation. These benchmarks test a range of model capabilities, including visual text understanding, text-to-image generation, and multimodal general intelligence. Some benchmarks also target specific failure modes such as hallucination. Answer matching, multiple-choice questions, and image/text similarity scores have emerged as common evaluation techniques.
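The three evaluation techniques named above can be sketched in a few lines of Python. This is a simplified illustration: real benchmarks define their own normalization rules and scoring scripts, and the function names and normalization choices here (lowercasing, stripping punctuation) are assumptions rather than any benchmark’s official protocol.

```python
import math
import string

def normalize(text):
    """Lowercase, strip ASCII punctuation, and collapse whitespace."""
    table = str.maketrans("", "", string.punctuation)
    return " ".join(text.lower().translate(table).split())

def answer_match(prediction, references):
    """Answer matching: True if the prediction equals any reference after normalization."""
    return normalize(prediction) in {normalize(r) for r in references}

def mcq_accuracy(predicted, gold):
    """Multiple-choice accuracy: fraction of option letters that match the gold labels."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    correct = sum(p.strip().upper() == g.strip().upper() for p, g in zip(predicted, gold))
    return correct / len(gold)

def cosine_similarity(a, b):
    """Similarity score between two non-zero embedding vectors (e.g. image vs. text)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy usage with made-up predictions and embeddings.
matched = answer_match("A red bus.", ["a red bus", "red bus"])
accuracy = mcq_accuracy(["a", "B", "c"], ["A", "B", "D"])
same_direction = cosine_similarity([1.0, 0.0], [1.0, 0.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
```

Answer matching and multiple-choice accuracy suit tasks with a fixed correct answer, while a similarity score is the usual fallback when outputs are open-ended or when images and captions are compared in a shared embedding space.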

VLMs are adapted to a variety of tasks, from virtual-world applications such as virtual embodied agents to real-world applications such as robotics and autonomous driving. Embodied agents, AI models with virtual or physical bodies that can interact with their environment, are an interesting use case that relies heavily on progress in VLMs. VLMs improve how these agents interact with and support users by enabling features such as Visual Question Answering. In addition, generative models such as GANs produce visual content such as memes. In robotics, VLMs are used for manipulation, navigation, human-robot interaction, and autonomous driving.

While VLMs have shown tremendous potential over their text-only counterparts, researchers must still overcome multiple limitations and challenges. There are considerable tradeoffs between the flexibility and the generalizability of models. Issues such as visual hallucination raise concerns about model reliability, and biases in training data place additional constraints on fairness and safety. On the technical side, we have yet to see an efficient training and fine-tuning paradigm for settings where high-quality datasets are scarce. Contextual deviations, or misalignments, between modalities also lower output quality.

Conclusion: The paper provides an overview of Vision Language Models, a new field of research that integrates content from multiple modalities. It covers model architectures, recent innovations, and the challenges the field faces today.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

The post All You Need to Know about Vision Language Models VLMs: A Survey Article appeared first on MarkTechPost.
